Hello Habr! We continue publishing reviews of scientific papers, written by members of the Open Data Science community in the #article_essense channel. If you want to get them before everyone else, join the community!

Today's papers:

- TResNet: High Performance GPU-Dedicated Architecture (DAMO Academy, Alibaba Group, 2020)

- Controllable Person Image Synthesis with Attribute-Decomposed GAN (China, 2020)

- Learning to See Through Obstructions (Taiwan, USA, 2020)

- Tracking Objects as Points (UT Austin, Intel Labs, 2020)

- CookGAN: Meal Image Synthesis from Ingredients (USA, UK, 2020)

- Designing Network Design Spaces (FAIR, 2020)

- Gradient Centralization: A New Optimization Technique for Deep Neural Networks (Hong Kong, Alibaba, 2020)

- When Does Unsupervised Machine Translation Work? (Johns Hopkins University, USA, 2020)

1. TResNet: High Performance GPU-Dedicated Architecture

Authors: Tal Ridnik, Hussam Lawen, Asaf Noy, Itamar Friedman (DAMO Academy, Alibaba Group, 2020)

Original paper :: GitHub project

Review author: artgor (on Habr: artgor)

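The paper introduces TResNet, a family of architectures designed for high GPU throughput. TResNet-XL reaches 84.3% top-1 accuracy on ImageNet. A TResNet model with the same GPU throughput as ResNet50 reaches 80.7% top-1 accuracy.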

The authors start by explaining why architectures with low FLOPS are not necessarily fast on GPUs:

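- Depthwise and 1×1 convolutions. They reduce FLOPS but use the GPU poorly, because their speed is limited by memory access rather than by computation.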

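- Multi-path designs. The activations of every branch must be stored until backpropagation, which increases memory consumption and limits the batch size. Such designs also make it harder to use in-place operations.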

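The authors propose 3 models: TResNet-M, TResNet-L and TResNet-XL, which differ only in depth and number of channels. The architecture modifications are: SpaceToDepth stem, Anti-Alias downsampling, In-Place Activated BatchNorm, Blocks selection and SE layers.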

Stem Design

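The stem block is the first part of the network, and its job is to quickly reduce the input resolution. In ResNet50 it is a 7x7 conv with stride 2 followed by max pooling, which reduces the resolution from 224 to 56. TResNet replaces it with a SpaceToDepth transformation, which moves spatial blocks of pixels into the channel dimension. This is followed by a single 1x1 conv that produces the required number of channels.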
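Below is a minimal NumPy sketch of a SpaceToDepth stem. It assumes a block size of 4, which turns 224×224 into 56×56. The 64-channel 1x1 conv is a hypothetical choice. The channel order inside the rearrangement is one possible convention, and the TResNet code may order channels differently.

```python
import numpy as np

def space_to_depth(x: np.ndarray, block: int = 4) -> np.ndarray:
    """Rearrange (N, C, H, W) into (N, C * block**2, H // block, W // block)."""
    n, c, h, w = x.shape
    if h % block or w % block:
        raise ValueError("H and W must be divisible by the block size")
    # Split H and W into (H // block, block) and (W // block, block)
    x = x.reshape(n, c, h // block, block, w // block, block)
    # Bring the two in-block axes in front of the channel axis
    x = x.transpose(0, 3, 5, 1, 2, 4)
    return x.reshape(n, block * block * c, h // block, w // block)

def conv1x1(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """A 1x1 convolution is a per-pixel linear map over channels; weight is (C_out, C_in)."""
    return np.einsum("oc,nchw->nohw", weight, x)

rng = np.random.default_rng(0)
images = rng.random((2, 3, 224, 224))
features = space_to_depth(images)                    # (2, 48, 56, 56): no pixels are discarded
stem_out = conv1x1(features, rng.random((64, 48)))   # (2, 64, 56, 56); 64 is hypothetical
```

Unlike a strided conv plus max pooling, the rearrangement itself loses no information. All the learning happens in the cheap 1x1 conv.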

Anti-Alias Downsampling

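Anti-aliasing is applied to the downscaling layers: the feature maps are low-pass filtered before subsampling. This improves the network's shift-equivariance.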
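Here is a minimal NumPy sketch of anti-aliased downsampling on a single 2D feature map. It assumes a 3x3 binomial blur filter applied before stride-2 subsampling. The stride-1 convolution that precedes the blur in the network is omitted. On a checkerboard, plain subsampling returns all zeros or all ones depending on a one-pixel shift. The blurred version gives the same result in both cases.

```python
import numpy as np

# 3x3 binomial blur filter: outer product of [1, 2, 1], normalized to sum to 1
_BINOMIAL = np.array([1.0, 2.0, 1.0])
BLUR_3X3 = np.outer(_BINOMIAL, _BINOMIAL) / 16.0

def blur_downsample(x: np.ndarray, stride: int = 2) -> np.ndarray:
    """Low-pass filter a 2D map with BLUR_3X3, then keep every `stride`-th pixel."""
    h, w = x.shape
    padded = np.pad(x.astype(float), 1, mode="reflect")
    blurred = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            blurred += BLUR_3X3[i, j] * padded[i:i + h, j:j + w]
    return blurred[::stride, ::stride]

def naive_downsample(x: np.ndarray, stride: int = 2) -> np.ndarray:
    """Plain subsampling, as done by a strided layer without anti-aliasing."""
    return x[::stride, ::stride]

checkerboard = np.indices((8, 8)).sum(axis=0) % 2
shifted = np.roll(checkerboard, 1, axis=0)   # the same pattern moved by one pixel
```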

Inplace-ABN

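Inplace-ABN replaces every BatchNorm+ReLU pair with a single in-place layer. It does not store intermediate activations for backpropagation. Instead, it recomputes them from the layer's output, which saves memory. This requires an invertible activation, so ReLU is replaced with Leaky-ReLU. The saved memory allows a larger batch size at the cost of a small computational overhead.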
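The following minimal NumPy sketch shows the idea behind Inplace-ABN. It assumes a Leaky-ReLU slope of 0.01 and BatchNorm in training mode. The point is that the normalized activations can be recomputed from the stored output alone. Plain ReLU cannot do this, because it maps every negative value to 0. Only the recomputation step is shown, not the full backward pass of the actual library.

```python
import numpy as np

SLOPE = 0.01  # Leaky-ReLU negative slope (assumed value)

def leaky_relu(z):
    return np.where(z >= 0, z, SLOPE * z)

def leaky_relu_inv(y):
    # Invertible because SLOPE > 0: a negative output y came from z = y / SLOPE
    return np.where(y >= 0, y, y / SLOPE)

def abn_forward(x, gamma, beta, eps=1e-5):
    """BatchNorm over the batch axis followed by Leaky-ReLU.
    Only the output y and the per-channel sigma (needed for the gradient) are kept."""
    mu = x.mean(axis=0)
    sigma = np.sqrt(x.var(axis=0) + eps)
    x_hat = (x - mu) / sigma
    y = leaky_relu(gamma * x_hat + beta)
    return y, sigma

def recover_x_hat(y, gamma, beta):
    """Recompute the normalized activations from the stored output (gamma must be non-zero)."""
    return (leaky_relu_inv(y) - beta) / gamma

rng = np.random.default_rng(0)
x = rng.normal(size=(32, 4))                  # batch of 32, 4 channels
gamma = np.array([1.5, -0.7, 2.0, 0.3])
beta = np.array([0.1, 0.0, -0.2, 0.5])
y, sigma = abn_forward(x, gamma, beta)
x_hat = recover_x_hat(y, gamma, beta)         # recomputed instead of stored
```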

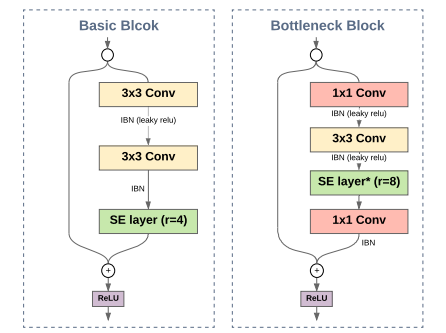

Blocks Selection

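ResNet34 uses BasicBlocks made of two 3x3 convs, while ResNet50 uses Bottleneck blocks with 1x1 and 3x3 convs. Bottleneck blocks have higher GPU usage but usually give better accuracy. BasicBlocks have a larger receptive field and are better suited to the early stages. TResNet combines both: the first 2 stages use BasicBlocks, and the last 2 stages use Bottleneck blocks.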

SE Layers

SE layers are added only to the first 3 stages.
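For reference, here is a minimal NumPy sketch of a Squeeze-and-Excitation layer: global pooling, two FC layers and sigmoid gating. The reduction factor `r` and the random weights are hypothetical. TResNet's exact SE configuration may differ.

```python
import numpy as np

def se_layer(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation for x of shape (N, C, H, W).

    w1: (C // r, C), w2: (C, C // r), where r is the reduction factor."""
    squeezed = x.mean(axis=(2, 3))                          # squeeze: (N, C)
    hidden = np.maximum(squeezed @ w1.T + b1, 0.0)          # FC + ReLU
    gates = 1.0 / (1.0 + np.exp(-(hidden @ w2.T + b2)))     # FC + sigmoid, values in (0, 1)
    return x * gates[:, :, None, None]                      # excite: rescale each channel

rng = np.random.default_rng(0)
C, r = 16, 4                                                # hypothetical sizes
x = rng.normal(size=(2, C, 8, 8))
w1, b1 = rng.normal(size=(C // r, C)), np.zeros(C // r)
w2, b2 = rng.normal(size=(C, C // r)), np.zeros(C)
out = se_layer(x, w1, b1, w2, b2)
```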

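The authors also apply several code-level optimizations.

JIT Compilation

JIT compilation is used for the modules without learnable parameters: the AA blur filter and the SpaceToDepth. This reduces their GPU cost by a factor of 2.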

Global Average Pooling

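Global average pooling is implemented with simple view and mean operations instead of PyTorch's AvgPool2d. According to the authors, this is about 5 times faster on GPU.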
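This NumPy sketch writes global average pooling as a reshape followed by a mean. It mirrors the view + mean approach, which in PyTorch would be `x.view(n, c, -1).mean(-1)`. It only illustrates that the two formulations are equivalent; it says nothing about the GPU speedup.

```python
import numpy as np

def global_avg_pool(x: np.ndarray) -> np.ndarray:
    """(N, C, H, W) -> (N, C): flatten the spatial dims, then average them."""
    n, c = x.shape[:2]
    return x.reshape(n, c, -1).mean(axis=2)

x = np.arange(2 * 3 * 4 * 4, dtype=float).reshape(2, 3, 4, 4)
pooled = global_avg_pool(x)
```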

Inplace Operations

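In-place operations are used wherever possible: Inplace-ABN, residual connections, SE blocks and activations. This reduces GPU memory consumption and allows a larger batch size.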

Training:

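Input resolution 224×224, 300 epochs, SGD with the 1-cycle policy. Regularization: Auto-augment, Cutout, Label-smoothing and True weight-decay. Images are not normalized with the ImageNet mean/std; they are simply scaled to the range from 0 to 1. ResNet50 and TResNet are compared under this setup.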

Ablation Study

The ablation study measures the contribution of each modification, including the SE layers and AA.

2. Controllable Person Image Synthesis with Attribute-Decomposed GAN

Authors: Yifang Men, Yiming Mao, Yuning Jiang, Wei-Ying Ma, Zhouhui Lian (China, 2020)

Original paper :: GitHub project :: Blog :: Video

Review author: digitman (on Habr: digitman)

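The paper proposes a model for person image synthesis with controllable attributes. The model generates an image of a person in a given target pose. Each attribute of the person (e.g., head, upper clothes, pants) can be taken from a different source image.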

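The key idea is to decompose the person into separate components and encode each of them into a latent code. These codes are then combined when generating the output image, which makes it possible to edit attributes independently.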

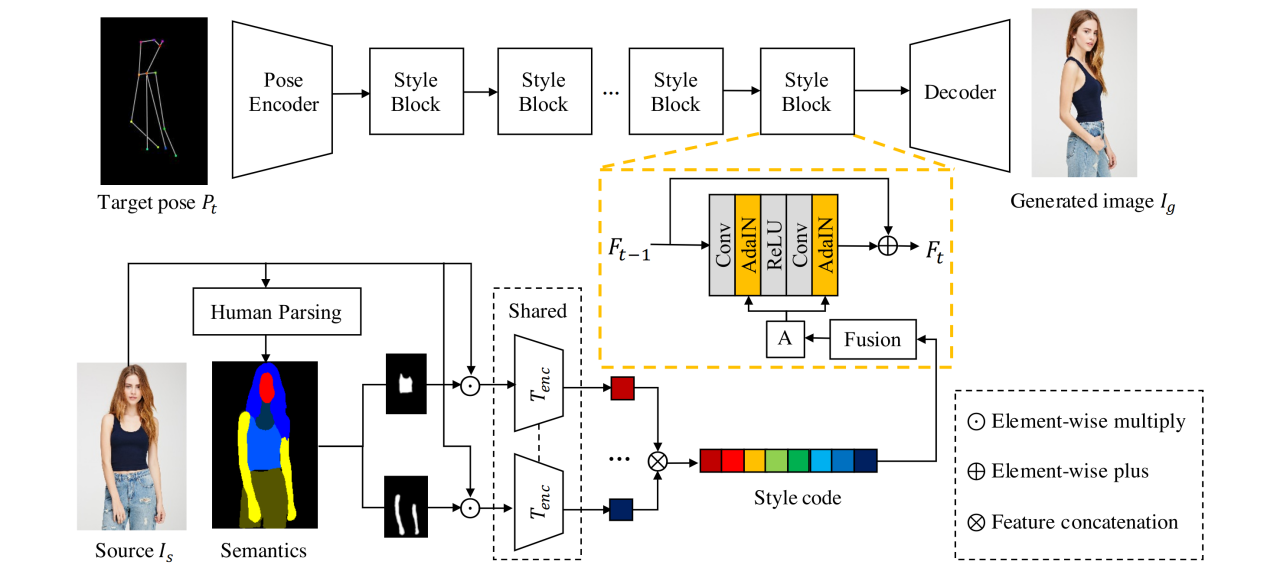

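The generator has 2 inputs: a source person image (I_s) and a target pose (taken from image I_t). The target pose is represented as 18 keypoint heatmaps P_t, one per keypoint. The pose encoder consists of 2 downsampling convolutional layers.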

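A human parser splits the source image into 8 semantic components (e.g., head, upper clothes, pants). A shared texture encoder encodes each component separately, and the resulting codes are concatenated into the style code. Thanks to this decomposition, a single component can be replaced with one taken from another image.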

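The texture encoder has two branches: a trainable convolutional encoder and a fixed, pretrained VGG. The VGG features are combined with the trainable ones.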

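A multilayer perceptron turns the style code into AdaIN parameters. The decoder is built from StyleBlocks with AdaIN (as in StyleGAN), which inject the style into the pose features; 8 StyleBlocks are used. The decoder outputs the generated image I_g.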
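Here is a minimal NumPy sketch of AdaIN as used in StyleGAN-like blocks. Each channel of the content features is normalized per instance and then scaled and shifted with style parameters. In this sketch the per-channel scale and shift are passed in directly. In the model they come from the style code through the MLP, which is not shown.

```python
import numpy as np

def adain(content, style_scale, style_shift, eps=1e-5):
    """Adaptive Instance Normalization.

    content: (N, C, H, W); style_scale, style_shift: (N, C)."""
    mu = content.mean(axis=(2, 3), keepdims=True)
    sigma = np.sqrt(content.var(axis=(2, 3), keepdims=True) + eps)
    normalized = (content - mu) / sigma              # zero mean, ~unit std per channel
    return style_scale[:, :, None, None] * normalized + style_shift[:, :, None, None]

rng = np.random.default_rng(0)
content = rng.normal(loc=3.0, scale=2.0, size=(2, 4, 16, 16))
scale = rng.uniform(0.5, 2.0, size=(2, 4))          # hypothetical style parameters
shift = rng.normal(size=(2, 4))
stylized = adain(content, scale, shift)
```

After AdaIN, every channel has exactly the mean and (up to `eps`) the standard deviation dictated by the style.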

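Two discriminators are used. One checks that the generated image matches the target pose, and the other checks that its appearance matches the source person. Both are PatchGAN discriminators.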

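The loss consists of several terms:

- Adversarial loss from the two discriminators.
- Reconstruction loss: L1 between the generated and the target images.
- Perceptual loss: L2 between the VGG features of the generated and the target images.
- Contextual loss (CX): measures the similarity between sets of features (VGG features) and does not require the images to be spatially aligned.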

The model is trained and evaluated on DeepFashion.

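According to the authors, the model produces more realistic results than previous pose transfer methods. It also allows editing individual attributes, for example transferring clothes from one person to another.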

3. Learning to See Through Obstructions

Authors: Yu-Lun Liu, Wei-Sheng Lai, Ming-Hsuan Yang, Yung-Yu Chuang, and Jia-Bin Huang (Taiwan, USA, 2020)

Original paper :: GitHub project :: Blog

Review author: belskikh

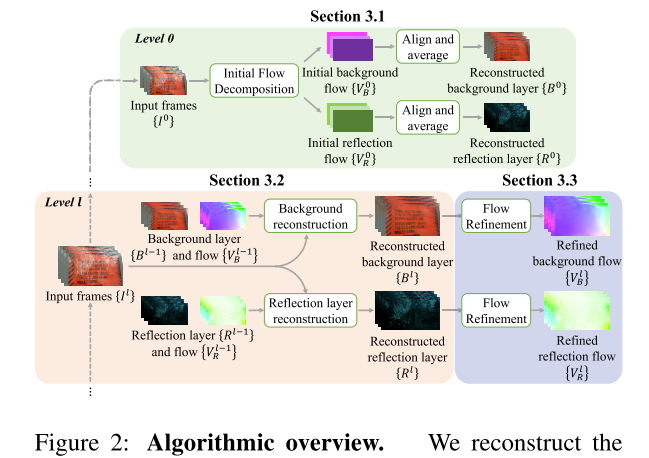

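The paper deals with removing obstructions (reflections, fences, raindrops, etc.) from a short sequence of images taken with a moving camera. The background and the obstruction are at different distances from the camera, so they move differently between frames. The method uses optical flow to separate the obstruction layer from the background layer.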

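The method works in a coarse-to-fine, multi-stage manner. Going from low to high resolution, at each level it alternates between reconstructing the layers and refining their optical flow.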

The stages are:

Initial Flow Decompositon

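First, the initial flow decomposition network estimates the optical flow (OF) of each layer at the coarsest level. It consists of a feature extractor and a flow estimator. As in PWC-Net (Cnns for optical flow using pyramid, warping, and cost volume), a cost volume is computed from the features. The Flow Estimator then uses FC layers to predict two flows, one for the background and one for the obstruction. These flows serve as the initial flow for the following stages.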

Background/Reflection Layer Reconstruction

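The estimated flows are used to warp the neighbouring frames to the reference frame. A reconstruction network then predicts the background and obstruction layers. At each level, the layers and flows from the previous, coarser level are upsampled and refined.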

Optical Flow Refinement

The optical flow of each layer is refined with PWC-Net.

The initial flow decomposition network is trained with an L1 loss on the flow, and the refinement stage is based on PWC-Net.

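The layer reconstruction is trained on synthetic sequences with an L1 reconstruction loss and a gradient loss (L1 between the image gradients of the prediction and of the ground truth). Synthetic data differs from real photos, so the model is then fine-tuned on real sequences in an unsupervised way.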

The unsupervised fine-tuning also relies on an L1 loss, with some of its terms pushed towards 0, and on a total variation loss.
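The loss terms mentioned above are sketched below in NumPy for single-channel images:

- the L1 reconstruction loss;
- the gradient loss (L1 between image gradients, computed here with forward differences);
- an anisotropic total variation loss.

This is a minimal sketch. The weighting of the terms and the tensors each one is applied to are not specified here, so the terms are kept separate.

```python
import numpy as np

def image_gradients(img: np.ndarray):
    """Forward differences along x and y of a 2D image."""
    gx = img[:, 1:] - img[:, :-1]
    gy = img[1:, :] - img[:-1, :]
    return gx, gy

def l1_loss(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.abs(a - b).mean())

def gradient_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """L1 distance between the image gradients of prediction and ground truth."""
    pgx, pgy = image_gradients(pred)
    tgx, tgy = image_gradients(target)
    return l1_loss(pgx, tgx) + l1_loss(pgy, tgy)

def total_variation(img: np.ndarray) -> float:
    """Anisotropic total variation: mean absolute gradient, 0 for a constant image."""
    gx, gy = image_gradients(img)
    return float(np.abs(gx).mean() + np.abs(gy).mean())

rng = np.random.default_rng(0)
target = rng.integers(0, 255, size=(32, 32)).astype(float)
pred = target + 5.0                      # same structure, constant brightness offset
```

The gradient loss ignores a constant brightness offset, while the L1 loss does not. The gradient loss focuses on edges and structure rather than absolute intensity.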

4. Tracking Objects as Points

Authors: Xingyi Zhou, Vladlen Koltun, Philipp Krähenbühl (UT Austin, Intel Labs, 2020)

Original paper :: GitHub project

Review author: belskikh

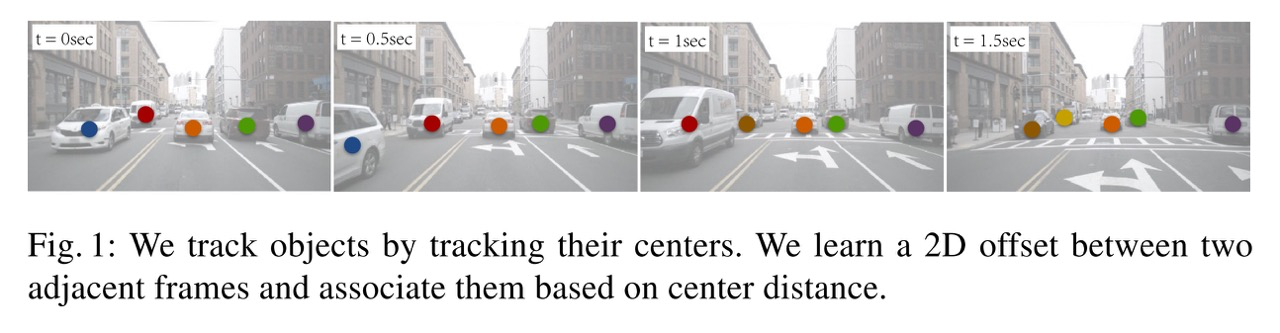

The authors extend CenterNet to object tracking.

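CenterNet was introduced in the earlier paper Objects as Points. It is an anchor-box-free object detector that represents each object by its center point. Objects are detected as peaks of a heatmap, and their other properties (e.g., box size) are regressed at the center location.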
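This minimal NumPy sketch shows how detections can be read from a CenterNet-style heatmap. A pixel is kept if it is the maximum of its 3x3 neighbourhood and its score is above a threshold. The threshold value is hypothetical, and the size and offset regression heads are omitted.

```python
import numpy as np

def extract_peaks(heatmap: np.ndarray, threshold: float = 0.3):
    """Return (y, x, score) for every 3x3 local maximum with score >= threshold."""
    h, w = heatmap.shape
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    # 3x3 max filter assembled from the nine shifted views of the padded map
    neighbourhood_max = np.max(
        [padded[i:i + h, j:j + w] for i in range(3) for j in range(3)], axis=0
    )
    keep = (heatmap == neighbourhood_max) & (heatmap >= threshold)
    ys, xs = np.nonzero(keep)
    return [(int(y), int(x), float(heatmap[y, x])) for y, x in zip(ys, xs)]

heatmap = np.zeros((10, 10))
heatmap[2, 3], heatmap[2, 4] = 0.9, 0.5   # one object; 0.5 is its neighbour, not a peak
heatmap[7, 7] = 0.8                       # a second object
peaks = extract_peaks(heatmap)
```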

The tracker (CenterTrack) takes as input:

- the current frame;

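- a class-agnostic heatmap of the objects tracked in the previous frame (each object is rendered as a peak at its center, as in CenterNet);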

- the previous frame.

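The output is the same as in CenterNet (center heatmap and box sizes), plus an offset for each object: the displacement of its center between the previous and the current frame.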

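During training, the previous-frame heatmap is built from the ground truth with added noise that simulates tracking errors: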

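- peak locations are randomly shifted relative to the ground truth;

- false positives are added near the ground truth objects;

- false negatives are simulated by randomly removing objects.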
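Here is a minimal plain-Python sketch of this augmentation. It assumes objects are given as (x, y, w, h), that is, centers and sizes. The noise scale `jitter` and the probabilities `p_fp` and `p_fn` are hypothetical parameters. The function returns the list of points that would then be rendered into the prior heatmap.

```python
import random

def perturb_prior(objects, img_w, img_h, jitter=0.05, p_fp=0.1, p_fn=0.1, rng=None):
    """Simulate tracking errors on ground-truth objects of the previous frame.

    objects: list of (x, y, w, h). Returns a list of (x, y) points to render."""
    rng = rng or random.Random()

    def clamp(px, py):
        return min(max(px, 0), img_w - 1), min(max(py, 0), img_h - 1)

    points = []
    for x, y, w, h in objects:
        if rng.random() < p_fn:                  # false negative: drop the object
            continue
        # localization noise proportional to the object size
        points.append(clamp(x + rng.gauss(0, jitter) * w, y + rng.gauss(0, jitter) * h))
        if rng.random() < p_fp:                  # false positive near a real object
            points.append(clamp(x + rng.uniform(-0.5, 0.5) * w, y + rng.uniform(-0.5, 0.5) * h))
    return points

gt = [(10.0, 20.0, 4.0, 4.0), (50.0, 60.0, 10.0, 8.0)]
noisy = perturb_prior(gt, 100, 100, rng=random.Random(0))
```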

The architecture is the same CenterNet, with extra input channels for the previous frame and the prior heatmap.

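Association is simple. Each detection is moved back by its predicted offset. Detections are then greedily matched, in order of confidence, to the closest unmatched object from the previous frame. Unmatched detections start new tracks.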
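Below is a minimal plain-Python sketch of greedy association. Each detection is assumed to carry its center, the predicted displacement (dx, dy) from the previous to the current frame, and a confidence score. For simplicity, a fixed matching radius `max_dist` is used; it is a hypothetical parameter.

```python
import math

def greedy_associate(detections, prev_tracks, max_dist):
    """Match current detections to previous tracks.

    detections: list of (x, y, dx, dy, score); (dx, dy) is the predicted displacement
    of the center from the previous frame to the current one.
    prev_tracks: dict track_id -> (x, y) center in the previous frame.
    Returns dict detection_index -> track_id (new ids for unmatched detections)."""
    order = sorted(range(len(detections)), key=lambda i: -detections[i][4])
    unmatched = dict(prev_tracks)
    next_id = max(prev_tracks, default=-1) + 1
    assignment = {}
    for i in order:                                  # most confident detections first
        x, y, dx, dy, _ = detections[i]
        px, py = x - dx, y - dy                      # estimated center in the previous frame
        best_id, best_dist = None, max_dist
        for track_id, (tx, ty) in unmatched.items():
            dist = math.hypot(px - tx, py - ty)
            if dist < best_dist:
                best_id, best_dist = track_id, dist
        if best_id is None:                          # nothing close enough: start a new track
            best_id, next_id = next_id, next_id + 1
        else:
            del unmatched[best_id]
        assignment[i] = best_id
    return assignment

previous = {0: (10.0, 10.0), 1: (50.0, 50.0)}
current = [(52.0, 51.0, 2.0, 1.0, 0.9), (12.0, 10.0, 2.0, 0.0, 0.8), (90.0, 90.0, 0.0, 0.0, 0.7)]
matches = greedy_associate(current, previous, max_dist=5.0)
```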

Results: 67.3% MOTA on the MOT17 challenge at 22 FPS and 89.4% MOTA on the KITTI tracking benchmark at 15 FPS.

Because CenterNet also supports monocular 3D detection, the approach extends to 3D tracking as well.

The model needs no separate motion models (Kalman filter, optical flow), which keeps the pipeline simple and fast.

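The model can also be trained on static images: the previous frame is simulated by randomly scaling and shifting the current one.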

Like CenterNet, the tracker needs no anchor boxes.

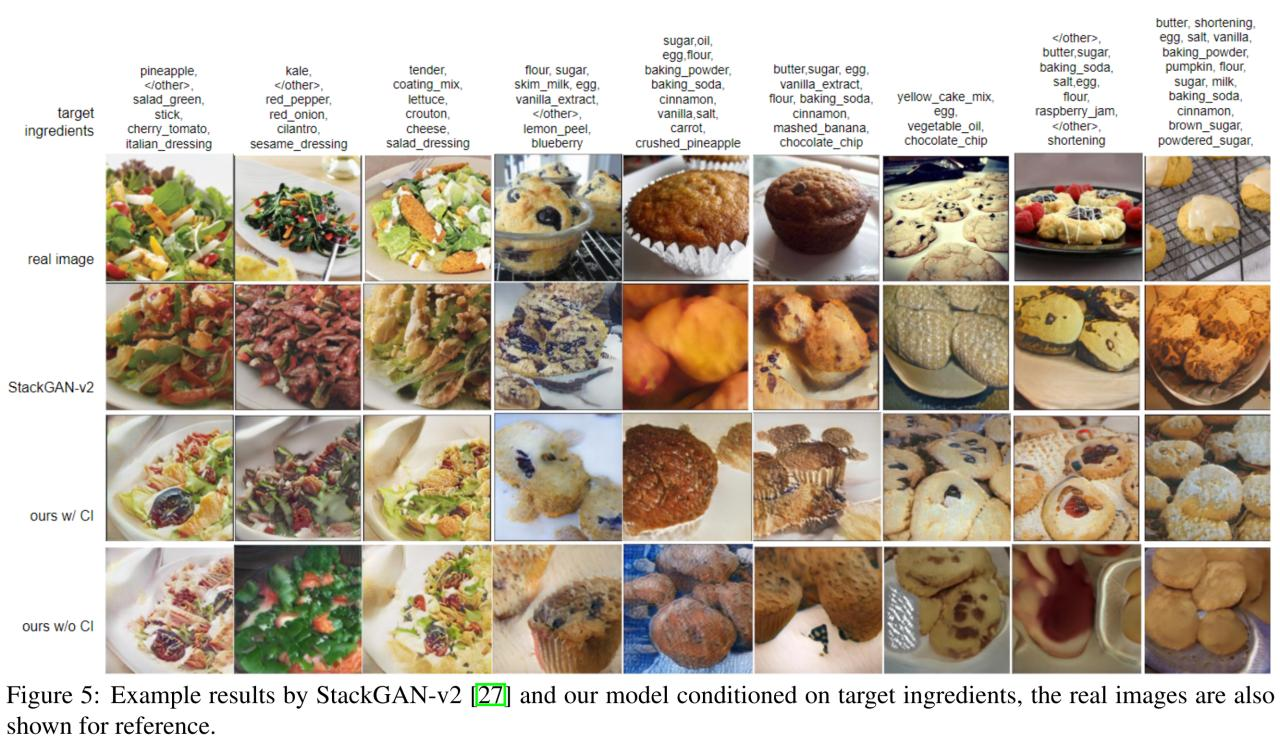

5. CookGAN: Meal Image Synthesis from Ingredients

Authors: Fangda Han, Ricardo Guerrero, Vladimir Pavlovic (USA, UK, 2020)

Review author: digitman (on Habr: digitman)

The paper deals with generating images of dishes from their list of ingredients.

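First, a joint embedding of recipes and food images is trained. The ingredient encoder uses word2vec embeddings of the ingredients, a bidirectional LSTM and an LSTM with attention. The image encoder is ResNet50, pretrained on ImageNet and fine-tuned on UPMC-Food-101. Matching recipes and images are pulled together in this shared "FoodSpace".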

The image generator is conditioned on the ingredient embedding p+.

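The embedding is turned into a conditioning vector 'c', and images are generated at several resolutions (64, 128, 256), each conditioned on 'c'. At each resolution the discriminator is trained with two losses: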

a conditional loss, i.e., whether the image matches 'c', and an unconditional loss (whether the image is "real" or "fake").

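In addition, a cycle-consistency loss maps the generated image back into FoodSpace. The real image and recipe give the embeddings v+ and q+, and the generated image gives v~+ and q~+. The consistency term compares them: L_ci = cos(q+, q~+).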
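Here is a minimal NumPy sketch of the consistency term. The formula above is a similarity. To obtain a loss to minimize, the sketch uses 1 - cos(q+, q~+). This form is an assumption, and the embedding vector is hypothetical.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray, eps: float = 1e-8) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + eps))

def consistency_loss(q_real: np.ndarray, q_generated: np.ndarray) -> float:
    """1 - cos(q+, q~+): 0 when both embeddings point in the same direction, 2 when opposite."""
    return 1.0 - cosine_similarity(q_real, q_generated)

q_plus = np.array([0.2, -1.0, 0.5, 0.3])   # hypothetical embedding
```

Cosine similarity ignores the vector length. Only the direction of the generated image's embedding has to match the real one.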

Experiments:

16 4, . 1989 , .

The models are compared using the FID and IS metrics.

, . .

Generated examples:

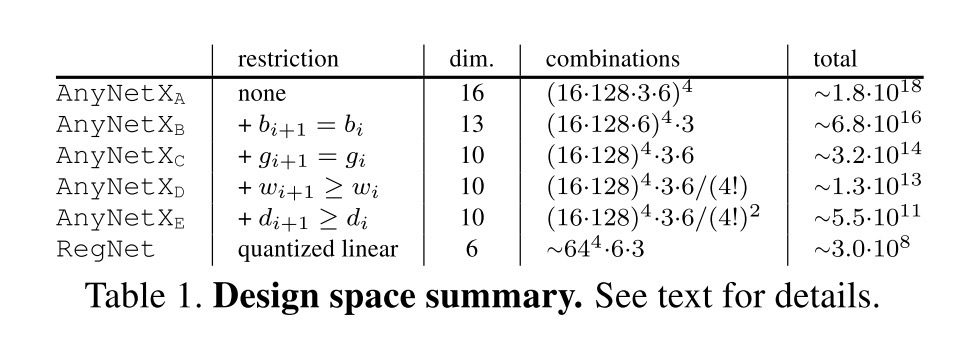

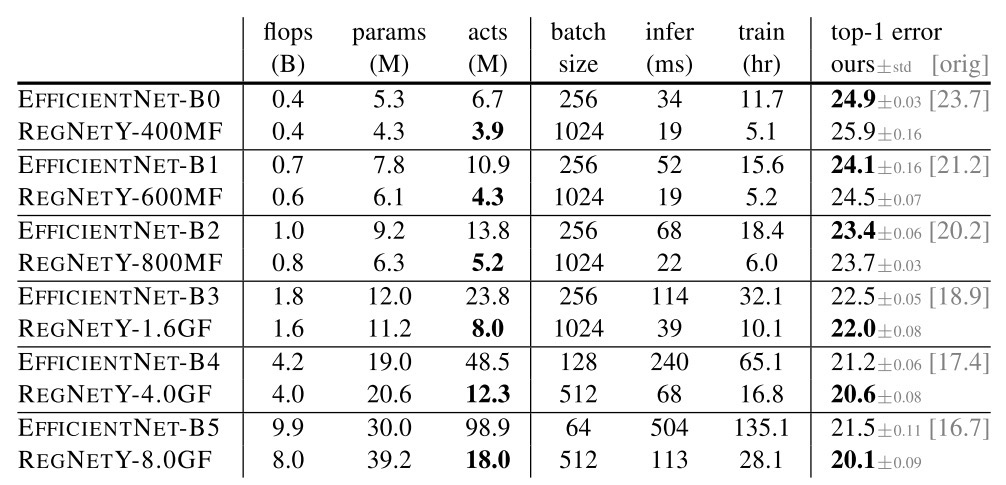

6. Designing Network Design Spaces

Authors: Ilija Radosavovic, Raj Prateek Kosaraju, Ross Girshick, Kaiming He, Piotr Dollár (FAIR, 2020)

Original paper :: GitHub project

Review author: belskikh

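The paper proposes a new approach to network design. Instead of designing individual networks, the authors design network design spaces, i.e., parametrized populations of networks.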

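The result is the RegNet family of models. With comparable FLOPs, RegNets outperform EfficientNet while being up to 5 times faster on GPU.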

The approach aims to combine the advantages of manual design and neural architecture search (NAS).

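NAS methods (e.g., RL-based ones) search for a single best model. Here, the quality of a whole design space is evaluated instead: models are sampled from it and trained. Their error distribution is then analyzed with the empirical distribution function (EDF).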

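Design spaces are compared by their EDF curves, similarly to comparing ROC curves by AUC. The higher the curve, the larger the share of good models in the design space.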
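A minimal NumPy sketch of the EDF, F(e) = (1/n) Σ 1[e_i < e], computed from the errors of models sampled from a design space, follows. The error values are made up for illustration. A design space whose EDF lies higher contains a larger share of good models.

```python
import numpy as np

def edf(errors, thresholds):
    """Fraction of sampled models whose error is below each threshold e."""
    errors = np.asarray(errors, dtype=float)
    return np.array([(errors < e).mean() for e in thresholds])

thresholds = [5, 15, 25, 45]
space_a = [10, 20, 30, 40]     # hypothetical errors (%) of models sampled from space A
space_b = [20, 30, 40, 50]     # hypothetical errors (%) of models sampled from space B
edf_a, edf_b = edf(space_a, thresholds), edf(space_b, thresholds)
```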

The starting point is a huge design space, called AnyNet, with about 10^18 possible configurations.

Networks in AnyNet have the following structure:

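- a stem, a body and a head;

- the body consists of 4 stages;

- each stage is a sequence of blocks. The block is a residual bottleneck block with 1×1, 3×3 (group convolution) and 1×1 convolutions.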

Each stage has the following parameters:

- depth, i.e., number of blocks (d);

- width, i.e., number of channels (w);

- bottleneck ratio (b);

- group width of the group convolution (g).

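The design space is then constrained step by step. At each step, the EDFs (empirical distribution functions) of the constrained and the previous design spaces are compared, to make sure that the constraint does not make the models worse.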

The main findings:

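- Sharing a single bottleneck ratio across all stages does not make the models worse.

- Sharing a single group width across all stages does not make them worse either.

- Good networks have widths that increase from stage to stage, and the same holds for depths.

- The widths of good networks are well approximated by a linear function of the block index.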

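Quantizing this linear function gives a parametrization of the whole network with just a few parameters. The resulting design space is called RegNet.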
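Here is a minimal NumPy sketch of the quantized linear parametrization:

1. For block j, the linear width is u_j = w_0 + w_a·j.
2. It is snapped to w_0·w_m^s_j with an integer s_j.
3. The result is rounded to a multiple of 8.

Consecutive blocks of equal width form a stage. The parameter values below are illustrative, not a specific published model, and further constraints (e.g., compatibility with the group width) are omitted.

```python
import numpy as np

def regnet_widths(w_0: float, w_a: float, w_m: float, depth: int, q: int = 8) -> np.ndarray:
    """Per-block widths from the quantized linear rule."""
    u = w_0 + w_a * np.arange(depth)                 # linear widths u_j
    s = np.round(np.log(u / w_0) / np.log(w_m))      # integer exponents s_j
    w = w_0 * np.power(w_m, s)                       # piecewise-constant widths
    return (np.round(w / q) * q).astype(int)         # multiples of q

def to_stages(widths: np.ndarray):
    """Group blocks with equal width into stages: list of (width, number of blocks)."""
    values, counts = np.unique(widths, return_counts=True)
    return list(zip(values.tolist(), counts.tolist()))

widths = regnet_widths(w_0=24, w_a=36, w_m=2.5, depth=13)
stages = to_stages(widths)   # [(24, 1), (64, 1), (152, 4), (376, 7)]
```

Four numbers (w_0, w_a, w_m, depth) thus determine both the widths and the depths of all stages.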

RegNet models are trained with a basic setup: no DropPath, AutoAugment, etc., only weight decay.

Under the same training setup, RegNets outperform EfficientNets.

Conclusion: designing design spaces is a promising direction that can complement NAS.

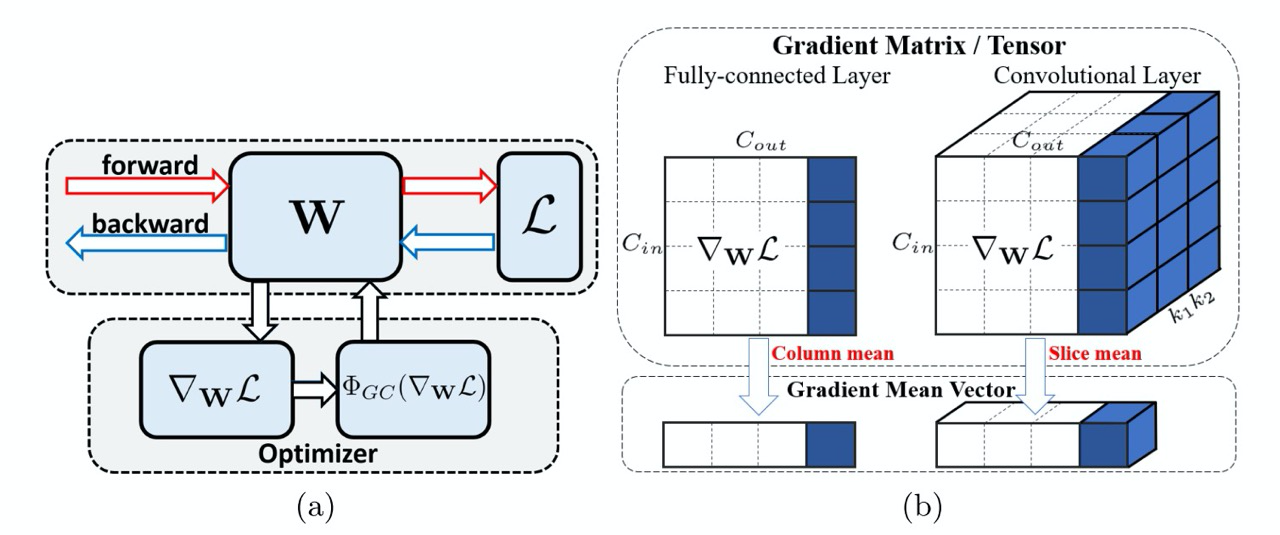

7. Gradient Centralization: A New Optimization Technique for Deep Neural Networks

Authors: Hongwei Yong, Jianqiang Huang, Xiansheng Hua, Lei Zhang (Hong Kong, Alibaba, 2020)

Original paper :: GitHub project

Review author: belskikh

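The authors propose a very simple technique, «gradient centralization» (GC): the gradients are centralized so that their mean is 0. GC speeds up training, improves generalization, and can be added to existing optimizers with a single line of code.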

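For each weight tensor of a convolutional or fully connected layer, the gradient is centralized per output channel (neuron): the mean over all the other dimensions is subtracted from it. The operation is cheap, and the optimizer then uses the centralized gradient as usual.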
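Below is a minimal NumPy sketch of gradient centralization for a conv weight of shape (C_out, C_in, k, k), plugged into a plain SGD step. The mean is taken over all axes except the output channel. As an assumption of this sketch, 1D parameters such as biases are left unchanged. The example also checks two properties that follow from centralization. First, the per-channel mean of the weights does not change during the update. Second, the centralized gradient is never longer than the original one.

```python
import numpy as np

def centralize_gradient(grad: np.ndarray) -> np.ndarray:
    """Subtract from each output channel's gradient its mean over all other axes."""
    if grad.ndim < 2:                      # biases and other 1D parameters: unchanged
        return grad
    axes = tuple(range(1, grad.ndim))
    return grad - grad.mean(axis=axes, keepdims=True)

def sgd_step_with_gc(weight: np.ndarray, grad: np.ndarray, lr: float = 0.1) -> np.ndarray:
    """Plain SGD update; the only change is centralizing the gradient first."""
    return weight - lr * centralize_gradient(grad)

rng = np.random.default_rng(0)
weight = rng.normal(size=(8, 4, 3, 3))    # conv weight (C_out, C_in, k, k)
grad = rng.normal(loc=0.5, size=weight.shape)
new_weight = sgd_step_with_gc(weight, grad)
gc_grad = centralize_gradient(grad)
```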

According to the authors, GC helps for the following reasons:

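It regularizes the weight space. GC can be viewed as projected gradient descent with a constraint on the weights: the mean of each output channel's weights stays constant during training. This acts as a regularizer and improves generalization.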

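It regularizes the output feature space. Under the constraint above, a shift of the input intensity affects the outputs only through the mean of the initial weights. For the usual initializations (e.g., Kaiming), that mean is close to zero. As a result, the output features become more robust to such shifts.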

Like BatchNorm and weight standardization, GC makes the loss landscape smoother, so training becomes faster and more stable.

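It also constrains the gradient itself. Centralization is a projection, so it does not increase the L2 norm of the gradient, which helps prevent exploding gradients.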

Experiments were run on Mini-Imagenet, CIFAR100, ImageNet, Cars, Dogs, CUB-200-2011 and COCO.

8. When Does Unsupervised Machine Translation Work?

Authors: Kelly Marchisio, Kevin Duh, Philipp Koehn (Johns Hopkins University, USA, 2020)

Review author: evgeniyzh (on Habr: Randl)

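The paper studies Unsupervised Machine Translation (UMT). Since Lample et al. 2018, UMT has shown impressive results. For example, Bai et al. 2020 report 30+ BLEU, a metric based on n-gram overlap with a reference translation. However, these results were obtained on similar languages, with training data from the same domain. The authors check how UMT performs in less favorable settings.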
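To make the metric concrete, here is a minimal plain-Python sketch of sentence-level BLEU without smoothing. It is the geometric mean of the clipped n-gram precisions (n = 1..4), multiplied by a brevity penalty. Papers usually report corpus-level BLEU on a 0-100 scale, computed with standard tools, so this sketch is only illustrative.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def sentence_bleu(candidate, reference, max_n=4):
    """Unsmoothed BLEU for one candidate/reference pair of token lists, in [0, 1]."""
    log_precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        overlap = sum(min(count, ref[g]) for g, count in cand.items())  # clipped counts
        total = sum(cand.values())
        if overlap == 0 or total == 0:
            return 0.0                   # without smoothing, any zero precision gives 0
        log_precisions.append(math.log(overlap / total))
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)

reference = "the cat is on the mat".split()
```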

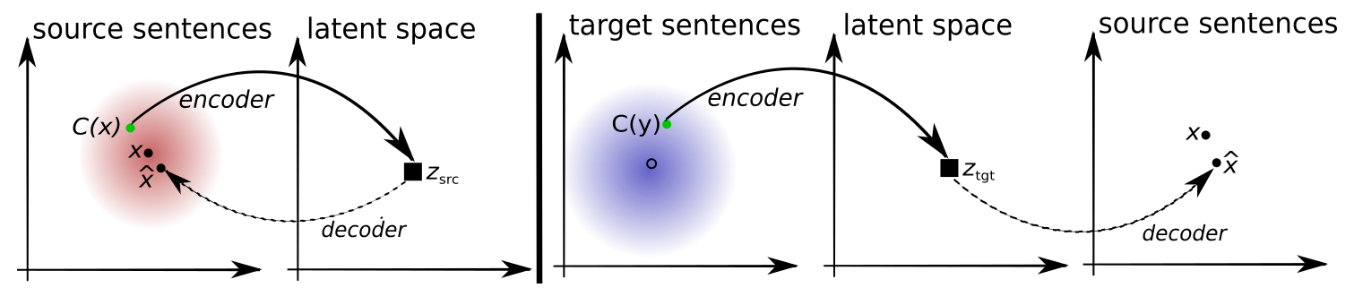

UMT is usually built on an encoder-decoder with a latent space shared by both languages, trained with denoising and back-translation.

The experiments use several domains (UN = United Nations, CC = Common Crawl, News = Newscrawl) and several language pairs.

The UMT system follows Artetxe et al. 2019.

The main finding: UMT quality drops sharply for linguistically dissimilar language pairs, whereas supervised training is much less affected.

When the source and target data come from different domains, UMT can fail almost completely (from 10-20% to 1%).

In general, UMT is sensitive to domain differences between the monolingual corpora of the two languages.

Results are reported in BLEU.