One of the problems that multi-product software vendors often encounter is the duplication of engineering competencies (developers, testers, and infrastructure administrators) in almost every team. This also applies to expensive specialists such as load testing experts.

Instead of doing their direct job and using their unique experience to build the load testing process (choosing a methodology and optimal metrics, writing automated tests that follow load profiles), these engineers often have to deploy a test infrastructure from scratch, configure load tools, embed them in CI systems, and set up monitoring and report publication.

You can find solutions to some of the organizational testing problems that we deal with at Positive Technologies in another article. In this one, I'll talk about integrating load tests into a common CI pipeline using the concept of "load testing as a service". You will learn which docker images of load sources can be used in a CI pipeline and how; how to connect load sources to your CI project using a build template; and what a demo pipeline that runs load tests and publishes the results looks like. The article may be useful to software testing engineers and CI automation engineers who are thinking about the architecture of their load testing system.

Essence of the concept

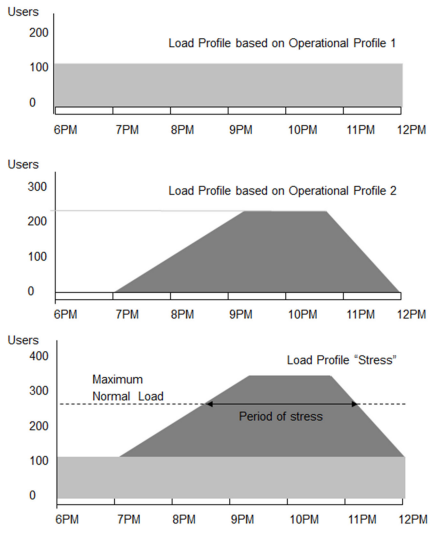

The concept of load testing as a service implies the ability to integrate the load tools Apache JMeter and Yandex.Tank, as well as in-house frameworks, into an arbitrary continuous integration system. The demo is for GitLab CI, but the principles are common to all CI systems.

Load testing as a service is a centralized service for conducting load testing. Load tests run in dedicated agent pools, and the results are published automatically to GitLab Pages, InfluxDB and Grafana, or to test reporting systems (TestRail, ReportPortal, etc.). Automation and scaling are kept as simple as possible: you add the usual gitlab-ci.yml template to a GitLab CI project and parameterize it.

The advantage of the approach is that the entire CI infrastructure, the load agents, the docker images of load sources, the test pipelines, and report publication are maintained by the central automation department (DevOps engineers), while load testing engineers can focus on developing tests and analyzing the results without dealing with infrastructure issues.

For simplicity, we assume that the target application or server under test is already deployed and configured (automated scripts in Python, SaltStack, Ansible, etc. can be used for this). The whole concept of load testing as a service then fits into three stages: preparation, testing, and publication of reports. More details are in the diagram.

When conducting load tests, we try to adhere to the ISTQB standards and methodology, and we use the corresponding terminology and recommended metrics. Here is a short list of basic concepts and definitions in load testing.

- Load agent: the virtual machine on which the load source application (Apache JMeter, Yandex.Tank, or a self-written load module) is launched.
- Target: a server, or an application installed on a server, that will be subjected to load.
- Test case: a set of parameterized steps, that is, user actions and the expected reactions to them, with network requests and responses fixed for the given parameters.
- Profile (load plan): in the ISTQB methodology (Section 4.2.4, p. 43), load profiles determine the metrics critical for a particular test and the ways load parameters change during the test. You can see examples of profiles in the figure.
- Test: a script with a predefined set of parameters.
- Test plan: a test suite and a load profile.
- Testrun: one iteration of running one test, with a fully executed load scenario and a received report.
- Request: an HTTP request sent from the agent to the target.
- Response: an HTTP response sent from the target to the agent.
- HTTP response status: a standard response code from the application server.
- Transaction: a full request-response cycle, counted from the start of sending the request to the completion of receiving the response.
- Transaction status: whether the request-response cycle completed successfully. If any error occurred in the cycle, the entire transaction is considered unsuccessful.
- Response time (latency): the time from the end of sending a request to the start of receiving the response.
- Metrics: the characteristics of the loaded service and of the load agent that are determined during load testing.
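The difference between a transaction and its response time (latency) is easy to mix up, so here is a minimal Python sketch of the definitions above. The `Transaction` class and its field names are hypothetical, and treating only 2xx/3xx status codes as success is an assumption of this sketch, not part of the methodology.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Transaction:
    """One request-response cycle; timestamps are seconds on the agent's clock."""
    request_start: float              # agent starts sending the request
    request_end: float                # agent finishes sending the request
    response_start: Optional[float]   # first byte of the response arrives
    response_end: Optional[float]     # response is fully received
    http_status: Optional[int]        # HTTP response status, None if no response

    @property
    def succeeded(self) -> bool:
        # Any error in the cycle makes the whole transaction unsuccessful.
        # Assumption: only 2xx and 3xx status codes count as success.
        return (
            self.response_end is not None
            and self.http_status is not None
            and 200 <= self.http_status < 400
        )

    @property
    def latency(self) -> Optional[float]:
        # Response time: end of sending the request -> start of the response.
        if self.response_start is None:
            return None
        return self.response_start - self.request_end

    @property
    def duration(self) -> Optional[float]:
        # Transaction time: start of sending the request -> end of the response.
        if self.response_end is None:
            return None
        return self.response_end - self.request_start


if __name__ == "__main__":
    ok = Transaction(0.0, 0.25, 0.75, 1.0, 200)
    print(ok.succeeded, ok.latency, ok.duration)
```

Keeping the four timestamps separate, instead of storing a single elapsed time, lets both values be derived from the same record.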

Basic metrics for measuring load parameters

Some of the most common metrics recommended by the ISTQB methodology (pp. 36, 52) are shown in the table below. Similar metrics for the agent and the target are shown on the same line.

The basic scheme of load testing is very simple and consists of the three main stages I already mentioned, Prepare, Test, and Report: preparing the test targets and setting the parameters of the load sources, then running the load tests, and finally generating and publishing a test report. Notes to the scheme:

- QA.Tester is a load testing expert.

- Target is the application whose behavior under load you need to know.
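Basic metrics like the ones mentioned at the start of this section are usually aggregated from the individual transactions of a testrun. The sketch below is only an illustration: the function names are hypothetical, and the metrics chosen (throughput, error rate, mean and 95th percentile response time) are assumed examples, not necessarily the ones listed in the ISTQB table.

```python
import math
from typing import Dict, Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(response_times: Sequence[float], failures: int,
              test_seconds: float) -> Dict[str, float]:
    """Aggregate one testrun.

    response_times holds the response times of successful transactions (seconds),
    failures is the number of unsuccessful transactions.
    """
    if not response_times:
        raise ValueError("no successful transactions to summarize")
    total = len(response_times) + failures
    return {
        "transactions": total,
        "throughput_per_s": total / test_seconds,
        "error_rate": failures / total,
        "mean_response_s": sum(response_times) / len(response_times),
        "p95_response_s": percentile(response_times, 95),
    }


if __name__ == "__main__":
    print(summarize([0.25, 0.5, 0.75, 1.5], failures=1, test_seconds=10))
```

The nearest-rank percentile is used here because it always returns a value that was actually measured; reporting tools may interpolate instead, so their numbers can differ slightly.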

Classifier of entities, stages and steps in the diagram

CI-

Let's move on to the practical part. I want to show how we implemented the concept of load testing as a service on some projects at Positive Technologies.

First, with the help of our DevOps engineers, we created a dedicated agent pool in GitLab CI to run load tests. To keep templates from confusing these agents with others, such as build pools, we added a tag to them: tags: load. You can use any other clear tags. They are set when GitLab CI Runners are registered.

How do you work out the required hardware capacity? The characteristics of load agents (a sufficient amount of vCPU, RAM, and disk) can be calculated from the fact that Docker, Python (for Yandex.Tank), the GitLab CI agent, and Java (for Apache JMeter) must all run on the agent. For Java, JMeter also recommends a minimum of 512 MB of RAM and, as an upper limit, 80% of the available memory. Based on our experience, we therefore recommend at least 4 vCPU, 4 GB RAM, and a 60 GB SSD for load agents. Network card bandwidth is determined by the requirements of the load profile.

We mainly use two load sources, the docker images of Apache JMeter and Yandex.Tank.

Yandex.Tank is Yandex's open source load testing tool. At the heart of its modular architecture is Phantom, a high-performance asynchronous hit-based HTTP request generator. The Tank has built-in monitoring of the resources of the server under test over SSH, can stop a test automatically under given conditions, and can output results both to the console and as graphs; you can also connect your own modules to extend its functionality. By the way, we used the Tank before it was mainstream. In the article "Yandex.Tank and Automation of Load Testing", you can read how we used it in 2013 to load test PT Application Firewall, one of our company's products.

Apache JMeter is Apache's open source load testing tool. It can be used equally well for testing static and dynamic web applications. JMeter supports a huge number of protocols and ways of interacting with applications: HTTP and HTTPS (Java, NodeJS, PHP, ASP.NET, etc.), SOAP / REST web services, FTP, TCP, LDAP, SMTP(S), POP3(S) and IMAP(S), and databases through JDBC; it can also execute shell commands and work with Java objects. JMeter has an IDE for creating, debugging, and executing test plans. There is also a CLI for working on the command line of any Java-compatible OS (Linux, Windows, Mac OS X). The tool can dynamically generate an HTML test report.

For ease of use within our company, and so that testers can change and extend the environment, we set up builds of the load source docker images in GitLab CI, publishing them to the internal docker registry on Artifactory. This makes it faster and easier to connect them to load testing pipelines. For how to do a docker push to a registry via GitLab CI, see the instructions.

We took this basic Dockerfile for Yandex.Tank:

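```dockerfile
FROM direvius/yandex-tank
# Reset the entrypoint inherited from the base image
ENTRYPOINT [""]
```

And for Apache JMeter, this one:

```dockerfile
FROM vmarrazzo/jmeter
# Reset the entrypoint inherited from the base image
ENTRYPOINT [""]
```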
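Going back to agent sizing for a moment: the JMeter memory guidance quoted earlier (at least 512 MB, at most 80% of the available memory) can be turned into JVM heap options. This is a minimal sketch. The function names are hypothetical, and handing the result to JMeter through a `HEAP` environment variable is an assumption about how the start script in your image is configured.

```python
from typing import Tuple

MIN_HEAP_MB = 512          # lower bound quoted in the text
MAX_HEAP_PERCENT = 80      # upper bound: share of the available memory


def jmeter_heap_bounds_mb(available_ram_mb: int) -> Tuple[int, int]:
    """Return (min_heap_mb, max_heap_mb) for an agent with the given available RAM."""
    max_heap = available_ram_mb * MAX_HEAP_PERCENT // 100
    if max_heap < MIN_HEAP_MB:
        raise ValueError(
            f"{available_ram_mb} MB of RAM is too little for a {MIN_HEAP_MB} MB heap"
        )
    return MIN_HEAP_MB, max_heap


def heap_options(available_ram_mb: int) -> str:
    """Format the bounds as standard JVM -Xms/-Xmx options."""
    low, high = jmeter_heap_bounds_mb(available_ram_mb)
    return f"-Xms{low}m -Xmx{high}m"


if __name__ == "__main__":
    # The recommended agent has 4 GB of RAM.
    print(heap_options(4096))
```

On a real agent the memory available to JMeter is less than the total, because Docker and the GitLab CI agent run on the same machine, so the upper bound is a ceiling and not a target.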

You can read how our continuous integration system works in the article "Automation of development processes: how we implemented DevOps ideas in Positive Technologies".

Template and pipeline

An example template for conducting load tests is available in the demo-load project. You can read the instructions for using the template in the readme file. The template itself (the .gitlab-ci.yml file) contains notes on what each step is responsible for.

The template is very simple and demonstrates the three stages of load testing described in the diagram above: preparation, testing, and publication of reports. The stages directive is responsible for this: Prepare, Test, and Report.

- Prepare. The preparation stage; the docker registry is used here.

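- Test. The stage where the load sources, Yandex.Tank and Apache JMeter, are run in CI jobs. The tests themselves are kept in the ./tests directory.

- Report. The stage that takes the results of the Test stage and publishes them in GitLab Pages. For GitLab Pages, the ./public directory must contain index.html.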
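As an illustration of the Report stage, the sketch below writes a small index.html into ./public that links to the reports produced by the Test stage. It is not the code from the demo-load template: the function name, the report titles, and the relative paths are hypothetical.

```python
import html
from pathlib import Path
from typing import Dict


def write_pages_index(public_dir: Path, reports: Dict[str, str]) -> Path:
    """Write public_dir/index.html with one link per report.

    reports maps a human-readable title to a path relative to public_dir.
    """
    public_dir.mkdir(parents=True, exist_ok=True)
    items = "\n".join(
        f'    <li><a href="{html.escape(href, quote=True)}">{html.escape(title)}</a></li>'
        for title, href in reports.items()
    )
    page = (
        "<!DOCTYPE html>\n"
        "<html>\n"
        "<head><meta charset=\"utf-8\"><title>Load test reports</title></head>\n"
        "<body>\n"
        "  <h1>Load test reports</h1>\n"
        "  <ul>\n"
        f"{items}\n"
        "  </ul>\n"
        "</body>\n"
        "</html>\n"
    )
    index = public_dir / "index.html"
    index.write_text(page, encoding="utf-8")
    return index


if __name__ == "__main__":
    # Hypothetical layout: each load source puts its report in its own subfolder.
    write_pages_index(Path("public"), {
        "Apache JMeter": "jmeter/index.html",
        "Yandex.Tank": "yandextank/report.txt",
    })
```

Titles and links are escaped before they go into the page, since test names may contain characters that are special in HTML.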

In the demo example, the pipeline contains load tests with two load sources (you can disable the ones you don't need).

Apache JMeter can generate an HTML report itself, so it makes sense to save it to GitLab Pages using the standard tools.

In the demo for Yandex.Tank, you will see only a stub text report in the section for GitLab Pages. During testing, the Tank can save results to an InfluxDB database, and from there they can be displayed, for example, in Grafana (this is configured in the file ./tests/example-yandextank-test.yml).
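To make the Test stage more concrete, here is a hedged sketch of the command lines such jobs might run. It uses JMeter's non-GUI options (-n, -t, -l, -e, -o) and Yandex.Tank's -c option for the config file. The helper names and the JMeter file paths are hypothetical, and the sketch assumes both executables are on PATH inside the containers as `jmeter` and `yandex-tank`.

```python
import shlex
from typing import List


def jmeter_command(test_plan: str, results_file: str, report_dir: str) -> List[str]:
    """Build a non-GUI JMeter run that also generates the HTML report."""
    return [
        "jmeter",
        "-n",                 # non-GUI (command-line) mode
        "-t", test_plan,      # test plan (.jmx) to run
        "-l", results_file,   # file for the raw sample results
        "-e",                 # generate the HTML report at the end of the run
        "-o", report_dir,     # folder for the generated report
    ]


def yandex_tank_command(config: str) -> List[str]:
    """Build a Yandex.Tank run with the given config file."""
    return ["yandex-tank", "-c", config]


if __name__ == "__main__":
    # The JMeter paths are made up for the example; the Tank config is the one
    # named in the article.
    print(shlex.join(jmeter_command(
        "./tests/example-jmeter-test.jmx", "./results.jtl", "./public/jmeter")))
    print(shlex.join(yandex_tank_command("./tests/example-yandextank-test.yml")))
```

Writing the JMeter report straight into a subfolder of ./public means the Report stage only has to publish that directory.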

Summary

In this article, I talked about the concept of "load testing as a service". The main idea is to use an infrastructure of pre-configured pools of load agents, docker images of load sources, reporting systems, and a pipeline that unites them in GitLab CI, based on a simple .gitlab-ci.yml template (see the linked example). All of this is maintained by a small team of automation engineers and replicated at the request of product teams. I hope this helps you prepare and implement a similar scheme in your company. Thank you for your attention!

PS I want to say a big thank you to my colleagues, Sergey Kurbanov and Nikolay Yusev, for technical assistance with implementing the concept of load testing as a service in our company.

Author: Timur Gilmullin, Deputy Head of Technology and Development Processes (DevOps), Positive Technologies