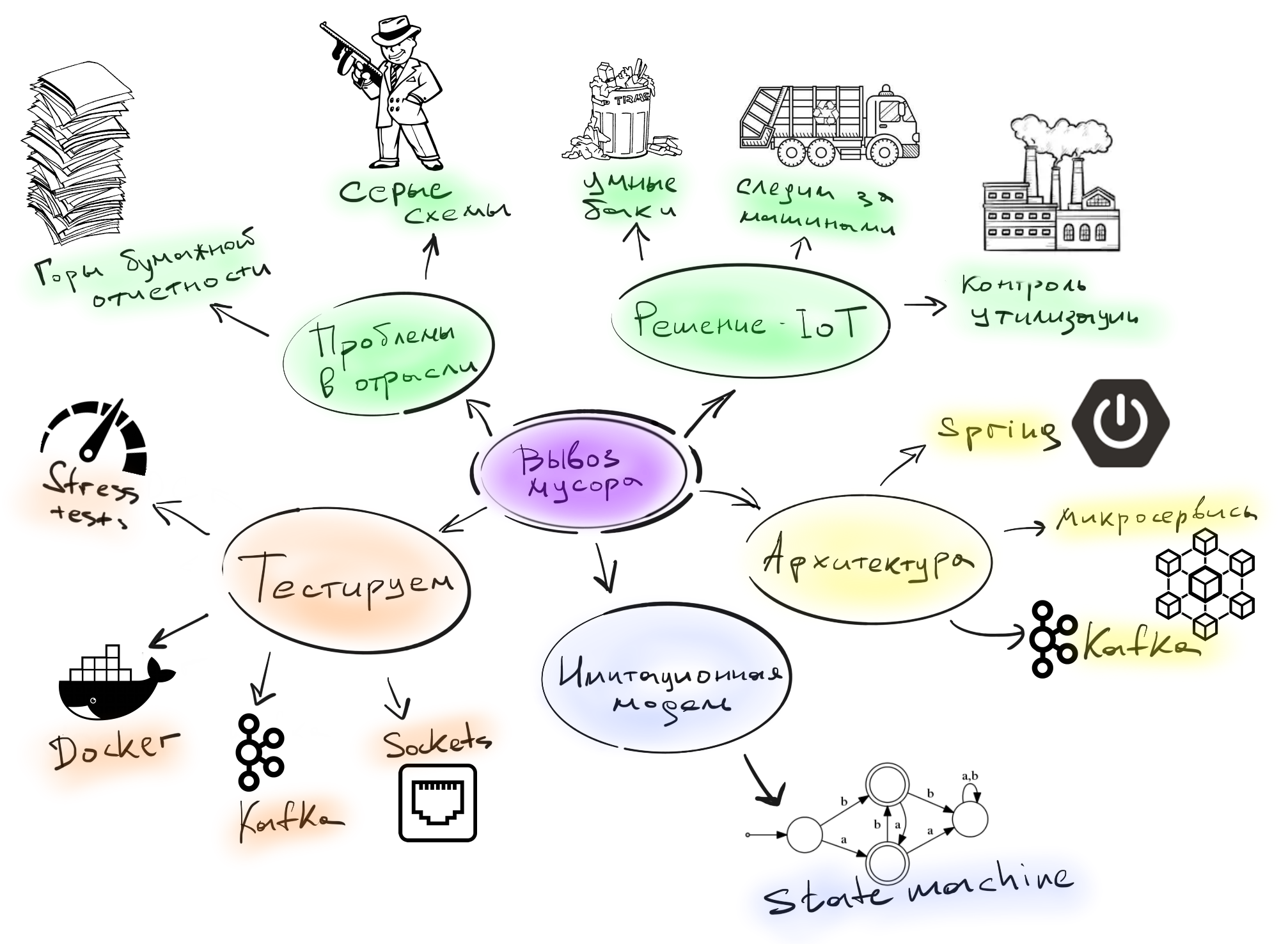

As I said in the previous part, when you develop an IoT project the protocols for talking to devices are rather unstable, and the chances of losing contact with test devices after a firmware update were quite high. Several teams were involved in development, and there was a strict requirement: we must not lose the ability to test the business layer of the application, even if reflashing the devices breaks the entire flow of working with sensors.

So that business analysts could test their hypotheses on data more or less similar to reality, we built a simulation model of the device. If a device broke because of new firmware and data was urgently needed, we launched the simulation model on the network in place of the real device; it sent data in the old format and produced the result.

Another advantage of the model is that the business would never buy a large batch of devices just to test a hypothesis. Suppose the business analysis team decides that predicting container filling time should work differently. Nobody is going to rush out and buy 10,000 sensors to test that.

Development of a simulation model

The simulation model itself looked like this. The state and behavior of the garbage container are described by an ordinary state machine. First, we initialize the state machine in the `EMPTY` state (`level = 0`). We can then perform actions on it, such as throwing garbage into the container. After each action we need to decide whether the container is still empty (`level < MAX_LEVEL`) or has become full (`level >= MAX_LEVEL`). In the second case, the state changes to `FULL`.

Someone may unload the garbage from a full container, or a janitor may come to clean up, and again we need to decide which state to switch to. The `CHOICE` state is responsible for that decision; in state machine terminology, it is something like an `if` block.

The container may also catch fire, in which case the state changes to `FIRE`. The container may fall over, and its state becomes `FALL` (in the talk I described the unexpected reasons a container can fall). There is also a `LOST` state, reachable from any other state, which is set when the connection is lost.

Such a state machine describes almost all the behavior of the container and the sensor on it. But it is not enough for a simulation model: we know the possible states and the transitions between them, but not how likely the events are or when they will occur.

In practice, it turned out that the probability of events depended on the time of day, because:

- carriers do not work at night;

- people throw more garbage at certain hours (in the morning before work and in the evening).
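To make the model described above more concrete, here is a minimal Java sketch of such a state machine together with per-hour event probabilities. Only the state names (`EMPTY`, `FULL`, `FIRE`, `FALL`, `LOST`), the `CHOICE` step and `MAX_LEVEL` come from the description. The event names, the `HourlyProbability` and `ContainerSimulation` classes, the capacity of 100 and the amount of 10 per throw are assumptions made for illustration, not the project's actual code. The text does not say how a container leaves `FIRE`, `FALL` or `LOST`, so the sketch simply treats them as terminal.

```java
import java.util.Arrays;
import java.util.EnumMap;
import java.util.Map;
import java.util.Random;

// States from the text. CHOICE is modeled as the choose() method below.
enum State { EMPTY, FULL, FIRE, FALL, LOST }

// Hypothetical event names, introduced for this sketch only.
enum Event { THROW_GARBAGE, UNLOAD, CATCH_FIRE, TIP_OVER, CONNECTION_LOST }

final class ContainerStateMachine {
    static final int MAX_LEVEL = 100; // assumed capacity in arbitrary units
    private State state = State.EMPTY;
    private int level = 0;

    State state() { return state; }
    int level() { return level; }

    // Applies one event and returns the resulting state.
    State apply(Event event, int amount) {
        if (event == Event.CONNECTION_LOST) {
            state = State.LOST;               // reachable from any state
            return state;
        }
        if (state != State.EMPTY && state != State.FULL) {
            return state;                     // assumption: FIRE, FALL, LOST are terminal
        }
        switch (event) {
            case THROW_GARBAGE: level = Math.min(MAX_LEVEL, level + amount); state = choose(); break;
            case UNLOAD:        level = 0; state = choose(); break;
            case CATCH_FIRE:    state = State.FIRE; break;
            case TIP_OVER:      state = State.FALL; break;
            default:            break;
        }
        return state;
    }

    // The CHOICE step: an "if" on the fill level.
    private State choose() {
        return level >= MAX_LEVEL ? State.FULL : State.EMPTY;
    }
}

// Probability of an event for each hour of the day (0..23).
final class HourlyProbability {
    private final double[] byHour = new double[24];

    HourlyProbability(double defaultProbability) {
        Arrays.fill(byHour, defaultProbability);
    }

    // Sets the probability for hours fromHour (inclusive) to toHour (exclusive).
    HourlyProbability between(int fromHour, int toHour, double probability) {
        Arrays.fill(byHour, fromHour, toHour, probability);
        return this;
    }

    double at(int hour) { return byHour[hour]; }
}

final class ContainerSimulation {
    private final Map<Event, HourlyProbability> schedule = new EnumMap<>(Event.class);
    private final Random random;

    ContainerSimulation(Random random) { this.random = random; }

    ContainerSimulation on(Event event, HourlyProbability probability) {
        schedule.put(event, probability);
        return this;
    }

    // One simulated hour: each configured event fires with its probability for that hour.
    void tick(ContainerStateMachine machine, int hour) {
        for (Map.Entry<Event, HourlyProbability> entry : schedule.entrySet()) {
            if (random.nextDouble() < entry.getValue().at(hour)) {
                machine.apply(entry.getKey(), 10); // assumed amount per throw
            }
        }
    }
}
```

In this sketch, something like `new HourlyProbability(0.0).between(8, 18, 0.3)` registered for `UNLOAD` would express that carriers do not work at night, and a higher value for `THROW_GARBAGE` between 7 and 9 would express the morning peak.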

Therefore, we let the business analysis team customize the simulation's behavior: they could set the probability of an event for a given time of day.

Simple and intuitive stress test

Simulation has many advantages in its own right, and one of them is cheap load testing. It is cheap because a simulated sensor is really just a separate thread that starts the state machine and applies events to it, while the events themselves are sent to the real server. For the backend, then, the simulation is indistinguishable from a real sensor. If we need 1000 sensors, we start 1000 threads and off we go. The simulation also scales very well.

On the one hand, this is a rather crude way to test load; on the other, it let us push a lot of realistic data through the entire project.

And let's not forget the gifted Chinese developers who ignored standard protocols like MQTT and hacked together their own protocol on top of sockets. Because of that, we had to write our own server that accepts data over sockets in this proprietary protocol. The server had to be multi-threaded, since many sensors send input at once, and this part also had to be tested separately with performance tests.

You could take JMeter (write a typical test script), JMH / JCStress (test isolated parts and build a finer-grained benchmark), or something of your own. When you face a similar decision, I advise listening to professionals such as Alexei Shipilev. At JPoint 2017 he gave a great talk on how to benchmark different things and what to think about when doing performance testing.

We chose to build something of our own, because the project had an atypical approach to QA: we had no separate team of testers, and the backend team tested the functionality itself. That is, the person who wrote the socket server had to cover the code with ordinary unit, integration, and performance tests.

We had a small tool that let us quickly describe a load scenario and run it in the required number of parallel threads:

```java
// StressTestRunner and ExecutionMode come from our own small testing tool;
// sensor, MESSAGE, THREADS_COUNT, MESSAGES_COUNT and verifyReceived are defined elsewhere in the test.
StressTestRunner.test()
        .mode(ExecutionMode.EXECUTOR_MODE)
        .threads(THREADS_COUNT)             // number of parallel threads
        .iterations(MESSAGES_COUNT)         // number of messages to send
        .timeout(5, TimeUnit.SECONDS)       // how long the run may take
        .run(() -> sensor.send(MESSAGE));   // the action executed in each thread

// Wait until the server has processed everything that was sent
Awaitility.await()
        .atMost(5, TimeUnit.SECONDS)
        .untilAsserted(() ->
                verifyReceived(MESSAGES_COUNT)
        );
```
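For readers who want to try the idea without that tool, a runner of this kind can be approximated with `java.util.concurrent` alone. The sketch below is a hypothetical stand-in, not the implementation of `StressTestRunner`: the class name `MiniStressRunner` and its signature are invented for illustration, and it assumes that the iteration count is the total number of calls shared between all threads.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a stress-test runner; not the tool used in the project.
final class MiniStressRunner {

    // Runs the task `iterations` times in total, spread over `threads` workers.
    // Returns true if all workers finished within the timeout.
    static boolean run(int threads, int iterations, long timeout, TimeUnit unit, Runnable task) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        CountDownLatch start = new CountDownLatch(1);        // releases all workers at once
        CountDownLatch done = new CountDownLatch(threads);   // counts finished workers
        AtomicInteger remaining = new AtomicInteger(iterations);

        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                try {
                    start.await();
                    // Each worker takes iterations from the shared counter until none are left.
                    while (remaining.getAndDecrement() > 0) {
                        task.run();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    done.countDown();
                }
            });
        }

        start.countDown();
        try {
            return done.await(timeout, unit);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            pool.shutdownNow();   // interrupts workers that are still running after the timeout
        }
    }
}
```

With such a helper, the scenario would look roughly like `MiniStressRunner.run(THREADS_COUNT, MESSAGES_COUNT, 5, TimeUnit.SECONDS, () -> sensor.send(MESSAGE))`, followed by the same Awaitility check on the server side.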

We specify how many threads to run, how many messages to send, and how long it may take, and in each thread we send data to the socket. All that remains is to wait until our server has processed all the data correctly. The result is just a few lines of code that any backend developer can write.

Emulation of network problems

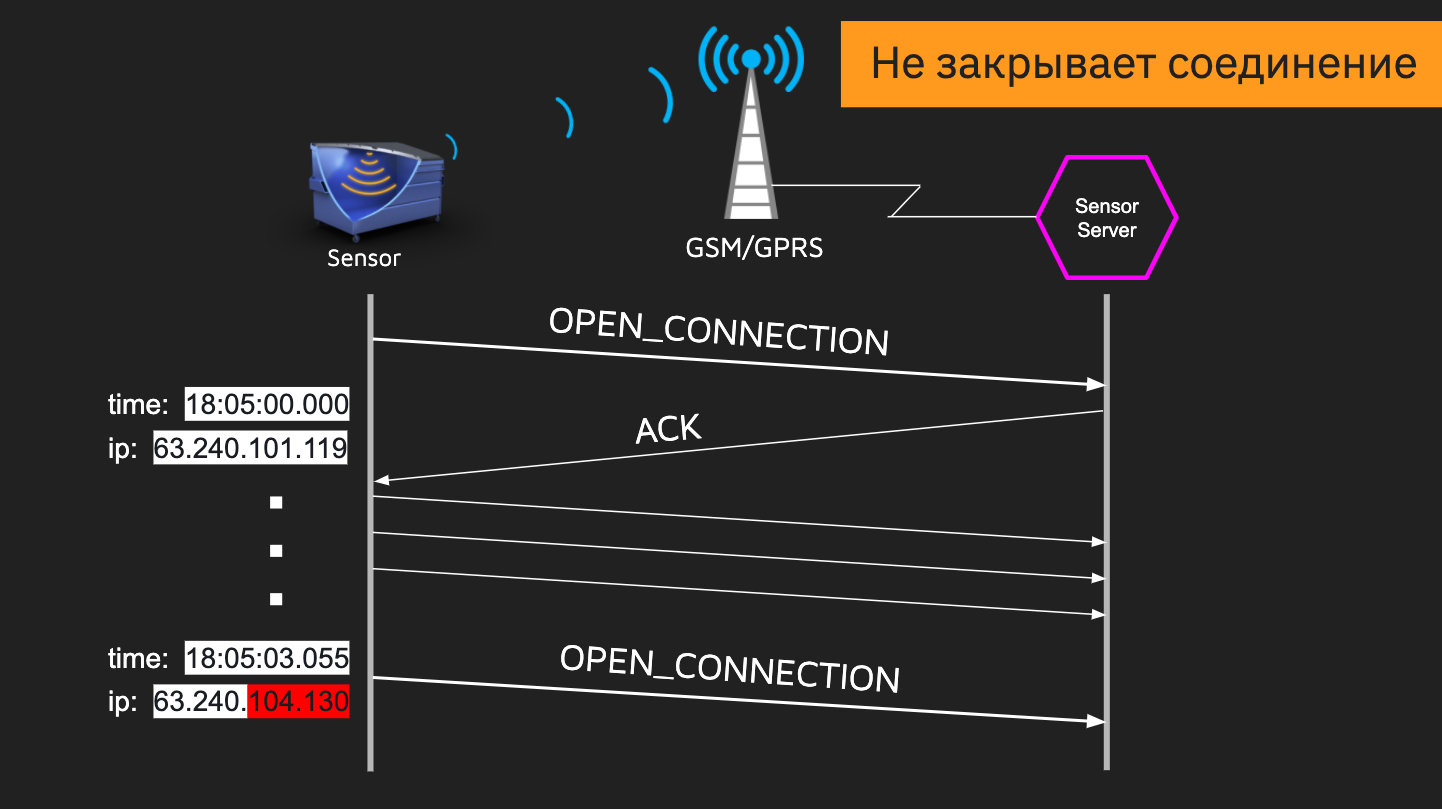

With the simulation we were also able to emulate poor-quality connections and other quirks of working with sockets. The GSM SIM cards in the sensors do not have "white" (public static) IP addresses, so we could receive data from different IPs 50 times a day. It often happened that a connection was opened and data transfer began, then the IP address changed, and the server ended up with a new connection while the old one was never closed. If we had not accounted for this, within a couple of days we would have run out of free ports on the server.

There was also a problem with sensors of different speeds. A slow device may open a connection and freeze for a while, while a fast device is already sending data. All of this has to be handled correctly. In the simulation, such a situation is easy to reproduce using pauses.
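A slow or frozen sensor of this kind is easy to reproduce on one machine. The following is a minimal sketch, not the project's actual code: `SlowSensor`, `PatientReader` and `NetworkEmulationDemo` are hypothetical names, and the chunk size, pauses and timeouts are arbitrary. The simulated sensor opens a connection and stalls before every chunk. The server side sets a read timeout with `Socket.setSoTimeout` and always closes the socket, so a frozen or abandoned connection does not hold resources forever.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;
import java.nio.charset.StandardCharsets;

// Simulated sensor: opens a connection, then pauses before every chunk it sends.
final class SlowSensor {
    static void send(String host, int port, byte[] payload, int chunkSize, long pauseMillis)
            throws IOException, InterruptedException {
        try (Socket socket = new Socket(host, port)) {
            OutputStream out = socket.getOutputStream();
            for (int offset = 0; offset < payload.length; offset += chunkSize) {
                Thread.sleep(pauseMillis);   // the simulated stall
                out.write(payload, offset, Math.min(chunkSize, payload.length - offset));
                out.flush();
            }
        }
    }
}

// Server side: reads until EOF, gives up if the peer is silent for too long.
final class PatientReader {
    static byte[] readAll(Socket socket, int idleTimeoutMillis) throws IOException {
        try (Socket s = socket) {                // always closed, even on timeout
            s.setSoTimeout(idleTimeoutMillis);   // read() throws SocketTimeoutException when idle
            InputStream in = s.getInputStream();
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            byte[] chunk = new byte[256];
            int n;
            while ((n = in.read(chunk)) != -1) {
                buffer.write(chunk, 0, n);
            }
            return buffer.toByteArray();
        }
    }
}

final class NetworkEmulationDemo {
    // Sends a message from a slow sensor to a local server.
    // Returns what the server received, or "TIMEOUT" if the server gave up waiting.
    static String roundTrip(String message, long pauseMillis, int idleTimeoutMillis) {
        try (ServerSocket server = new ServerSocket(0)) {   // port 0: any free local port
            server.setSoTimeout(5000);                      // do not wait forever in accept()
            int port = server.getLocalPort();
            Thread sensor = new Thread(() -> {
                try {
                    byte[] payload = message.getBytes(StandardCharsets.UTF_8);
                    SlowSensor.send("localhost", port, payload, 2, pauseMillis);
                } catch (IOException | InterruptedException ignored) {
                    // the server may already have dropped the connection
                }
            });
            sensor.start();
            try {
                byte[] received = PatientReader.readAll(server.accept(), idleTimeoutMillis);
                return new String(received, StandardCharsets.UTF_8);
            } catch (SocketTimeoutException e) {
                return "TIMEOUT";
            } finally {
                sensor.join();
            }
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Calling `roundTrip` with a short pause and a generous timeout models a slow but healthy sensor, while a pause longer than the idle timeout models a device that opened a connection and froze.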

These are only some of the scenarios that can be built into the model.

Conclusions

It seems to me that the ability to simulate is exactly what sets IoT apart from other projects. Simulating device behavior is easier than simulating human behavior: at the input we get deterministic values that correlate well with our model, not random human actions. Because device behavior is easier to describe logically than human behavior, testing the system becomes easier too.

We have looked at quite a few different aspects of IoT development. If you skipped the previous two parts of this article, you can find them here:

- IoT where you did not wait (Part 1) - The subject area and problems
- IoT where you did not wait (Part 2) - Application architecture and testing of IoT-specific things
- GitHub repository with the testing tools discussed here
