- Does your system consist of many interconnected services?

- Do you still update service code manually when the public API changes?

- Do changes in your services often break other people's work, and do other developers hate you for it?

If you answered yes at least once, welcome!

Terms

Public contracts, specifications: public interfaces through which you can interact with a service. In this article the terms mean the same thing.

What this article is about

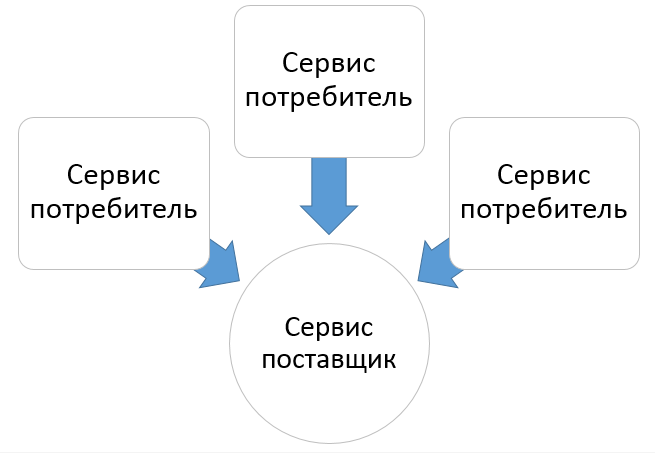

Learn how to reduce the time spent developing web services by using tools for a unified description of contracts and automatic code generation. Used properly, the techniques and tools described below let you roll out new features quickly without breaking old ones.

What the problem looks like

There is a system that consists of several services, assigned to different teams. Consumer services depend on a service provider. The system evolves, and one day the service provider changes its public contracts. If the consumer services are not ready for the change, the system stops working properly.

How to solve this problem

The provider team fixes everything

This can work if the provider team knows the domain of the other services and has access to their git repositories. It works only in small projects with few dependent services. This is the cheapest option; if possible, use it.

The consumer team updates its own service

Why do others break things while we have to repair them? The main question, however, is how to fix your service: what does the contract look like now? You have to study the new provider code or contact the provider team, so time is spent reading code and coordinating with another team.

Think about how to prevent the problem

This is the most reasonable option in the long run. We consider it in the next section.

How to prevent the problem

The software development life cycle can be represented as three stages: design, implementation, and testing. Each stage needs to be extended as follows:

- at the design stage, we define contracts declaratively;

- during implementation, we generate server and client code from the contracts;

- during testing, we check the contracts and try to take consumer needs into account (consumer-driven contracts, CDC).

Each step is explained below using our problem as an example.

What the problem looks like in our system

This is what our ecosystem looks like. Circles are services, and arrows are the communication channels between them. Frontend is a web-based client application. Most arrows lead to the Storage Service, which stores documents. It is the most important service, since our product is an electronic document management system. If this service changes its contracts, the system immediately stops working.

Our system is mainly written in C#, but there are also services in Go and Python. What the other services in the figure do does not matter here.

Each service has its own client implementation for working with the Storage Service. When the contracts change, the code has to be updated manually in every project. We want to move from manual updates to automatic ones. This speeds up client code changes and reduces errors such as typos in URLs and other careless mistakes. However, this approach does not fix errors in client business logic; those can only be fixed manually.

From problem to task

In our case, we need to implement automatic generation of client code. The following has to be taken into account:

- on the server side, the controllers are already written;

- the browser is a client of the service;

- services communicate over HTTP;

- generation must be customizable, for example to support JWT.

Questions

While solving the problem, the following questions arose:

- which tool to choose;

- how to get contracts;

- where to place the contracts;

- where to place the client code;

- at what point to do the generation.

The answers to these questions follow.

Which tool to choose

Tools for working with contracts fall into two groups: RPC and REST. RPC can be understood as simply a remote call, while REST imposes additional conventions on HTTP verbs and URLs.
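To make the difference concrete, the sketch below builds the same GetDescription call in both styles. The REST variant follows the route of the DescriptionController shown later in the article (GET Description/{pluginName}/{binaryDataId}). The RPC variant posts a hypothetical JSON-RPC-like envelope to a single endpoint. CallStyles, the /rpc endpoint, and the payload shape are illustrative assumptions. GRPC itself would use Protobuf over HTTP/2 rather than JSON.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;

public static class CallStyles
{
    // REST: the operation is identified by the HTTP verb (GET) plus the resource URL.
    public static HttpRequestMessage RestGetDescription(Uri baseUri, string pluginName, Guid binaryDataId) =>
        new HttpRequestMessage(
            HttpMethod.Get,
            new Uri(baseUri, $"Description/{Uri.EscapeDataString(pluginName)}/{binaryDataId}"));

    // RPC (hypothetical JSON-RPC-like envelope): a single endpoint, and the
    // operation is identified by a method name inside the payload.
    public static HttpRequestMessage RpcGetDescription(Uri endpoint, string pluginName, Guid binaryDataId)
    {
        var payload = JsonSerializer.Serialize(new
        {
            method = "GetDescription",
            @params = new { pluginName, binaryDataId }
        });
        return new HttpRequestMessage(HttpMethod.Post, endpoint)
        {
            Content = new StringContent(payload, Encoding.UTF8, "application/json")
        };
    }
}
```

In the REST call, the verb and URL carry the meaning, so the client must agree with the server on both. In the RPC call, only the method name and its parameters matter.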

The differences between RPC and REST calls are presented here.

Tools

The table shows a comparison of tools for working with REST and RPC.

Code first: we write the server side first and then derive the contracts from it. This is convenient when the server side already exists, because the contracts do not have to be described manually.

Spec first: we define the contracts first and then generate the client and server sides from them. This is convenient at the start of development, when there is no code yet.

Conclusion: WSDL is not suitable because of its redundancy. Apache Thrift is too exotic and hard to learn. GRPC requires .NET Core 3.0 and .NET Standard 2.1, whereas at the time of the analysis we used .NET Core 2.2 and .NET Standard 2.0. Browsers do not support GRPC out of the box, so an additional solution is required. GRPC uses Protobuf binary serialization over HTTP/2, which narrows the range of API testing utilities such as Postman. Load testing with tools like JMeter may also require additional effort. GRPC is not suitable for us: switching to it would require a lot of resources. OpenAPI does not require any platform upgrades, and it wins us over with the abundance of tools that support REST and this specification. We chose it.

Tools for working with OpenAPI

The table shows a comparison of tools for working with OpenAPI.

Conclusion: Swashbuckle is not suitable because it only produces the specification; generating client code requires an additional solution. OpenApiTools is an interesting tool with many settings, but it does not support code first. Its advantage is that it can generate server code in many languages. NSwag is convenient because it is a NuGet package and is easy to hook into the project build. It supports everything we need: the code-first approach and C# client code generation. We chose it.

Where to store contracts. How services access contracts

Below are options for storing contracts, listed in order of increasing complexity:

- the provider service project folder is the simplest option. If you are just trying out the approach, choose it;

- a shared folder is a valid option if the projects involved are in the same repository. In the long run, it is hard to keep the contracts in the folder consistent, and an additional tool may be needed to track different contract versions, etc.;

- a separate repository for specifications: if the projects are in different repositories, the contracts should be placed in a shared location. The drawbacks are the same as for the shared folder;

- through a service API (swagger.ui, swaggerhub): a separate service that manages specifications.

We decided to use the simplest option: store the contracts in the provider service project folder. This is enough for us at this stage, so why pay more?

At what point to generate

Now we need to decide at what point to run code generation. If the contracts were stored in a shared location, consumer services could fetch them and generate code themselves when needed. We decided to store the contracts in the provider project folder, which means generation can run right after the provider project itself is built.

Where to place the client code

The client code is generated from the contracts; it remains to decide where to place it. It seems like a good idea to put the client code into a separate StorageServiceClientProxy project, which every consumer project can reference.

Benefits of this solution:

- the client code stays close to its service and is always up to date;

- consumers can reference the project within one repository.

Disadvantages:

- it does not work if a client has to be generated in another part of the system, for example in a different repository. This can be solved with, at minimum, a shared folder for contracts;

- consumers must be written in the same language. If you need a client in another language, you have to use OpenApiTools.

Hooking up NSwag

Controller Attributes

NSwag needs to be told how to generate a correct specification for our controllers. To do this, we annotate them with attributes:

```csharp
using System;
using Microsoft.AspNetCore.Mvc;

[Microsoft.AspNetCore.Mvc.Route("[controller]")]
[Microsoft.AspNetCore.Mvc.ApiController]
public class DescriptionController : ControllerBase
{
    // Operation ID in the specification; the generated client method name is derived from it
    [NSwag.Annotations.OpenApiOperation("GetDescription")]
    // Possible responses; they end up in the "responses" section of the specification
    [Microsoft.AspNetCore.Mvc.ProducesResponseType(typeof(ConversionDescription), 200)]
    [Microsoft.AspNetCore.Mvc.ProducesResponseType(401)]
    [Microsoft.AspNetCore.Mvc.ProducesResponseType(403)]
    [Microsoft.AspNetCore.Mvc.HttpGet("{pluginName}/{binaryDataId}")]
    public ActionResult<ConversionDescription> GetDescription(string pluginName, Guid binaryDataId)
    {
        // Implementation omitted in the article; ConversionDescription is our DTO
        throw new NotImplementedException();
    }
}
```

By default, NSwag cannot generate a correct specification for the application/octet-stream MIME type, which is used, for example, when files are transferred. To fix this, you need to write your own attribute and a processor that builds the specification:

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;

[Microsoft.AspNetCore.Mvc.Route("[controller]")]
[Microsoft.AspNetCore.Mvc.ApiController]
public class FileController : ControllerBase
{
    [NSwag.Annotations.OpenApiOperation("SaveFile")]
    [Microsoft.AspNetCore.Mvc.ProducesResponseType(401)]
    [Microsoft.AspNetCore.Mvc.ProducesResponseType(403)]
    [Microsoft.AspNetCore.Mvc.HttpPost("{pluginName}/{binaryDataId}/{fileName}")]
    // Our custom attribute: NSwag describes the request body using our processor
    [OurNamespace.FileUploadOperation]
    public async Task SaveFile()
    {
        // The file arrives as a raw stream in Request.Body; storing it is not shown in the article
        throw new NotImplementedException();
    }
}
```

Processor for generating specifications for file operations

The idea is to write your own attribute and a processor that handles it. We put the attribute on the controller action, and when NSwag encounters it, NSwag processes the operation with our processor. NSwag provides the OpenApiOperationProcessorAttribute class and the IOperationProcessor interface for this. In our project we created our own implementations:

- FileUploadOperationAttribute: OpenApiOperationProcessorAttribute

- FileUploadOperationProcessor: IOperationProcessor
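Below is a minimal sketch of these two classes. It assumes NSwag 13 (the NSwag.Annotations and NSwag.Generation packages) and OpenAPI 3 output. The article does not show its own implementation, so this is an illustration rather than our actual code. The processor replaces the operation's request body with a single binary body of type application/octet-stream.

```csharp
using NJsonSchema;
using NSwag;
using NSwag.Annotations;
using NSwag.Generation.Processors;
using NSwag.Generation.Processors.Contexts;

namespace OurNamespace
{
    // Marks an action whose request body is a raw file stream.
    // NSwag creates a FileUploadOperationProcessor when it meets this attribute.
    public sealed class FileUploadOperationAttribute : OpenApiOperationProcessorAttribute
    {
        public FileUploadOperationAttribute()
            : base(typeof(FileUploadOperationProcessor))
        {
        }
    }

    public sealed class FileUploadOperationProcessor : IOperationProcessor
    {
        public bool Process(OperationProcessorContext context)
        {
            // Describe the body as binary data with the application/octet-stream MIME type
            var body = new OpenApiRequestBody();
            body.Content["application/octet-stream"] = new OpenApiMediaType
            {
                Schema = new JsonSchema { Type = JsonObjectType.String, Format = "binary" }
            };
            context.OperationDescription.Operation.RequestBody = body;

            // Returning false would exclude the operation from the specification
            return true;
        }
    }
}
```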

Read more about using processors here.

NSwag configuration for spec and code generation

The configuration has three main sections:

- runtime: specifies the .NET runtime, for example NetCore22;

- documentGenerator: describes how to generate the specification;

- codeGenerators: defines how to generate code from the specification.

NSwag has a lot of settings, which is confusing at first. For convenience, you can use NSwag Studio: it shows in real time how different settings affect the generated code or specification. Then transfer the chosen settings to the configuration file manually. Read more about the configuration settings here.

Generating the specification and client code when building the service provider project

To generate the specification and the code after the service provider project is built, we did the following:

- created a WebApi project for the client;

- wrote a configuration file for the NSwag CLI, nswag.json (described in the previous section);

- wrote a post-build target in the csproj file of the service provider project:

```xml
<Target Name="GenerateWebApiProxyClient" AfterTargets="PostBuildEvent">
  <!-- NSwagExe_Core22 is provided by the NSwag.MSBuild package:
       run the NSwag CLI on .NET Core 2.2 with the nswag.json configuration -->
  <Exec Command="$(NSwagExe_Core22) run nswag.json" />
</Target>
```

- $(NSwagExe_Core22) run nswag.json runs the NSwag utility on the .NET Core 2.2 runtime with the nswag.json configuration.

The target does the following:

- NSwag generates the specification from the service provider assembly;

- NSwag generates client code from the specification.
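A hand-written client builds URLs by typing the path, and that is where typos creep in. Code generated from the specification instead takes the path template, such as Description/{pluginName}/{binaryDataId} from the DescriptionController above, and substitutes the arguments. The sketch below imitates only that step with a hypothetical RouteTemplate helper. It is not NSwag's generated code, which differs in detail.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class RouteTemplate
{
    // Matches placeholders such as {pluginName} in a path template
    private static readonly Regex Placeholder = new Regex(@"\{(\w+)\}");

    // Replaces every {name} with the URL-escaped argument value.
    // A missing argument fails loudly instead of producing a malformed URL.
    public static string Expand(string template, IReadOnlyDictionary<string, string> args) =>
        Placeholder.Replace(template, m =>
            args.TryGetValue(m.Groups[1].Value, out var value)
                ? Uri.EscapeDataString(value)
                : throw new ArgumentException($"No value for route parameter '{m.Groups[1].Value}'"));
}
```

Because the template comes from the specification, a renamed route reaches every client with the next generation run instead of surfacing as a 404 at runtime.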

After each build of the service provider project, the client project is updated. The client project and the service provider project are in the same solution and are built as part of it. The solution is configured so that the client project is built after the service provider project. NSwag also lets you configure specification and code generation imperatively through its programmatic API.

How to add support for JWT

We need to protect our service from unauthorized requests. For this, we use JWT tokens. They must be sent in the headers of every HTTP request so that the service provider can validate them and decide whether to fulfill the request. More information about JWT is available at jwt.io. The task boils down to modifying the headers of outgoing HTTP requests. For this, the NSwag code generator can generate an extension point, the CreateHttpRequestMessageAsync method, which has access to the HTTP request before it is sent.

Code example:

```csharp
// Implemented in our own code next to the generated client (for example, in its base class);
// AuthorizationToken and BearerScheme are members of that class and are not shown here.
protected Task<HttpRequestMessage> CreateHttpRequestMessageAsync(CancellationToken cancellationToken)
{
    var message = new HttpRequestMessage();

    // Attach the JWT as an Authorization header if a token is set
    if (!string.IsNullOrWhiteSpace(this.AuthorizationToken))
    {
        message.Headers.Authorization =
            new System.Net.Http.Headers.AuthenticationHeaderValue(BearerScheme, this.AuthorizationToken);
    }

    return Task.FromResult(message);
}
```
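The generated extension point is not the only place to attach the token. As an alternative, and not what the article uses, the header can be added by a DelegatingHandler on the HttpClient that the generated client typically receives in its constructor. In the sketch below, BearerTokenHandler is a hypothetical handler that reads the token from a delegate. RecordingHandler is a test double that captures the request instead of sending it over the network.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading;
using System.Threading.Tasks;

// Adds "Authorization: Bearer <token>" to every outgoing request that passes through it.
public sealed class BearerTokenHandler : DelegatingHandler
{
    private readonly Func<string> _getToken;

    public BearerTokenHandler(Func<string> getToken, HttpMessageHandler innerHandler)
        : base(innerHandler)
    {
        _getToken = getToken;
    }

    protected override Task<HttpResponseMessage> SendAsync(HttpRequestMessage request, CancellationToken cancellationToken)
    {
        var token = _getToken();
        if (!string.IsNullOrWhiteSpace(token))
        {
            request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", token);
        }
        return base.SendAsync(request, cancellationToken);
    }
}

// Test double: remembers the last request and answers 200 OK without any network I/O.
public sealed class RecordingHandler : HttpMessageHandler
{
    public HttpRequestMessage LastRequest { get; private set; }

    protected override Task<HttpResponseMessage> SendAsync(HttpRequestMessage request, CancellationToken cancellationToken)
    {
        LastRequest = request;
        return Task.FromResult(new HttpResponseMessage(HttpStatusCode.OK));
    }
}
```

The trade-off: a handler applies to every client that shares the HttpClient, while the generated extension point keeps the logic inside one client class.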

Conclusion

We chose OpenAPI because it is easy to implement and the tooling for this specification is mature.

Conclusions on OpenAPI and GRPC.

OpenAPI:

- the specification is verbose.

GRPC:

- no need to design URLs, HTTP verbs, etc.;

- uses HTTP/2.

Thus, we obtained a specification from the existing controller code; for this, we had to put special attributes on the controllers. Then, from this specification, we implemented client code generation. Now we no longer need to update client code manually.

We also researched contract versioning and contract testing, but could not try them in practice due to lack of resources.

Versioning Public Contracts

Why versioning public contracts?

After a service provider changes, the whole system must remain consistent and operational. Breaking changes in the public API must be avoided so as not to break clients.

Solution options

Without versioning public contracts

The service provider team fixes the consumer services itself. This approach does not work if the provider team has no access to the consumer service repositories or lacks the necessary expertise. If there are no such problems, you can do without versioning.


Use versioning of public contracts

The service provider team keeps the previous version of the contracts. This approach does not have the drawbacks of the previous one but adds other difficulties. You need to decide:

- which tool to use;

- when to introduce a new version;

- how long to maintain old versions.

Which tool to use

The table shows the versioning-related features of OpenAPI and GRPC. Deprecated means that an API should no longer be used. Both tools support version and Deprecated attributes. If you use OpenAPI with the code-first approach, you again need to write processors to produce the correct specification.

When to introduce a new version
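Whether a contract change is breaking can often be checked mechanically. The sketch below uses a deliberately simplified, hypothetical contract model instead of a full OpenAPI document. For each operation ID, it keeps the set of required request parameters and the set of response fields. It flags three typical breaking changes: a removed operation, a new required parameter, and a removed response field. Additive changes, such as a new response field, are ignored. A real check would also have to compare types, formats, and enums.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical, simplified view of one operation in a contract version.
public sealed class OperationContract
{
    public HashSet<string> RequiredRequestParameters { get; } = new HashSet<string>();
    public HashSet<string> ResponseFields { get; } = new HashSet<string>();
}

public static class CompatibilityChecker
{
    // Lists reasons why clients written against oldVersion may break on newVersion.
    // Dictionary keys are operation IDs, e.g. "GetDescription".
    public static List<string> FindBreakingChanges(
        IReadOnlyDictionary<string, OperationContract> oldVersion,
        IReadOnlyDictionary<string, OperationContract> newVersion)
    {
        var problems = new List<string>();
        foreach (var pair in oldVersion)
        {
            string operationId = pair.Key;
            OperationContract oldOp = pair.Value;

            // Existing clients still call this operation
            if (!newVersion.TryGetValue(operationId, out var newOp))
            {
                problems.Add($"operation '{operationId}' was removed");
                continue;
            }

            // Existing clients do not send parameters that only the new version requires
            foreach (var parameter in newOp.RequiredRequestParameters.Except(oldOp.RequiredRequestParameters))
                problems.Add($"'{operationId}': new required parameter '{parameter}'");

            // Existing clients may read fields that the new version no longer returns
            foreach (var field in oldOp.ResponseFields.Except(newOp.ResponseFields))
                problems.Add($"'{operationId}': response field '{field}' was removed");
        }
        return problems;
    }
}
```

An empty result suggests the change can ship within the current version. Any entry is a signal to introduce a new version.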

A new version must be introduced when changes to the contracts do not preserve backward compatibility. How can you verify whether changes break compatibility between the new and old versions of the contracts?

How long to maintain versions

There is no right answer to this question. To remove support for an old version, you need to know who uses your service. It is bad if a version is removed while someone still uses it, and it is especially difficult if you do not control your customers. So what can be done in this situation?

- notify customers that the old version will no longer be supported. In this case, we may lose revenue from those customers;

- support the entire set of versions. The cost of software support grows;

- ask the business: does the revenue from old customers exceed the cost of supporting older versions of the software? Could it be more profitable to ask customers to upgrade?

The only advice in this situation is to pay more attention to public contracts so that they change less often. If public contracts are used in a closed system, you can use the CDC approach. It lets you find out when customers have stopped using older versions, after which you can drop support for the old version.

Conclusion

Use versioning only if you cannot do without it. If you decide to use versioning, consider version compatibility when designing contracts. Strike a balance between the cost of supporting older versions and the benefits it brings, and decide when you can stop supporting an old version.

Testing Contracts and CDC

This section covers the topic only superficially, since we had no strong reasons to implement this approach.

Consumer driven contracts (CDC)

CDC answers the question of how to ensure that the provider and the consumer use the same contracts. These are a kind of integration tests aimed at checking contracts. The idea is as follows:

- the consumer describes the contract;

- the provider implements this contract on its side;

- the contract is used in the CI process of both the consumer and the provider. If the process fails, someone has stopped complying with the contract.
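The idea can be sketched without any library. The types below (Interaction, ProviderResponse, ContractVerifier) are hypothetical and much simpler than a real pact file. The consumer lists the requests it makes and the response fields it relies on. The provider's CI replays them against its implementation, modeled here as a plain function.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One request the consumer makes and what it expects back.
public sealed class Interaction
{
    public string Method { get; set; }
    public string Path { get; set; }
    public int ExpectedStatus { get; set; }
    public string[] RequiredFields { get; set; } = Array.Empty<string>();
}

// What the provider returned for a request.
public sealed class ProviderResponse
{
    public int Status { get; set; }
    public Dictionary<string, object> Body { get; set; } = new Dictionary<string, object>();
}

public static class ContractVerifier
{
    // Replays each consumer interaction against the provider and collects violations.
    public static List<string> Verify(
        IEnumerable<Interaction> contract,
        Func<string, string, ProviderResponse> provider)
    {
        var violations = new List<string>();
        foreach (var interaction in contract)
        {
            var response = provider(interaction.Method, interaction.Path);
            if (response.Status != interaction.ExpectedStatus)
            {
                violations.Add($"{interaction.Method} {interaction.Path}: expected {interaction.ExpectedStatus}, got {response.Status}");
                continue;
            }
            foreach (var field in interaction.RequiredFields.Where(f => !response.Body.ContainsKey(f)))
                violations.Add($"{interaction.Method} {interaction.Path}: missing field '{field}'");
        }
        return violations;
    }
}
```

In a real provider CI, the function would wrap a call to the running service. A non-empty result fails the build.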

Pact

Pact is a tool that implements this idea:

- the consumer writes tests using the Pact library;

- these tests produce an artifact, the pact file, which contains information about the contracts;

- the provider and the consumer use the pact file to run their tests.

During consumer tests, a provider stub is created; during provider tests, a consumer stub is created. Both stubs use the pact file. Similar stub behavior can be achieved with Swagger Mock Validator: bitbucket.org/atlassian/swagger-mock-validator/src/master.

Useful links about Pact

How CDC can be embedded in CI

- deploy Pact and a Pact Broker yourself;

- buy the ready-made Pact Flow SaaS solution.

Conclusion

Pact helps ensure contract compliance. It shows when changes to contracts violate the expectations of consumer services. The tool fits when the provider adapts to the consumer, which is only possible inside a closed system. If you are building a service for the outside world and do not know who your consumers are, Pact is not for you.