Progress does not stand still, so the reasons for upgrading to the latest versions of MySQL are becoming increasingly significant. Not so long ago, in one of our projects, it was time to upgrade cozy Percona Server 5.7 clusters to version 8. All this happened on the Ubuntu Linux 16.04 platform. How to perform a similar operation with minimal downtime and what problems we encountered during the upgrade - read this article.

Progress does not stand still, so the reasons for upgrading to the latest versions of MySQL are becoming increasingly significant. Not so long ago, in one of our projects, it was time to upgrade cozy Percona Server 5.7 clusters to version 8. All this happened on the Ubuntu Linux 16.04 platform. How to perform a similar operation with minimal downtime and what problems we encountered during the upgrade - read this article.Training

Any update of the database server is most likely connected with the migration of the database: changes in the requirements for limits on system resources and the correction of the database configurations, which must be cleared of outdated directives.Before updating, we will definitely turn to the official documentation:And make an action plan:- Fix configuration files by removing obsolete directives.

- Check compatibility with utilities.

- Update slave databases by installing the package

percona-server-server. - Update the wizard by putting the same package.

We will analyze each item in the plan and see what can go wrong.IMPORTANT! The Galera-based MySQL cluster upgrade procedure has its own subtleties that are not described in the article. You should not use this instruction in this case.Part 1: Checking Configs

In version 8, MySQL was removed query_cache. In fact, it was declared obsolete back in version 5.7, but now it is completely deleted . Accordingly, it is necessary to remove the related directives. And for caching queries, you can now use external tools - for example, ProxySQL .Also outdated pro directives were found in the config innodb_file_format. If in MySQL 5.7 it was possible to select the InnoDB format, then the 8th version already works only with the Barracuda format .Our result is the removal of the following directives:query_cache_type, query_cache_limitand query_cache_size;innodb_file_formatand innodb_file_format_max.

For verification we will use the Docker image of Percona Server. We’ll put the server config in the directory mysql_config_test, and next create the directories for data and logs. Example percona-server configuration test:mkdir -p {mysql_config_test,mysql_data,mysql_logs}

cp -r /etc/mysql/conf.d/* mysql_config_test/

docker run --name some-percona -v $(pwd)/mysql_config_test:/etc/my.cnf.d/ -v $(pwd)/mysql_data/:/var/lib/mysql/ -v $(pwd)/mysql_logs/:/var/log/mysql/ -e MYSQL_ROOT_PASSWORD=${MYSQL_PASSWORD} -d percona:8-centos

Result: either in the Docker logs, or in the directory with the logs - depending on your configs - a file will appear in which the problem directives will be described.Here is what we had:2020-04-03T12:44:19.670831Z 0 [Warning] [MY-011068] [Server] The syntax 'expire-logs-days' is deprecated and will be removed in a future release. Please use binlog_expire_logs_seconds instead.

2020-04-03T12:44:19.671678Z 0 [Warning] [MY-013242] [Server] --character-set-server: 'utf8' is currently an alias for the character set UTF8MB3, but will be an alias for UTF8MB4 in a future release. Please consider using UTF8MB4 in order to be unambiguous.

2020-04-03T12:44:19.671682Z 0 [Warning] [MY-013244] [Server] --collation-server: 'utf8_general_ci' is a collation of the deprecated character set UTF8MB3. Please consider using UTF8MB4 with an appropriate collation instead.

Thus, we still needed to deal with encodings and replace the obsolete directive expire-logs-days.Part 2: Verifying running installations

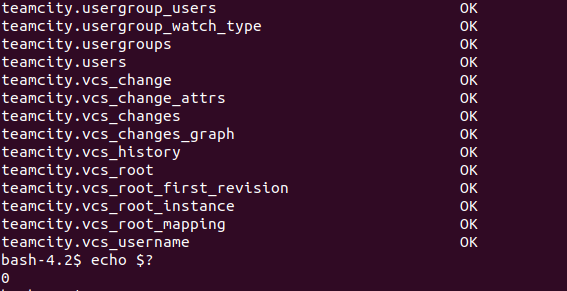

There are 2 utilities in the update documentation for checking the database for compatibility. Their use helps the administrator verify the compatibility of the existing data structure.Let's start with the classic mysqlcheck utility. Simply run:mysqlcheck -u root -p --all-databases --check-upgrade

If no problems are detected, the utility will exit with code 0: In addition, mysql-shell utility is available in modern versions of MySQL (in the case of Percona, this is a package

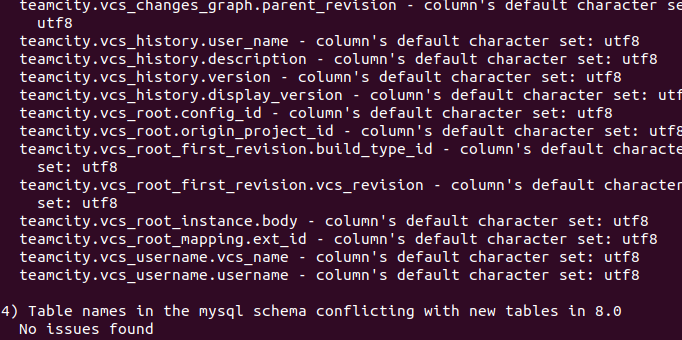

In addition, mysql-shell utility is available in modern versions of MySQL (in the case of Percona, this is a package percona-mysql-shell). It is a replacement for the classic mysql client and combines the functions of the client, the SQL editor and MySQL administration tools. To check the server before updating, you can run the following commands through it:mysqlsh -- util check-for-server-upgrade { --user=root --host=1.1.1.1 --port=3306 } --config-path=/etc/mysql/my.cnf

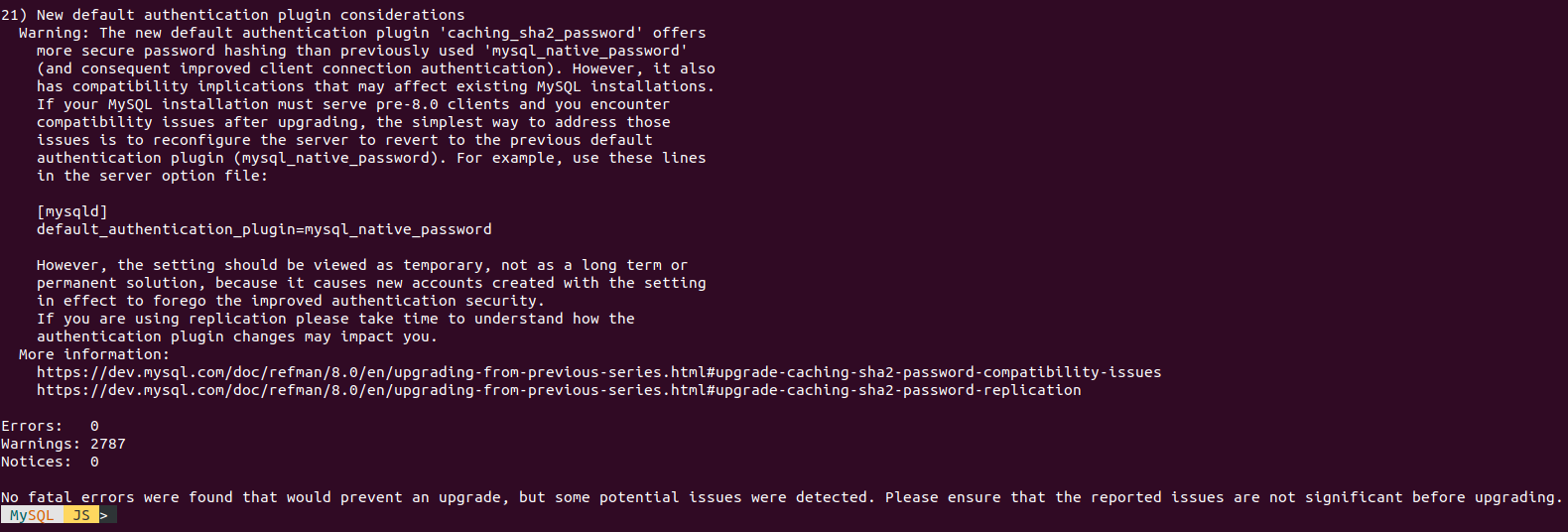

And here are the comments we received: In general, nothing critical - just warnings about encodings (see below) . The overall result of the implementation:

In general, nothing critical - just warnings about encodings (see below) . The overall result of the implementation: We decided that the update should go without problems.A note on the warnings above that indicate encoding problems. The fact is that UTF-8 in MySQL until recently was not a “real” UTF-8 , since it only stored 3 bytes instead of 4. In MySQL 8, they finally decided to fix it : the alias

We decided that the update should go without problems.A note on the warnings above that indicate encoding problems. The fact is that UTF-8 in MySQL until recently was not a “real” UTF-8 , since it only stored 3 bytes instead of 4. In MySQL 8, they finally decided to fix it : the alias utf8will soon lead to encoding utf8mb4, and the old ones the columns in the tables will become utf8mb3. In the future, the encoding utf8mb3will be deleted, but not in this release. Therefore, we decided to fix the encodings already on a working installation of the DBMS, after updating it.Part 3: Server Updates

What can go wrong when there is such a chic plan? .. Well aware that the nuances always happen, we conducted the first experiment on the MySQL dev cluster.As already mentioned, the official documentation highlights the issue of updating MySQL servers with replicas. The bottom line is that at first it’s worth updating all the replicas (slaves), since MySQL 8 can replicate from the version 5.7 wizard. Some difficulty lies in the fact that we use the master <-> master mode when the remote master is in read-only mode . That is, in fact, combat traffic enters one data center, and the second is backup.The topology is as follows: The upgrade should start with mysql replica dc 2 replicas, mysql master dc 2 and

upgrade should start with mysql replica dc 2 replicas, mysql master dc 2 and mysql replica dc 1, and ending up with the mysql master dc 1. server. For better reliability, we stopped the virtual machines, made them snapshots, and stopped the replication with the command just before the update STOP SLAVE. The rest of the update looks like this:- Each replica restart, adding the config option 3:

skip-networking, skip-slave-start, skip-log-bin. The fact is that updating the database generates binary logs with updating system tables. These directives guarantee that there will be no changes to the application data in the database, and information about updating the system tables will not get into the binary logs. This will avoid problems when resuming replication. - Install the package

percona-server-server. It is important to note that in MySQL 8, you do not need to run the command mysqlupgradeafter updating the server. - After a successful start, restart the server again - already without the parameters that were added in the first paragraph.

- We make sure that replication works successfully: we check

SHOW SLAVE STATUSand see that the tables with counters in the application database are updated.

All this looks quite simple: the dev update was successful. Ok, you can safely plan an overnight upgrade for production.There was no sadness - we updated prod

However, porting the successful dev experience to production was not without surprises.Fortunately, the update process itself begins with replicas, therefore, having encountered difficulties, we stopped work and restored the replica from the snapshot. The problem study was rescheduled the next morning. The following entries appeared in the logs:2020-01-14T21:43:21.500563Z 2 [ERROR] [MY-012069] [InnoDB] table: t1 has 19 columns but InnoDB dictionary has 20 columns

2020-01-14T21:43:21.500722Z 2 [ERROR] [MY-010767] [Server] Error in fixing SE data for db1.t1

2020-01-14T21:43:24.208365Z 0 [ERROR] [MY-010022] [Server] Failed to Populate DD tables.

2020-01-14T21:43:24.208658Z 0 [ERROR] [MY-010119] [Server] Aborting

A study of the archives of various mailing lists on Google led to the understanding that such a problem arises due to a MySQL bug . Although rather it is even a utility bug mysqlcheckand mysqlsh.It turns out that MySQL has changed the way data is presented for decimal fields (int, tinyint, etc.), so another way to store them is used inside mysql-server. If your database was originally in version 5.5 or 5.1, and then you upgraded to 5.7, then you may need to produce OPTIMIZEsome tables. Then MySQL will update the data files, transferring them to the current storage format.You can also check this with the utility mysqlfrm:mysqlfrm --diagnostic -vv /var/lib/mysql/db/table.frm

...

'field_length': 8,

'field_type': 246,

'field_type_name': 'decimal',

'flags': 3,

'flags_extra': 67,

'interval_nr': 0,

'name': 'you_deciaml_column',

...

If field_typeyou have 0, then the old type is used in the table - it must be done OPTIMIZE. However, if the value is 246, you already have a new type. More information about the types can be found in the code .Moreover, this bug considers the second possible reason that has bypassed us - the lack of InnoDB tables in the system table INNODB_SYS_TABLESPACES, if they, tables, were created in version 5.1. To avoid problems during the upgrade, you can use the attached SQL script .Why didn’t we have such problems on dev? The base is periodically copied there from production - thus, the tables are recreated .Unfortunately, on a really working large database it will not work just to take and perform the ubiquitous one OPTIMIZE. Percona-toolkit will help here: the pt-online-schema-change utility is excellent for the online OPTIMIZE operation.The updated plan was as follows:- Optimize all tables.

- Perform a database upgrade.

To check it and at the same time find out the update time, we disabled one of the replicas, and for all the tables we ran the following command:pt-online-schema-change --critical-load Threads_running=150 --alter "ENGINE=InnoDB" --execute --chunk-size 100 --quiet --alter-foreign-keys-method auto h=127.0.0.1,u=root,p=${MYSQL_PASSWORD},D=db1,t=t1

The tables are updated without long locks due to the fact that the utility creates a new temporary table into which it copies the data from the main table. At the moment when both tables are identical, the original table is locked and replaced by a new one. In our case, a test run showed that updating all the tables would take about a day, but copying the data caused too much load on the disks.To avoid this, at production we added an argument --sleepwith a value of 10 to the command - this parameter controls the length of the wait after transferring a packet of data to a new table. This way you can reduce the load if a really running application is demanding for response time.After performing the optimization, the update was successful.... but not completely!

Half an hour after the update, the client came up with a problem. The base worked very strange: periodically, connection drops began . Here's what it looked like in the monitoring: The sawtooth graph is visible in the screenshot, due to the fact that part of the threads of the MySQL server periodically fell with an error. Errors appeared in the application:

The sawtooth graph is visible in the screenshot, due to the fact that part of the threads of the MySQL server periodically fell with an error. Errors appeared in the application:[PDOException] SQLSTATE[HY000] [2002] Connection refused

A quick inspection of the logs revealed that the mysqld daemon could not obtain the required resources from the operating system. While dealing with errors, we found in the system “orphan” apparmor policy files :

dpkg-query: no path found matching pattern /etc/apparmor.d/cache/usr.sbin.mysqld

dpkg-query: no path found matching pattern /etc/apparmor.d/local/usr.sbin.mysqld

mysql-server-5.7: /etc/apparmor.d/usr.sbin.mysqld

rc mysql-server-5.7 5.7.23-0ubuntu0.16.04.1 amd64

These files were formed during the upgrade to MySQL 5.7 a couple of years ago and belong to the remote package. Deleting files and restarting the apparmor service solved the problem:systemctl stop apparmor

rm /etc/apparmor.d/cache/usr.sbin.mysqld

rm /etc/apparmor.d/local/usr.sbin.mysqld

rm /etc/apparmor.d/usr.sbin.mysqld

systemctl start apparmor

Finally

Any, even the simplest operation, can lead to unexpected problems. And even having a well-thought-out plan does not always guarantee the expected result. Now, in any update plans, our team also includes the mandatory cleaning of extra files that could appear as a result of recent actions.And with this not-so-professional graphic work, I would like to thank Percona for their great products!

PS

Read also in our blog: