previous chapters

40. Problems of generalization: from the training sample to the validation

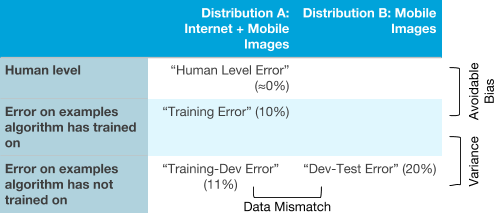

Suppose that you apply ML in conditions where the distribution of training and validation samples is different. For example, a training sample contains images from the Internet + images from a mobile application, and test and validation samples only from a mobile application. However, the algorithm does not work very well: it has a much higher error in the validation and test samples than we would like. Here are some possible reasons:

- The algorithm performs poorly on the test sample and this is due to the problem of high (avoidable) bias in the distribution of the training sample

- The algorithm is of high quality on the training set, but cannot generalize its work to data having a similar distribution with the training set that he had not seen before. This is a case of high scatter.

- The algorithm generalizes its work well to new data from the same distribution as the training sample, but cannot cope (generalize) to the distribution of validation and test samples that are obtained from another distribution. This indicates data inconsistency arising due to the difference in the distribution of the training sample from the distributions of validation and test samples.

For example, suppose the human level of recognition of cats is almost ideal. Your algorithm regarding it shows:

- 1% error in the training sample

- 1.5% error for data taken from the same distribution as the training sample, but which were not shown to the algorithm during training

- 10% error on validation and test samples

. , . , .

, , , . , , : , , « », .

:

- . , ( + ). , ( ).

- : , ( + ). , ; , .

- : , , , . (, )

- : . ( )

, :

5-7 « ».

41. ,

( ≈0%) , , , 0%.

, :

? , . , , .

, :

, . .. . .

. , , , .

:

. , .

, , :

, . Y : , , , , . , .

. ( , ), , . , ( B) , . . , ( A B).

Having determined which types of errors the algorithm is experiencing the most difficulties with, it is possible to more reasonably decide whether to focus on reducing bias, reducing scatter, or whether you need to be puzzled by the fight against data inconsistency.

continuation