When you develop a product, every new iteration carries the risk of lower metrics and lost users. Nevertheless, companies, especially at an early stage, sometimes take this risk without realizing it: they change the product relying only on instinct and hypotheses. At Badoo we don't trust feelings; we believe in numbers. Our services have more than 500 million users in total, and we have been developing our own A/B testing framework for a long time. Over six years, 2962 tests have passed through it, and A/B testing has proven its importance, reliability and effectiveness.

But in this article I will not describe how our system works: one article is not enough for that, and many things are specific to our company and would not suit others. Instead, I will talk about how our ideas about A/B tests evolved: which pitfalls we ran into along the way and how we checked that our tests were correct. This article is for those who have not started testing yet but are thinking about it, and for those who are not sure about their testing system.

What is an A/B test?

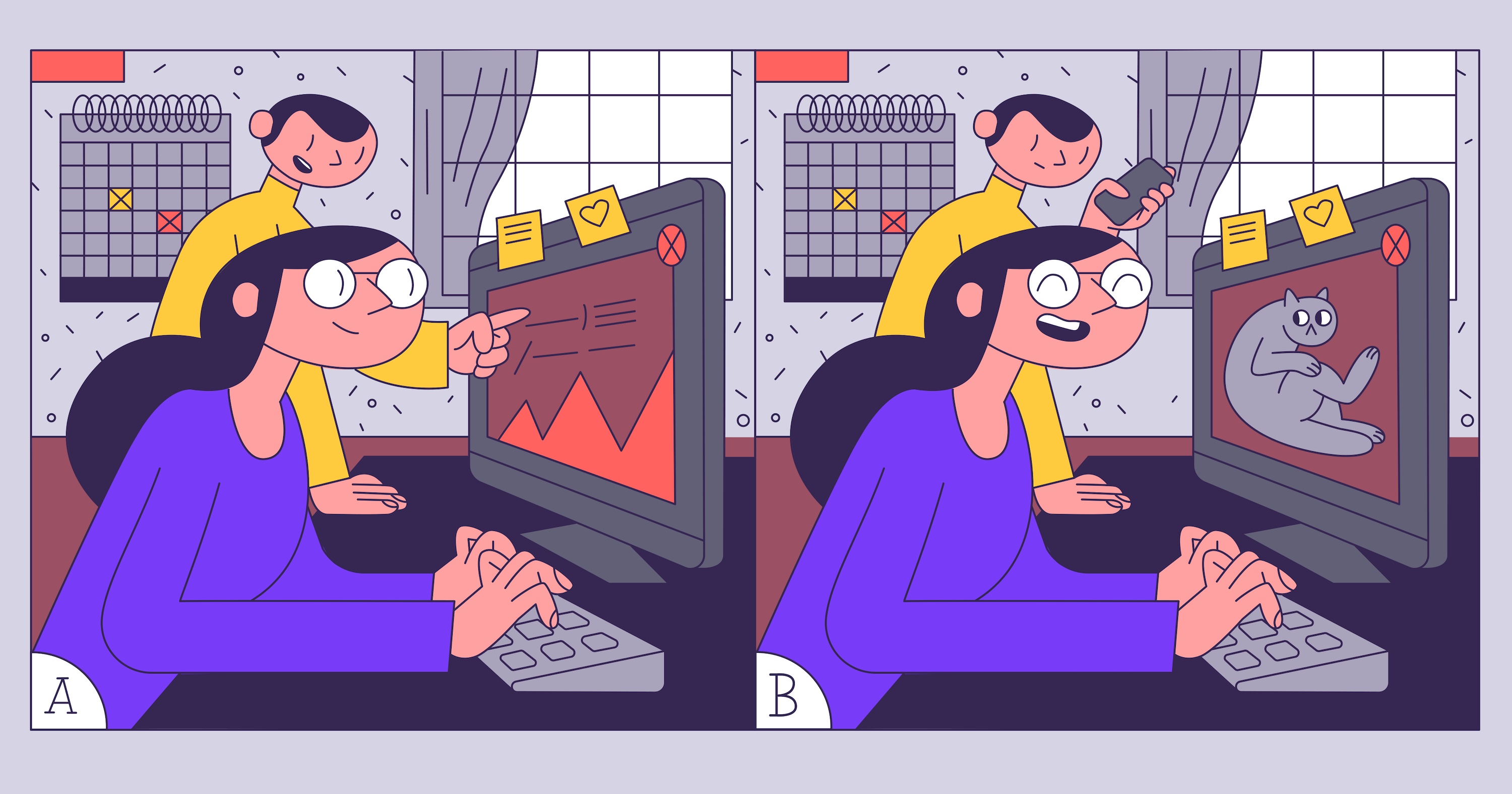

Imagine a situation: a new feature is about to launch, and our product manager is not ready to risk the product's small but stable income. After some deliberation, he suggests launching the feature as an A/B test to see whether it takes off and whether users stay instead of leaving for competitors.

We have never run A/B tests before, so the first thing we do is learn what they are. Wikipedia says: “A/B testing is a marketing research method whose essence is that a control group of elements is compared with a set of test groups in which one or more indicators have been changed, in order to find out which of the changes improve the target indicator.” So we need to run a study with a control group, at least one test group and a target metric.

The test group will see an alternative version of the payment page. On it, we want to change the text, highlight the discount and replace the picture, all for users from Eurasia, so that they buy more. For users from America, we do not want to change anything. We also need target metrics. Let's take the basic ones:

- ARPU (average revenue per user): the revenue we receive, on average, from each user;

- the number of clicks on CTA (call-to-action) elements: buttons and links on the payment page.
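A minimal sketch of how the two target metrics could be computed per group, assuming hypothetical per-user records with `group`, `revenue` and `ctaClicks` fields (an illustration, not Badoo's actual data model). Note that ARPU divides revenue by all users in the group, including those who paid nothing.

```javascript
// Hypothetical per-user records: { group, revenue, ctaClicks }.
// Field names are assumptions made for this illustration.
function summarizeGroups(users) {
  const stats = {};
  for (const u of users) {
    if (!stats[u.group]) {
      stats[u.group] = { users: 0, revenue: 0, ctaClicks: 0 };
    }
    const s = stats[u.group];
    s.users += 1;
    s.revenue += u.revenue;
    s.ctaClicks += u.ctaClicks;
  }
  for (const s of Object.values(stats)) {
    // ARPU: total revenue divided by every user in the group,
    // including users who paid nothing.
    s.arpu = s.revenue / s.users;
  }
  return stats;
}

const example = summarizeGroups([
  { group: "control", revenue: 0, ctaClicks: 1 },
  { group: "control", revenue: 10, ctaClicks: 2 },
  { group: "test", revenue: 5, ctaClicks: 0 },
  { group: "test", revenue: 15, ctaClicks: 3 },
]);
console.log(example.control.arpu, example.test.arpu); // 5 10
```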

Technical task

We plan to change three elements on the page at once: the text, the button and the image with the discount information. If we test them all together, it will be quite hard to tell which change affected the result. Perhaps the new text will put users off and reduce purchases while the highlighted discount increases them, so the net effect is zero. Development effort will be wasted, and a working hypothesis will be rejected. So we won't do that: we'll keep only one change in the test, highlighting the discount.

First test

The plan is this: we split users into two equal groups and wait for the result. When we get it, we compare the metrics in the control and test groups. Everything looks pretty simple: we split users by even and odd IDs and then look at the statistics. We write the code:

```javascript
if (userId % 2 === 1) {
  showNewAwesomeFeature();
}
```

We wait a couple of weeks, and the results are amazing: ARPU has increased by 100%! People click and pay, and the product manager asks us to roll the change out to everyone as quickly as possible. We turn it on, another couple of weeks pass, but there are no results: total profit has not increased. What are we doing wrong?

Selecting the test group

We simply split all users into two equal groups and ran the test, but that is a mistake. The change was shown only to users from Eurasia, and we have far fewer of them than users from America. As a result, a sudden surge of activity among American users, who saw no change at all, skewed the results of our test and made the new version look better than it really was.

Conclusion: the test groups should include only the users to whom the change actually applies.

Let's fix our code:

```javascript
if (user.continent === 'eurasia' && userId % 2 === 1) {
  showNewAwesomeFeature();
}
```
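A slightly fuller sketch of the same idea, assuming a hypothetical user object with `id` and `continent` fields: eligibility is checked before assignment, and users outside the target audience get no group at all, so they never enter the comparison. The helper names are illustrative, not part of any real framework.

```javascript
// Hypothetical user shape: { id: number, continent: string }.
// Only users on the targeted continent take part in the experiment.
const TARGET_CONTINENT = "eurasia";

// Returns "test" or "control" for eligible users and null for everyone else.
function assignGroup(user) {
  if (user.continent !== TARGET_CONTINENT) {
    return null; // outside the experiment: old page, excluded from the comparison
  }
  return user.id % 2 === 1 ? "test" : "control";
}

// Splits users into the two groups and drops ineligible users,
// so metrics are computed only for people the change could affect.
function splitUsers(users) {
  const groups = { test: [], control: [] };
  for (const user of users) {
    const group = assignGroup(user);
    if (group !== null) groups[group].push(user);
  }
  return groups;
}

const groups = splitUsers([
  { id: 1, continent: "eurasia" },
  { id: 2, continent: "eurasia" },
  { id: 3, continent: "america" },
]);
console.log(groups.test.length, groups.control.length); // 1 1
```

On the page, the check then becomes `if (assignGroup(user) === "test") showNewAwesomeFeature();`, and the same function decides who is counted in the statistics.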

Now everything should work as intended! We run the test and wait a couple of weeks. Success: ARPU is up 80%! We roll the change out to all users. And... after the same period of time, the graphs again do not look the way we planned.

Calculating statistical significance

A test cannot simply be stopped “after a couple of weeks”: the results may be inaccurate. The smaller the change in the metric we are tracking and the fewer people take part in the test, the more likely it is that the observed difference is due to chance. This can be quantified with a value called the p-value: the probability of seeing a difference at least as large as the observed one if the change actually had no effect. Here is how it works.

Any test has a chance of producing random results. For example, the users who visit the site may be distributed unevenly, and the entire paying audience may end up in one of the groups. In real tests, the difference in metrics is usually not that large: it is hard or even impossible to spot a problem on the graphs, so statistical tests are indispensable. In particular, we need to fix in advance the probabilities of type I and type II errors, in other words, the probability of finding a change where there is none and, conversely, of missing a change that really exists. Depending on the value of the metric and the chosen error probabilities, we will need a different number of users.

You can calculate this number with an online calculator: enter the current and target values of the tracked metric to get the number of users required for the test, or vice versa.

Calculation for a 10% conversion rate and a 0.2% change relative to the current value.

Now we have all the data we need and know when to stop the test. There are no more obstacles. Let's run our A/B test and look at the results.
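A minimal sketch of what such a calculator computes, using the standard sample-size formula for comparing two conversion rates with a two-sided two-proportion z-test. The function name and the defaults (5% type I error rate, 80% power) are assumptions for illustration; online calculators may use slightly different formulas and give somewhat different numbers.

```javascript
// Standard normal quantiles for common defaults (assumed here):
// z(0.975) ≈ 1.959964 for a two-sided 5% type I error rate,
// z(0.80)  ≈ 0.841621 for 80% power (a 20% type II error rate).
const Z_ALPHA = 1.959964;
const Z_BETA = 0.841621;

// Users needed in EACH group to detect a change in a conversion rate from
// p1 (control) to p2 (test) with a two-sided two-proportion z-test:
// n = (zA * sqrt(2 * pBar * (1 - pBar)) + zB * sqrt(p1(1-p1) + p2(1-p2)))^2 / (p1 - p2)^2
function sampleSizePerGroup(p1, p2, zAlpha = Z_ALPHA, zBeta = Z_BETA) {
  if (p1 === p2) throw new RangeError("p1 and p2 must differ");
  const pBar = (p1 + p2) / 2; // average of the two rates
  const a = zAlpha * Math.sqrt(2 * pBar * (1 - pBar));
  const b = zBeta * Math.sqrt(p1 * (1 - p1) + p2 * (1 - p2));
  return Math.ceil((a + b) ** 2 / (p1 - p2) ** 2);
}

// Example: a 10% conversion rate and a hoped-for rise to 11%.
console.log(sampleSizePerGroup(0.10, 0.11));
```

Halving the detectable difference roughly quadruples the required sample size, which is why a very small relative change on a 10% conversion rate needs a very large audience.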

This time the results look more realistic, but they are still excellent: ARPU has grown by 55%. We stop the test and roll the test version out to everyone. And... the metrics fall again. Why?
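To judge whether a result like this could be a fluke, you compute a p-value. Below is a minimal sketch for a conversion-type metric, assuming a pooled two-proportion z-test and the Abramowitz and Stegun approximation (formula 7.1.26) of the error function, so no statistics library is needed; the function names are hypothetical. ARPU itself is a continuous metric, so it would need a different test, for example Welch's t-test or a bootstrap.

```javascript
// Abramowitz and Stegun 7.1.26 approximation of erf (absolute error < 1.5e-7).
function erf(x) {
  const sign = x < 0 ? -1 : 1;
  const ax = Math.abs(x);
  const t = 1 / (1 + 0.3275911 * ax);
  const poly = t * (0.254829592 + t * (-0.284496736 + t * (1.421413741 +
    t * (-1.453152027 + t * 1.061405429))));
  return sign * (1 - poly * Math.exp(-ax * ax));
}

// Cumulative distribution function of the standard normal distribution.
function normalCdf(z) {
  return 0.5 * (1 + erf(z / Math.SQRT2));
}

// Two-sided p-value of a pooled two-proportion z-test:
// conversionsA out of usersA (control) vs conversionsB out of usersB (test).
function twoProportionPValue(conversionsA, usersA, conversionsB, usersB) {
  const pA = conversionsA / usersA;
  const pB = conversionsB / usersB;
  const pooled = (conversionsA + conversionsB) / (usersA + usersB);
  const se = Math.sqrt(pooled * (1 - pooled) * (1 / usersA + 1 / usersB));
  if (se === 0) return 1; // no variation at all: no evidence of a difference
  const z = (pB - pA) / se;
  return 2 * (1 - normalCdf(Math.abs(z)));
}

// 10.0% vs 10.6% conversion on 10,000 users per group.
const pValue = twoProportionPValue(1000, 10000, 1060, 10000);
console.log(pValue); // ≈ 0.16: not significant at the 5% level
```

A 6% relative lift looks attractive on a chart, yet with this many users it is well within what chance alone can produce.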

Checking the number of users

Let's figure out how many users actually visited our site while the test was running. Judging by the logs, only 10% of the users we had counted in our test groups did, and we did not take this into account. If your DAU (the number of unique users per day) is 1,000, it does not mean that after ten days you will have 10,000 users in the test: many of the daily visitors are the same people coming back. Always log real visits to the test (test hits) and count only them.

We implement simple logic: for each user who visits the site, we send a request to the server with the name of the A/B test and the user's group. This way we will know exactly how many users have visited us, and we won't make that mistake again.

We launch the A/B test. The results are excellent, and we are earning more money again! We turn the test on for everyone, and something goes wrong again: after a couple of weeks, the metrics are again lower than predicted.

Remember that we started sending hits for all users who visited the site? Never do that. Hits should be sent only for users who actually interacted with the tested part of the product, and the more precisely you identify them, the better. Fortunately, this is easy to verify with an A/A/B test. It looks like an A/B test, but with two control groups and one test group. Why is this needed? If the moment at which the hit is sent was chosen incorrectly, users who never interacted with the tested part of the site will end up in the test. In that case, the metrics of the two A groups will differ noticeably, and you will immediately see that something is wrong.

We launch the A/A/B test. We put 50% of users into group B and split the remaining 50% equally between the two A groups (we call them control and control_check). Yes, you will have to wait longer for results, but whether the results of the two A groups converge tells you whether hits are being sent correctly.

The results are modest (growth of only 20%) but realistic. We roll the change out to everyone. Everything works perfectly!
But after a month, the metrics fell again.
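The test-hit logging and the A/A/B split described above can be sketched as follows, assuming integer user IDs and a hypothetical `TestHitLog` helper (not Badoo's framework). Users are split 50/25/25 into test, control and control_check, and a hit is recorded at most once per user, so the count reflects unique users rather than DAU multiplied by days. The caller is responsible for calling `recordHit` only when the user actually reaches the tested part of the page.

```javascript
// Hypothetical A/A/B split: 50% test, 25% control, 25% control_check.
// A real system would usually hash (test name + user ID) instead of using
// userId % 4, so that different tests get independent splits.
function assignAabGroup(userId) {
  const bucket = userId % 4;
  if (bucket < 2) return "test";
  return bucket === 2 ? "control" : "control_check";
}

// Records a test hit at most once per user. Call it only at the moment the
// user actually interacts with the tested element, not on every page view.
class TestHitLog {
  constructor(testName) {
    this.testName = testName;
    this.seen = new Map(); // userId -> group
  }

  recordHit(userId) {
    if (!this.seen.has(userId)) {
      this.seen.set(userId, assignAabGroup(userId));
      // In production, this is where a request with this.testName and the
      // group would be sent to the server.
    }
    return this.seen.get(userId);
  }

  uniqueUsersPerGroup() {
    const counts = { test: 0, control: 0, control_check: 0 };
    for (const group of this.seen.values()) counts[group] += 1;
    return counts;
  }
}

const log = new TestHitLog("payment_page_discount");
// The same four users come back on ten different days; each is counted once.
for (let day = 0; day < 10; day++) {
  for (const userId of [1, 2, 3, 4]) log.recordHit(userId);
}
console.log(log.uniqueUsersPerGroup()); // { test: 2, control: 1, control_check: 1 }
```

Comparing the metrics of `control` and `control_check` (for example with a significance test) then shows whether hits are being sent at the right moment.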

Testing what we can control

It turned out that our third-party billing provider was running its own A/B test at the same time, which directly affected our results. So always monitor the results in production and try to test only what you fully control.

Summary

A/B tests can help a product grow. But to trust them, you need to run them correctly and always check the results. This approach lets you test your product properly and check hypotheses before they drag all your metrics down.

- Always check the target group.

- Send test hits.

- Send the right hits: only for users who interacted with the tested feature.

- Test only what you fully control.

- Run A/B tests!