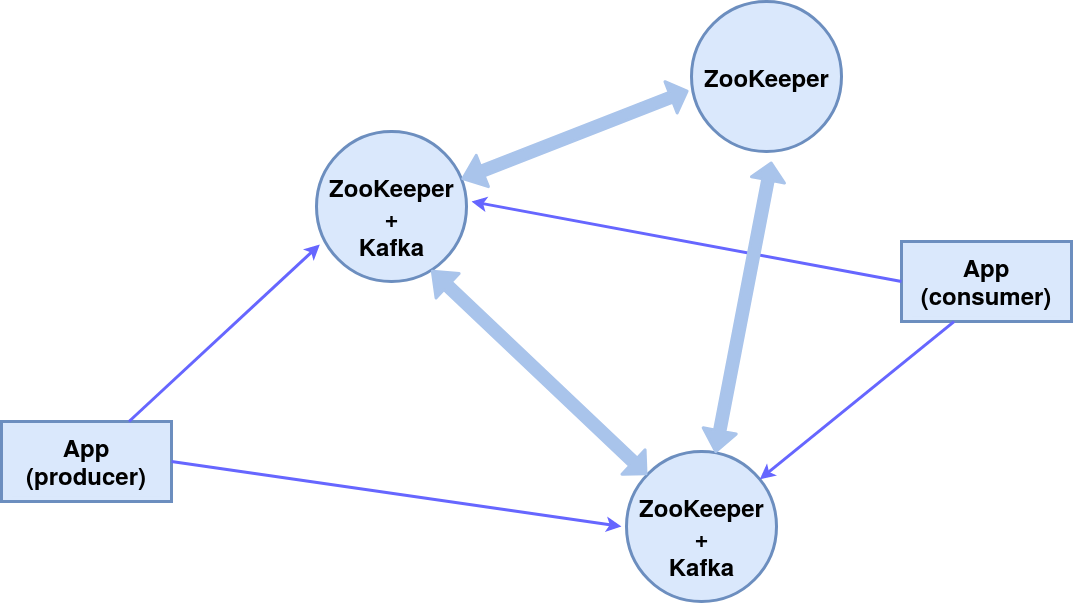

Good day! In this article, we'll look at setting up a cluster of three ZooKeeper nodes (ZooKeeper is a coordination service for distributed systems); two of them also run Kafka message brokers, and the third is the manager, running ZooKeeper only. As a result, we will implement the component scheme shown in the figure. All components run on one machine, and for simplicity we use the built-in console publisher (producer) and subscriber (consumer). The ZooKeeper module is built into the Kafka package, so we use it rather than a separate installation.

Installation and setup

Installing the Kafka package

In this case, the operating system is Ubuntu 16.04 LTS. Current versions and detailed instructions are available on the official website.

wget http://mirror.linux-ia64.org/apache/kafka/2.4.1/kafka_2.12-2.4.1.tgz

tar -xvzf kafka_2.12-2.4.1.tgz

Create working directories for the three nodes, server_1, server_2 and server_3:

mv kafka_2.12-2.4.1 kafka_server_1

cp -r kafka_server_1/ kafka_server_2/

cp -r kafka_server_1/ kafka_server_3/

Configuring nodes

Configuring a Kafka broker. In each of the directories kafka_server_1, kafka_server_2 and kafka_server_3, open the broker configuration:

vim config/server.properties

and set the following, with one value per node, respectively:

- broker ID, broker.id (0, 1, 2)

- client port in listeners (9092, 9093, 9094)

- ZooKeeper port in zookeeper.connect (2181, 2182, 2183)

- log directory, log.dirs (/tmp/kafka-logs-1, /tmp/kafka-logs-2, /tmp/kafka-logs-3)

For kafka_server_1 this gives:

broker.id=0

listeners=PLAINTEXT://:9092

zookeeper.connect=localhost:2181

log.dirs=/tmp/kafka-logs-1
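Editing three nearly identical files by hand is easy to get wrong. The sketch below applies the four per-node values from the list to each copy of config/server.properties. It assumes it is run from the directory that contains kafka_server_1..3 and uses GNU sed (as on Ubuntu); set_prop is a helper made up for this example, not a Kafka tool.

```shell
#!/usr/bin/env bash
# Sketch: apply the per-node broker settings to kafka_server_{1,2,3}/config/server.properties.
# Assumption: run from the directory that contains the kafka_server_* copies.

# set_prop FILE KEY VALUE (hypothetical helper): replace an existing "KEY=..." line,
# or append one if the key is absent. Commented lines such as "#listeners=" are left alone.
set_prop() {
  local file=$1 key=$2 value=$3
  if grep -q "^${key}=" "$file"; then
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

for i in 1 2 3; do
  cfg="kafka_server_${i}/config/server.properties"
  [ -f "$cfg" ] || continue                                   # node not created yet
  set_prop "$cfg" broker.id "$((i - 1))"                      # 0, 1, 2
  set_prop "$cfg" listeners "PLAINTEXT://:$((9091 + i))"      # 9092, 9093, 9094
  set_prop "$cfg" zookeeper.connect "localhost:$((2180 + i))" # 2181, 2182, 2183
  set_prop "$cfg" log.dirs "/tmp/kafka-logs-${i}"
done
```

Running it twice is harmless: existing keys are rewritten in place rather than duplicated.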

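Configuring a ZooKeeper node. In each directory, open the ZooKeeper configuration:

vim config/zookeeper.properties

and set the following, again with one value per node where they differ: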

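- data directory, dataDir (/tmp/zookeeper_1, /tmp/zookeeper_2, /tmp/zookeeper_3)

- client port, clientPort (2181, 2182, 2183)

- maximum number of client connections (maxClientCnxns) and timing limits (tickTime is the basic time unit in milliseconds; initLimit and syncLimit are measured in ticks)

- ports for communication between nodes, in the form server.N=host:peerPort:electionPort (this is how each node learns about the others, so these lines are the same on all three nodes)

For kafka_server_1 this gives: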

dataDir=/tmp/zookeeper_1

clientPort=2181

maxClientCnxns=60

initLimit=10

syncLimit=5

tickTime=2000

server.1=localhost:2888:3888

server.2=localhost:2889:3889

server.3=localhost:2890:3890
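Only dataDir and clientPort differ between the three ZooKeeper configs, so they can be generated. A minimal sketch, assuming the same working directory as above; write_zk_config is a hypothetical helper, and it overwrites the whole zookeeper.properties with exactly the settings listed above.

```shell
#!/usr/bin/env bash
# Sketch: write config/zookeeper.properties for ZooKeeper node ID (1, 2 or 3).
# Only dataDir and clientPort depend on the node; the server.N lines are shared.

# write_zk_config ID FILE (hypothetical helper): overwrite FILE with the node's config.
write_zk_config() {
  local id=$1 file=$2
  cat > "$file" <<EOF
dataDir=/tmp/zookeeper_${id}
clientPort=$((2180 + id))
maxClientCnxns=60
initLimit=10
syncLimit=5
tickTime=2000
server.1=localhost:2888:3888
server.2=localhost:2889:3889
server.3=localhost:2890:3890
EOF
}

for i in 1 2 3; do
  dir="kafka_server_${i}/config"
  [ -d "$dir" ] || continue          # node not created yet
  write_zk_config "$i" "$dir/zookeeper.properties"
done
```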

Create the data directories for the ZooKeeper nodes and write each node's ID to its myid file (the ID must match the N of that node's server.N line):

mkdir -p /tmp/zookeeper_{1..3}

echo "1" > /tmp/zookeeper_1/myid

echo "2" > /tmp/zookeeper_2/myid

echo "3" > /tmp/zookeeper_3/myid

If you run into permission problems, change the access rights on the directories and files:

sudo chmod 777 /tmp/zookeeper_1
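When ZooKeeper runs as an ensemble, a node whose dataDir lacks a myid file will not start, and a wrong ID is easy to miss. The hypothetical check_myid helper below reads dataDir from a node's zookeeper.properties, reads the ID from myid, and confirms that a matching server.N line exists. It assumes the kafka_server_* layout used throughout; a myid file that ended up containing the ID twice (for example after appending to it on a rerun) is reported as a mismatch.

```shell
#!/usr/bin/env bash
# Sketch: verify each ZooKeeper node's myid against its config.
# Assumption: run from the directory that contains the kafka_server_* copies.

# check_myid CONFIG (hypothetical helper): print the node ID on success;
# explain the problem on stderr and return 1 otherwise.
check_myid() {
  local cfg=$1 data_dir id
  data_dir=$(sed -n 's/^dataDir=//p' "$cfg")
  if [ -z "$data_dir" ] || [ ! -f "$data_dir/myid" ]; then
    echo "$cfg: no myid file in dataDir '${data_dir}'" >&2
    return 1
  fi
  id=$(tr -d '[:space:]' < "$data_dir/myid")   # "1\n1" collapses to "11" and fails below
  if ! grep -q "^server\.${id}=" "$cfg"; then
    echo "$cfg: myid is '${id}' but there is no server.${id} line" >&2
    return 1
  fi
  echo "$id"
}

for i in 1 2 3; do
  cfg="kafka_server_${i}/config/zookeeper.properties"
  [ -f "$cfg" ] || continue
  check_myid "$cfg" || echo "node ${i}: fix myid before starting ZooKeeper" >&2
done
```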

Running ZooKeeper nodes and brokers

For the first two nodes (kafka_server_1/, kafka_server_2/), run the ZooKeeper and Kafka server scripts from the node's directory, passing the corresponding config files as arguments. The scripts run in the foreground, so use a separate terminal for each one:

sudo bin/zookeeper-server-start.sh config/zookeeper.properties

sudo bin/kafka-server-start.sh config/server.properties

For the third node (kafka_server_3/), start only ZooKeeper.

Scripts for stopping the servers:

sudo bin/kafka-server-stop.sh

sudo bin/zookeeper-server-stop.sh
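Instead of one terminal per script, both start scripts accept a -daemon flag that runs the process in the background (its console output then goes to the node's logs/ directory). The sketch below starts ZooKeeper on all three nodes and then the two brokers. DRY_RUN, ZK_WAIT and KAFKA_WAIT are made-up variables for this example, and the 10-second pauses are rough guesses rather than tuned values; prefix the calls with sudo if your setup needs it. As far as we can tell, the stock stop scripts find their targets by Java class name, so a single kafka-server-stop.sh call stops every broker on the machine (and likewise for ZooKeeper).

```shell
#!/usr/bin/env bash
# Sketch: start/stop the whole cluster in the background.
# Assumption: run from the directory holding kafka_server_1..3.
# DRY_RUN, ZK_WAIT and KAFKA_WAIT are hypothetical variables for this sketch.

# run CMD...: execute the command, or only print it when DRY_RUN is non-empty.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

start_cluster() {
  local i
  # ZooKeeper on all three nodes first, so the ensemble can form a quorum.
  for i in 1 2 3; do
    run "kafka_server_${i}/bin/zookeeper-server-start.sh" -daemon \
      "kafka_server_${i}/config/zookeeper.properties"
  done
  run sleep "${ZK_WAIT:-10}"   # rough pause for leader election
  # Then the two brokers (node 3 runs ZooKeeper only).
  for i in 1 2; do
    run "kafka_server_${i}/bin/kafka-server-start.sh" -daemon \
      "kafka_server_${i}/config/server.properties"
  done
}

stop_cluster() {
  # One call per script is assumed to stop all brokers, then all ZooKeeper nodes.
  run kafka_server_1/bin/kafka-server-stop.sh
  run sleep "${KAFKA_WAIT:-10}"   # let the brokers shut down before ZooKeeper goes
  run kafka_server_1/bin/zookeeper-server-stop.sh
}
```

DRY_RUN=1 start_cluster prints the six commands without running them; start_cluster and stop_cluster run them for real.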

Creating a topic, console producer and consumer

A topic is a stream of messages of a certain type, divided into partitions (their number is set with the --partitions option). Each partition is replicated on two servers (--replication-factor). After the --bootstrap-server option, list the Kafka broker addresses, separated by commas. The --topic option sets the topic name.

sudo bin/kafka-topics.sh --create --bootstrap-server localhost:9092,localhost:9093 --replication-factor 2 --partitions 2 --topic TestTopic
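A quick count shows why this topic layout keeps all data when one broker is lost. Kafka places the replicas of a partition on distinct brokers, so the replication factor $RF$ cannot exceed the number of brokers $B$. With $P$ partitions:

$$
P \cdot RF = 2 \cdot 2 = 4 \ \text{replicas}, \qquad RF = 2 \le B = 2
$$

Each partition's two replicas must sit on different brokers and there are only two brokers, so every broker holds a copy of both partitions, and either one alone still has the whole topic.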

To list the topics and show information about a specific topic (here by querying ZooKeeper on port 2181), use:

sudo bin/kafka-topics.sh --list --zookeeper localhost:2181

sudo bin/kafka-topics.sh --zookeeper localhost:2181 --describe --topic TestTopic

In the --describe output, Leader is the server holding the main copy of a partition, Replicas are the servers the partition is copied to, and Isr (in-sync replicas) are the replicas that are fully caught up with the leader; if the leader fails, a new leader is chosen from them.

Create the console producer and console consumer with the corresponding scripts, each in its own terminal; lines typed into the producer appear in the consumer:

sudo bin/kafka-console-producer.sh --broker-list localhost:9092,localhost:9093 --topic TestTopic

sudo bin/kafka-console-consumer.sh --bootstrap-server localhost:9092,localhost:9093 --from-beginning --topic TestTopic
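For a non-interactive check, the console producer also reads from a pipe, and the console consumer's --timeout-ms option makes it exit once no new message arrives for the given time. The sketch below sends two uniquely tagged lines and counts how many come back. KAFKA_BIN and BROKERS are assumptions to adjust to your layout, and smoke_test is a hypothetical helper; message order is not checked because the topic has two partitions.

```shell
#!/usr/bin/env bash
# Sketch: round-trip check through the console producer and consumer.
# KAFKA_BIN and BROKERS are assumed defaults; override them as needed.
KAFKA_BIN=${KAFKA_BIN:-kafka_server_1/bin}
BROKERS=${BROKERS:-localhost:9092,localhost:9093}

# smoke_test TOPIC (hypothetical helper): succeed if both test lines are consumed.
smoke_test() {
  local topic=$1 tag received
  tag="smoke-$$-$(date +%s)"   # unique per run, so older messages are not counted
  printf '%s-a\n%s-b\n' "$tag" "$tag" |
    "$KAFKA_BIN/kafka-console-producer.sh" --broker-list "$BROKERS" --topic "$topic" >/dev/null
  # Read from the beginning; exit after 15 s without new messages.
  received=$("$KAFKA_BIN/kafka-console-consumer.sh" --bootstrap-server "$BROKERS" \
      --topic "$topic" --from-beginning --timeout-ms 15000 2>/dev/null |
    grep -c "^${tag}-" || true)
  [ "$received" -eq 2 ]
}
```

Usage: smoke_test TestTopic && echo "round trip OK".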

Because each partition is replicated on both brokers, messages keep flowing to the consumer if one broker fails: the second broker takes over.

Services for zookeeper and kafka in systemctl

For convenience when starting the cluster, you can create systemd services: zookeeper_1.service, zookeeper_2.service, zookeeper_3.service, kafka_1.service and kafka_2.service. Edit the file /etc/systemd/system/zookeeper_1.service (replace the directory /home/user and the user name user with your own):

[Unit]

Requires=network.target remote-fs.target

After=network.target remote-fs.target

[Service]

Type=simple

User=user

ExecStart=/home/user/kafka_server_1/bin/zookeeper-server-start.sh /home/user/kafka_server_1/config/zookeeper.properties

ExecStop=/home/user/kafka_server_1/bin/zookeeper-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target
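The three ZooKeeper units differ only in the node number, so they can be generated from one template. A sketch using the same placeholders as the text (/home/user and user); zk_unit and install_zk_units are hypothetical helpers, and install_zk_units writes to /etc/systemd/system through sudo tee.

```shell
#!/usr/bin/env bash
# Sketch: generate zookeeper_1..3.service from one template.
# BASE (directory holding kafka_server_1..3) and the user are placeholders.

# zk_unit ID BASE USER (hypothetical helper): print the unit for zookeeper_<ID>.service.
zk_unit() {
  local id=$1 base=$2 user=$3
  local node_dir="${base}/kafka_server_${id}"
  cat <<EOF
[Unit]
Requires=network.target remote-fs.target
After=network.target remote-fs.target

[Service]
Type=simple
User=${user}
ExecStart=${node_dir}/bin/zookeeper-server-start.sh ${node_dir}/config/zookeeper.properties
ExecStop=${node_dir}/bin/zookeeper-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target
EOF
}

# install_zk_units [BASE] [USER]: write all three units (needs sudo) and reload systemd.
install_zk_units() {
  local base=${1:-/home/user} user=${2:-user} i
  for i in 1 2 3; do
    zk_unit "$i" "$base" "$user" |
      sudo tee "/etc/systemd/system/zookeeper_${i}.service" >/dev/null
  done
  sudo systemctl daemon-reload
}
```

For example, install_zk_units /home/user user, then enable and start the units as shown below.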

zookeeper_2.service and zookeeper_3.service are similar.

File /etc/systemd/system/kafka_1.service:

[Unit]

Requires=zookeeper_1.service

After=zookeeper_1.service

[Service]

Type=simple

User=user

ExecStart=/bin/sh -c '/home/user/kafka_server_1/bin/kafka-server-start.sh /home/user/kafka_server_1/config/server.properties > /tmp/kafka_server_1.log 2>&1'

ExecStop=/home/user/kafka_server_1/bin/kafka-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target
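The same approach works for the brokers. In this sketch each kafka_<ID>.service requires and starts after the matching zookeeper_<ID>.service, since the broker in kafka_server_N connects to ZooKeeper node N through zookeeper.connect, and the broker's console output goes to a plain file rather than to the log.dirs directory. The file name /tmp/kafka_server_<ID>.log is chosen for this example, and kafka_unit and install_kafka_units are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: generate kafka_1.service and kafka_2.service from one template.
# BASE, the user and the log file name are placeholders/assumptions.

# kafka_unit ID BASE USER (hypothetical helper): print the unit for kafka_<ID>.service.
kafka_unit() {
  local id=$1 base=$2 user=$3
  local node_dir="${base}/kafka_server_${id}"
  cat <<EOF
[Unit]
Requires=zookeeper_${id}.service
After=zookeeper_${id}.service

[Service]
Type=simple
User=${user}
ExecStart=/bin/sh -c '${node_dir}/bin/kafka-server-start.sh ${node_dir}/config/server.properties > /tmp/kafka_server_${id}.log 2>&1'
ExecStop=${node_dir}/bin/kafka-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target
EOF
}

# install_kafka_units [BASE] [USER]: write both broker units (needs sudo) and reload systemd.
install_kafka_units() {
  local base=${1:-/home/user} user=${2:-user} i
  for i in 1 2; do
    kafka_unit "$i" "$base" "$user" |
      sudo tee "/etc/systemd/system/kafka_${i}.service" >/dev/null
  done
  sudo systemctl daemon-reload
}
```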

kafka_2.service is similar.

Activation and verification of services

sudo systemctl daemon-reload

sudo systemctl enable zookeeper_1.service

sudo systemctl start zookeeper_1.service

sudo journalctl -u zookeeper_1.service

sudo systemctl stop zookeeper_1.service

The same goes for zookeeper_2.service, zookeeper_3.service, kafka_1.service and kafka_2.service.

Thank you for your attention!