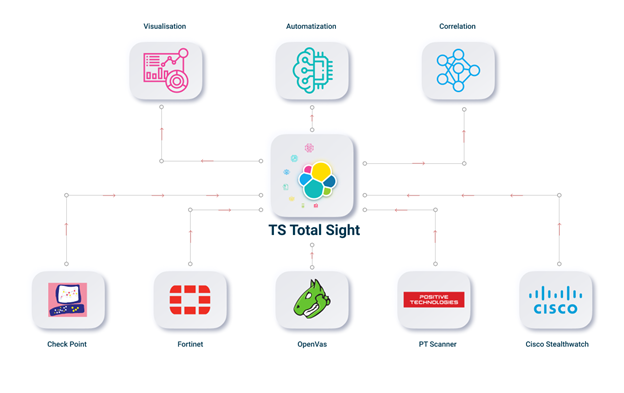

Good afternoon. In previous articles we got acquainted with how the ELK Stack works. Now let's discuss what an information security specialist can achieve with these systems: which logs can and should be sent to Elasticsearch, what statistics can be obtained by setting up dashboards and whether there is any benefit in this, and how information security processes can be automated with the ELK stack. We will also put together the architecture of the system. Taken together, implementing all of this functionality is a large and difficult task, so the solution was given a separate name: TS Total Sight.

Solutions that consolidate and analyze information security incidents in one logical place are currently gaining popularity. As a result, a specialist receives statistics and a list of actions for improving the security posture of the organization. We set ourselves this task with the ELK stack and ended up dividing the main functionality into 4 sections:

- Statistics and visualization;

- IS incident detection;

- Prioritization of incidents;

- Automation of information security processes.

Next, let's look at each of them in more detail.

Security Incident Detection

The main purpose of Elasticsearch in our case is to collect only information security incidents. Incidents can be collected from any security tool that supports at least some form of log forwarding; the standard options are syslog or saving to a file via scp.

Typical examples of security tools (and other systems) where log forwarding can be configured:

- Any NGFW (Check Point, Fortinet);

- Any vulnerability scanners (PT Scanner, OpenVas);

- Web Application Firewall (PT AF);

- Netflow analyzers (Flowmon, Cisco StealthWatch);

- AD server.

After setting up log forwarding and the Logstash configuration files, you can correlate and compare incidents coming from various security tools. For this it is convenient to use indexes that each store all incidents related to a specific device. In other words, one index holds all incidents for one device. There are 2 ways to implement such a distribution.

The first option is to do it in the Logstash config. To do this, duplicate the log for certain fields into a separate event with a different type, and then use this type later. In the example, logs from the Check Point IPS blade are cloned.

    filter {
      # Clone only events whose product field marks them as IPS blade logs
      if [product] == "SmartDefense" {
        clone {
          # the clone name is matched later as [type] in the output section
          clones => ["CloneSmartDefense"]
          add_field => {"system" => "checkpoint"}
        }
      }
    }

To save such events in a separate index depending on log fields, for example the destination IP of the attack signature, you can use a similar construction:

    output {
      if [type] == "CloneSmartDefense" {
        elasticsearch {
          hosts => ["<IP_address_elasticsearch>:9200"]
          # one index per value of the dst field
          index => "smartdefense-%{dst}"
          user => "admin"
          password => "password"
        }
      }
    }

In this way you can save all incidents to an index keyed, for example, by the IP address or by the domain name of the machine. In this case we save to the index "smartdefense-%{dst}", that is, by the destination IP address of the signature.

However, different products have different log fields, which leads to chaos and unnecessary memory consumption. So you will have to carefully rename the fields in the Logstash config to a set designed in advance that is the same for all incident types, which is also a difficult task.

The second option is to write a script or process that accesses the Elasticsearch database in real time, pulls out the necessary incidents and saves them to a new index. This is a difficult task, but it lets you work with the logs as you please and correlate them directly with incidents from other security tools. This option gives maximum flexibility in tailoring the work with logs to your case, but the problem here is finding a specialist who can implement it.

And of course the most important question: what can actually be tracked and detected? There are several options, depending on which security tools are used in your infrastructure; NGFW and IPS events are one example.
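The second option, a self-written process that normalizes incidents and stores them in one index per device, can be sketched roughly as follows. This is only an illustration under assumptions: the vendor field names in `FIELD_MAP`, the common field names (`device_ip`, `signature`, `severity`) and the `incidents-` index prefix are hypothetical and not taken from any real product. The sketch only builds the NDJSON body expected by the Elasticsearch `_bulk` API; fetching the source events and sending the request are left out.

```python
import json

# Hypothetical mapping from product-specific field names to a common schema.
# These vendor field names are illustrative assumptions, not real log formats.
FIELD_MAP = {
    "checkpoint": {"dst": "device_ip", "attack": "signature", "severity": "severity"},
    "scanner": {"host": "device_ip", "vuln_name": "signature", "risk": "severity"},
}


def normalize(event):
    """Rename product-specific fields to the common schema, dropping the rest."""
    system = event["system"]
    common = {"system": system}
    for source_name, common_name in FIELD_MAP[system].items():
        if source_name in event:
            common[common_name] = event[source_name]
    return common


def device_index(doc, prefix="incidents"):
    """One index per device; Elasticsearch index names must be lowercase."""
    return "{}-{}".format(prefix, doc["device_ip"]).lower()


def bulk_body(events):
    """Build a newline-delimited JSON body for the _bulk API."""
    lines = []
    for raw in events:
        doc = normalize(raw)
        lines.append(json.dumps({"index": {"_index": device_index(doc)}}))
        lines.append(json.dumps(doc))
    # The bulk body has to end with a newline.
    return "\n".join(lines) + "\n"
```

With such a layout, incidents from a firewall and from a scanner that concern the same host end up in the same index with the same field names, which is what makes later correlation practical.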

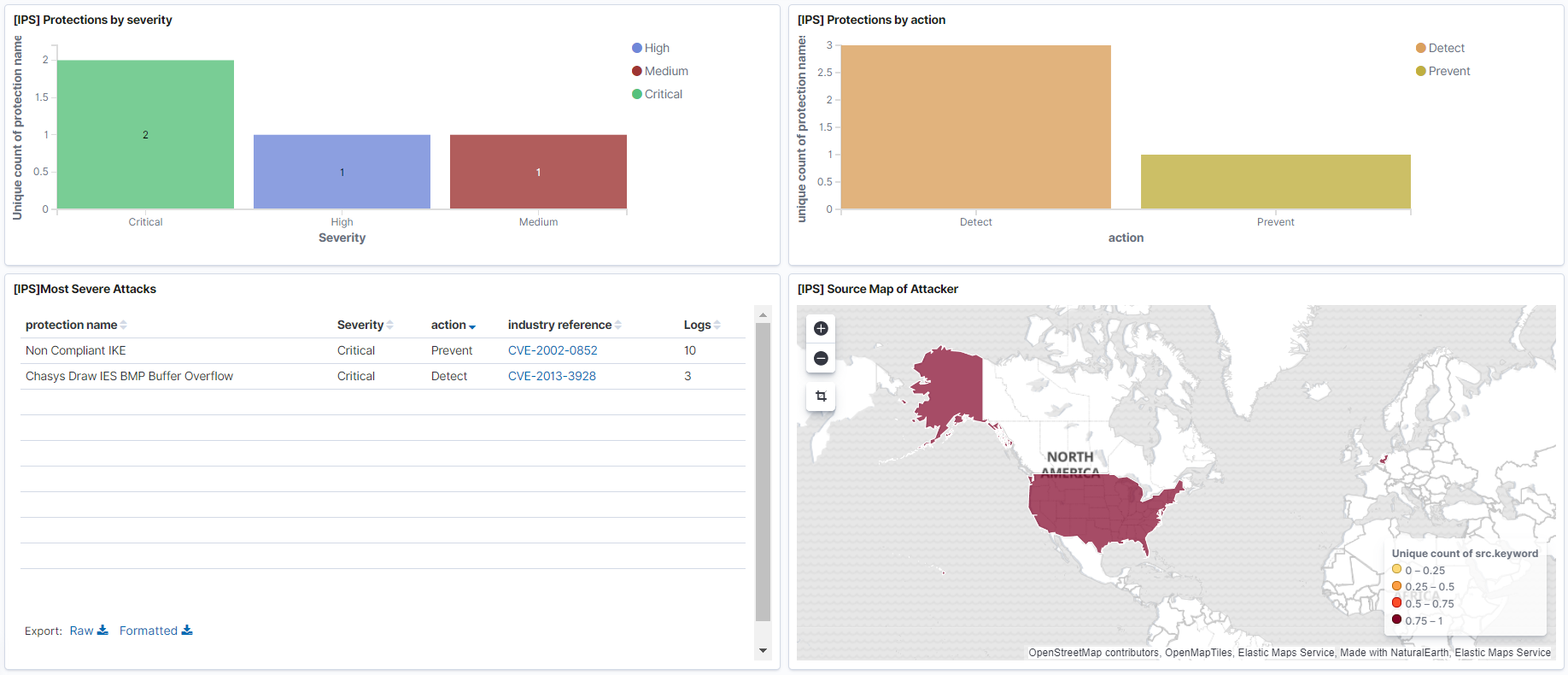

The most obvious and understandable reason to use the ELK Stack is the storage and visualization of logs. Previous articles showed how to collect logs from various devices using Logstash. Once the logs are in Elasticsearch, you can set up dashboards, also covered in previous articles, with the information and statistics you need.

Examples:

- Threat Prevention events dashboard with the most critical events. Here you can show which IPS signatures were detected and where they come from geographically.

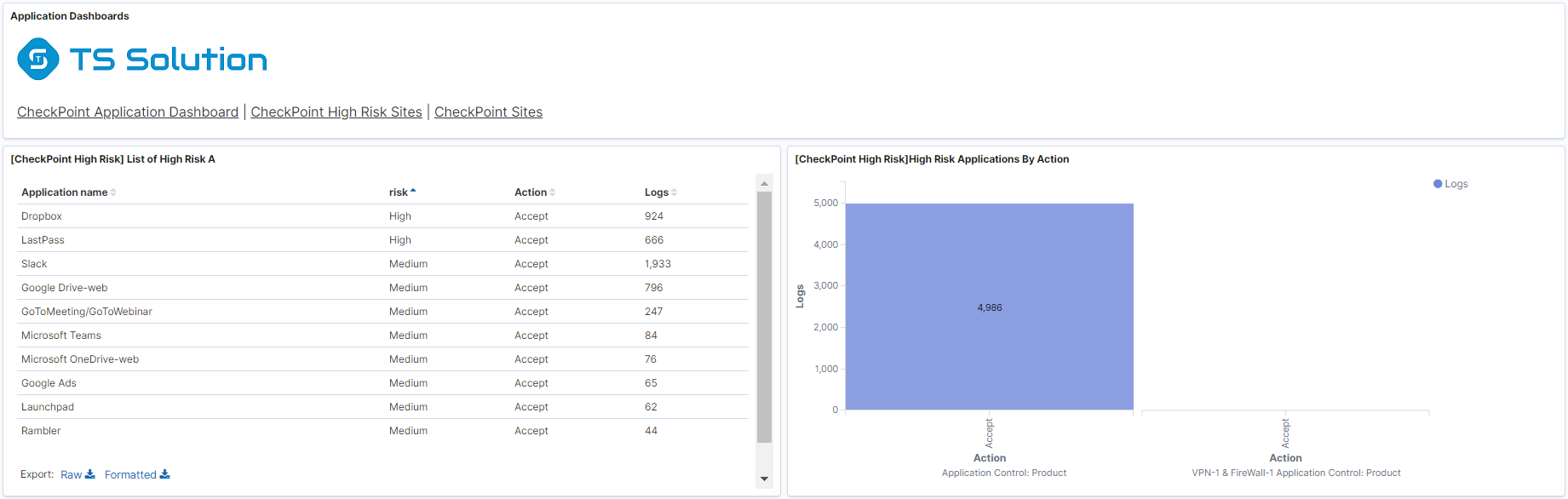

- Dashboard on the use of the most critical applications through which information can leak.

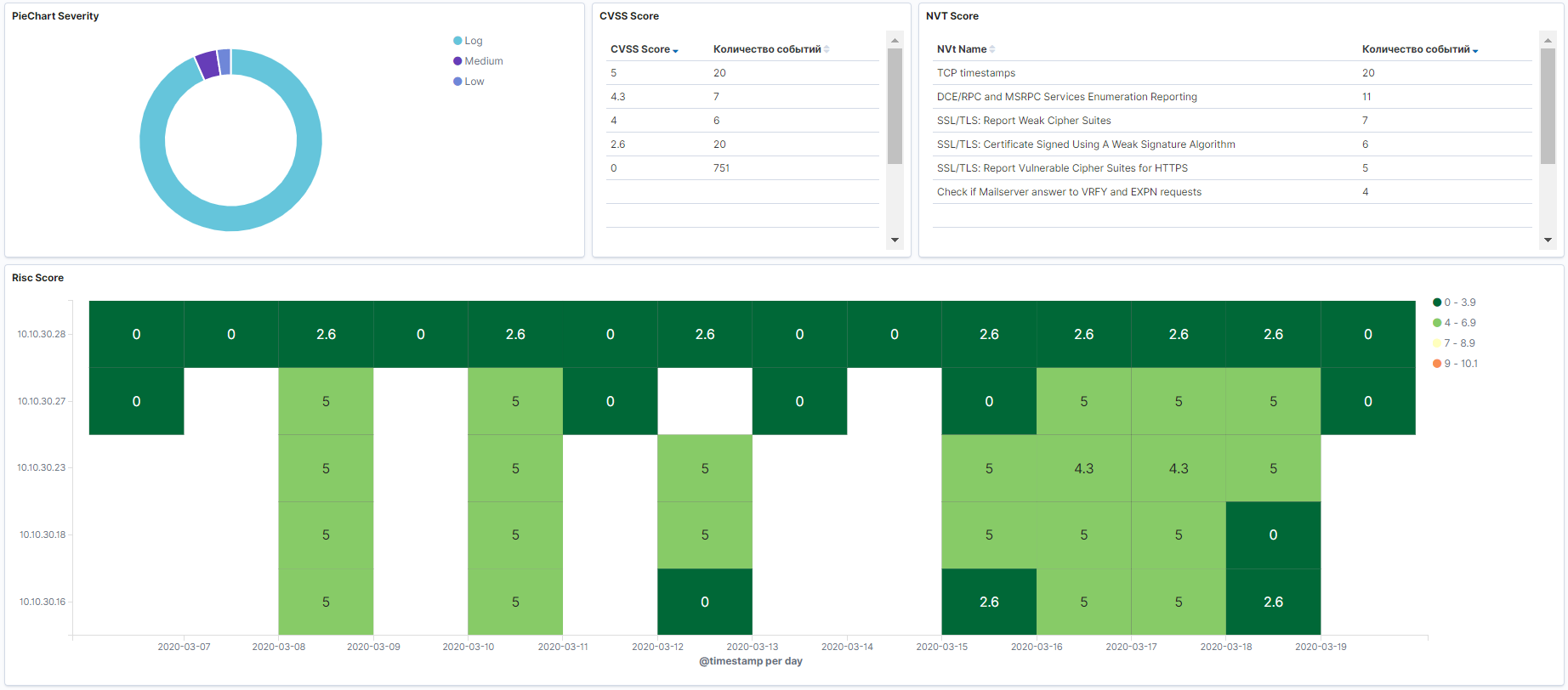

- Scan results from any security scanner.

- Logs with Active Directory by user.

- VPN connection dashboard.
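The data behind a dashboard panel such as "most frequent IPS signatures" is essentially a terms aggregation over a time window. As a hedged sketch, the helper below builds such a search body; the field name passed in (for example `attack_name.keyword`) is a hypothetical assumption and depends entirely on how your logs are parsed.

```python
def top_values_query(field, hours=24, size=10):
    """Build a search body that counts the most frequent values of `field`."""
    return {
        "size": 0,  # only aggregation buckets are needed, not the documents
        "query": {"range": {"@timestamp": {"gte": "now-{}h".format(hours)}}},
        "aggs": {"top_values": {"terms": {"field": field, "size": size}}},
    }


# Hypothetical usage: top 5 signatures over the last hour.
query = top_values_query("attack_name.keyword", hours=1, size=5)
```

The resulting dictionary can be sent as the JSON body of a search request against the relevant index, which is handy for checking the numbers a visualization shows.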

If you configure the dashboards to refresh every few seconds, you get a fairly convenient system for monitoring events in real time. Put on a separate screen, it can then be used to respond quickly to IS incidents.

Incident prioritization

In a large infrastructure the number of incidents can go off the charts, and specialists will not be able to handle all of them in time. In this case it is necessary first of all to single out the incidents that pose the greatest threat. The system must therefore prioritize incidents by how dangerous they are to your infrastructure. It is also advisable to set up email or Telegram alerts for these events. Prioritization can be implemented with standard Kibana tools by configuring a visualization. Notifications are harder: this functionality is not included in the basic version of Elasticsearch, only in the paid one. So either buy the paid version or, again, write a process yourself that notifies specialists in real time by email or Telegram.

Automation of information security processes
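A self-written notifier for prioritized incidents, as suggested above, is probably the simplest piece of such automation to start with. The sketch below is only an assumption about how it might look: the severity weights, the list of critical assets, the extra weight for unblocked (`detect`) events and the field names are all hypothetical. It ranks incidents and formats alert texts; actually delivering them by email or Telegram is left out.

```python
# Hypothetical weights and asset list; tune them to your own infrastructure.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 7, "critical": 10}
CRITICAL_ASSETS = {"10.0.0.10"}


def priority(incident):
    """Score an incident: severity, asset criticality, and whether it was blocked."""
    score = SEVERITY_WEIGHT.get(incident.get("severity"), 1)
    if incident.get("device_ip") in CRITICAL_ASSETS:
        score *= 2  # incidents on critical assets count double
    if incident.get("action") == "detect":
        score += 5  # detected but not blocked, so more urgent
    return score


def format_alert(incident):
    """Render one incident as a short one-line message."""
    return "[{}] {} on {} ({})".format(
        priority(incident),
        incident.get("signature", "unknown"),
        incident.get("device_ip", "unknown"),
        incident.get("severity", "unknown"),
    )


def alerts(incidents, threshold=15):
    """Return messages for incidents at or above the threshold, most urgent first."""
    ranked = sorted(incidents, key=priority, reverse=True)
    return [format_alert(i) for i in ranked if priority(i) >= threshold]
```

Each returned string could then be handed to whatever delivery channel you use, for example an SMTP client or a Telegram bot.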

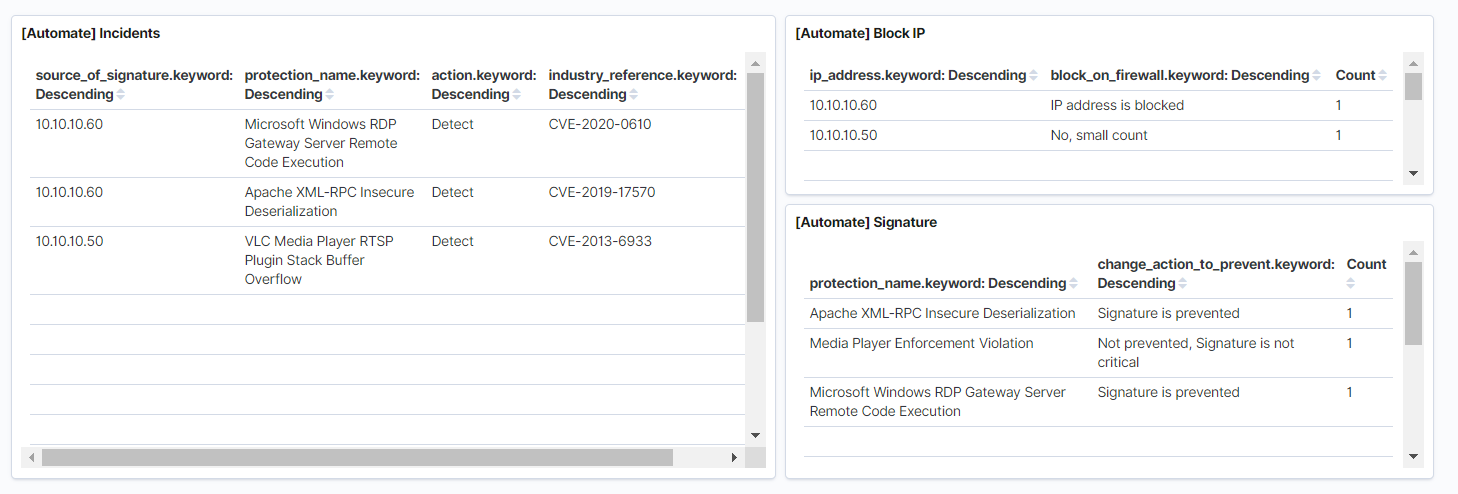

One of the most interesting parts is automating actions on information security incidents. We previously implemented this functionality for Splunk; you can read a little more about it in this article. The basic idea is that the IPS policy is often left untested and unoptimized, although in some cases it is an essential part of information security processes. For example, a year after an NGFW is deployed with no IPS optimization, you will have accumulated a large number of signatures with the Detect action that are not blocked, which greatly lowers the state of information security in the organization. Here are some examples of what can be automated:

- Switching IPS signatures from Detect to Prevent. This can be implemented if the NGFW has a REST API: pull the necessary information from Elasticsearch and send API requests to the NGFW.

- Blocking an IP address in the Firewall policy. This is also implemented through the REST API.

- Running a host scan with a vulnerability scanner if IPS or other security tools report a large number of signatures for that host. With OpenVas, you can write a script that connects to the security scanner over ssh and starts the scan.
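The decision logic behind automation of this kind can be sketched independently of any particular NGFW or scanner. The example below is a minimal illustration under assumptions: the event field names (`action`, `signature`, `src`, `dst`), the thresholds and the action labels (`set_prevent`, `block_ip`, `scan_host`) are all hypothetical, and the criteria themselves are only one possible choice. It decides what to do from a batch of events; carrying the actions out through a vendor REST API or an ssh session is product-specific and not shown.

```python
from collections import Counter


def plan_actions(events, prevent_after=50, block_after=20, scan_after=10):
    """Turn a batch of IPS events into a list of (action, target) tuples."""
    detect_hits = Counter()   # signature -> number of unblocked hits
    sigs_by_source = {}       # source IP -> set of distinct signatures
    hits_by_host = Counter()  # destination host -> number of events

    for event in events:
        if event["action"] == "detect":
            detect_hits[event["signature"]] += 1
        sigs_by_source.setdefault(event["src"], set()).add(event["signature"])
        hits_by_host[event["dst"]] += 1

    actions = []
    # Signatures that keep firing in Detect mode are candidates for Prevent.
    for signature, count in sorted(detect_hits.items()):
        if count >= prevent_after:
            actions.append(("set_prevent", signature))
    # A source that triggers many different signatures is a candidate for blocking.
    for src, signatures in sorted(sigs_by_source.items()):
        if len(signatures) >= block_after:
            actions.append(("block_ip", src))
    # A host that attracts many events is a candidate for a vulnerability scan.
    for dst, count in sorted(hits_by_host.items()):
        if count >= scan_after:
            actions.append(("scan_host", dst))
    return actions
```

Keeping the decision step separate from the execution step makes it possible to review the planned actions, or run them in a dry-run mode, before anything is changed on the firewall.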

TS Total Sight

Taken together, implementing all of this functionality is a large and difficult task. Without programming skills you can configure the minimum functionality, which may be enough for production use. But if you are interested in the full functionality, take a look at TS Total Sight. You can find more details on our website. As a result, the overall workflow and architecture will look like this:

Conclusion

We have examined what can be implemented with the ELK Stack. In subsequent articles we will look separately and in more detail at the functionality of TS Total Sight!

So stay tuned (Telegram, Facebook, VK, TS Solution Blog, Yandex.Zen).