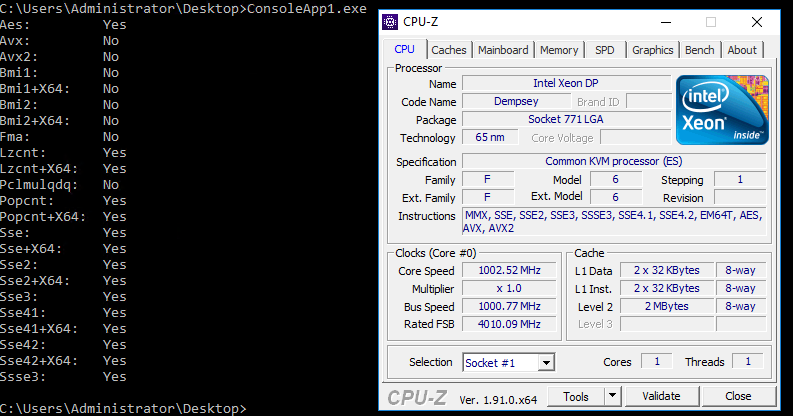

We live in an era of x86 dominance. All x86-compatible processors are similar, yet all are slightly different, and not only in manufacturer, clock frequency and core count. Over its long (and popular) life, the x86 architecture has gone through many major updates (for example, the 64-bit extension, x86_64) and gained a number of "extended instruction sets". Compilers, which by default generate code that runs on as many processors as possible, have had to adapt to this as well. But among the extended instructions there are many interesting and useful ones. For example, chess programs often use bit-manipulation instructions: POPCNT, BSF/BSR (or their more recent counterparts TZCNT/LZCNT), PDEP, BSWAP, etc.

In C and C++ compilers, explicit access to such instructions is provided through processor-specific intrinsic functions. There was no such convenient access in .NET and C#, so long ago I wrote my own wrapper that emulated these functions in software and, if the CPU supported the real instructions, patched their calls directly in the calling code. Fortunately, most of the intrinsics I needed fit into the 5 bytes of a CALL instruction. Details are in my earlier article on Habr.

Many years passed, and proper intrinsics never appeared in .NET. Then .NET Core came out and fixed the situation: first came the vector instructions, then almost the entire System.Runtime.Intrinsics.X86 set (almost, because the "outdated" BSF and BSR are missing).

Everything seemed nice and convenient, except that detecting support for each instruction set has always been confusing (some instructions come enabled as part of a whole set, others have separate flags of their own).
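The original wrapper is not shown in this article. Purely as an illustration of what "emulating" these instructions means, here is a minimal portable C++ sketch of software fallbacks for 64-bit POPCNT and TZCNT; the function names are hypothetical. Note that TZCNT, unlike BSF, is defined for a zero input and returns the operand width.

```cpp
#include <cstdint>

// Hypothetical software fallback for POPCNT on a 64-bit value.
// Classic SWAR ("SIMD within a register") bit counting; no special instructions.
constexpr int soft_popcnt64(std::uint64_t x) {
    x = x - ((x >> 1) & 0x5555555555555555ULL);                            // counts per 2 bits
    x = (x & 0x3333333333333333ULL) + ((x >> 2) & 0x3333333333333333ULL);  // counts per 4 bits
    x = (x + (x >> 4)) & 0x0F0F0F0F0F0F0F0FULL;                            // counts per byte
    return static_cast<int>((x * 0x0101010101010101ULL) >> 56);            // sum of all bytes
}

// Hypothetical software fallback for TZCNT on a 64-bit value.
// ~x & (x - 1) has ones exactly in the trailing-zero positions of x,
// and all 64 ones when x == 0, matching TZCNT (which returns 64 for a zero input).
constexpr int soft_tzcnt64(std::uint64_t x) {
    return soft_popcnt64(~x & (x - 1));
}

static_assert(soft_popcnt64(0xF0F0ULL) == 8, "eight bits set");
static_assert(soft_tzcnt64(0x28ULL) == 3, "0b101000 has three trailing zeros");
static_assert(soft_tzcnt64(0) == 64, "TZCNT of zero is the operand width");
```

In C++20 the standard `<bit>` header also offers `std::popcount` and `std::countr_zero`, which compilers can lower to the hardware instructions when the target allows it.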
To make things even more confusing, .NET Core also has dependencies between the "allowed" sets. This surfaced when I tried to run the code on a virtual machine under the KVM hypervisor: calls failed with `System.PlatformNotSupportedException: Operation is not supported on this platform at System.Runtime.Intrinsics.X86.Bmi1.X64.TrailingZeroCount(UInt64 value)`, and the same happened with `System.Runtime.Intrinsics.X86.Popcnt.X64.PopCount`. For POPCNT there was a fairly obvious flag to set in the virtualization parameters, but TZCNT led me to a dead end. The following picture shows the output of the tool that checks intrinsic availability in .NET Core (code and binary at the end of the article) next to the well-known CPU-Z:

And here is the output of the tool from the MSDN page about CPUID:

Although the processor reports support for everything required, `Intrinsics.X86.Bmi1.X64.TrailingZeroCount` still kept failing with `System.PlatformNotSupportedException`. To figure this out, we need to look at the processor through the eyes of .NET Core, whose sources are on GitHub. Searching there for cpuid leads to the method `EEJitManager::SetCpuInfo()`. It contains a lot of different conditions, some of them nested. I copied this method into an empty project; along with it I had to bring over a couple of other methods and a whole assembler file, which also meant figuring out how to add asm to a fresh Visual Studio project. Execution result:

As you can see, the `InstructionSet_BMI1` flag is still set (although some others are not). If you search the repository for this flag, you can come across this code:

```cpp
// BMI1 is removed from the allowed instruction sets whenever AVX is not also present
if (resultflags.HasInstructionSet(InstructionSet_BMI1) && !resultflags.HasInstructionSet(InstructionSet_AVX))
    resultflags.RemoveInstructionSet(InstructionSet_BMI1);
```
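To see how a missing AVX flag takes BMI1 down with it, here is a simplified, hypothetical model of this kind of dependency pruning. The flag values and every rule except the BMI1/AVX one are illustrative assumptions, not the runtime's actual table. The sketch re-applies its rules until nothing changes, so that a chain of dependencies is fully resolved.

```cpp
#include <cstdint>

// Hypothetical flag bits; not the runtime's real CORINFO_InstructionSet values.
enum : std::uint32_t {
    SET_SSE42 = 1u << 0,
    SET_AVX   = 1u << 1,
    SET_AVX2  = 1u << 2,
    SET_BMI1  = 1u << 3,
};

// A rule says: "set" is dropped unless "requires_set" is also present.
struct Rule { std::uint32_t set; std::uint32_t requires_set; };

// Only BMI1 -> AVX comes from the runtime code quoted above; the others are illustrative.
constexpr Rule kRules[] = {
    {SET_BMI1, SET_AVX},
    {SET_AVX2, SET_AVX},
    {SET_AVX,  SET_SSE42},
};

// Remove every set whose dependency is missing, repeating until nothing changes,
// so chains like "SSE4.2 missing -> AVX dropped -> BMI1 dropped" are resolved.
constexpr std::uint32_t prune_flags(std::uint32_t flags) {
    std::uint32_t old = 0;
    do {
        old = flags;
        for (const Rule& r : kRules) {
            if ((flags & r.set) && !(flags & r.requires_set)) {
                flags &= ~r.set;
            }
        }
    } while (flags != old);
    return flags;
}

// The situation from the article: BMI1 is reported, but AVX was not detected.
static_assert(prune_flags(SET_SSE42 | SET_BMI1) == SET_SSE42, "BMI1 dropped without AVX");
```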

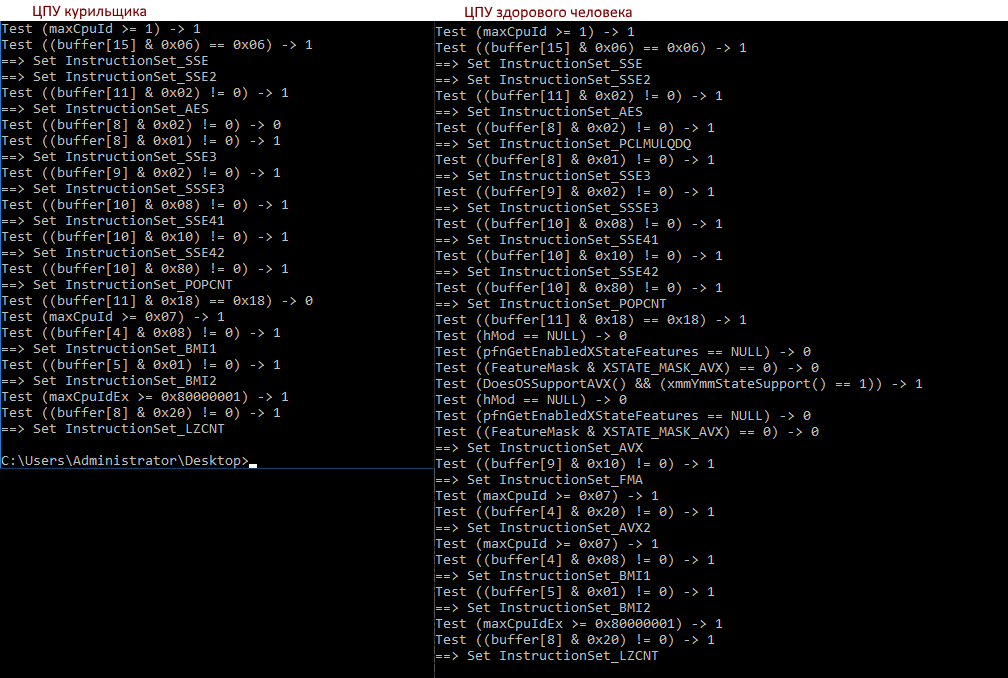

So this is our dependency! If AVX is not detected, BMI1 (and some other sets) is disabled. What the logic behind this is, I don't understand yet, but let's hope there is one. Now it remains to understand why CPU-Z and other tools see AVX, but .NET Core does not. Let's see how the output of our tool differs between processors:

```
>diff a b
7c7,8
< Test ((buffer[8] & 0x02) != 0) -> 0
---
> Test ((buffer[8] & 0x02) != 0) -> 1
> ==> Set InstructionSet_PCLMULQDQ
18c19,32
< Test ((buffer[11] & 0x18) == 0x18) -> 0
---
> Test ((buffer[11] & 0x18) == 0x18) -> 1
> Test (hMod == NULL) -> 0
> Test (pfnGetEnabledXStateFeatures == NULL) -> 0
> Test ((FeatureMask & XSTATE_MASK_AVX) == 0) -> 0
> Test (DoesOSSupportAVX() && (xmmYmmStateSupport() == 1)) -> 1
> Test (hMod == NULL) -> 0
> Test (pfnGetEnabledXStateFeatures == NULL) -> 0
> Test ((FeatureMask & XSTATE_MASK_AVX) == 0) -> 0
> ==> Set InstructionSet_AVX
> Test ((buffer[9] & 0x10) != 0) -> 1
> ==> Set InstructionSet_FMA
> Test (maxCpuId >= 0x07) -> 1
> Test ((buffer[4] & 0x20) != 0) -> 1
> ==> Set InstructionSet_AVX2
```
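The `buffer[...]` tests in this diff index a 16-byte copy of a CPUID result. Assuming the layout EAX, EBX, ECX, EDX at byte offsets 0, 4, 8 and 12 (little-endian), which is consistent with the flag names the tool prints, each byte test maps to a single register bit. A small sketch of that translation; the helper names are made up for illustration:

```cpp
#include <cstdint>

// Registers in the assumed order of the 16-byte CPUID buffer.
enum class Reg { EAX = 0, EBX = 1, ECX = 2, EDX = 3 };

struct RegBit { Reg reg; int bit; };

// Translate a byte test "buffer[index] & mask" into the register and bit it checks.
// Assumes mask has exactly one bit set (for a multi-bit mask, decode each bit separately).
constexpr RegBit decode(int index, std::uint8_t mask) {
    int bit_in_byte = 0;
    while (bit_in_byte < 8 && ((mask >> bit_in_byte) & 1u) == 0) ++bit_in_byte;
    return RegBit{static_cast<Reg>(index / 4), (index % 4) * 8 + bit_in_byte};
}

// buffer[8] & 0x02, leaf 1 -> ECX bit 1: PCLMULQDQ
static_assert(decode(8, 0x02).reg == Reg::ECX && decode(8, 0x02).bit == 1, "");
// buffer[11] & 0x18, leaf 1 -> ECX bits 27 (OSXSAVE) and 28 (AVX)
static_assert(decode(11, 0x08).bit == 27 && decode(11, 0x10).bit == 28, "");
// buffer[9] & 0x10, leaf 1 -> ECX bit 12: FMA
static_assert(decode(9, 0x10).bit == 12, "");
// buffer[4] & 0x20, leaf 7 -> EBX bit 5: AVX2
static_assert(decode(4, 0x20).reg == Reg::EBX && decode(4, 0x20).bit == 5, "");
```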

- The `buffer[8] & 0x02` check fails: this is PCLMULQDQ.

- The `buffer[11] & 0x18` check fails: it tests AVX and OSXSAVE together. The AVX bit is set (CPU-Z sees it), but OSXSAVE is required as well.

- Behind it come the other checks that lead to the `InstructionSet_AVX` flag.
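Put together, the AVX branch in the diff needs the CPU to report both OSXSAVE and AVX in CPUID leaf 1 ECX, and then the OS to have enabled saving of the XMM and YMM register state. The sketch below models that as a pure, hypothetical function. It assumes the x86 convention that the OS-enabled state is read from XCR0 via XGETBV (an instruction that is only usable when OSXSAVE is set) and that XCR0 bits 1 (SSE) and 2 (AVX) must both be set; the Windows-specific `GetEnabledXStateFeatures` checks from the diff are left out.

```cpp
#include <cstdint>

// CPUID leaf 1 ECX bits checked together by "(buffer[11] & 0x18) == 0x18".
constexpr std::uint32_t kEcxOsxsave = 1u << 27;  // OS has enabled XSAVE, so XGETBV is usable
constexpr std::uint32_t kEcxAvx     = 1u << 28;  // CPU implements AVX

// XCR0 bits: bit 1 = SSE (XMM) state, bit 2 = AVX (upper YMM) state.
constexpr std::uint64_t kXcr0XmmYmm = 0x6;

// Hypothetical model of the AVX decision. xcr0 stands for the value XGETBV(0) would
// return; a real implementation must not execute XGETBV unless OSXSAVE is set.
constexpr bool avx_usable(std::uint32_t leaf1_ecx, std::uint64_t xcr0) {
    if ((leaf1_ecx & (kEcxOsxsave | kEcxAvx)) != (kEcxOsxsave | kEcxAvx))
        return false;                              // the test that fails in the VM
    return (xcr0 & kXcr0XmmYmm) == kXcr0XmmYmm;    // OS saves both XMM and YMM state
}

// The VM case: AVX is advertised but OSXSAVE is not, so AVX is never enabled.
static_assert(!avx_usable(kEcxAvx, 0x7), "");
// A host reporting both bits, with an OS that enabled YMM state.
static_assert(avx_usable(kEcxAvx | kEcxOsxsave, 0x7), "");
```

This is why adding only `avx` to a virtual CPU definition is not enough: without `osxsave` (and `xsave` behind it) the first check already fails.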

So what should you do with the virtual machine? If possible, it is best to set `libvirt.cpu_mode` to `host-passthrough` or `host-model`. If that is not possible, you have to add the whole alphabet soup of instruction flags, in particular ssse3, sse4.1, sse4.2, sse4a, popcnt, abm, bmi1, bmi2, avx, avx2, osxsave, xsave, pclmulqdq.

Hello and thanks to vdsina_m ;)

You can check which instructions your host or virtual machine supports, and how .NET Core sees them, with this tool (a zip for now; I'll post it to GitHub later).