Six months ago, I already wrote about the update to Huawei's storage line, Dorado V6. In fact, I got to know these systems before the official announcement, so naturally I did not have the opportunity to get my hands on them at that time. In my article "Huawei Dorado V6: Sichuan Heat", I focused on the older Dorado 8000 and 18000 V6 models, since architecturally they were the most interesting to me at that moment. Finally, I got the opportunity to test the 5000V6 system in our lab and talk more about the technical side of these systems.

On the one hand, this is a review and test of the 5000V6 system; on the other, it is a logical continuation of the previous article, because over the past six months more details have emerged about the system's components, its operating logic, and the implemented functionality.

But let's get right to the point. Since this is a more conventional dual-controller system, it lacks some of the advantages of its older siblings:

- No shared frontend and backend.

- In addition, each controller of the 5000V6 and 6000V6 has only two Kunpeng 920 processors, and each controller of the 3000V6 has only one.

- While the 5000V6 and 6000V6 can use the same disk shelves as the 8000V6 and 18000V6 (shelves with Kunpeng chips designed to speed up rebuilds, connected via 100 Gb RDMA), the entry-level 3000V6 supports only SAS shelves.

- The 5000V6 and 6000V6 hold either 36 palm-size SSDs or 25 conventional 2.5" SAS SSDs (chosen when configuring the order); the entry-level 3000V6 supports only 25 SAS SSDs.

- The 3000V6 supports up to three hot-swappable interface cards per controller; the 5000V6 and 6000V6 support six.

As in the previous article, a caveat about end-to-end NVMe: support for NVMe over RoCE v2 and NVMe over TCP/IP is planned for the near future.

Architecturally, of course, the dual-controller systems also differ. OceanStor Dorado V6 uses an active-active architecture built on the following technologies:

- Load balancing algorithm: balances the read and write requests received by each controller.

- Global cache: LUNs have no owning controller. Each controller processes the read and write requests it receives, which balances the load between the controllers.

- RAID 2.0+: distributes data evenly across all disks in the storage pool, balancing the load on the disks.
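As a rough illustration of why ownerless LUNs help, here is a toy Python model. The least-outstanding-requests policy, the `Controller` class, and the `dispatch`/`complete` helpers are all hypothetical; Huawei does not describe its balancing algorithm at this level. The point is only that, with no LUN owner, even a single hot LUN can be spread across both controllers.

```python
from dataclasses import dataclass, field


@dataclass
class Controller:
    name: str
    outstanding: int = 0                        # requests currently in flight
    served: dict = field(default_factory=dict)  # LUN id -> requests handled


def dispatch(controllers, lun):
    """Send a request for `lun` to the least-busy controller.

    Because LUNs have no owning controller, every controller is a valid
    target; ties go to the first controller in the list.
    """
    target = min(controllers, key=lambda c: c.outstanding)
    target.outstanding += 1
    target.served[lun] = target.served.get(lun, 0) + 1
    return target


def complete(controller):
    """Mark one in-flight request on `controller` as finished."""
    controller.outstanding -= 1


if __name__ == "__main__":
    a, b = Controller("A"), Controller("B")
    for _ in range(100):
        dispatch([a, b], lun=0)  # every request targets the same LUN
    print(a.served, b.served)    # {0: 50} {0: 50}
```

In an ownership-based design, all 100 requests for LUN 0 would land on the owning controller instead.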

RAID 2.0+

If data is not stored uniformly across SSDs, some heavily loaded SSDs can become a system bottleneck. OceanStor Dorado V6 uses RAID 2.0+ to spread the data of every LUN evenly across all SSDs, balancing the load between drives. OceanStor Dorado V6 implements RAID 2.0+ as follows:

- multiple SSDs form a storage pool

- each SSD is divided into fixed-size chunks (typically 4 MB per chunk) to simplify logical space management

- chunks from different SSDs form a chunk group according to the user-defined RAID policy

- the chunk group is divided into “grains” (usually 8 KB), which are the smallest unit for volumes
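The four RAID 2.0+ steps above can be sketched in Python. This is a minimal model that assumes a simple greedy allocator, which always takes chunks from the SSDs with the most free chunks; the 4 MB chunk and 8 KB grain sizes come from the text, while the function names and the 4+2 example policy are illustrative, not Huawei's implementation.

```python
from dataclasses import dataclass

CHUNK_SIZE = 4 * 1024 * 1024  # 4 MB chunks, the typical size quoted above
GRAIN_SIZE = 8 * 1024         # 8 KB grains, the typical size quoted above


@dataclass(frozen=True)
class Chunk:
    disk: int   # SSD the chunk lives on
    index: int  # chunk number within that SSD


def build_chunk_groups(disk_sizes, width):
    """Split each SSD into chunks, then form chunk groups of `width` chunks,
    each from a different SSD (e.g. width=6 for a 4+2 RAID 6 policy).

    Greedy assumption: each group takes chunks from the SSDs with the most
    free chunks, which keeps chunk usage even across the pool.
    """
    free = {d: list(range(size // CHUNK_SIZE)) for d, size in enumerate(disk_sizes)}
    groups = []
    while True:
        candidates = sorted((d for d in free if free[d]),
                            key=lambda d: len(free[d]), reverse=True)
        if len(candidates) < width:
            return groups
        groups.append([Chunk(d, free[d].pop(0)) for d in candidates[:width]])


def grains_per_group(width, parity):
    """Number of 8 KB grains of user data that one chunk group provides."""
    return (width - parity) * CHUNK_SIZE // GRAIN_SIZE


if __name__ == "__main__":
    pool = [64 * 1024 * 1024] * 8               # 8 tiny SSDs, 16 chunks each
    groups = build_chunk_groups(pool, width=6)
    print(len(groups), grains_per_group(6, 2))  # 21 2048
```

Because every chunk group spans six different SSDs and per-disk usage stays within one chunk of even, a LUN built from these groups touches all drives, which is the load-balancing effect described above.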

ROW Full-Stripe Write

Flash memory chips on SSDs can only be erased a limited number of times. In the traditional write-in-place RAID mode, hot data on the SSD is continuously overwritten, and the corresponding flash chips wear out quickly. OceanStor Dorado V6 uses redirect-on-write (ROW) for both new data and updates: every write goes to newly allocated flash pages, which evens out the number of erases across all flash chips. Because data is written in full stripes, parity does not have to be updated by reading back old data and old parity first. This significantly reduces the CPU load on the controller and the read/write load on the SSDs during writes, increasing system performance at different RAID levels.

End-to-End I/O Prioritization

To ensure consistent latency for certain I/O types, OceanStor Dorado V6 controllers tag each I/O operation with a priority according to its type. This lets the system schedule CPU and other resources by priority, offering latency guarantees based on I/O priority. In particular, when an SSD receives several I/O requests, it checks their priorities and processes the higher-priority operations first.

OceanStor Dorado V6 classifies I/O operations into the following five types, listed in descending order of priority, to provide optimal response for both internal and external I/O:

- read/write operations

- I/O from advanced functions

- rebuild

- cache flush

- garbage collection
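The five-level ordering above behaves like a priority queue. The sketch below, built on Python's `heapq`, assumes strict priority with FIFO order inside each class; the class and enum names are illustrative. A real scheduler would presumably also have to keep low-priority work such as garbage collection from starving, which this sketch does not attempt.

```python
import heapq
import itertools
from enum import IntEnum


class IOClass(IntEnum):
    # Lower value = higher priority, in the order listed above
    HOST_READ_WRITE = 0
    ADVANCED_FEATURE = 1
    REBUILD = 2
    CACHE_FLUSH = 3
    GARBAGE_COLLECTION = 4


class PriorityIOQueue:
    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # FIFO tie-break within one class

    def submit(self, io_class, request):
        heapq.heappush(self._heap, (io_class, next(self._seq), request))

    def next_io(self):
        """Pop the highest-priority (then oldest) request."""
        io_class, _, request = heapq.heappop(self._heap)
        return io_class, request

    def __len__(self):
        return len(self._heap)


if __name__ == "__main__":
    q = PriorityIOQueue()
    q.submit(IOClass.GARBAGE_COLLECTION, "gc-1")
    q.submit(IOClass.HOST_READ_WRITE, "read-1")
    q.submit(IOClass.REBUILD, "rebuild-1")
    q.submit(IOClass.HOST_READ_WRITE, "write-1")
    while q:
        print(q.next_io())
```

Running it dequeues `read-1`, `write-1`, `rebuild-1`, and `gc-1`, in that order: both host I/Os overtake the garbage collection request even though it was submitted first.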

On each drive, in addition to prioritizing I/O operations, OceanStor Dorado V6 also allows high-priority read requests to interrupt ongoing write and erase operations. This matters because, when the data is not in the cache, the disk read latency directly determines the host read latency. An SSD typically performs three operations: read, write, and erase. Erase latency is 5 to 15 ms, write latency is 2 to 4 ms, and read latency ranges from tens of microseconds to 100 microseconds. When a flash chip is performing a write or erase, a read must wait until the current operation completes, which significantly increases read latency.

Smart disk enclosure

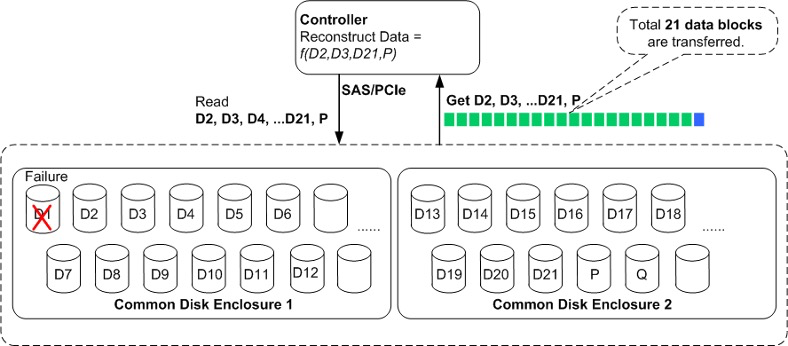

As I wrote in the previous article, the new disk shelves have their own processor and RAM. This allows tasks such as recovery from a disk failure to be offloaded from the controllers, which significantly reduces the load on the controllers during data recovery. The following figure shows the data recovery process within a single disk shelf, using RAID 6 (21 + 2) as an example. If drive D1 fails, the controller must read D2-D21 and P and then recalculate D1: 21 blocks in total (20 data blocks plus P) must be read from the disks. These read and reconstruction operations consume significant processor resources.
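The arithmetic behind this rebuild can be sketched in Python. This is a minimal sketch assuming the P parity is a plain XOR over the 21 data blocks, as in standard RAID 5/6; the second RAID 6 parity Q (a Reed-Solomon code) is left out because a single failed drive does not need it. It shows why 21 blocks (D2-D21 plus P) must be read to recover D1; on V6 with smart shelves, this is the kind of work that can move from the controller to the shelf.

```python
import random


def xor_blocks(blocks):
    """XOR equal-length byte blocks together (the RAID 'P' parity)."""
    acc = 0
    for block in blocks:
        acc ^= int.from_bytes(block, "little")
    return acc.to_bytes(len(blocks[0]), "little")


def make_stripe(data_blocks):
    """Build a stripe {D1..Dn, P}; Q is omitted in this sketch."""
    stripe = {f"D{i}": blk for i, blk in enumerate(data_blocks, start=1)}
    stripe["P"] = xor_blocks(data_blocks)
    return stripe


def rebuild(stripe, failed):
    """Recompute the block of a failed drive; returns (block, blocks_read)."""
    survivors = [blk for name, blk in stripe.items() if name != failed]
    return xor_blocks(survivors), len(survivors)


if __name__ == "__main__":
    rng = random.Random(0)
    data = [bytes(rng.getrandbits(8) for _ in range(512)) for _ in range(21)]
    block, reads = rebuild(make_stripe(data), "D1")
    print(block == data[0], reads)  # True 21
```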

SmartDedupe and SmartCompression

OceanStor Dorado V6 automatically performs adaptive deduplication and compression based on the characteristics of user data, maximizing efficiency. The adaptive deduplication and compression process is as follows:

- For incoming data, the system calculates a similar fingerprint (SFP) and records it in a table for later comparison.

- When several identical SFPs have accumulated in the "opportunity" table, the data corresponding to these SFPs is read back from disk and deduplicated in post-processing. After deduplication is complete, the fingerprint table is updated.
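The SFP-based flow can be sketched as follows. Huawei does not publish how it computes SFPs, so the min-hash fingerprint, the threshold of two, and the `PostProcessDedupe` class are assumptions made for illustration; a strong SHA-256 hash confirms that blocks really are identical before one copy is dropped. For simplicity, each address is assumed to be written only once.

```python
import hashlib
from collections import defaultdict

OPPORTUNITY_THRESHOLD = 2  # hypothetical value for "several identical SFPs"
WINDOW = 64                # hypothetical sampling window, bytes


def similar_fingerprint(block: bytes) -> int:
    """Hypothetical SFP: the minimum 64-bit hash over fixed windows (a min-hash).
    Identical blocks always share it; blocks differing in few windows usually do."""
    return min(
        int.from_bytes(hashlib.blake2b(block[i:i + WINDOW], digest_size=8).digest(), "big")
        for i in range(0, max(len(block), 1), WINDOW)
    )


class PostProcessDedupe:
    def __init__(self):
        self.disk = {}                        # address -> stored block
        self.opportunity = defaultdict(list)  # SFP -> addresses sharing it
        self.fingerprints = {}                # SHA-256 -> canonical address
        self.remap = {}                       # deduplicated address -> canonical

    def write(self, address, block):
        self.disk[address] = block            # data lands on disk first
        sfp = similar_fingerprint(block)
        self.opportunity[sfp].append(address)
        if len(self.opportunity[sfp]) >= OPPORTUNITY_THRESHOLD:
            self._dedupe(self.opportunity.pop(sfp))

    def _dedupe(self, addresses):
        for addr in addresses:
            block = self.disk[addr]           # read back in post-processing
            strong = hashlib.sha256(block).digest()
            canonical = self.fingerprints.setdefault(strong, addr)
            if canonical != addr:             # true duplicate: free this copy
                self.remap[addr] = canonical
                del self.disk[addr]

    def read(self, address):
        return self.disk[self.remap.get(address, address)]


if __name__ == "__main__":
    store = PostProcessDedupe()
    a = bytes(range(256)) * 16
    store.write(0, a)
    store.write(1, a)
    store.write(2, b"another block".ljust(4096, b"\0"))
    print(sorted(store.disk))  # [0, 2]
```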

Before compressing data, OceanStor Dorado V6 uses its own preprocessing algorithm to identify, based on the data format, the parts of each data block that are difficult to compress. It then reorders the data, splitting it into two parts:

- for the part that is difficult to compress, the system uses a compression algorithm developed by Huawei

- for the other part, the system uses a common compression algorithm
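The split-and-reorder idea can be sketched in Python. Huawei's preprocessing and its own algorithm are not public, so everything here is an assumption: sub-blocks are classified by Shannon entropy at a 512-byte granularity, `lzma` merely stands in for the Huawei algorithm, and `zlib` plays the general-purpose one. For truly random data neither algorithm helps much; the sketch only shows the structure of reordering, compressing the two parts separately, and restoring the original order.

```python
import lzma
import math
import os
import zlib
from collections import Counter

SUB_BLOCK = 512          # hypothetical classification granularity, bytes
ENTROPY_THRESHOLD = 7.0  # bits/byte; above this a sub-block counts as "hard"


def entropy(chunk: bytes) -> float:
    """Shannon entropy of a byte string in bits per byte (0.0 to 8.0)."""
    n = len(chunk)
    return -sum(c / n * math.log2(c / n) for c in Counter(chunk).values())


def split_and_compress(block: bytes) -> dict:
    """Reorder a block into 'hard' and 'easy' parts and compress each separately."""
    layout, hard, easy = [], [], []
    for i in range(0, len(block), SUB_BLOCK):
        chunk = block[i:i + SUB_BLOCK]
        is_hard = entropy(chunk) > ENTROPY_THRESHOLD
        layout.append((len(chunk), is_hard))  # remembers the original order
        (hard if is_hard else easy).append(chunk)
    return {
        "layout": layout,
        "hard": lzma.compress(b"".join(hard)),     # stand-in for Huawei's algorithm
        "easy": zlib.compress(b"".join(easy), 6),  # general-purpose algorithm
    }


def restore(packed: dict) -> bytes:
    """Decompress both parts and undo the reordering."""
    streams = {True: lzma.decompress(packed["hard"]),
               False: zlib.decompress(packed["easy"])}
    offsets = {True: 0, False: 0}
    out = []
    for length, is_hard in packed["layout"]:
        start = offsets[is_hard]
        out.append(streams[is_hard][start:start + length])
        offsets[is_hard] = start + length
    return b"".join(out)


if __name__ == "__main__":
    data = b"A" * 2048 + os.urandom(1024) + b"hello world " * 100
    packed = split_and_compress(data)
    assert restore(packed) == data
    hard = sum(h for _, h in packed["layout"])
    print(hard, "of", len(packed["layout"]), "sub-blocks classified as hard")
```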

Some storage vendors already use data compaction. However, they usually align compressed data to 4K or 1K blocks. Huawei has gone further and uses byte alignment. I think the illustration makes this clear: in this example, 32 KB of user data fits into less than 5 KB.

Compression and deduplication are now always on once the corresponding Storage Efficiency license is installed. They cannot be disabled from DeviceManager, although, if you really want to, you can do it through the CLI. According to the vendor's logic, space-saving technologies should always be on for SSDs.

One more important point across the line: although there are only five models, they differ, among other things, in the cache size of each controller:

- OceanStor Dorado 3000 V6: 192 GB

- OceanStor Dorado 5000 V6: 256 GB / 512 GB

- OceanStor Dorado 6000 V6: 1024 GB

- OceanStor Dorado 8000 V6: 512 GB / 1024 GB / 2048 GB

- OceanStor Dorado 18000 V6: 512 GB / 1024 GB / 2048 GB

Note that the maximum capacity of the older models does not change, only the maximum number of drives does. According to Huawei engineers, they simply have not had the opportunity to test systems with more than 2 PiB. In theory, more is supported.

Maximum number of drives / maximum capacity:

- OceanStor Dorado 3000 V6 - 1000 / 500TiB

- OceanStor Dorado 5000 V6 - 1200 / 1024TiB

- OceanStor Dorado 6000 V6 - 1500 / 2048TiB

- OceanStor Dorado 8000 V6 - 3200 / 2048TiB

- OceanStor Dorado 18000 V6 - 6400 / 2048TiB

In the previous article, I forgot to mention which interface cards are available:

I have already said (both in the previous article and in this one) that Huawei offers SSDs of its own design as storage devices: Palm Size SSDs, or, as Huawei calls them, Huawei-developed SSDs (HSSD). Besides being faster, according to the company, they also offer some key features.

Background check

After data has been stored in NAND flash for a long time, errors may appear due to read disturb, write disturb, or random failures. HSSD periodically reads data from NAND flash, checks for bit flips, and rewrites the affected data to new pages. This process detects and handles risks in advance, which effectively prevents data loss and improves data security and reliability.
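The background check can be modeled as a patrol pass over the logical-to-physical page map. In this sketch, the ECC strength, the refresh threshold, and the simulated `bit_errors` counter are hypothetical stand-ins for what the SSD controller observes while decoding each page; the idea shown is only that data is moved to a fresh page while its errors are still correctable.

```python
from dataclasses import dataclass

ECC_LIMIT = 40   # hypothetical: max bit errors per page the ECC can correct
REFRESH_AT = 8   # hypothetical: relocate a page once this many bits have flipped


@dataclass
class Page:
    physical: int        # physical NAND page number
    data: bytes          # contents as returned after ECC correction
    bit_errors: int = 0  # raw bit flips accumulated since the page was written


def background_check(mapping, free_pages):
    """One patrol pass over the logical -> Page `mapping`.

    Pages whose errors are still correctable but growing are rewritten to a
    fresh physical page; pages beyond ECC_LIMIT are reported as uncorrectable.
    """
    relocated, uncorrectable = [], []
    for logical, page in list(mapping.items()):
        if page.bit_errors > ECC_LIMIT:
            uncorrectable.append(logical)
        elif page.bit_errors >= REFRESH_AT:
            # Corrected data goes to a new page, which starts with zero errors
            mapping[logical] = Page(free_pages.pop(), page.data)
            relocated.append(logical)
    return relocated, uncorrectable


if __name__ == "__main__":
    mapping = {0: Page(100, b"ok"), 1: Page(101, b"aging", 12), 2: Page(102, b"bad", 55)}
    print(background_check(mapping, [200, 201]))  # ([1], [2])
```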

So, let's move on to the tests. We tested both variants of the 5000V6 controllers, with 256 GB and with 512 GB of cache.

The first test was a warm-up, so to speak. On the 8k, 50% read / 50% write load profile, the difference was about 7% (220 kIOPS versus 205 kIOPS) and affected only the final IOPS figure; the response time stayed at the same level, 0.9 ms. By the way, it was very easy to tell that we had hit the controller limit: the system itself reported high utilization of the controller processors.

The second test, which we run on all SSD-based systems to evaluate and compare them, uses a more complex profile:

```ini
[global]
; O_DIRECT: bypass the host page cache
direct=1
thread=1
iodepth=16
filename=/dev/sdb
ioengine=libaio
; seconds when no unit suffix is given
runtime=3600000
group_reporting
time_based

; random 8 KB reads and writes, 24 jobs each
[8r]
rw=randread
numjobs=24
bs=8k

[8w]
rw=randwrite
numjobs=24
bs=8k

; random 32 KB reads and writes, one job each
[32r]
rw=randread
bs=32k
numjobs=1

[32w]
rw=randwrite
numjobs=1
bs=32k

; sequential 128 KB and 512 KB streams, one job each
[128r]
rw=read
bs=128k
numjobs=1

[128w]
rw=write
bs=128k
numjobs=1

[512r]
rw=read
bs=512k
numjobs=1

[512w]
rw=write
bs=512k
numjobs=1
```
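To turn a run of this profile into the kind of figures quoted here (total IOPS and average latency), fio's JSON report can be post-processed. A minimal sketch, assuming fio 3.x, whose JSON output exposes `iops` and `lat_ns.mean` in the `read` and `write` sections of each entry in `jobs`; check the field names against your fio version. The IOPS-weighted mean latency is one reasonable aggregation, not necessarily how the figures in this article were computed.

```python
import json


def summarize(fio_json: str):
    """Total IOPS and IOPS-weighted mean latency (ms) from fio's JSON report.

    With group_reporting, each entry in "jobs" is one aggregated group.
    """
    report = json.loads(fio_json)
    total_iops = 0.0
    weighted_ns = 0.0
    for job in report["jobs"]:
        for direction in ("read", "write"):
            stats = job[direction]
            total_iops += stats["iops"]
            weighted_ns += stats["iops"] * stats["lat_ns"]["mean"]
    mean_ms = weighted_ns / total_iops / 1e6 if total_iops else 0.0
    return total_iops, mean_ms
```

For example, after running `fio profile.fio --output-format=json --output=result.json` (the file names are placeholders), `summarize(open("result.json").read())` returns the pair of numbers.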

Here the picture is completely different. While the IOPS difference of about two thousand (172 kIOPS versus 170 kIOPS) is insignificant, latency changed roughly fivefold (from 0.4 ms to 2.1 ms), which tells us that, with large blocks, more cache gives the system an advantage.

Last year, my colleagues and I tested the Huawei Dorado 5000 V3, so now we can compare the two systems. The progress is obvious. The load profile and testing procedure were the same in both cases.

Of course, it would also be good to compare the system with its competitors. Personally, I was itching to compare it with the NetApp AFF A400, an all-NVMe system that appeared not long ago. Unfortunately, it has not yet visited our test lab, and comparing with the AFF A300 would not be entirely logical, even though Huawei positioned the previous Dorado 5000 V3 as its competitor.

It is the 21st century, and many companies offer various programs to make their systems more attractive. Huawei has decided to keep up.

Effective Capacity Guarantee

With the new V6 storage systems, you can rely on efficient data storage with compression and deduplication. Many vendors on the market already offer similar programs, which, thanks to more efficient storage, guarantee that more data can be stored on the purchased usable capacity.

SSDs still cost quite a lot per terabyte, so compression and deduplication are extremely useful, and they are highly effective on many types of data. If your data is video, audio, images, scientific data, PDF, XML, or encrypted data, deduplication will not be effective; for other data, the guarantee applies. Even if you purchased a system with the maximum number of drives installed, you will receive an expansion shelf along with the drives supplied under the program.

FlashEver

If you purchase the Huawei Hi-Care Onsite or Co-Care program (or higher) together with the array, you can count on a free upgrade of the controllers to newer models of the same line. This gives you the most modern and powerful system without buying a new system or migrating data. The controllers are replaced without service interruption.

If you like interfaces and want to look at the updated DeviceManager, the OceanStor Dorado 18000 V6 6.0.0 DeviceManager is already available on the Huawei portal.

Unfortunately, this system was in our lab for only a couple of days, so we only managed to measure performance. I would also like to run functional tests, our favorite failure tests, and check some performance complaints raised against the previous V3 line for certain operations: it is important to understand what has changed in the new line (and, accordingly, in its software) and what effect those changes have had.

I want to note that most of the tests I run for my articles are teamwork. I thank my colleagues from the integration group at Onlanta, with whom I work. By the way, we are looking for a system architect to join our team.