Yesterday, March 25, Kubernetes 1.18 was released. Following our blog's tradition, we cover the most significant changes in the new version.

The information used to prepare this material is taken from the official announcement, the Kubernetes enhancements tracking table, CHANGELOG-1.18, reviews from SUSE and Sysdig, as well as related issues, pull requests, Kubernetes Enhancement Proposals (KEP), and so on.

The Kubernetes 1.18 release received its official logo, which compares the project to the Large Hadron Collider. Just as the LHC was the result of the work of thousands of scientists from around the world, Kubernetes has brought together thousands of developers from hundreds of organizations, all working toward a common cause: "improving cloud computing in all aspects."

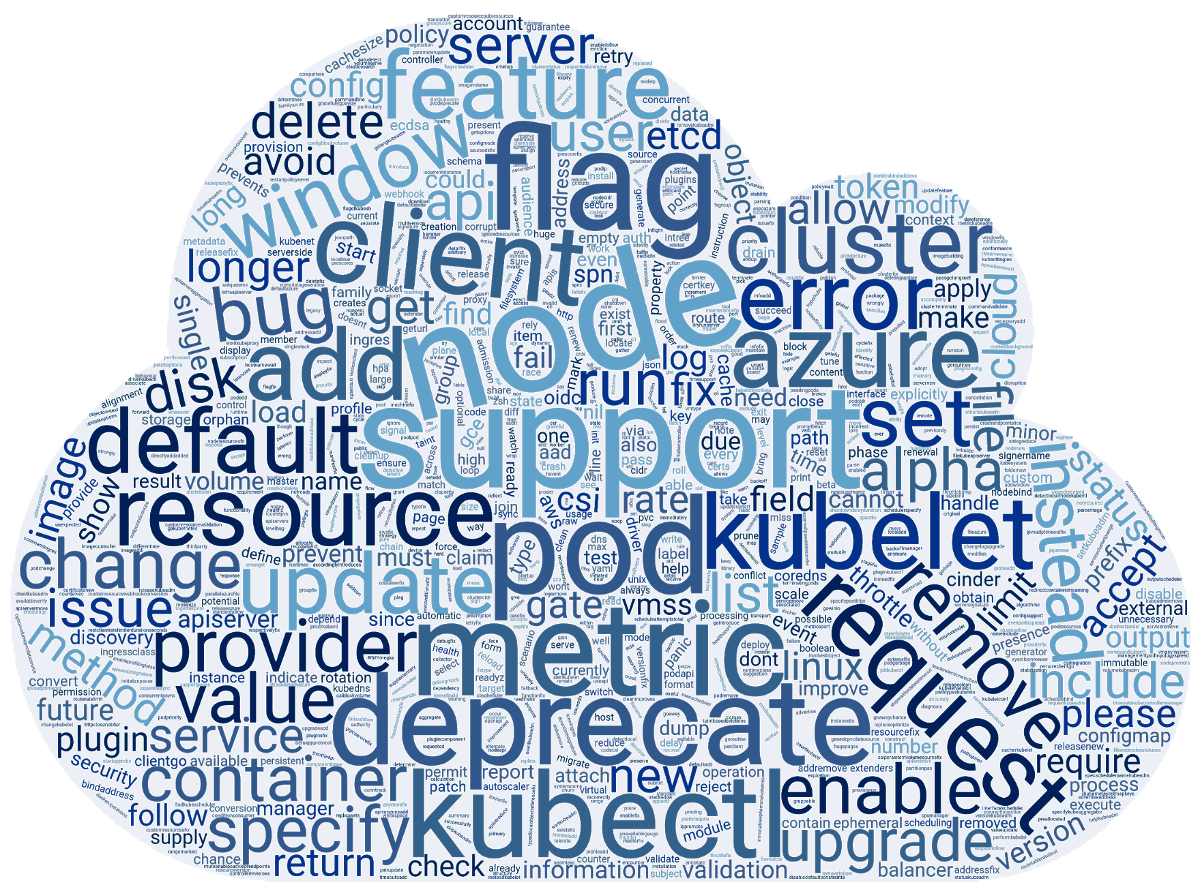


Meanwhile, enthusiasts from the SUSE team prepared a word cloud based on the release notes for the 3412 commits made for K8s 1.18. Here is how it turned out:

Now, on to what lies behind these words, in a form more useful to users.

Scheduler

The main innovation here is Scheduling Profiles. They address the fact that the more heterogeneous the workloads in a cluster become, the sooner different approaches to scheduling them are needed.

To solve this problem, the authors propose that the scheduler use different configurations, called profiles. On startup, kube-scheduler reads all available profiles and saves them in a registry. If the configuration contains no profiles, the default one (`default-scheduler`) is used. Once pods are in the queue, kube-scheduler schedules each of them with the profile selected by the pod's `schedulerName`. The scheduling policies themselves are based on predicates (`PodFitsResources`, `PodMatchNodeSelector`, `PodToleratesNodeTaints`, etc.) and priorities (`SelectorSpreadPriority`, `InterPodAffinityPriority`, `MostRequestedPriority`, `EvenPodsSpreadPriority`, etc.). The implementation relies on plugins from the start, so all profiles are assembled through the scheduling framework.

Current configuration structure (it will change soon):

```go
type KubeSchedulerConfiguration struct {
	// ...
	SchedulerName                  string
	AlgorithmSource                SchedulerAlgorithmSource
	HardPodAffinitySymmetricWeight int32
	Plugins                        *Plugins
	PluginConfig                   []PluginConfig
	// ...
}
```

And here is an example configuration:

```yaml
profiles:
  - schedulerName: 'default-scheduler'
    pluginConfig:
      - name: 'InterPodAffinity'
        args:
          hardPodAffinityWeight: <value>
```
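The registry-and-lookup flow described above can be pictured with a small sketch. It rests on a heavily simplified model. `Profile`, `buildRegistry` and `profileFor` are hypothetical names, not kube-scheduler's internal API. A profile is reduced to a scheduler name plus a list of plugin names, and the plugin names used are only illustrative. A pod picks its profile through `spec.schedulerName`, which the API server defaults to `default-scheduler` when it is left empty.

```go
package main

import "fmt"

// Profile is a hypothetical, heavily simplified stand-in for a scheduler
// profile: a scheduler name plus the names of the plugins it enables.
type Profile struct {
	SchedulerName string
	Plugins       []string
}

const defaultSchedulerName = "default-scheduler"

// buildRegistry indexes profiles by SchedulerName. When the configuration
// has no profiles, a single default profile is registered instead.
// Duplicate scheduler names are rejected.
func buildRegistry(profiles []Profile) (map[string]Profile, error) {
	if len(profiles) == 0 {
		profiles = []Profile{{SchedulerName: defaultSchedulerName}}
	}
	registry := make(map[string]Profile, len(profiles))
	for _, p := range profiles {
		if _, dup := registry[p.SchedulerName]; dup {
			return nil, fmt.Errorf("duplicate profile %q", p.SchedulerName)
		}
		registry[p.SchedulerName] = p
	}
	return registry, nil
}

// profileFor looks up the profile named in a pod's spec.schedulerName.
// ok is false when this scheduler has no such profile, i.e. the pod is
// meant for some other scheduler and is left alone.
func profileFor(registry map[string]Profile, schedulerName string) (Profile, bool) {
	p, ok := registry[schedulerName]
	return p, ok
}

func main() {
	registry, err := buildRegistry([]Profile{
		{SchedulerName: "default-scheduler", Plugins: []string{"InterPodAffinity"}},
		{SchedulerName: "batch-scheduler", Plugins: []string{"NodeResourcesMostAllocated"}},
	})
	if err != nil {
		panic(err)
	}
	p, ok := profileFor(registry, "batch-scheduler")
	fmt.Println(p.Plugins, ok) // [NodeResourcesMostAllocated] true
	_, ok = profileFor(registry, "some-other-scheduler")
	fmt.Println(ok) // false
}
```

Keeping the registry as a map keyed by scheduler name makes the per-pod lookup a single constant-time operation. This matters because the lookup happens for every pod taken from the queue.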

The feature is expected to reach beta in the next Kubernetes release and to become stable two releases after that. For more information about scheduler profiles, see the corresponding KEP.

Another new alpha feature is a default rule for spreading pods evenly (Even Pod Spreading). Until now, such rules were defined in the `PodSpec` and attached to individual pods; now a global configuration can also be set at the cluster level. Details are in the KEP.

At the same time, the Pod Topology Spread feature itself (feature gate `EvenPodsSpread`), which distributes pods evenly across a multi-zone cluster, has been promoted to beta.

In addition, Taint Based Eviction, which adds taints to nodes when certain conditions occur, has been declared stable. The feature first appeared back in K8s 1.8 and reached beta in 1.13.

Custom HPA scaling speed

For more than a year, an interesting feature called Configurable scale velocity for HPA has been simmering in the Kubernetes enhancements pipeline. The feature provides a configurable speed of horizontal scaling. (By the way, its development was initiated by our compatriot.) In the new release it has finally reached the first stage of public availability: an alpha version.

As you know, the Horizontal Pod Autoscaler (HPA) in Kubernetes scales the number of pods for any resource that supports the `scale` subresource, based on CPU usage or other metrics. The new feature lets you control how fast this scaling happens, in both directions. Until now, this could be regulated only to a very limited extent (see, for example, the cluster-wide `--horizontal-pod-autoscaler-downscale-stabilization-window` parameter).

The main motivation for a configurable scaling speed is the ability to treat cluster workloads according to their business importance. One application, such as the one processing the most critical traffic, could scale up as fast as possible and scale down slowly, since a new burst of load may follow. Other applications could scale according to other criteria, for example to save money.

The proposed solution adds a `behavior` object to the HPA configuration, which defines rules for scaling in both directions (`scaleUp` and `scaleDown`). For example, this configuration:

```yaml
behavior:
  scaleUp:
    policies:
      - type: percent
        value: 900%
```

...allows the number of currently running pods to grow by up to 900% at a time. That is, if the application starts as a single pod, it will grow as 1 → 10 → 100 → 1000 when scaling up is needed. For other examples and implementation details, see the KEP.

Nodes

Progress in hugepages support (the overall KEP for the feature): the alpha version adds support for these pages at the container level and the ability to request pages of different sizes.

The Node Topology Manager, designed to unify the approach to fine-grained allocation of hardware resources to different components in Kubernetes, has been promoted to beta.

PodOverhead, the mechanism for accounting for pod overhead introduced in K8s 1.16, has also been promoted to beta.

kubectl

An alpha version of the `kubectl debug` command (its KEP) has been added, building on the concept of ephemeral containers. These were first introduced in K8s 1.16 to simplify debugging in pods: a temporary (i.e., short-lived) container with the necessary debugging tools is launched in the target pod. As we have written before, this command is essentially the same as the existing kubectl-debug plugin (see our review of it). Here is what `kubectl debug` looks like:

```text
% kubectl help debug
Execute a container in a pod.

Examples:
  kubectl debug mypod --image=debian
  kubectl debug mypod --target=myapp

Options:
  -a, --attach=true: Automatically attach to container once created
  -c, --container='': Container name.
  -i, --stdin=true: Pass stdin to the container
      --image='': Required. Container image to use for debug container.
      --target='': Target processes in this container name.
  -t, --tty=true: Stdin is a TTY

Usage:
  kubectl debug (POD | TYPE/NAME) [-c CONTAINER] [flags] -- COMMAND [args...] [options]

Use "kubectl options" for a list of global command-line options (applies to all commands).
```

Another command, `kubectl diff`, has finally been declared stable. It first appeared in K8s 1.9 and reached beta in 1.13. As the name implies, it lets you compare configurations: it shows how the configuration you are about to apply differs from what is currently in the cluster. To mark its stabilization, the feature got its own KEP, and all the related documentation on the Kubernetes website has been updated.

In addition, the `kubectl --dry-run` flag now supports several values (`client`, `server`, `none`), which let you try out a command on the client side only or on the server side. Server-side support has been implemented for the major commands, including `create`, `apply`, `patch` and others.

Networking

The Ingress resource has begun moving from its current API group (`extensions/v1beta1`) to `networking.k8s.io/v1beta1`, to be followed by stabilization as `v1`. A series of changes (KEP) is planned for this. For now, as part of the beta version in K8s 1.18, Ingress has received two significant innovations:

- a new field, `pathType`, that specifies how the path is matched (`Exact`, `Prefix` or `ImplementationSpecific`; the behavior of the last one is determined by the `IngressClass`);
- a new resource, `IngressClass`, and a new (immutable) field, `Class`, in the `IngressSpec` definition that indicates which controller implements the Ingress resource. These changes replace the `kubernetes.io/ingress.class` annotation, which will be deprecated.
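The difference between `Exact` and `Prefix` can be sketched as follows. This assumes the semantics documented for Ingress `pathType`. `Exact` is a case-sensitive match of the whole path. `Prefix` compares `/`-separated path elements, so `/foo` matches `/foo/bar` but not `/foobar`, and a trailing slash is ignored. The `matches` and `pathElements` helpers are hypothetical and not taken from any Ingress controller. `ImplementationSpecific` is deliberately left unmodeled, because its behavior belongs to the controller.

```go
package main

import (
	"fmt"
	"strings"
)

// pathElements splits a URL path on "/" and drops empty elements, so that
// "/foo/", "/foo" and "foo" all yield ["foo"]. Simplification: repeated
// slashes ("//") are collapsed as well.
func pathElements(p string) []string {
	var out []string
	for _, e := range strings.Split(p, "/") {
		if e != "" {
			out = append(out, e)
		}
	}
	return out
}

// matches is a hypothetical helper reporting whether requestPath matches an
// Ingress rule path under the given pathType.
func matches(pathType, rulePath, requestPath string) (bool, error) {
	switch pathType {
	case "Exact":
		// Case-sensitive, whole-string comparison.
		return rulePath == requestPath, nil
	case "Prefix":
		// Element-wise prefix comparison: every rule element must equal
		// the request element at the same position.
		rule, req := pathElements(rulePath), pathElements(requestPath)
		if len(rule) > len(req) {
			return false, nil
		}
		for i := range rule {
			if rule[i] != req[i] {
				return false, nil
			}
		}
		return true, nil
	default:
		// ImplementationSpecific (or anything else) is up to the controller.
		return false, fmt.Errorf("pathType %q is not modeled here", pathType)
	}
}

func main() {
	for _, c := range [][3]string{
		{"Prefix", "/foo", "/foo/bar"},
		{"Prefix", "/foo", "/foobar"},
		{"Exact", "/foo", "/foo/"},
	} {
		ok, _ := matches(c[0], c[1], c[2])
		fmt.Printf("%s %s vs %s: %v\n", c[0], c[1], c[2], ok)
	}
}
```

Running it prints `true` for the first case and `false` for the other two. Comparing whole elements rather than raw string prefixes is what keeps `/foo` from accidentally capturing `/foobar`.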

Several networking features have advanced in maturity:

- the NodeLocal DNS Cache plugin, which improves DNS performance by running a DNS caching agent on cluster nodes, has become stable;
- a new `AppProtocol` field, which defines the application protocol for each service port (in the `ServicePort` and `EndpointPort` resources), has been added as an alpha feature;
- IPv6 support is considered mature enough to be promoted to beta;
- the EndpointSlices API (beta) is now enabled by default, acting as a more scalable and extensible replacement for the usual Endpoints.
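Here is why EndpointSlices scale better. A single Endpoints object holds every address of a service, so any pod change rewrites the whole object and re-sends it to every watcher, such as kube-proxy on each node. EndpointSlices instead shard the addresses into smaller objects, by default at most 100 endpoints per slice. The sketch below shows only that sharding step. `chunkEndpoints` is a hypothetical helper. The real controller also groups endpoints by port set and address type and tries to minimize churn between updates.

```go
package main

import "fmt"

// chunkEndpoints is a hypothetical helper that splits a service's endpoint
// addresses into groups of at most maxPerSlice, a simplified picture of how
// EndpointSlices shard what a single Endpoints object would hold. Port sets,
// address types and churn minimization are not modeled.
func chunkEndpoints(addresses []string, maxPerSlice int) [][]string {
	if maxPerSlice <= 0 {
		panic("maxPerSlice must be positive")
	}
	var slices [][]string
	for start := 0; start < len(addresses); start += maxPerSlice {
		end := start + maxPerSlice
		if end > len(addresses) {
			end = len(addresses)
		}
		slices = append(slices, addresses[start:end])
	}
	return slices
}

func main() {
	// 250 made-up pod IPs for a single service.
	addrs := make([]string, 250)
	for i := range addrs {
		addrs[i] = fmt.Sprintf("10.0.%d.%d", i/256, i%256)
	}
	// 100 mirrors the controller's default per-slice limit.
	for i, s := range chunkEndpoints(addrs, 100) {
		fmt.Printf("slice %d: %d endpoints\n", i, len(s))
	}
}
```

With 250 endpoints this yields slices of 100, 100 and 50. When one pod changes, only the slice containing it has to be updated and distributed, rather than a 250-entry object.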

Storage

The alpha version lays the groundwork for creating volumes pre-populated with data: Generic Data Populators (KEP). The proposed implementation relaxes validation of the `DataSource` field so that objects of arbitrary types can serve as data sources.

Before a volume is bind-mounted into a container, the ownership and permissions of all its files are changed according to the `fsGroup` value. This operation can break some applications (popular DBMSs, for example) and can take a long time on large volumes (over 1 TB). The new `PermissionChangePolicy` field for `PersistentVolumeClaimVolumeSource` lets you decide whether the ownership of the volume contents should be changed at all. The current implementation is alpha; details are in the KEP.

Secrets and ConfigMaps have a new `immutable` field that makes them immutable. These objects are typically consumed by pods as files, and any change to them propagates quickly (within about a minute) to every pod that mounts them. As a result, a bad update to a Secret or ConfigMap can take down the entire application.

The feature's authors point out that even when applications are updated the recommended way, via rolling updates, failures can still be caused by bad updates to existing Secrets/ConfigMaps. Propagating updates to running pods is also costly in terms of performance and scalability: each kubelet has to keep watching every unique Secret/ConfigMap.

In the current implementation, once a resource is marked as immutable, this cannot be undone. To change it, you have to delete and re-create the object, and also re-create the pods that use the deleted Secret. The feature is alpha; details are in the KEP.

Declared stable:

Other changes

Among other innovations in Kubernetes 1.18 (for a more complete list, see the CHANGELOG):

Dependency changes:

- CoreDNS version in kubeadm: 1.6.7;

- cri-tools 1.17.0;

- CNI (Container Networking Interface) 0.8.5, Calico 3.8.4;

- The version of Go used is 1.13.8.

What is deprecated?

- the API server no longer serves deprecated APIs. All resources under `apps/v1beta1` must move to `apps/v1`, and so must `daemonsets`, `deployments` and `replicasets` under `extensions/v1beta1`. `networkpolicies` and `podsecuritypolicies` from `extensions/v1beta1` move to `networking.k8s.io/v1` and `policy/v1beta1`, respectively;
- the `/metrics/resource/v1alpha1` metrics endpoint is no longer served; use `/metrics/resource` instead;
- all `kubectl run` generators have been removed except the one that creates pods.
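For manifests that still use the removed APIs, a lookup like the one below can flag which groupVersion to switch to. It is a sketch covering only the resources named above. Every `apps/v1beta1` resource, plus `daemonsets`, `deployments` and `replicasets` from `extensions/v1beta1`, goes to `apps/v1`. `networkpolicies` goes to `networking.k8s.io/v1`, and `podsecuritypolicies` goes to `policy/v1beta1`. The `gvResource` type and the `migrationTarget` helper are hypothetical, not part of any Kubernetes client library.

```go
package main

import "fmt"

// gvResource is a hypothetical key identifying a resource within an API
// groupVersion.
type gvResource struct {
	GroupVersion string
	Resource     string
}

// replacements lists extensions/v1beta1 resources no longer served by the
// API server and the groupVersion each should be migrated to. Only the
// resources named in the text are covered.
var replacements = map[gvResource]string{
	{"extensions/v1beta1", "daemonsets"}:          "apps/v1",
	{"extensions/v1beta1", "deployments"}:         "apps/v1",
	{"extensions/v1beta1", "replicasets"}:         "apps/v1",
	{"extensions/v1beta1", "networkpolicies"}:     "networking.k8s.io/v1",
	{"extensions/v1beta1", "podsecuritypolicies"}: "policy/v1beta1",
}

// migrationTarget returns the groupVersion a manifest should use instead of
// the given one. ok is false when the pair is not in this partial list.
func migrationTarget(groupVersion, resource string) (string, bool) {
	// Every resource under apps/v1beta1 moves to apps/v1.
	if groupVersion == "apps/v1beta1" {
		return "apps/v1", true
	}
	target, ok := replacements[gvResource{groupVersion, resource}]
	return target, ok
}

func main() {
	for _, r := range []gvResource{
		{"apps/v1beta1", "statefulsets"},
		{"extensions/v1beta1", "deployments"},
		{"extensions/v1beta1", "networkpolicies"},
	} {
		target, ok := migrationTarget(r.GroupVersion, r.Resource)
		fmt.Printf("%s %s -> %s (%v)\n", r.GroupVersion, r.Resource, target, ok)
	}
}
```

A `false` result only means the pair is outside this list, not that the API is still served. For example, `extensions/v1beta1` Ingress is not covered here.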
