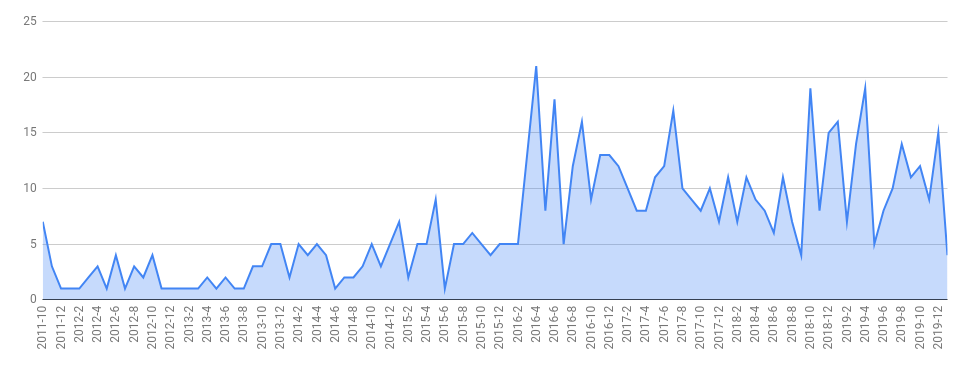

In the era of ubiquitous CI / CD, we are faced with a wide range of related tools, including CI systems. However, it was GitLab that became the closest for us, truly “native”. He gained notable popularity in the industry as a whole *. The developers of the product did not lag behind the growing interest in its use, regularly delighting the community of developers and DevOps engineers with new versions.

In the era of ubiquitous CI / CD, we are faced with a wide range of related tools, including CI systems. However, it was GitLab that became the closest for us, truly “native”. He gained notable popularity in the industry as a whole *. The developers of the product did not lag behind the growing interest in its use, regularly delighting the community of developers and DevOps engineers with new versions. GitLab repository month and tag aggregationGitLab is the case when active development brings many new and interesting features. If for potential users this is just one of the factors of choosing a tool, for existing ones, the situation is as follows: if you have not updated your GitLab installation in the last month, then with a high probability you missed something interesting. Including regularly emerging security updates.About the most significant - i.e. demanded by our DevOps engineers and customers - innovations in the latest releases of the Community edition of GitLab, and this article will be discussed.* 5 , «GitLab», : «, GitHub?». — , Google Trends 5- «gitlab» . «» , . , , «» .

GitLab repository month and tag aggregationGitLab is the case when active development brings many new and interesting features. If for potential users this is just one of the factors of choosing a tool, for existing ones, the situation is as follows: if you have not updated your GitLab installation in the last month, then with a high probability you missed something interesting. Including regularly emerging security updates.About the most significant - i.e. demanded by our DevOps engineers and customers - innovations in the latest releases of the Community edition of GitLab, and this article will be discussed.* 5 , «GitLab», : «, GitHub?». — , Google Trends 5- «gitlab» . «» , . , , «» .№1: needs

Thought dependencies- this is what you need? Probably, we were not the only ones who made the mistake of assigning this directive ... It is needed to list the previous jobs, the artifacts of which will be required. It is artifacts, and not dependence on the performance of the previous task.Suppose it happened that in one stage there are jobs that are not necessary to be performed, but for some reason there is no possibility or just desire to take them out to a separate stage (laziness is the engine of progress, but do not get carried away).Situation: As you can see, stage Deploy contains buttons for rolling out both production and stage, and job Selenium testsfor some reason is not executed. It's simple: he waits until all jobs from the previous stage are successfully completed. However, in the framework of the same pipeline, we do not need to deploy stage now to run the tests (it was pumped out earlier not within the tag). What to do? Then come to the rescue needs !We list only the necessary previous jobs to run our tests:

As you can see, stage Deploy contains buttons for rolling out both production and stage, and job Selenium testsfor some reason is not executed. It's simple: he waits until all jobs from the previous stage are successfully completed. However, in the framework of the same pipeline, we do not need to deploy stage now to run the tests (it was pumped out earlier not within the tag). What to do? Then come to the rescue needs !We list only the necessary previous jobs to run our tests: needs:

- To production (Cluster 1)

- To production (Cluster 2)

... and we get a job, which is automatically called after only the listed jobs are executed: Conveniently, right? But once I expected that the directive would work something like this

Conveniently, right? But once I expected that the directive would work something like this dependencies...No. 2: extends

Tired of reading rolls .gitlab-ci.yaml? Missing the code reuse principle? Then you have already tried and probably successfully brought yours .gitlab-ci.yamlto a state like this:.base_deploy: &base_deploy

stage: deploy

script:

- my_deploy_command.sh

variables:

CLUSTER: "default-cluster"

MY_VAR: "10"

Deploy Test:

<<: *base_deploy

environment:

url: test.example.com

name: test

Deploy Production:

<<: *base_deploy

environment:

url: production.example.com

name: production

variables:

CLUSTER: "prod-cluster"

MY_VAR: "10"

Sounds great? However, if you look closely, something catches your eye ... Why did we change in production not only variables.CLUSTER, but also prescribed a second time variables.MY_VAR=10? Should this variable be taken from base_deploy? It turns out that it shouldn't: YAML works so that, redefining what is received from the anchor, it does not expand the contents of the matching fields, but replaces it . Therefore, we are forced to list the variables already known to us in the matching paragraph.Yes, “expands” is the right word: this is exactly what the feature in question is called. ExtendsThey allow us not just to rewrite the field, as it happens with anchor, but to conduct a smart merge for it:.base_deploy:

stage: deploy

script:

- my_deploy_command.sh

variables:

CLUSTER: "default-cluster"

MY_VAR: "10"

Deploy Production:

extends: .base_deploy

environment:

url: production.example.com

name: production

variables:

CLUSTER: "prod-cluster"

Here in the final job Deploy Production there will be both a variable MY_VARwith a default value and an overridden one CLUSTER.It seems that this is such a trifle, but imagine: you have one base_deployand 20 circuits deployed similarly. They need to be passed on to others cluster, environment.namewhile preserving a certain set of variables or other matching fields ... This small pleasantness allowed us to reduce the deployment description of the set of dev-circuits by 2-3 times.No. 3: include

.gitlab-ci.yamlit still looks like a folding instruction for a vacuum cleaner in 20 languages (of which you only understand your native one) is it difficult when you need to deal with one of its sections without changing in the face of unknown jobs encountered on the way?A long-time programming friend will help include:stages:

- test

- build

- deploy

variables:

VAR_FOR_ALL: 42

include:

- local: .gitlab/ci/test.yml

- local: .gitlab/ci/build.yml

- local: .gitlab/ci/deploy-base.yml

- local: .gitlab/ci/deploy-production.yml

Those. Now we are boldly editing the deployment in production, while testers are busy modifying their file, which we may not even look at. In addition, this helps to avoid merge conflicts: it’s not always fun to understand someone else’s code.But what if we know the pipeline of our 20 projects along and across, can we explain the logic of each job from it? How will this help us? For those who have achieved enlightenment in code reuse and for all who have many similar projects, you can:A dozen of the same type of projects with different code, but deployed the same way - easily and without maintaining up-to-date CI in all repositories!An example of practical use includewas also given in this article .No. 4: only / except refs

- Comprehensive conditions, including variables and file changes.

- Since this is a whole family of functions, some parts began to appear in GitLab 10.0, while others (for example,

changes) began to appear in 11.4. - docs.gitlab.com/ce/ci/yaml/#onlyexcept-advanced

Sometimes it seems to me that this is not a pipeline listening to us, but we him. An excellent management tool are only/ except- now integrated. What does this mean?In the simplest (and perhaps the most pleasant) case, skipping stages:Tests:

only:

- master

except:

refs:

- schedules

- triggers

variables:

- $CI_COMMIT_MESSAGE =~ /skip tests/

In the example job, it runs only on a branch of the master, but cannot be triggered by a schedule or trigger (GitLab shares API calls and triggers, although this is essentially the same API). Job will not be executed if there is a skip tests passphrase in the commit message . For example, a typo in the README.mdproject or documentation has been fixed - why wait for the test results?“Hey, 2020 is outside!” Why should I each time explain to the iron box that it is not necessary to run tests when changing the documentation? ” And really: only:changesit allows you to run tests when changing files only in certain directories. For instance: only:

refs:

- master

- merge_requests

changes:

- "front/**/*"

- "jest.config.js"

- "package.json"

And for the reverse action - i.e. do not run - yes except:changes.No. 5: rules

This directive is very similar to the previous ones only:*, but with an important difference: it allows you to control the parameter when. For example, if you want not to completely remove the possibility of starting a job. You can simply leave the button, which, if desired, will be called independently, without launching a new pipeline or without making commit.# 6: environment: auto_stop_in

We learned about this opportunity right before the publication of the article and have not yet had enough time to try it out in practice, but this is definitely “the same thing” that was so expected in several projects.You can specify a parameter in GitLab environments on_stop- it is very useful when you want to create and delete environments dynamically, for example, to each branch. The job marked with k on_stopis executed, for example, when the merge of MR is in the master branch or when the MR is closed (or even just by clicking on the button), due to which the unnecessary environment is automatically deleted.Everything is convenient, logical, works ... if not for the human factor. Many developers merge MRs not by clicking a button in GitLab, but locally via git merge. You can understand them: it's convenient! But in this case, the logicon_stopit doesn’t work, we have accumulated forgotten surroundings ... This is where the long-awaited come in handy auto_stop_in.Bonus: temporary huts when there are not enough opportunities

Despite all these (and many others) new, demanded functions of GitLab, unfortunately, sometimes the conditions for fulfilling a job are simply impossible to describe within the framework of the currently available capabilities.GitLab is not perfect, but it provides the basic tools for building a dream pipeline ... if you are ready to go beyond the modest DSL, plunging into the world of scripting. Here are a few solutions from our experience, which in no way pretend to be ideologically correct or recommended, but are presented more to demonstrate different possibilities with a lack of built-in API functionality.Workaround No. 1: launch two jobs with one button

script:

- >

export CI_PROD_CL1_JOB_ID=`curl -s -H "PRIVATE-TOKEN: ${GITLAB_API_TOKEN}" \

"https://gitlab.domain/api/v4/projects/${CI_PROJECT_ID}/pipelines/${CI_PIPELINE_ID}/jobs" | \

jq '[.[] | select(.name == "Deploy (Cluster 1)")][0] | .id'`

- >

export CI_PROD_CL2_JOB_ID=`curl -s -H "PRIVATE-TOKEN: ${GITLAB_API_TOKEN}" \

"https://gitlab.domain/api/v4/projects/${CI_PROJECT_ID}/pipelines/${CI_PIPELINE_ID}/jobs" | \

jq '[.[] | select(.name == "Deploy (Cluster 2)")][0] | .id'`

- >

curl -s --request POST -H "PRIVATE-TOKEN: ${GITLAB_API_TOKEN}" \

"https://gitlab.domain/api/v4/projects/${CI_PROJECT_ID}/jobs/$CI_PROD_CL1_JOB_ID/play"

- >

curl -s --request POST -H "PRIVATE-TOKEN: ${GITLAB_API_TOKEN}" \

"https://gitlab.domain/api/v4/projects/${CI_PROJECT_ID}/jobs/$CI_PROD_CL2_JOB_ID/play"

And why not, if you really want to?Workaround No. 2: transfer changed in MR rb-files for rubocop inside the image

Rubocop:

stage: test

allow_failure: false

script:

...

- export VARFILE=$(mktemp)

- export MASTERCOMMIT=$(git merge-base origin/master HEAD)

- echo -ne 'CHANGED_FILES=' > ${VARFILE}

- if [ $(git --no-pager diff --name-only ${MASTERCOMMIT} | grep '.rb$' | wc -w |awk '{print $1}') -gt 0 ]; then

git --no-pager diff --name-only ${MASTERCOMMIT} | grep '.rb$' |tr '\n' ' ' >> ${VARFILE} ;

fi

- if [ $(wc -w ${VARFILE} | awk '{print $1}') -gt 1 ]; then

werf --stages-storage :local run rails-dev --docker-options="--rm --user app --env-file=${VARFILE}" -- bash -c /scripts/rubocop.sh ;

fi

- rm ${VARFILE}

There is no image inside .git, so I had to get out to check only the changed files.Note: This is not a very standard situation and a desperate attempt to comply with many conditions of the problem, the description of which is not included in the scope of this article.Workaround # 3: trigger to start jobs from other repositories when rolling out

before_script:

- |

echo '### Trigger review: infra'

curl -s -X POST \

-F "token=$REVIEW_TOKEN_INFRA" \

-F "ref=master" \

-F "variables[REVIEW_NS]=$CI_ENVIRONMENT_SLUG" \

-F "variables[ACTION]=auto_review_start" \

https://gitlab.example.com/api/v4/projects/${INFRA_PROJECT_ID}/trigger/pipeline

It would seem that such a simple and necessary (in the world of microservices) thing is rolling out another microservice into a freshly created circuit as a dependency. But it is not, therefore, an API call and an already familiar (described above) feature are required: only:

refs:

- triggers

variables:

- $ACTION == "auto_review_start"

Notes:- Job on trigger is designed to be tied to passing a variable to the API, similarly to example No. 1. It is more logical to implement this on the API with the job name passed.

- Yes, the function is in the commercial (EE) version of GitLab, but we do not consider it.

Conclusion

GitLab tries to keep up with the trends, gradually implementing features that are pleasant and in demand by the DevOps community. They are quite easy to use, and when the basic capabilities are not enough, they can always be expanded with scripts. And if we see that it turns out not so elegantly and conveniently in support ... it remains to wait for new releases of GitLab - or to help the project with our contribution .PS

Read also in our blog: