The brain is my old neighbor. Considering how much time we have spent together, and how much more we still have ahead of us, not taking an interest in it would be sheer tactlessness.

You walk around with a black box inside your skull, and this box understands that it is describing itself this way. That is very curious. If someone had handed me a piece of hardware with such features, I would have killed all my free time figuring out how it works. Actually, that is exactly what I am doing. The object of study is always with me, which is very convenient. The only pity is that you can't dig around inside.

The brain records and processes information. But how? Why is one thing stored for a long time while another is forgotten within a couple of days? How is this related to neurons?

Is it possible, using what neurobiology knows, to build a model that behaves like a real brain?

Why guess? Let's just try it.

DISCLAIMER:

There will be no full explanation of how the brain works here. This is a brief description of the basic principles. The purpose of this article is to build an approximate model. Sometimes it will not work, but that is better than having no model at all.

We can draw an analogy with the formula for friction in physics. It was obtained empirically and is not entirely accurate, but it is accurate enough to make estimates and to use in calculations.

All links below are for in-depth study. You will not need them to read the article. Almost everything is in English: the Russian-language Internet is poor in relevant information on the questions that interest us.

Where to begin?

Let's put aside memory models from psychology for now. Descriptions such as “short-term vs. long-term”, “working memory”, “levels-of-processing theory” and “the magic number 7 ± 2” will only confuse us at this point. Trying to understand the brain with their help is like trying to work out how a computer is built by looking at the monitor from an account with parental controls enabled. They will become useful only after we understand the basic principles.

We will go from the bottom up and start with neurons.

Neurons and connections

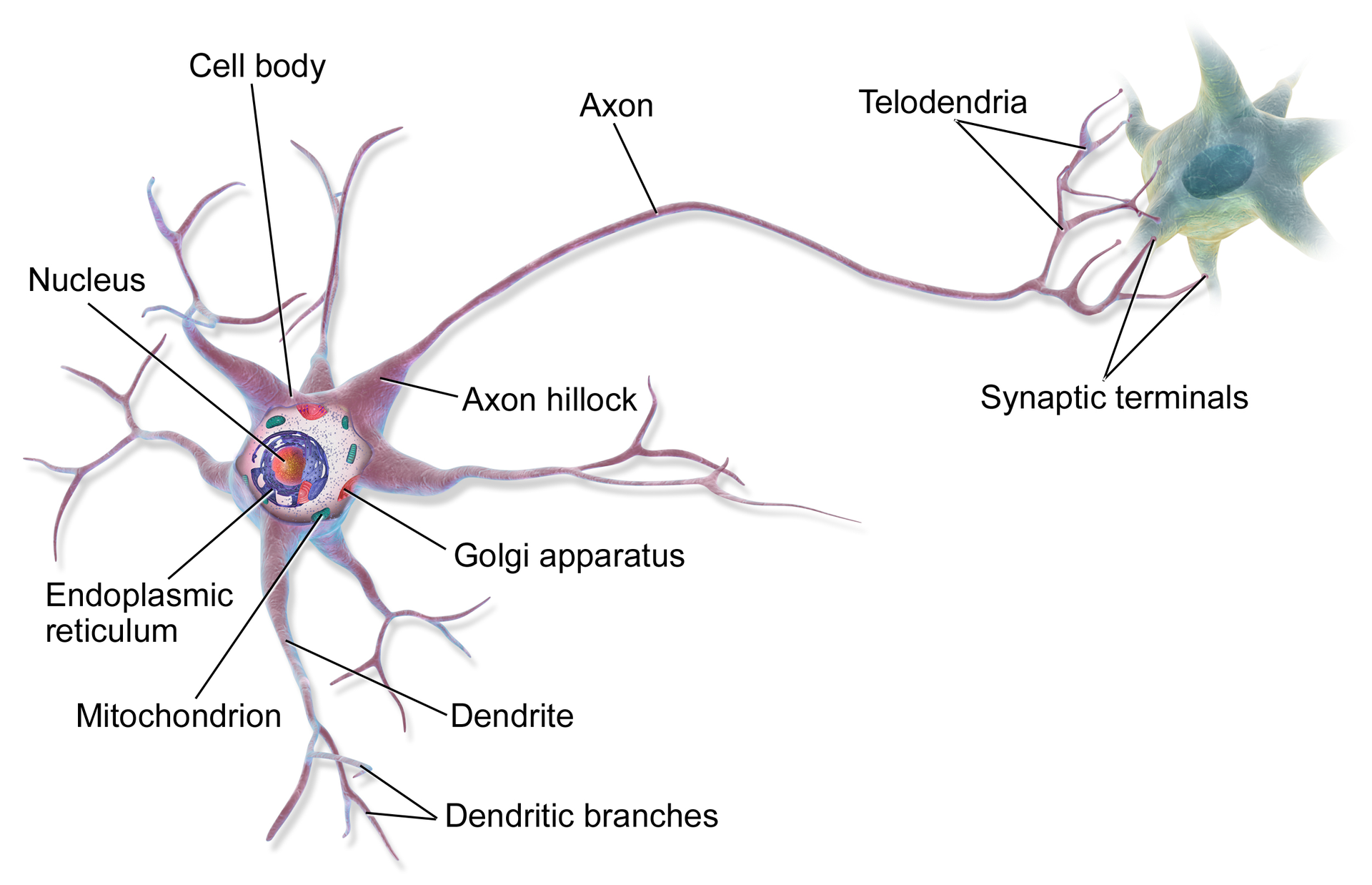

from: wiki

There are many types of neurons. They differ in the number of dendrites, in the neurotransmitters they use, and in a bunch of other parameters by which they can be classified. We will not wander into the jungle of implementation. Let's get down to the basics: signaling.

Described very roughly, the process looks like this:

1. There is a charged neuron containing ions. When its charge crosses the activation threshold (enough charged particles have accumulated), the ions begin to move along the axon.

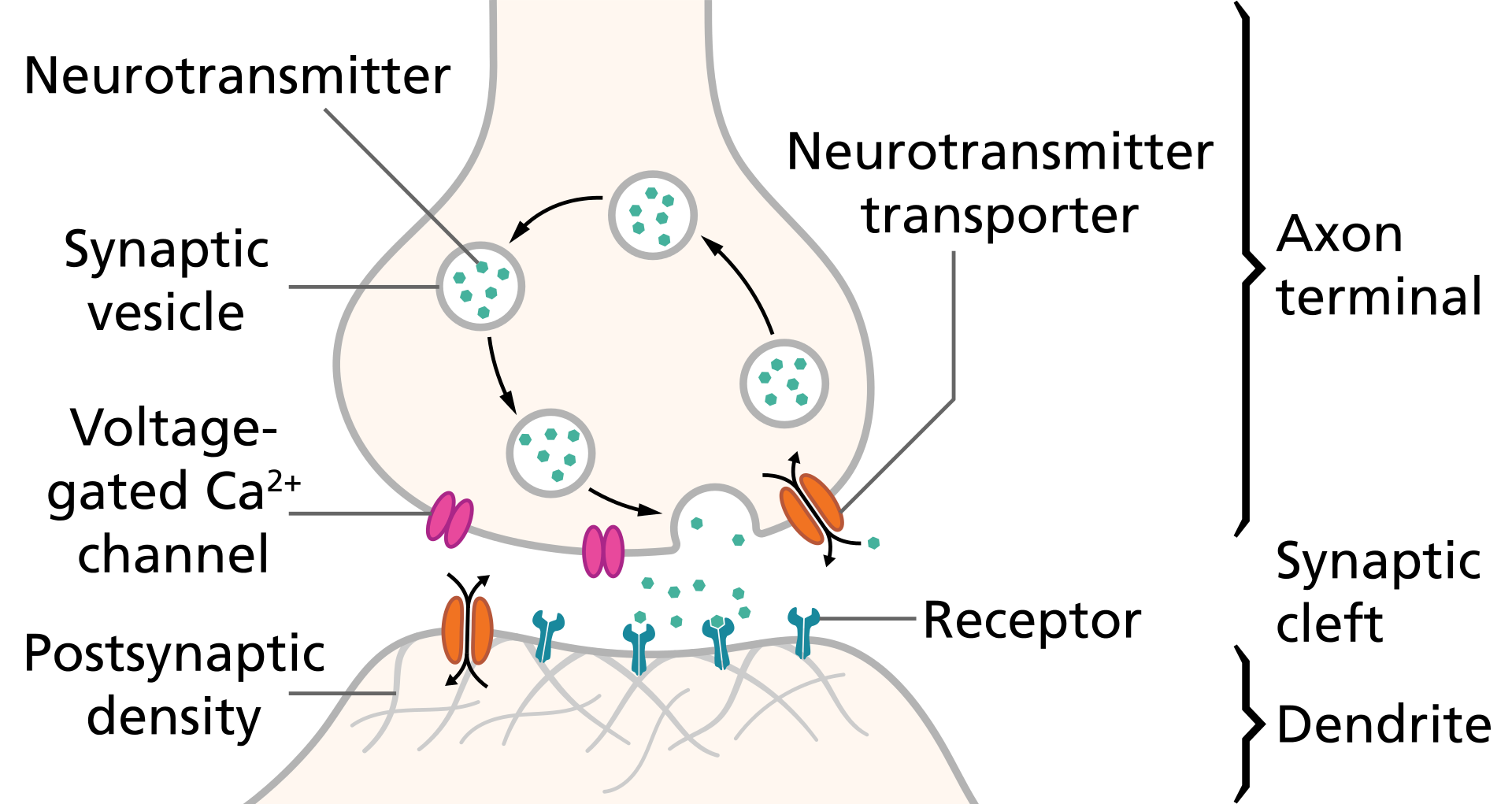

from: wiki

2. After reaching the end of the axon, the ions arrive at the synapse. Neurotransmitters are stored in the synapse, and the ions set them free.

from: wiki

3. Below is another neuron, which has receptors. They accept the released neurotransmitters and open channels through which ions charge the next neuron. In short, the neurotransmitter is a key. Once it is in the matching receptor lock, it opens the neuron for charging.

About substances, permitted and not so permitted: antidepressants and antipsychotics regulate the levels of neurotransmitters and thereby affect the activation of neurons. In effect, they change the strength of connections.

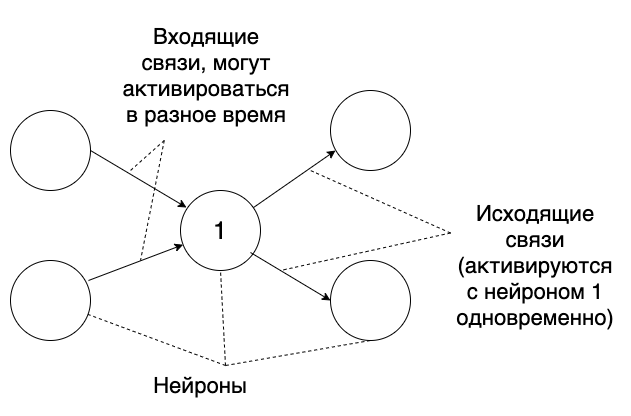

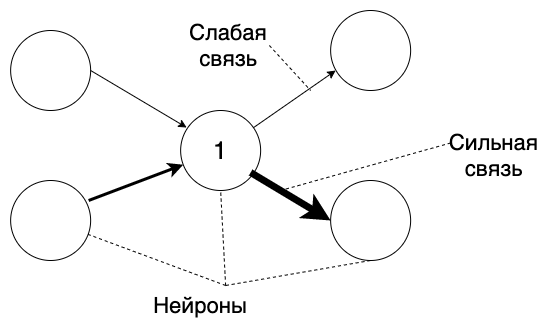

One neuron can receive signals from several others through its dendrites, and one axon can be connected to several neurons.

Now let's condense all of this:

- A neuron can activate and transmit a signal to another neuron.

- Another neuron, having received a signal, is charged, and approaches activation.

- One neuron can receive signals from several neurons.

- When a neuron is activated, it transmits a signal to all neurons associated with it.
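The four points above can be sketched in a few lines of Python. This is only a toy illustration: the `Neuron` class, the threshold of 1.0 and the signal size of 0.6 are assumptions made for the example, not biological values.

```python
SIGNAL = 0.6      # assumed charge passed along one connection
THRESHOLD = 1.0   # assumed activation threshold


class Neuron:
    """A circle in the picture: collects charge and fires past the threshold."""

    def __init__(self, name):
        self.name = name
        self.charge = 0.0
        self.targets = []          # neurons reached by this neuron's axon

    def connect(self, *others):
        self.targets.extend(others)

    def receive(self, amount, fired):
        self.charge += amount
        if self.charge >= THRESHOLD:
            self.charge = 0.0      # discharge after activation
            fired.append(self.name)
            # An activated neuron signals every neuron connected to it.
            # The toy network below has no loops, so the recursion ends.
            for target in self.targets:
                target.receive(SIGNAL, fired)


a, b, c, d, e = (Neuron(n) for n in "abcde")
a.connect(c)
b.connect(c)
c.connect(d, e)

fired = []
a.receive(1.0, fired)   # a fires; c is charged to 0.6 and stays silent
b.receive(1.0, fired)   # b fires; c reaches 1.2 and fires as well
print(fired)            # ['a', 'b', 'c']
```

One signal alone does not activate `c`, but signals from two neurons together do, after which `d` and `e` each move closer to their own threshold.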

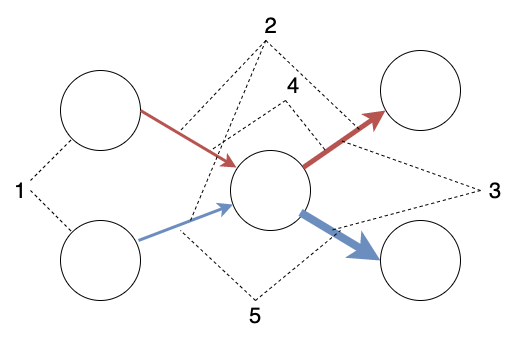

That is the same thing in terms of circles and arrows.

This is all very informative, but where is the data? How is information stored in neurons, and how is it read and written?

Storage and reading

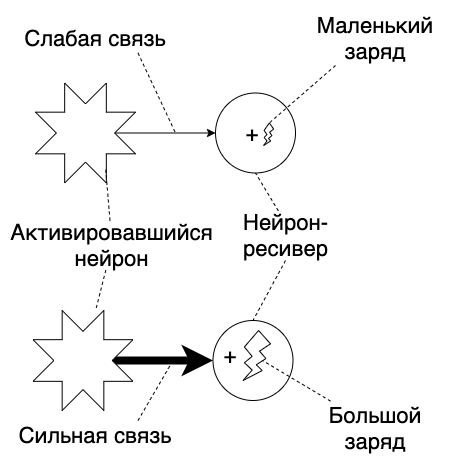

For storage and writing there is a mechanism called synaptic plasticity.

In simple terms it can be explained like this: connections between neurons have different “strengths”. The stronger the connection, the more charge the receiving neuron gets when the sending neuron is activated.

And now a point that may be a little difficult to grasp. The strength of connections is our data. You see this text, and that is the activation of neurons in your brain. The “activation pattern” it produces in our neural network is what we call “seeing”. The same goes for hearing, feeling, imagining, remembering, and so on. All of this is the activation of a specific sequence of neurons.

In other words, if you find the area of the visual cortex that is activated when we see a spoon, attach wires to it and switch on the current, the brain will see a spoon, and there is no getting away from it. “I see a spoon” = activation of neurons in the visual cortex caused by signals from photoreceptors in the eye.

Welcome to the real world, Neo. The spoon exists, photoreceptors exist, neurons exist, and all attempts to unsee something by an effort of will are doomed to failure. Although, no: you can close your eyes.

Which specific areas of the brain are activated depends on how the signals travel through the connections, and that is determined by the strength of the connections.

Let's add to our picture:

Information in the brain is stored in the form of connections of different strength between neurons.

This information is read through the activation of neurons. What the “activation pattern” looks like depends on the connections and their strength.
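To make “the strength of connections is the data” concrete, here is a minimal sketch. The neuron names, the weights and the threshold are all invented for illustration, and the rule that each neuron fires at most once is a simplification chosen for this example.

```python
from collections import deque


def activation_pattern(weights, stimulus, threshold=1.0):
    """Spread activation from the stimulated neurons; return the firing order."""
    charge = {name: 0.0 for name in weights}
    fired = []
    queue = deque(stimulus)
    while queue:
        source = queue.popleft()
        if source in fired:
            continue
        fired.append(source)
        for target, strength in weights[source].items():
            charge[target] += strength
            ready = charge[target] >= threshold
            if ready and target not in fired and target not in queue:
                queue.append(target)
    return fired


# weights[src][dst] is the assumed strength of the connection src -> dst.
weights = {
    "eye":   {"edge": 1.0, "shine": 1.0},
    "edge":  {"spoon": 0.6, "fork": 0.3},
    "shine": {"spoon": 0.6, "fork": 0.3},
    "spoon": {},
    "fork":  {},
}
print(activation_pattern(weights, ["eye"]))
# ['eye', 'edge', 'shine', 'spoon']

# Same neurons, different strengths: the same stimulus now "reads" a fork.
other = {
    **weights,
    "edge":  {"spoon": 0.3, "fork": 0.6},
    "shine": {"spoon": 0.3, "fork": 0.6},
}
print(activation_pattern(other, ["eye"]))
# ['eye', 'edge', 'shine', 'fork']
```

Nothing is stored inside the circles themselves. Changing only the numbers on the arrows changes what the same input activates.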

Anyone who has studied discrete mathematics will recognize a weighted digraph in this picture.

Okay, but how do you change the strength of connections and write data?

Writing

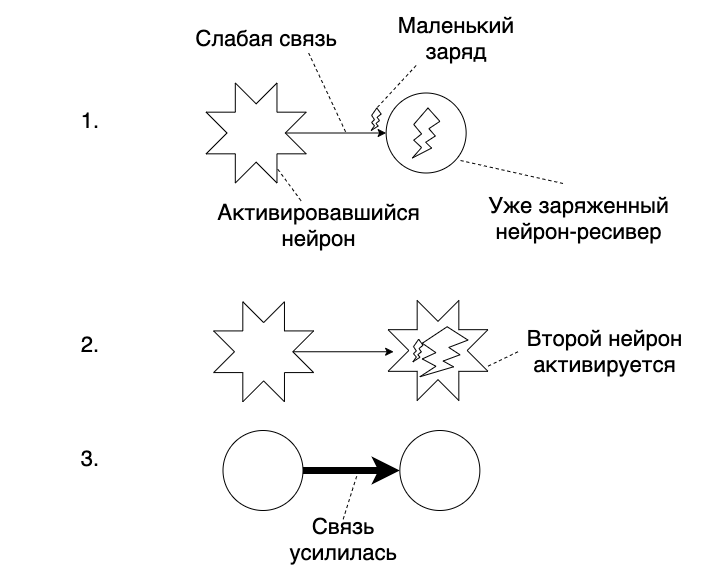

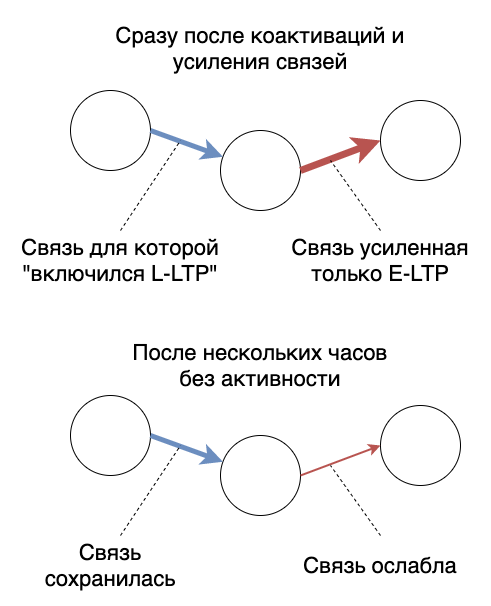

There is a thing called Hebbian theory, or Hebb's rule:

Neurons that fire together, wire together.

At the lower level this is provided by the E-LTP mechanism (Early Long-Term Potentiation, or LTP1).

It can be reformulated as follows:

If we activate one neuron and the next one activates after it, the connection between them becomes stronger.

Because we can activate neurons in the brain from the outside, for example through vision or hearing, we can write information to them. They will be activated together, and the strength of the connection will change. The next time, the information can be retrieved by activating the beginning of the “chain” of strong connections.

But it is not that simple. The problem is that we tend to forget things. This means that connections are not only strengthened but also weakened. Moreover, they degrade unevenly: some fade quickly, others last a very long time. How else can I explain that I do not remember my chemistry exam in grade 11 but do remember my birthday from the same period?

You could come up with a tricky system of closed activation loops and get a constantly maintained connection. But the real brain has a much simpler method, called Late Long-Term Potentiation, or L-LTP.

Instead of maintaining a connection through constant activation, the brain simply fixes its current state.

Okay, I went too far with “simply”. There are studies suggesting that the process is triggered by the synthesis of special proteins. There are other studies claiming that inhibiting protein synthesis does not affect L-LTP. After reading about this, I concluded that no one doubts the hypothesis that the state is fixed for a long period, but I could not work out the details of the process.

Fortunately, in our simple world of arrows and circles these details do not exist. For now, we just remember that connections are able to keep their state and not weaken over time.
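Here is a rough sketch of how strengthening, fading and fixing can coexist in the arrows-and-circles model. The `Connection` class, the gain of 0.25 and the halving of unfixed connections on every tick are illustrative assumptions, not measured quantities.

```python
class Connection:
    """An arrow in the picture, with a strength between 0 and 1."""

    def __init__(self, strength=0.25):
        self.strength = strength
        self.fixed = False   # becomes True once the state is "captured"

    def fire_together(self, gain=0.25):
        # Hebb's rule (E-LTP in the article): joint activation strengthens.
        self.strength = min(1.0, self.strength + gain)

    def fix(self):
        # Stand-in for L-LTP: the current state stops decaying.
        self.fixed = True

    def tick(self, decay=0.5):
        # An unfixed connection weakens as time passes.
        if not self.fixed:
            self.strength *= decay


exam = Connection()
birthday = Connection()
for _ in range(3):           # both memories are written the same way
    exam.fire_together()
    birthday.fire_together()

birthday.fix()               # only one of them gets fixed
for _ in range(10):          # time passes
    exam.tick()
    birthday.tick()

print(exam.strength)         # 0.0009765625
print(birthday.strength)     # 1.0
```

Both connections start out equally strong. Whether one survives depends only on whether it was fixed before it faded, which is the behavior the model needs in order to explain uneven forgetting.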

Summary

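Let's summarize the interim results of our short excursion into the world of neurobiology:

- There are neurons. In our picture they are circles. They accumulate charge and are activated when it exceeds a certain threshold.

- There are connections between neurons. In our picture they are arrows. When a neuron is activated, it passes a signal along its connections to the neurons it is linked to.

- Connections have different strengths. The strength of connections is the stored information, and it is read through the activation of neurons.

- Connections get stronger. When one neuron is activated and the next one is activated after it, the connection between them strengthens. This is Hebb's rule, provided by E-LTP.

- Connections get weaker over time, some quickly and some slowly, but their state can also be fixed for a long period. This is L-LTP.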

If you are interested in a more accurate neuron model and a list of the characteristic differences between biological neurons and their models in artificial neural networks (ANNs), see this article. In this post I have described only what I will need later.

This part of the study took 4 months. I read articles, fought my way through dozens of terms that were obscure to me, wandered into the wrong fields, and stumbled on outdated information about my question.

Level up. Subnets and objects

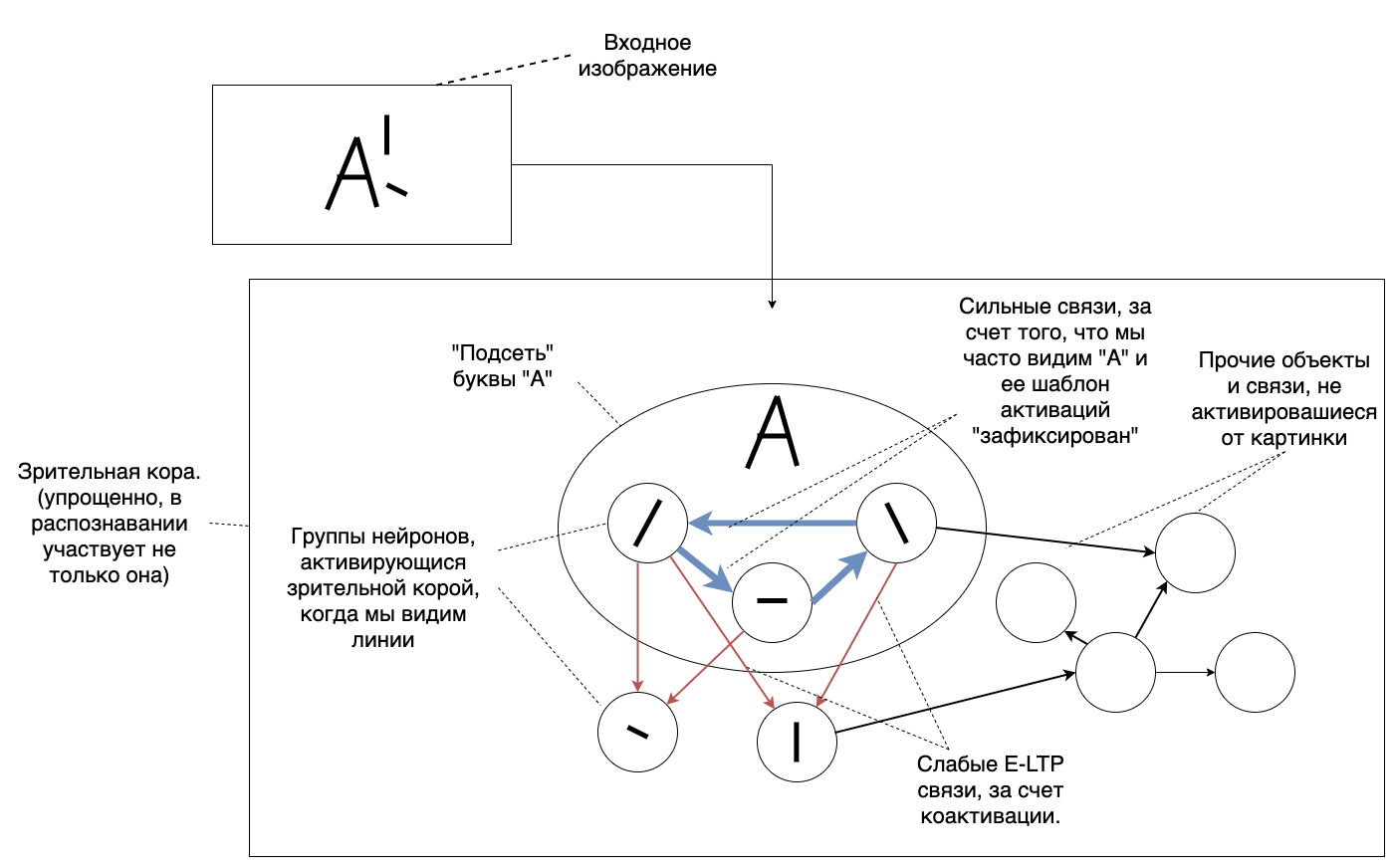

We now have a model of the processes that occur in the brain at the level of neurons. Unfortunately, we do not have a detailed description of the larger structures, the “subnets” of our brain. But we have a base on which to build one ourselves. We will now run experiments and collect information about the behavior of the brain, and then use the base model to construct an explanation of the experimental results. If we build it correctly, it will not only explain what we already know but also predict the results of further experiments.

The first thing I noticed was that we have the concept of an object, or a whole. In general, this is everything that can be counted with natural numbers: tables, chairs, houses, trees, leaves, brains... The brain clearly likes this concept; it is intuitive. But we know that the world does not consist of objects as we see them. The monitor you are reading this text from is not a solid object. We can break it down into components: it has pixels, it has a frame... Breaking it down further, we reach molecules and atoms. But atoms and molecules are not wholes either; they are composed of other particles.

So why does the brain like the concept of an object so much? Seriously, the best way to break it is to give it problems that are not expressed in whole numbers.

And I thought: what if the object-based perception of the world is explained by the very structure of our neural network? What if “object” is a word that describes the activation of a connected area? This would explain why we perceive a chair or a table as a whole: each has a single contour that stands out against the general background. Perhaps this causes the simultaneous activation of an entire subnet in the visual cortex?

Words and letters could be recognized in a similar way. Greatly simplified, in our picture this is a cluster of circles joined by strong arrows.

I formulated a hypothesis: there are areas in the brain with strong connections between neurons. Their activation gives a sense of “integrity” or “presence of an object”. Now it needs to be tested for robustness.

The first consequence is that a strongly connected subnet should be activated even by incomplete information. We must be able to complete a familiar pattern on our own. And vice versa: if there is no such pattern, the brain will not be able to restore it. Here I came across a couple of interesting articles about optical illusions and glitches in how vision processes information. Here is one of them. I also spent a lot of time making my friends and colleagues fill out questionnaires with missing letters. I divided the words with gaps into 3 categories:

- General concepts.

- Special terms that are known to the person undergoing the survey.

- Special terms from a narrow area unknown to the subject.

For example, I gave my programmer colleagues a questionnaire that included everyday words such as “tables” and “chairs”, words from the IT domain such as “patterns” and “hash tables”, and words from biology and genetics such as “polyadenylation” or “adenosine monophosphate”.

It turned out that people successfully fill in the gaps in familiar words and cannot do so with unfamiliar ones. This agreed well both with what I had read in other sources and with my hypothesis.

The same held for hearing. People recognized familiar speech perfectly even over a bad signal, but could not cope when they met an unfamiliar pattern.
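The questionnaire result can be mimicked with a toy strongly connected subnet. Everything here is an assumption made for illustration: one unit per letter position, a connection strength of 0.3 between every pair of units, and a threshold of 1.0.

```python
from itertools import permutations


def make_object(members, strength=0.3):
    """Strongly connected subnet: every member excites every other member."""
    return {(a, b): strength for a, b in permutations(members, 2)}


def complete(weights, active, threshold=1.0):
    """Activate missing units while the active ones push them past the threshold."""
    active = set(active)
    changed = True
    while changed:
        changed = False
        charge = {}
        for (src, dst), strength in weights.items():
            if src in active and dst not in active:
                charge[dst] = charge.get(dst, 0.0) + strength
        for unit, total in charge.items():
            if total >= threshold:
                active.add(unit)
                changed = True
    return active


# A familiar word stored as one unit per letter position: '0t', '1a', ...
table = [f"{i}{ch}" for i, ch in enumerate("table")]
weights = make_object(table)

one_gap = set(table) - {"2b"}                  # "ta_le"
print(sorted(complete(weights, one_gap)))      # the gap is filled in

unknown = {f"{i}{ch}" for i, ch in enumerate("poly")}
print(sorted(complete(weights, unknown)))      # nothing to complete it with
```

With one letter missing, the four active units together push the fifth past the threshold. A cue that matches no stored subnet, or one with too many gaps, stays exactly as it was given.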

I tested my assumption for 3 months, and it worked surprisingly well.

Feed a strongly connected pattern to the input, and people say that it evokes a sense of a whole and describe it as one object. Break the order, trying to create a different activation pattern, and the whole splits into parts and becomes several objects.

For example: “Unified”, “Field”, “Theory” / “Field”, “Theory”, “Unified”. (I spent 7 minutes trying to find an example in Russian. Who came up with the idea of making words agree with each other through their form? It is easier in English: Special Relativity / Relativity Special, Einstein Field Equations / Field Equations Einstein.)

So I arrived at Hypothesis 1:

There are strongly connected networks of neurons in the brain. The activation of such a network gives rise to the sense of “one object” or “a whole”. New objects are written to memory by creating a new strongly connected area.

In other words, I believe that the ability to distinguish between objects is provided by connectivity and by the time delay of activation.

P.S. I should add that there is most likely a limit on the size of a subnet. No matter how well you learn a poem, the whole text will not become one object; it will be a sequential activation along a chain.

Pros:

- Explains the recovery of information from incomplete data and the existence of optical illusions through the mechanism of activating a subnet as a whole.


- The list goes on.

Cons:

It is unclear exactly how the “sense of integrity” arises. How does the part of the brain that we perceive as the Self get the message that another part has “closed the gestalt”?

Falsification criteria:

This hypothesis goes in the trash if:

- Neurons do not form stable, highly connected subnets.

- It can be shown experimentally that the ability to “single out individual objects” is unrelated to the activation of the subnets from the previous item.

- An explanation appears that covers every item in the list of pros using fewer entities. It must still reduce to neurons or to other objects that really exist in the brain.

- Any other method of formal or experimental refutation. Logical contradictions, consequences that are not confirmed, etc.

Falsification bounty program:

The hypothesis takes part in a critical-thinking reward program: $50 per rebuttal.

From here on I will call “strongly connected subnets of neurons” simply “objects”. I am too lazy to write three words. All psychological theories of memory operate on precisely this concept, and in everyday use it means “that which can be singled out as a whole”.

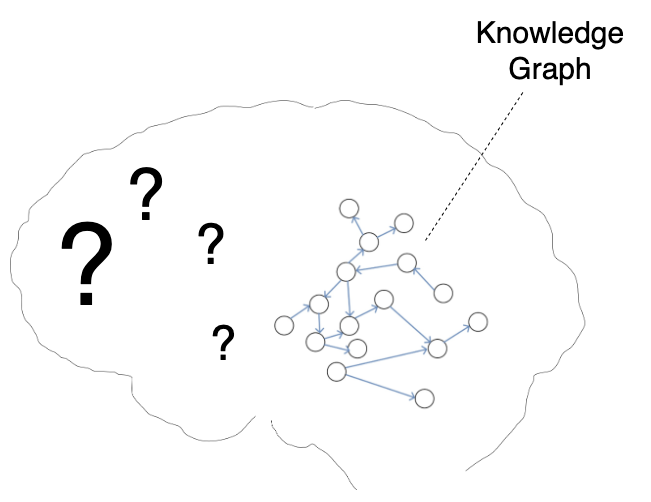

As you might expect, objects are connected to other objects. If the result is a subnet that activates instantly, we will say that a new object has formed. If the connections are not strong enough, or there are too many sub-objects for instant activation, I will call this configuration a “model”.

I propose to call the entire set of objects and the connections between them the Knowledge Graph, and to depict it in the article's lead image (KDPV).

Summary

We looked at how neurons are arranged and built a mathematical model of them (yes, arrows and circles are graph theory). We touched lightly on how antidepressants and antipsychotics work in the brain: they regulate the levels of neurotransmitters and thus affect the activation of neurons (in effect, they change the strength of connections). We learned about Hebb's rule (neurons that fire together, wire together) and the E-LTP mechanism, which is responsible for short-term memory. We looked at how the brain solves the problem of long-term memorization: by fixing the strength of connections through L-LTP.

Based on our model, we predicted the existence of subnets of neurons with strong connections.

With the help of this assumption we tried to explain some of the effects we observe: optical illusions, the completion of objects from incomplete information, and the existence of the sensation of “one object”. Oddly enough, it worked. We were able to use the same mechanism to compose more complex structures, namely models and complex objects. And that works too.

It seems to me that this is a good intermediate result, but so far we have more questions than answers:

- Why is memory not used “in its entirety”? Where is instant access to everything we know? In other words, why do we have to recall things, and how does that work?

- At what point does L-LTP turn on and the information goes into long-term memory?


We will talk about them in the following articles.

P.S. If you have questions about any part, I can go into more detail in the comments or write a follow-up article. This one is already close to a longread; with any more detail it would become a whole book, and I am not sure that fits the Habr format.

If you have suggestions about the style of presentation, I will be glad to hear them.

License: CC BY-NC-ND 4.0