There are several ways to debug Celery "adequately" under Windows, for example by starting the worker with the single-process solo pool:

    celery worker --app=demo_app.core --pool=solo --loglevel=INFO
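If the same project has to start on both Windows and Unix machines, the pool can be chosen by a small launcher script. The sketch below only builds the argument list and does not run anything. The helper name `build_worker_argv` is hypothetical, and the `prefork` fallback assumes Celery's default pool on non-Windows systems.

```python
import sys


def build_worker_argv(app="demo_app.core", platform=sys.platform):
    """Build a `celery worker` command line (hypothetical helper).

    On Windows the single-process `solo` pool is selected;
    elsewhere the `prefork` pool is used.
    """
    pool = "solo" if platform.startswith("win") else "prefork"
    return ["celery", "worker", f"--app={app}", f"--pool={pool}", "--loglevel=INFO"]


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch has no side effects.
    print(" ".join(build_worker_argv()))
```

The resulting list can be passed to `subprocess.run` if the worker should actually be started from the script.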

In practice, though, normal development calls for a Unix system. If you cannot run one natively, there is still a way out: Docker, or WSL. With a "cool" IDE such as PyCharm, WSL makes things more complicated: when the interpreter comes from WSL, you have to update the project skeletons manually after every pip install because of indexing problems.

With Docker, everything is different. For convenient management we need Windows 10 Pro, since the Home edition does not support the virtualization it relies on. Now install and test Docker. Once it is running, the corresponding icon appears in the tray.

Next, we create and run a Django project. Here I am using version 2.2. The structure will look similar to this:

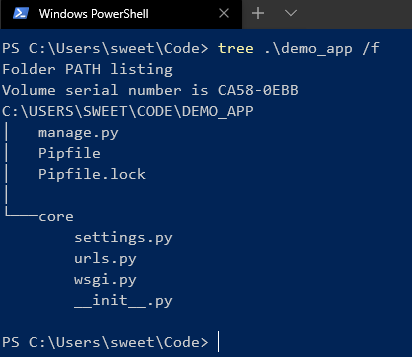

After that, we install Celery, with Redis as the broker. Now add some code to check:

    # core/settings.py
    CELERY_BROKER_URL = 'redis://demo_app_redis:6379'
    CELERY_ACCEPT_CONTENT = ['json']
    CELERY_TASK_SERIALIZER = 'json'

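    # celery.py, next to settings.py
    from __future__ import absolute_import, unicode_literals

    import os

    from celery import Celery

    # Make sure Django settings are importable before the app is configured.
    os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'core.settings')

    app = Celery('core')
    # Read every setting that starts with CELERY_ from the Django settings.
    app.config_from_object('django.conf:settings', namespace='CELERY')
    # Look for task modules in all installed Django applications.
    app.autodiscover_tasks()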

    # __init__.py of the same package
    from __future__ import absolute_import, unicode_literals

    # Load the Celery app whenever Django starts.
    from .celery import app as celery_app

    __all__ = ('celery_app',)
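The `namespace='CELERY'` argument means that only settings carrying the `CELERY_` prefix are handed to Celery, under their lowercase option names (`CELERY_BROKER_URL` becomes `broker_url`). The following is a rough illustration of that mapping, not Celery's actual implementation; the function name `celery_options` is hypothetical.

```python
def celery_options(settings, namespace="CELERY"):
    """Return {option_name: value} for settings that start with the namespace prefix."""
    prefix = namespace + "_"
    return {
        key[len(prefix):].lower(): value
        for key, value in settings.items()
        if key.startswith(prefix)
    }


if __name__ == "__main__":
    django_settings = {
        "DEBUG": True,  # ignored: no CELERY_ prefix
        "CELERY_BROKER_URL": "redis://demo_app_redis:6379",
        "CELERY_ACCEPT_CONTENT": ["json"],
        "CELERY_TASK_SERIALIZER": "json",
    }
    print(celery_options(django_settings))
```

A setting written without the prefix, such as a bare `BROKER_URL`, would simply be skipped in this model, which is a common reason for a worker falling back to its default broker.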

Add a new Django application to hold our tasks and create a new file in it:

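    from datetime import timedelta

    from celery.task import periodic_task  # part of the Celery 4.x API


    # Runs every 5 seconds once the beat scheduler is started (the -B flag).
    @periodic_task(run_every=timedelta(seconds=5), name='hello')
    def hello():
        print("Hello there")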

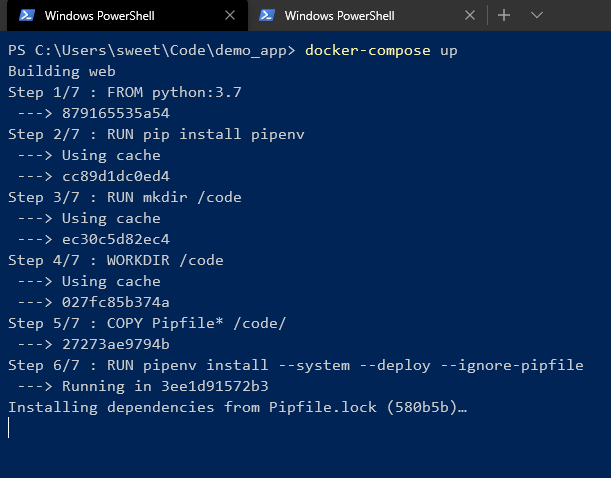
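The `periodic_task` decorator comes from the `celery.task` module, which was removed in later Celery releases (5.x). A possible alternative, sketched here as an assumption rather than the setup used in this article, is to declare the schedule in the settings through the `beat_schedule` option, written as `CELERY_BEAT_SCHEDULE` under the `CELERY` namespace. It assumes the task is registered under the name 'hello' with a regular task decorator; the entry label is arbitrary.

```python
from datetime import timedelta

# Settings fragment: run the task registered as 'hello' every 5 seconds.
CELERY_BEAT_SCHEDULE = {
    "hello-every-5-seconds": {          # arbitrary label for this schedule entry
        "task": "hello",                # must match the registered task name
        "schedule": timedelta(seconds=5),
    },
}
```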

Next, create a Dockerfile and docker-compose.yml in the root of the project:

    # Dockerfile
    FROM python:3.7
    RUN pip install pipenv
    RUN mkdir /code
    WORKDIR /code
    COPY Pipfile* /code/
    RUN pipenv install --system --deploy --ignore-pipfile
    ADD core /code/

    # docker-compose.yml
    version: '3'

    services:
      redis:
        image: redis
        restart: always
        container_name: 'demo_app_redis'
        command: redis-server
        ports:
          - '6379:6379'

      web:
        build: .
        restart: always
        container_name: 'demo_app_django'
        command: python manage.py runserver 0.0.0.0:8000
        volumes:
          - .:/code
        ports:
          - '8000:8000'

      celery:
        build: .
        container_name: 'demo_app_celery'
        command: celery -A core worker -B
        volumes:
          - .:/code
        links:
          - redis
        depends_on:
          - web
          - redis

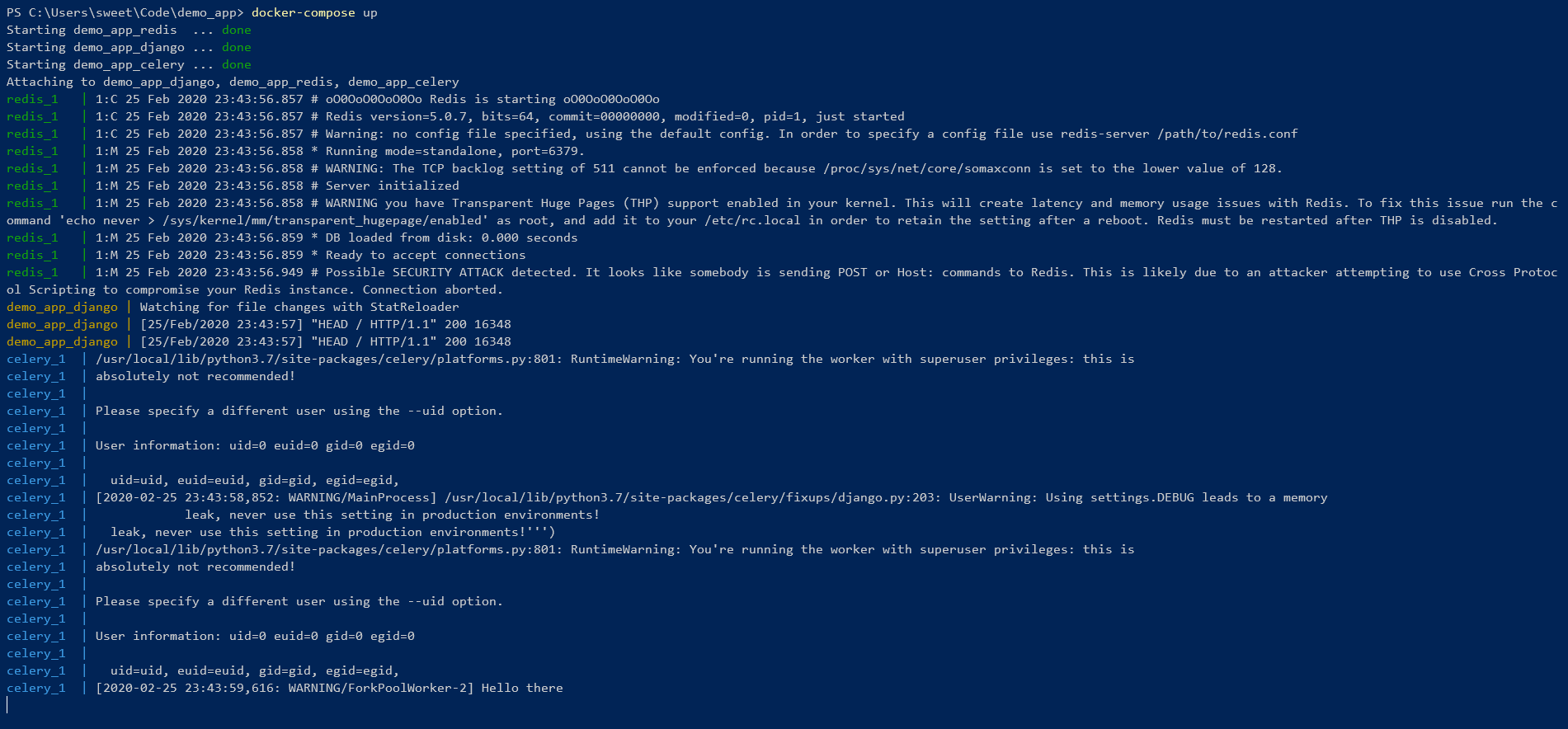
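Note that `depends_on` only controls the order in which containers are started; it does not wait until Redis actually accepts connections. Below is a minimal sketch of a readiness check that could be run inside the `celery` container, using only the standard library. The helper names `parse_broker_url` and `broker_is_reachable` are hypothetical, and the default port 6379 is assumed when the URL does not specify one.

```python
import socket
from urllib.parse import urlparse


def parse_broker_url(url):
    """Split a redis:// URL into (host, port); 6379 is the default Redis port."""
    parts = urlparse(url)
    return parts.hostname, parts.port or 6379


def broker_is_reachable(url, timeout=1.0):
    """Return True if a TCP connection to the broker can be opened."""
    host, port = parse_broker_url(url)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolved host names.
        return False


if __name__ == "__main__":
    url = "redis://demo_app_redis:6379"
    print(url, "reachable:", broker_is_reachable(url))
```

The host name `demo_app_redis` resolves only inside the Compose network; from the Windows host, the published port makes the same check work against `redis://localhost:6379`.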

And start! Wait while pipenv installs all the dependencies. In the end, you should see something like this:

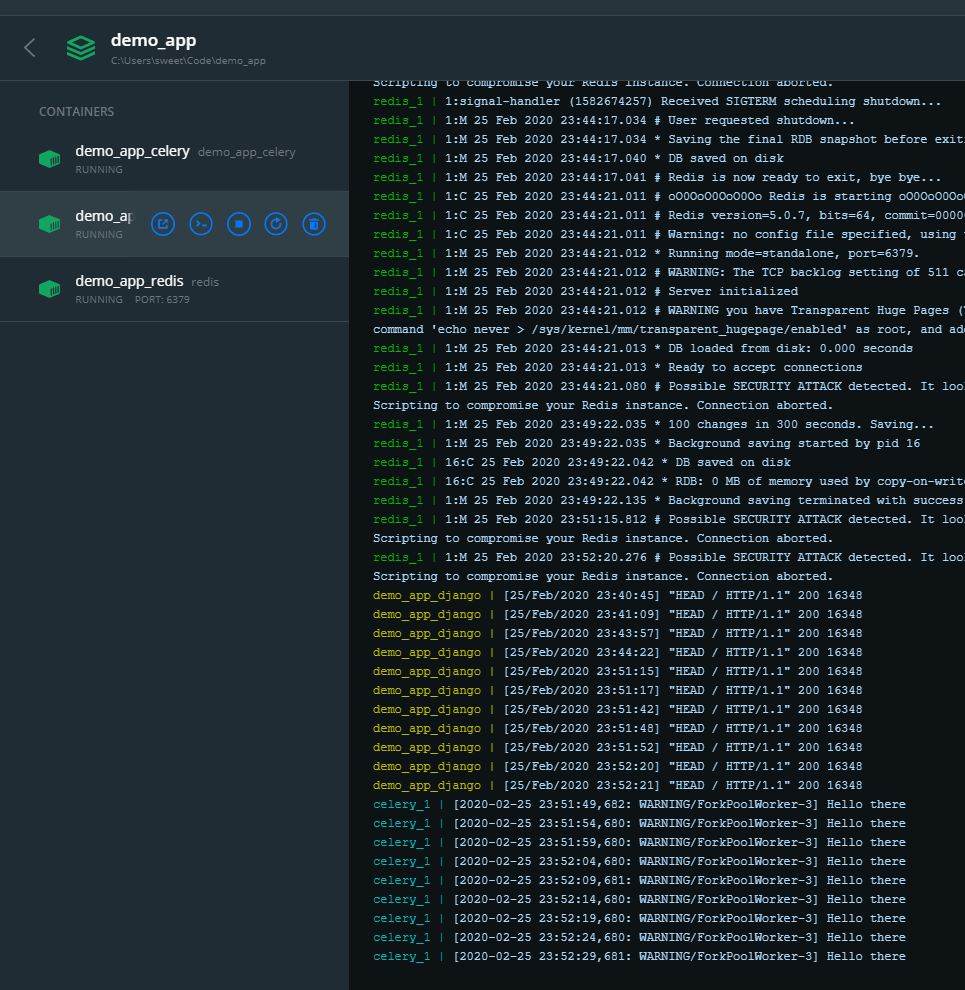

That means everything works! You can manage the containers from the command line:

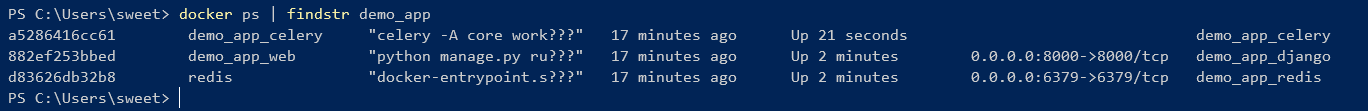


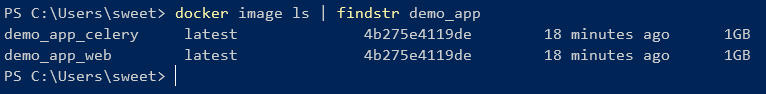

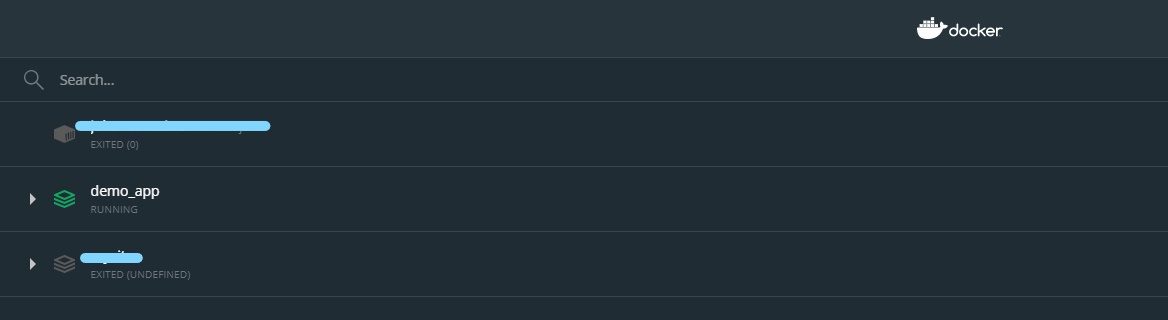

As you can see, the 2 images that were created from one Dockerfile have the same ID. You can also manage containers using the GUI:


In this way, you can easily start, stop, restart, or delete a container.

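Returning to the identical image IDs noted earlier: a Docker image ID is a SHA-256 digest derived from the image's content, so two services built from the same Dockerfile and context end up pointing at the same image. The sketch below is a simplified model of that idea, not Docker's real algorithm; the `image_id` function and the reduced configuration dictionary are hypothetical.

```python
import hashlib
import json


def image_id(config):
    """Simplified model: the ID is the SHA-256 of the serialized image config."""
    payload = json.dumps(config, sort_keys=True).encode("utf-8")
    return "sha256:" + hashlib.sha256(payload).hexdigest()


if __name__ == "__main__":
    # Hypothetical, heavily reduced image configuration.
    config = {"base": "python:3.7", "workdir": "/code"}
    web = image_id(config)      # image built for the web service
    celery = image_id(config)   # image built for the celery service
    print(web == celery)
```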