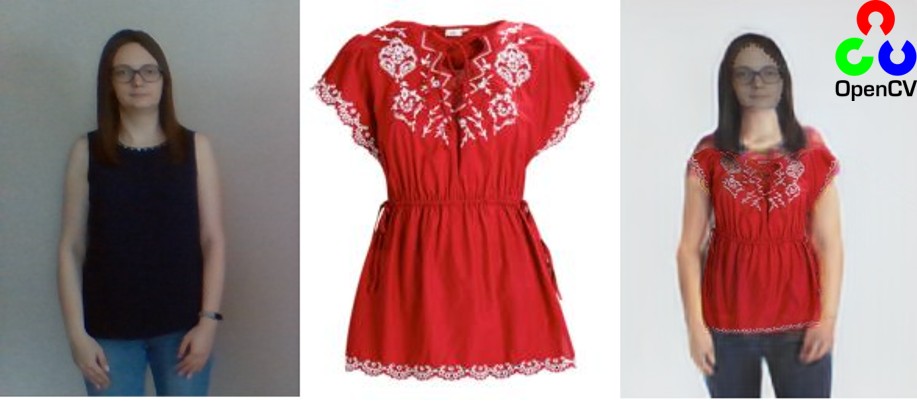

Have you ever liked something in an online store but hesitated to buy it without trying it on? Some stores do let you try clothes on after ordering and before paying. However, statistics show that while the share of clothing and footwear bought online grows every year, so does the share of returns, which reaches 50–70%. That means huge logistics costs, and a virtual fitting room could reduce them significantly. Imagine uploading your photo, choosing a garment, and seeing it transferred onto your image. Virtual fitting rooms for shoes already exist and work quite well. Some time ago we became curious: what about clothes? Such work exists too, but it is much less successful. For many projects there is nothing beyond the paper, and a working example is something one can only dream of. We decided to fix this and added support for one of these networks to the OpenCV library. You can see the result in the virtual_try_on.py sample.

The result is not perfect, but for this field it is considered quite good.

If you want to know how the virtual fitting room works and what difficulties we ran into while integrating the model into OpenCV, read on!

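In 2019 we turned to CP-VTON. Unlike approaches that need 3D body models or 3D scans, it works with ordinary 2D images, and the authors' code is available on GitHub. As its full name (Characteristic-Preserving Virtual Try-On Network) suggests, CP-VTON tries to keep the characteristics of the garment, such as its texture, logos, and text.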


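CP-VTON consists of two modules: GMM (Geometric Matching Module), which warps the garment to fit the person's pose and body shape, and TOM (Try-On Module), which generates the final image of the person wearing it.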

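Both modules receive a person representation and an image of the garment. GMM outputs the garment warped to the person; TOM takes the GMM result and produces the final image. The ground truth for GMM is the garment as it is actually worn in the photo, and the ground truth for TOM is the original photo of the person wearing it. The model was trained on the VITON dataset, which consists of pairs of a garment image and a photo of a person wearing that garment. Because the person in a training photo is already wearing the target garment, the person representation must not contain information about the clothes: it is built only from the pose, the body shape, and the head region. GMM is trained first, and TOM is then trained on the warped garments produced by GMM.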

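To build the person representation we need the pose and a segmentation of the body into parts. The pose keypoints are estimated with OpenPose. For human parsing we use LIP_JPPNet, which OpenCV already supports; see the human_parsing.py sample.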
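OpenPose returns 18 keypoints for the COCO body model, and CP-VTON's person representation encodes each keypoint as a separate channel containing a small filled square around the point. Below is a minimal NumPy sketch of that encoding. It assumes an 11x11 square (radius 5) on a 256x192 canvas; the function name and the convention that missing keypoints are marked with negative coordinates are ours, not taken from the sample.

```python
import numpy as np


def pose_to_heatmaps(keypoints, height=256, width=192, radius=5):
    """Encode keypoints as one binary channel per point.

    keypoints: sequence of (x, y) pixel coordinates, shape (N, 2).
    Points with negative coordinates are treated as not detected
    (a convention assumed for this sketch).
    radius=5 draws an 11x11 square around each point (assumed value).
    """
    keypoints = np.asarray(keypoints, dtype=np.float32)
    maps = np.zeros((len(keypoints), height, width), dtype=np.float32)
    for i, (x, y) in enumerate(keypoints):
        if x < 0 or y < 0:
            continue  # keypoint missing: leave its channel empty
        x, y = int(round(float(x))), int(round(float(y)))
        # clip the square to the image borders
        x0, x1 = max(x - radius, 0), min(x + radius + 1, width)
        y0, y1 = max(y - radius, 0), min(y + radius + 1, height)
        maps[i, y0:y1, x0:x1] = 1.0
    return maps
```

A keypoint near a corner produces a clipped square, and a missing keypoint produces an all-zero channel, so the number of channels always matches the number of keypoints.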

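The person representation therefore consists of three parts: a pose map with one channel per keypoint, a blurred mask of the body shape, and the head region (face and hair), which has to stay unchanged. Together they describe the person without revealing what they are wearing.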


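GMM aligns the garment with the person. Two convolutional encoders extract features from the person representation and from the garment, a correlation layer compares every position of one feature map with every position of the other, and a regression network predicts the parameters of a thin-plate spline (TPS) transformation from this correlation. The TPS transformation is then applied to the garment image. GMM is trained with an L1 loss between the warped garment and the garment as worn in the photo.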
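The matching step of GMM compares every position of the person features with every position of the garment features. Here is a minimal NumPy sketch of such a correlation layer. It assumes both feature maps have the same shape (C, H, W) and L2-normalizes each feature vector first; the function name is ours, and the ordering of the output channels may differ from the reference CP-VTON implementation.

```python
import numpy as np


def feature_correlation(feat_a, feat_b, eps=1e-6):
    """Correlation volume between two feature maps of shape (C, H, W).

    Returns an array of shape (H*W, H, W): channel k holds the cosine
    similarity between position k of feat_b and every position (i, j)
    of feat_a.
    """
    c, h, w = feat_a.shape
    # L2-normalize each feature vector along the channel axis
    a = feat_a / (np.linalg.norm(feat_a, axis=0, keepdims=True) + eps)
    b = feat_b / (np.linalg.norm(feat_b, axis=0, keepdims=True) + eps)
    a = a.reshape(c, h * w)  # (C, HW)
    b = b.reshape(c, h * w)  # (C, HW)
    corr = b.T @ a           # (HW_b, HW_a): all pairwise dot products
    return corr.reshape(h * w, h, w)
```

The regression network then reads this (H*W)-channel volume like an ordinary feature map, which is why the correlation is reshaped back to a spatial layout.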

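TOM is based on Unet. It takes the person representation concatenated with the warped garment and produces two outputs: a rendered image of the person and a composition mask. The mask decides, pixel by pixel, whether the final image takes the warped garment or the rendered person, which helps preserve fine garment details. In the decoder, feature maps are enlarged with Upsample layers followed by convolutions.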
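The final image is assembled from TOM's two outputs. A minimal sketch of that blending step, assuming the rendered person and the warped garment are (3, H, W) arrays and the composition mask has values in [0, 1] (as we understand the reference code, it comes out of a sigmoid); the function name is ours.

```python
import numpy as np


def compose_try_on(rendered, warped_cloth, mask):
    """Blend TOM outputs: the mask selects the warped garment,
    (1 - mask) selects the rendered person.

    rendered, warped_cloth: (3, H, W) float arrays
    mask: (H, W) or (1, H, W) float array with values in [0, 1]
    """
    mask = np.clip(mask, 0.0, 1.0)
    if mask.ndim == 2:
        mask = mask[np.newaxis]  # broadcast over color channels
    return mask * warped_cloth + (1.0 - mask) * rendered
```

Where the mask is close to 1, garment pixels such as logos and text are copied directly from the warped image instead of being re-synthesized.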


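TOM is trained with a combination of losses. Besides a pixel-wise L1 loss between the generated image and the ground truth, it uses a perceptual loss: both images are passed through a VGG-19 network pretrained on ImageNet, and the differences between their feature maps at several layers are penalized. Because VGG features capture edges, textures, and shapes, this loss pushes the result to look like the target rather than just match it pixel by pixel.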
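A minimal sketch of how such a combined loss can be computed, assuming the VGG-19 activations have already been extracted by some feature extractor (not shown) and are passed in as lists of arrays. The per-layer weights below follow commonly used VGG perceptual-loss implementations and are an assumption here, as is giving the L1 and perceptual terms equal weight; the CP-VTON paper additionally regularizes the composition mask, which this sketch omits.

```python
import numpy as np

# Per-layer weights (assumed): deeper VGG layers count more.
DEFAULT_WEIGHTS = (1 / 32, 1 / 16, 1 / 8, 1 / 4, 1.0)


def l1(a, b):
    """Mean absolute difference between two arrays."""
    return float(np.mean(np.abs(np.asarray(a) - np.asarray(b))))


def perceptual_loss(feats_out, feats_gt, weights=DEFAULT_WEIGHTS):
    """Weighted sum of L1 distances between corresponding feature maps.

    feats_out, feats_gt: lists of arrays, e.g. activations of several
    VGG-19 layers for the generated and the ground-truth image.
    """
    if not (len(feats_out) == len(feats_gt) == len(weights)):
        raise ValueError("need one weight per pair of feature maps")
    return sum(w * l1(fo, fg) for w, fo, fg in zip(weights, feats_out, feats_gt))


def tom_loss(output, target, feats_out, feats_gt):
    # pixel-wise L1 plus the perceptual term, equal weights (assumed)
    return l1(output, target) + perceptual_loss(feats_out, feats_gt)
```

Passing precomputed features keeps the sketch independent of any deep learning framework; in training, the features would come from the same frozen VGG-19 for both images.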

Integration into OpenCV

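In the original CP-VTON code, the keypoints are read from json files and the segmentation from images prepared in advance. In the sample, both are computed on the fly: the keypoints with the OpenPose Caffe model and the segmentation with LIP.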

As a result, the sample needs only a photo of a person and an image of a garment:

```shell
python3 virtual_try_on.py -i person_img.jpg -c cloth.jpg
```

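The original code uses PIL for image processing, while we wanted to rely only on OpenCV. LIP segments a person into 20 classes, including the background, whereas CP-VTON needs only a few masks. They are built from the LIP label map as follows: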

```python
import numpy as np

# segm: (H, W) label map produced by LIP_JPPNet, 0 = background
shape = (segm > 0).astype(np.float32)                   # whole silhouette
# LIP classes: 1 hat, 2 hair, 4 sunglasses, 13 face
head = np.isin(segm, [1, 2, 4, 13]).astype(np.float32)
# LIP classes: 5 upper clothes, 6 dress, 7 coat
cloth = np.isin(segm, [5, 6, 7]).astype(np.float32)
```
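To show what these masks are for, here is a minimal sketch of cutting a region out of the person image with one of them. It assumes images normalized to [-1, 1] and, following our reading of the original CP-VTON data loader, fills everything outside the head with -1 and everything outside the garment with 1 (white); the function name `cut_region` is ours.

```python
import numpy as np


def cut_region(img, mask, fill):
    """Keep img where mask == 1 and replace everything else with `fill`.

    img:  (3, H, W) float image normalized to [-1, 1]
    mask: (H, W) float mask such as `head` or `cloth`
    fill: value written outside the region (e.g. -1.0 or 1.0)
    """
    mask = mask[np.newaxis]  # broadcast over color channels
    return img * mask + fill * (1.0 - mask)
```

For example, `cut_region(img, head, -1.0)` keeps the face and hair for the person representation, and `cut_region(img, cloth, 1.0)` isolates the garment the person is wearing.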

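In the original code, the segmentation is opened with PIL as a palette image, so its pixel values are already class indices, and the class colors come from human_colormap.mat. What is that file? It is a Matlab file, but fortunately scipy can read it, so the palette can be recovered without Matlab.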
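When the segmentation comes from OpenCV as a color image instead of a PIL palette image, the colors have to be mapped back to class indices. A minimal sketch, assuming the palette is available as a (K, 3) uint8 array whose row k is the RGB color of class k, and that the image has already been converted from OpenCV's BGR order to RGB; `colors_to_labels` is our name.

```python
import numpy as np


def colors_to_labels(rgb, palette):
    """Map a color-coded parsing image back to class indices.

    rgb: (H, W, 3) uint8 image in RGB order.
    palette: (K, 3) uint8 array, row k = color of class k.
    Pixels whose color is not in the palette get label 0 (background).
    """
    rgb = np.asarray(rgb, dtype=np.int64)
    palette = np.asarray(palette, dtype=np.int64)
    # pack each RGB triplet into one integer so colors compare in one step
    codes = (rgb[..., 0] << 16) | (rgb[..., 1] << 8) | rgb[..., 2]
    pal_codes = (palette[:, 0] << 16) | (palette[:, 1] << 8) | palette[:, 2]
    labels = np.zeros(codes.shape, dtype=np.uint8)
    for k, code in enumerate(pal_codes):
        labels[codes == code] = k
    return labels
```

The palette itself could be read with `scipy.io.loadmat('human_colormap.mat')`; the name of the variable inside the file (we assume `colormap`) and its scale (we assume floats in [0, 1] that need multiplying by 255) should be checked against the actual file.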

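The body shape is blurred: the mask is downscaled by a factor of 16 and then upscaled back to its original size.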

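```python
from PIL import Image

# mask: PIL image of the body shape; width, height: its size.
# Downscale 16x and upscale back to get a blurred silhouette.
mask = mask.resize((width // 16, height // 16), Image.BILINEAR)
mask = mask.resize((width, height), Image.BILINEAR)
```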

We wanted to avoid depending on PIL and use only OpenCV, so we replaced PIL resize with cv.resize.

Left: the result with PIL resize; right: with cv.resize.

Can you see the difference? Let's look at the final result.

Left: the result with PIL resize; right: with cv.resize.

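The difference is noticeable. Why? Both libraries perform bilinear interpolation, so one would expect identical results. It turns out that when downscaling, PIL's bilinear resize is not the classic bilinear interpolation: its kernel grows with the scale factor, so it behaves more like area interpolation. With a scale factor of 16 the kernel spans 33 = 16 * 2 + 1 pixels, whereas in OpenCV it stays at 3 (counted the same way). Since cv.resize does not reproduce this behavior, we ported the PIL algorithm to Python: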

```python
import numpy as np


class BilinearFilter(object):
    """
    Reimplementation of PIL's bilinear resize for 2D uint8 images,
    i.e. the equivalent of
    image = image.resize((image_width // 16, image_height // 16), Image.BILINEAR)
    """

    def _precompute_coeffs(self, inSize, outSize):
        # When downscaling, the filter support grows with the scale factor
        filterscale = max(1.0, inSize / outSize)
        ksize = int(np.ceil(filterscale)) * 2 + 1
        kk = np.zeros(shape=(outSize * ksize, ), dtype=np.float32)
        # bounds[2*i]: first input pixel, bounds[2*i + 1]: number of input pixels
        bounds = np.empty(shape=(outSize * 2, ), dtype=np.int32)
        centers = (np.arange(outSize) + 0.5) * filterscale + 0.5
        bounds[::2] = np.where(centers - filterscale < 0, 0, centers - filterscale)
        bounds[1::2] = np.where(centers + filterscale > inSize, inSize, centers + filterscale) - bounds[::2]
        xmins = bounds[::2] - centers + 1
        # Rows differ in length near the borders, so keep a list
        # (np.array on ragged rows raises an error in recent NumPy)
        points = [(np.arange(row) + xmins[i]) / filterscale for i, row in enumerate(bounds[1::2])]
        for xx in range(0, outSize):
            point = points[xx]
            # Triangle (bilinear) kernel, normalized to sum to 1
            bilinear = np.where(np.abs(point) < 1.0, 1.0 - np.abs(point), 0.0)
            ww = np.sum(bilinear)
            kk[xx * ksize : xx * ksize + bilinear.size] = bilinear / ww if ww != 0.0 else bilinear
        return bounds, kk, ksize

    def _resample_horizontal(self, out, img, ksize, bounds, kk):
        for yy in range(0, out.shape[0]):
            for xx in range(0, out.shape[1]):
                xmin = bounds[xx * 2 + 0]
                xmax = bounds[xx * 2 + 1]  # number of taps, not an end index
                k = kk[xx * ksize : xx * ksize + xmax]
                out[yy, xx] = np.round(np.sum(img[yy, xmin : xmin + xmax] * k))

    def _resample_vertical(self, out, img, ksize, bounds, kk):
        for yy in range(0, out.shape[0]):
            ymin = bounds[yy * 2 + 0]
            ymax = bounds[yy * 2 + 1]  # number of taps, not an end index
            k = kk[yy * ksize: yy * ksize + ymax]
            out[yy] = np.round(np.sum(img[ymin : ymin + ymax, 0:out.shape[1]] * k[:, np.newaxis], axis=0))

    def imaging_resample(self, img, xsize, ysize):
        # Separable filter: resample rows first, then columns
        height, width = img.shape[:2]
        bounds_horiz, kk_horiz, ksize_horiz = self._precompute_coeffs(width, xsize)
        bounds_vert, kk_vert, ksize_vert = self._precompute_coeffs(height, ysize)
        out_hor = np.empty((img.shape[0], xsize), dtype=np.uint8)
        self._resample_horizontal(out_hor, img, ksize_horiz, bounds_horiz, kk_horiz)
        out = np.empty((ysize, xsize), dtype=np.uint8)
        self._resample_vertical(out, out_hor, ksize_vert, bounds_vert, kk_vert)
        return out
```
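To make the kernel-size arithmetic concrete, here is a small helper that mirrors the coefficient computation of `BilinearFilter._precompute_coeffs` for a single output pixel (the function names are ours). For a 16x downscale, one output pixel averages 32 input pixels with triangular weights, whereas classic bilinear interpolation blends only the 2 nearest pixels per axis; 33 is the buffer length reserved per output pixel.

```python
import math

import numpy as np


def pil_bilinear_taps(in_size, out_size):
    """Kernel buffer length PIL reserves per output pixel (ksize)."""
    filterscale = max(1.0, in_size / out_size)  # never below 1 when upscaling
    return math.ceil(filterscale) * 2 + 1


def pil_bilinear_weights(in_size, out_size, xx):
    """First input pixel and normalized weights for output pixel xx,
    following the same steps as PIL's bilinear filter."""
    filterscale = max(1.0, in_size / out_size)
    support = filterscale  # bilinear support (1.0) stretched by the factor
    center = (xx + 0.5) * filterscale
    xmin = max(int(center - support + 0.5), 0)
    xmax = min(int(center + support + 0.5), in_size)
    x = np.arange(xmin, xmax)
    # triangle filter evaluated at input pixel centers, in units of the scale
    w = np.maximum(0.0, 1.0 - np.abs((x - center + 0.5) / filterscale))
    return xmin, w / w.sum()
```

For instance, `pil_bilinear_taps(192, 12)` is 33, while `pil_bilinear_taps(192, 192)` is 3; `pil_bilinear_weights(192, 12, 5)` starts at input pixel 72 and returns 32 weights.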

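In the end, all four networks of the pipeline (OpenPose, LIP_JPPNet, GMM, and TOM) run in OpenCV. CP-VTON works with 256x192 images, so the result has a fairly low resolution. You can try it yourself with the virtual_try_on.py sample.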
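Since CP-VTON works at a resolution of 256x192, every input has to be brought to that size and value range. A minimal sketch, assuming the image is already resized to 256x192 (height x width) and converted to RGB, and that the networks expect NCHW input normalized to [-1, 1], the way the original CP-VTON training code normalizes images (mean 0.5, std 0.5). The function name is ours.

```python
import numpy as np


def to_cpvton_input(img_rgb):
    """(256, 192, 3) uint8 RGB image -> (1, 3, 256, 192) float32 in [-1, 1]."""
    if img_rgb.shape[:2] != (256, 192):
        raise ValueError("expected a 256x192 image (height x width)")
    x = img_rgb.astype(np.float32) / 127.5 - 1.0  # [0, 255] -> [-1, 1]
    return x.transpose(2, 0, 1)[np.newaxis]      # HWC -> NCHW
```

With OpenCV the same preprocessing, including the resize and the BGR-to-RGB swap, can be written as `cv.dnn.blobFromImage(img, 1 / 127.5, (192, 256), (127.5, 127.5, 127.5), swapRB=True)`, since `blobFromImage` subtracts the mean and then multiplies by the scale factor.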

P.S. In 2020 OpenCV celebrates its 20th anniversary!