Hello, Habr! This is the second post in a three-part series, which will give an idea of the mechanics and semantics of the work of the scheduler in Go. This post is about the Go planner.In the first part of this series, I explained aspects of the operating system scheduler that, in my opinion, are important for understanding and evaluating the Go scheduler's semantics. In this post, I will explain at a semantic level how the Go scheduler works. The Go Scheduler is a complex system and small mechanical details are not important. It is important to have a good model of how everything works and behaves. This will allow you to make the best engineering decisions.Your program is starting

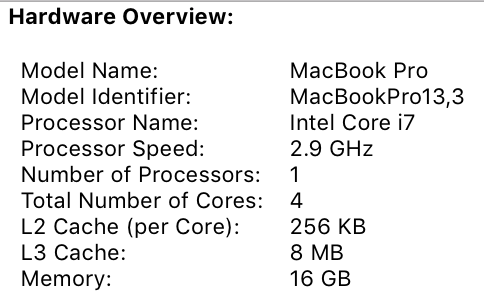

When your Go program starts, it is assigned a logical processor (P) for each virtual core defined on the host machine. If you have a processor with several hardware threads per physical core (Hyper-Threading), each hardware thread will be presented to your program as a virtual core. To better understand this, take a look at the system report for my MacBook Pro. You can see that I have one processor with 4 physical cores. This report does not disclose the number of hardware threads per physical core. The Intel Core i7 processor has Hyper-Threading technology, which means that the physical core has 2 hardware threads. This tells Go that 8 virtual cores are available for running OS threads in parallel. To verify this, consider the following program:

You can see that I have one processor with 4 physical cores. This report does not disclose the number of hardware threads per physical core. The Intel Core i7 processor has Hyper-Threading technology, which means that the physical core has 2 hardware threads. This tells Go that 8 virtual cores are available for running OS threads in parallel. To verify this, consider the following program:package main

import (

"fmt"

"runtime"

)

func main() {

fmt.Println(runtime.NumCPU())

}

When I run this program on my computer, the result of calling the NumCPU () function will be 8. Any Go program that I run on my computer will get 8 (P).Each P is assigned an OS stream ( M ). This thread is still managed by the OS, and the OS is still responsible for placing the thread in the kernel for execution. This means that when I run Go on my computer, I have 8 threads available to do my work, each individually linked to P.Each Go program is also given an initial Goroutine ( G) Goroutine is essentially Coroutine, but it is Go, so we replace the letter C with G and get the word Goroutine. You can think of Goroutines as application-level threads, and they are a lot like OS threads. Just as OS threads are turned on and off by the kernel, context programs are turned on and off by the context.The last puzzle is the execution queues. There are two different execution queues in the Go scheduler: the global execution queue (GRQ) and the local execution queue (LRQ). Each P is assigned an LRQ that controls the goroutins assigned to execute in the context of P. These goroutines turn on and off from the context M assigned to this P. GRQ is for goroutines that have not been assigned to P. There is a process to move the goroutines from GRQ to LRQ, which we will discuss later.The image shows all of these components together.

Cooperative planner

As we said in the first post, the OS scheduler is a preemptive scheduler. Essentially, this means that you cannot predict what the planner is going to do at any given time. The kernel makes decisions and everything is non-deterministic. Applications running on top of the operating system do not control what happens inside the kernel with scheduling unless they use synchronization primitives such as atomic instructions and mutex calls.The Go Scheduler is part of the Go Runtime, and the Go Runtime is built into your application. This means that the Go scheduler works in user space on the kernel. The current Go scheduler implementation is not a preemptive, but an interactive scheduler. Being a cooperative planner means that the planner needs clearly defined events in the user space that occur at safe points in the code to make planning decisions.What is good about Go's collaborative planner is that it looks and feels proactive. You cannot predict what the Go scheduler is going to do. This is due to the fact that decision-making for this scheduler does not depend on the developers, but on the execution time of Go. It is important to think of the Go scheduler as a proactive scheduler, and since the scheduler is non-deterministic, it is not too difficult.Gorutin States

Just like streams, goroutines have the same three high-level states. They determine the role that the Go planner plays with any goroutine. Goroutin can be in one of three states: Waiting, Ready, or Fulfilling.Waiting : This means that goroutine is stopped and waiting for something to continue. This can happen for reasons such as waiting for the operating system (system calls) or synchronization of calls (atomic and mutex operations). These types of delays are the main cause of poor performance.Readiness: this means that goroutine wants time to follow the assigned instructions. If you have a lot of goroutines who need time, then goroutines will have to wait longer to get time. In addition, the individual amount of time that any goroutine receives is reduced as more goroutines compete for time. This type of scheduling delay can also cause poor performance.Fulfillment : this means that goroutine has been placed in M and is following its instructions. The work associated with the application has been completed. This is what everyone wants.Context switch

The Go Scheduler requires well-defined user-space events that occur at secure points in the code to switch context. These events and safe points appear in function calls. Function calls are critical to the performance of the Go Scheduler. If you execute any narrow loops that do not make function calls, you will cause delays in the scheduler and garbage collection. It is imperative that function calls occur within a reasonable amount of time.There are four classes of events that occur in your Go programs that allow the planner to make planning decisions. This does not mean that this will always happen at one of these events. This means that the scheduler gets the opportunity.- Using the go keyword

- Garbage collector

- System calls

- Synchronization

Using the gokeyword The go keyword is how you create goroutine. As soon as a new goroutine is created, it gives the planner the opportunity to make a planning decision.Garbage Collector (GC)Since the GC works with its own set of goroutines, these gorutins need time on M to run. This forces the GC to create a lot of chaos in planning. However, the planner is very smart at what goroutine does, and he will use it to make decisions. One reasonable solution is to switch the context to goroutine, which wants to access the system resource, and no one else but it during garbage collection. When the GC works, many planning decisions are made.System callsIf goroutine makes a system call that will make it block M, the scheduler can switch the context to another goroutine, to the same M.SynchronizationIf a call to an atomic operation, a mutex or a channel causes goroutine to be blocked, the scheduler can switch the context to start a new goroutine. Once goroutine can work again, it can be queued and eventually switch back to M.Asynchronous system calls

When the operating system you are working on has the ability to process a system call asynchronously, what is called a network poller can be used to process the system call more efficiently. This is achieved using kqueue (MacOS), epoll (Linux) or iocp (Windows) in these respective OSs.Network system calls can be handled asynchronously by many of the operating systems we use today. This is where the network poller shows itself, since its main purpose is to process network operations. Using the network poller for network system calls, the scheduler can prevent goroutines from blocking M during these system calls. This helps keep M available to execute other goroutines in LRQ P without the need to create new M. This helps reduce the planning burden in the OS.The best way to see how this works is to look at an example. The figure shows our basic planning scheme. Gorutin-1 is executed on M, and 3 more Gorutins are waiting in LRQ to get their time on M. Network poller is idle, and he has nothing to do. In the following figure, Gorutin-1 (G1) wants to make a network system call, so G1 moves to the Network poller and is treated as an asynchronous network system call. Once G1 has been moved to Network poller, M is now available to execute another goroutine from LRQ. In this case, Gorutin-2 switches to M.

In the following figure, Gorutin-1 (G1) wants to make a network system call, so G1 moves to the Network poller and is treated as an asynchronous network system call. Once G1 has been moved to Network poller, M is now available to execute another goroutine from LRQ. In this case, Gorutin-2 switches to M. In the following figure, the system network call ends with an asynchronous network call, and G1 moves back to LRQ for P. After G1 can be switched back to M, the code associated with Go, for which he answers can execute again. The big win is that no additional Ms. is required to make network system calls. Network poller has an OS thread, and it processes through an event loop.

In the following figure, the system network call ends with an asynchronous network call, and G1 moves back to LRQ for P. After G1 can be switched back to M, the code associated with Go, for which he answers can execute again. The big win is that no additional Ms. is required to make network system calls. Network poller has an OS thread, and it processes through an event loop.Synchronous system calls

What happens when goroutine wants to make a system call that cannot be executed asynchronously? In this case, the Network poller cannot be used, and the goroutine making the system call will block M. This is bad, but there is no way to prevent this. One example of a system call that cannot be made asynchronously is file-based system calls. If you use CGO, there may be other situations where calling C functions also blocks M.The Windows operating system can make file-based asynchronous system calls. Technically, when working on Windows, you can use Network poller.

Let's see what happens with a synchronous system call (for example, file I / O) that will block M. The figure shows our basic planning diagram, but this time G1 is going to make a synchronous system call that will block M1. In the following figure, the scheduler can determine that G1 caused an M lock. At this point, the scheduler disconnects M1 from P with a blocking G1 still attached. The scheduler then introduces a new M2 to serve P. At this point, G2 can be selected from LRQ and included in the M2 context. If M already exists due to a previous exchange, this transition is faster than the need to create a new M.

In the following figure, the scheduler can determine that G1 caused an M lock. At this point, the scheduler disconnects M1 from P with a blocking G1 still attached. The scheduler then introduces a new M2 to serve P. At this point, G2 can be selected from LRQ and included in the M2 context. If M already exists due to a previous exchange, this transition is faster than the need to create a new M. The next step completes the lock system call made by G1. At this point, G1 can return to LRQ and be served again by P. M1 then goes aside for future use if this scenario should be repeated.

The next step completes the lock system call made by G1. At this point, G1 can return to LRQ and be served again by P. M1 then goes aside for future use if this scenario should be repeated.

Work stealing

Another aspect of the scheduler is that it is a goroutine theft planner. This helps in several areas to support effective planning. Firstly, the last thing you need is for M to go to the standby state, because as soon as this happens, the OS will switch M from the kernel using context. This means that P cannot do any work, even if there is a Goroutine in a healthy state, until M switches back to the kernel. Gorutin Theft also helps to balance time intervals between all Ps so that work is better distributed and performed more efficiently.In the figure, we have a multi-threaded Go program with two Ps serving four Gs each and one G in GRQ. What happens if one of P quickly serves all of its G? Further, P1 no longer has goroutines to execute. But there are goroutines in working condition, both in LRQ for P2, and in GRQ. This is the moment when P1 needs to steal goroutine.

Further, P1 no longer has goroutines to execute. But there are goroutines in working condition, both in LRQ for P2, and in GRQ. This is the moment when P1 needs to steal goroutine. The rules for stealing goroutines are as follows. All code can be viewed in the runtime sources.

The rules for stealing goroutines are as follows. All code can be viewed in the runtime sources.runtime.schedule() {

}

Thus, based on these rules, P1 should check P2 for the presence of goroutines in its LRQ and take half of what it finds. What happens if P2 finishes serving all its programs and P1 has nothing left in LRQ?P2 has completed all his work and now must steal the goroutines. First, he will look at the LRQ P1, but will not find any Goroutines. Next he will look at GRQ. There he will find the G9.

What happens if P2 finishes serving all its programs and P1 has nothing left in LRQ?P2 has completed all his work and now must steal the goroutines. First, he will look at the LRQ P1, but will not find any Goroutines. Next he will look at GRQ. There he will find the G9. P2 steals G9 from GRQ and starts to do the job. What is good about all this theft is that it allows M to stay busy and not be inactive.

P2 steals G9 from GRQ and starts to do the job. What is good about all this theft is that it allows M to stay busy and not be inactive.

Practical example

With mechanics and semantics, I want to show you how this all comes together so that the Go scheduler can do more work over time. Imagine a multi-threaded application written in C, in which the program manages two OS threads that send messages to each other. There are 2 threads in the picture that send the message back and forth. Thread 1 receives context-switched core 1 and is now running, which allows thread 1 to send its message to thread 2. Further, when thread 1 finishes sending the message, now it needs to wait for a response. This will cause thread 1 to be disconnected from the context of kernel 1 and put into a wait state. As soon as thread 2 receives a message notification, it goes into a healthy state. Now the OS can perform a context switch and run thread 2 on the kernel, which turns out to be kernel 2. Then thread 2 processes the message and sends a new message back to thread 1.

Further, when thread 1 finishes sending the message, now it needs to wait for a response. This will cause thread 1 to be disconnected from the context of kernel 1 and put into a wait state. As soon as thread 2 receives a message notification, it goes into a healthy state. Now the OS can perform a context switch and run thread 2 on the kernel, which turns out to be kernel 2. Then thread 2 processes the message and sends a new message back to thread 1. Next, the stream switches back to context when the message from stream 2 is received by stream 1. Now, stream 2 switches from the run state to the standby state, and stream 1 switches from the standby state to the ready state and finally returns to the run state, which allows it to process and send a new message back. All of these context switches and state changes take time to complete, which limits the speed of the work. Since each context switch entails a delay of ~ 1000 nanoseconds, and we hope that the hardware executes 12 instructions per nanosecond, you look at 12,000 instructions that are more or less not executed during these context switches. Since these flows also intersect between different nuclei,The likelihood of an additional cache-line misses delay is also high.

Next, the stream switches back to context when the message from stream 2 is received by stream 1. Now, stream 2 switches from the run state to the standby state, and stream 1 switches from the standby state to the ready state and finally returns to the run state, which allows it to process and send a new message back. All of these context switches and state changes take time to complete, which limits the speed of the work. Since each context switch entails a delay of ~ 1000 nanoseconds, and we hope that the hardware executes 12 instructions per nanosecond, you look at 12,000 instructions that are more or less not executed during these context switches. Since these flows also intersect between different nuclei,The likelihood of an additional cache-line misses delay is also high. In the figure there are two gorutins who are in harmony with each other, passing the message back and forth. G1 gets the context switch M1, which runs on Core 1, which allows G1 to do its job.

In the figure there are two gorutins who are in harmony with each other, passing the message back and forth. G1 gets the context switch M1, which runs on Core 1, which allows G1 to do its job. Further, when G1 finishes sending the message, now he needs to wait for a response. This will cause G1 to be disconnected from the M1 context and put into the idle state. As soon as G2 is notified of the message, it goes into a healthy state. Now the Go scheduler can perform context switching and run G2 on M1, which still runs on Core 1. Then G2 processes the message and sends a new message back to G1.

Further, when G1 finishes sending the message, now he needs to wait for a response. This will cause G1 to be disconnected from the M1 context and put into the idle state. As soon as G2 is notified of the message, it goes into a healthy state. Now the Go scheduler can perform context switching and run G2 on M1, which still runs on Core 1. Then G2 processes the message and sends a new message back to G1. In the next step, everything switches again when the message sent by G2 is received by G1. Now, the context G2 switches from the execution state to the waiting state, and the context G1 switches from the waiting state to the execution state and, finally, back to the execution state, which allows it to process and send a new message back.

In the next step, everything switches again when the message sent by G2 is received by G1. Now, the context G2 switches from the execution state to the waiting state, and the context G1 switches from the waiting state to the execution state and, finally, back to the execution state, which allows it to process and send a new message back. Things on the surface do not seem to be different. All the same context changes and state changes occur regardless of whether you use Streams or Goroutines. However, there is a big difference between the use of Streams and Gorutin, which may not be obvious at first glance.If goroutine is used, the same OS threads and kernel are used for all processing. This means that from an OS point of view, OS Flow never goes into a wait state; never. As a result, all those instructions that we lost when switching contexts when using streams are not lost when using goroutin.Essentially, Go turned IO / Blocking work into a processor-bound job at the OS level. Since all context switching occurs at the application level, we do not lose the same ~ 12 thousand instructions (on average) on context switching that we lost when using streams. In Go, the same context switches cost you ~ 200 nanoseconds or ~ 2.4 thousand commands. The scheduler also helps improve the performance of caching strings and NUMA. That's why we don’t need more threads than we have virtual cores. Go can do more work over time, because the Go scheduler tries to use fewer threads and do more on each thread, which helps reduce the load on the OS and hardware.

Things on the surface do not seem to be different. All the same context changes and state changes occur regardless of whether you use Streams or Goroutines. However, there is a big difference between the use of Streams and Gorutin, which may not be obvious at first glance.If goroutine is used, the same OS threads and kernel are used for all processing. This means that from an OS point of view, OS Flow never goes into a wait state; never. As a result, all those instructions that we lost when switching contexts when using streams are not lost when using goroutin.Essentially, Go turned IO / Blocking work into a processor-bound job at the OS level. Since all context switching occurs at the application level, we do not lose the same ~ 12 thousand instructions (on average) on context switching that we lost when using streams. In Go, the same context switches cost you ~ 200 nanoseconds or ~ 2.4 thousand commands. The scheduler also helps improve the performance of caching strings and NUMA. That's why we don’t need more threads than we have virtual cores. Go can do more work over time, because the Go scheduler tries to use fewer threads and do more on each thread, which helps reduce the load on the OS and hardware.Conclusion

The Go Scheduler is truly amazing in how it takes into account the intricacies of the operating system and hardware. The ability to turn I / O / lock operation into a processor-bound operation at the operating system level is where we get big gains in using more processor power over time. This is why you don’t need more OS threads than you have virtual kernels. You can reasonably expect that all of your work will be done (with CPU binding and I / O / locks) with one OS thread per virtual kernel. This is possible for network applications and other applications that do not need system calls that block OS threads.As a developer, you should still understand what your application does in terms of type of work. You cannot create an unlimited amount of goroutines and expect amazing performance. Less is always more, but with an understanding of this semantics of the Go scheduler, you can make better engineering decisions.