Hi, I work on the project team of the RRP KP (a distributed data register for monitoring the life cycle of wheelsets). Here I want to share our team's experience of developing a corporate blockchain for this project within the constraints the technology imposes. For the most part I will talk about Hyperledger Fabric, but the approach described here can be extrapolated to any permissioned blockchain. The ultimate goal of our research is to prepare corporate blockchain solutions so that the final product is pleasant to use and not too hard to maintain.

There will be no discoveries or unexpected solutions here, and no unique developments will be covered (because I do not have any). I just want to share my modest experience, to show that "it was possible" and, perhaps, to read in the comments about the good and not-so-good decisions others have made.

Problem: blockchains do not scale yet

Today, the efforts of many developers are aimed at making the blockchain a really convenient technology, and not a time bomb in a beautiful wrapper. State channels, optimistic rollup, plasma, and sharding may become everyday tools. Someday. Or perhaps TON will again delay its launch for six months, and the next Plasma Group will cease to exist. We can believe in yet another roadmap and read brilliant white papers at bedtime, but here and now we need to do something with what we have. Get shit done.

In general terms, the task set for our team in the current project looks like this: there are many participants, up to several thousand, that do not want to build their relationships on trust; we need to build a DLT solution that works on ordinary PCs without special performance requirements and provides a user experience no worse than any centralized accounting system. The technology underlying the solution should minimize the possibility of malicious manipulation of data, and this is why the blockchain is here.

Slogans from white papers and the media promise us that the next development will allow millions of transactions per second. What is the reality?

Mainnet Ethereum is now running at ~30 tps. For this reason alone it is hard to see it as a blockchain suitable for corporate needs. Among permissioned solutions there are known benchmarks showing 2000 tps (Quorum) or 3000 tps (Hyperledger Fabric; the publication reports a slightly lower figure, but keep in mind that the benchmark was run on the old consensus engine). There was an attempt to radically revise Fabric, which gave rather good results, 20,000 tps, but so far this is only academic research awaiting a stable implementation. It is unlikely that a corporation that can afford to maintain a department of blockchain developers will put up with such figures. But the problem is not only throughput, there is also latency.

Latency

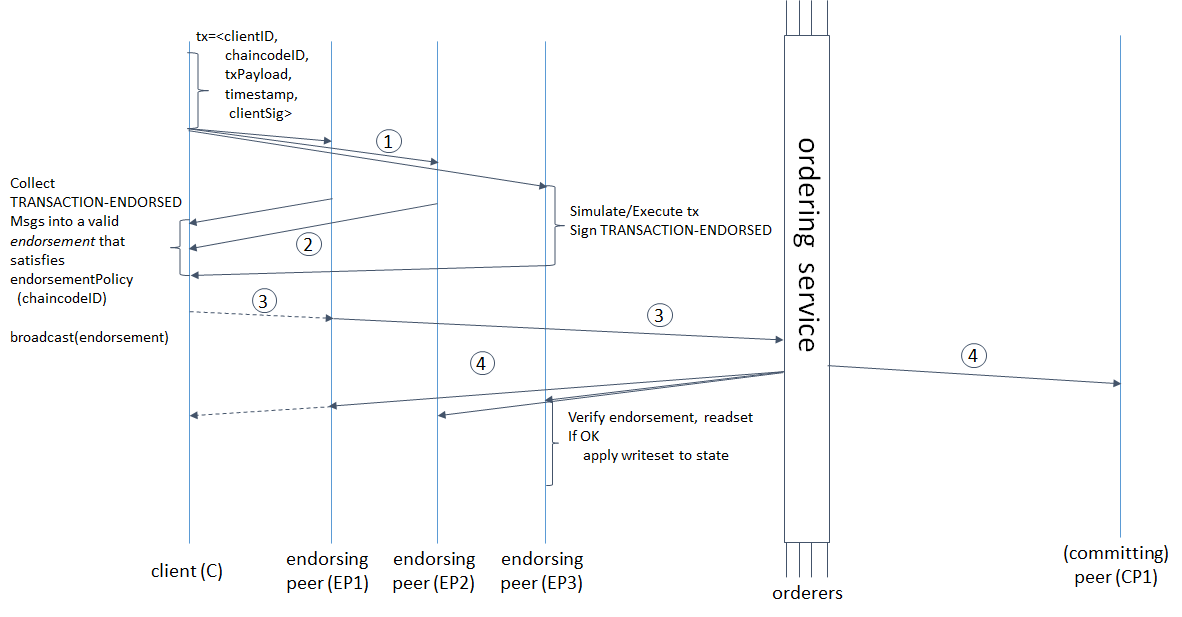

The delay from the moment a transaction is initiated to its final approval by the system depends not only on how fast the message passes through all the stages of validation and ordering, but also on the block formation parameters. Even if our blockchain can commit at 1,000,000 tps, does that help us if it takes 10 minutes to form a 488 MB block?

Let's take a closer look at the transaction life cycle in Hyperledger Fabric to understand where the time goes and how it relates to the block formation parameters.

Taken from: hyperledger-fabric.readthedocs.io/en/release-1.4/arch-deep-dive.html#swimlane

(1) The client generates a transaction and sends it to the endorsing peers. The peers simulate the transaction (apply the changes made by the chaincode to the current state, but do not commit them to the ledger) and obtain an RWSet: the key names, versions and values read from the collection in CouchDB.

(2) The endorsers send the signed RWSet back to the client.

(3) The client either checks that the signatures of all required peers (endorsers) are present and then sends the transaction to the ordering service, or sends it without verification (verification will still take place later). The ordering service forms a block.

(4) The ordering service sends the block to all peers, not only the endorsers. The peers check that the key versions in the read set match the versions in the database, check the signatures of all endorsers, and finally commit the block.

But that's not all. The words "the ordering service forms a block" hide not only the ordering of transactions, but also 3 consecutive network round trips between the leader and the followers: the leader appends the message to its log and sends it to the followers; the followers append it to their logs and confirm successful replication to the leader; the leader commits the message and sends a commit confirmation to the followers; the followers commit. The smaller the block size and formation time, the more often the ordering service has to reach consensus.

Hyperledger Fabric has two block formation parameters: BatchTimeout (the block formation time) and BatchSize (the block size, expressed both as the number of transactions and as the size of the block itself in bytes). As soon as one of the parameters reaches its limit, a new block is released. The more ordering nodes there are, the longer consensus takes. Therefore, you need to increase BatchTimeout and BatchSize. But since RWSets are versioned, the larger the block, the higher the likelihood of MVCC conflicts. In addition, as BatchTimeout grows, UX degrades catastrophically. The scheme described in the following sections seems to me a reasonable and obvious way to solve these problems.
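To make the rule "a block is released as soon as one limit is reached" concrete, here is a minimal Go model of it. This is a simplified illustration, not Fabric code: the `BatchConfig` type and `ShouldCut` method are hypothetical, the field names only borrow from the keys in Fabric's `configtx.yaml`, and the real orderer treats its byte limits more carefully than this sketch does.

```go
package main

import (
	"fmt"
	"time"
)

// BatchConfig models the block formation limits discussed above.
// Hypothetical type for illustration; only the names come from configtx.yaml.
type BatchConfig struct {
	BatchTimeout      time.Duration // max wait after the first pending transaction
	MaxMessageCount   int           // max number of transactions in a block
	PreferredMaxBytes int           // size limit for the pending transactions
}

// ShouldCut reports whether a block should be released, given the number of
// pending transactions, their total size in bytes, and the time that has
// passed since the first of them arrived.
func (c BatchConfig) ShouldCut(pendingTxs, pendingBytes int, waited time.Duration) bool {
	if pendingTxs == 0 {
		return false // nothing to put into a block
	}
	return pendingTxs >= c.MaxMessageCount ||
		pendingBytes >= c.PreferredMaxBytes ||
		waited >= c.BatchTimeout
}

func main() {
	cfg := BatchConfig{BatchTimeout: 2 * time.Second, MaxMessageCount: 500, PreferredMaxBytes: 2 << 20}
	fmt.Println(cfg.ShouldCut(10, 4096, 500*time.Millisecond)) // false: no limit reached
	fmt.Println(cfg.ShouldCut(10, 4096, 2*time.Second))        // true: timeout reached
	fmt.Println(cfg.ShouldCut(500, 4096, 0))                   // true: message count reached
}
```

With a low load, almost every block is cut by the timeout, so BatchTimeout is effectively the commit latency the user sees. That is why raising it hurts UX directly.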

Avoiding the wait for block finalization without losing the ability to track transaction status

The longer the formation time and the larger the block size, the higher the throughput of the blockchain. One does not follow directly from the other, but remember that reaching consensus in RAFT requires three network round trips between the leader and the followers. The more ordering nodes there are, the longer this takes. The smaller the block size and formation time, the more such interactions there are. How do we increase the formation time and the block size without increasing the time the end user waits for a response from the system?

First, you need to somehow resolve the MVCC conflicts caused by a large block size, since a block may include different RWSets with the same version. Obviously, on the client side (in relation to the blockchain network this may well be the backend, and that is what I mean here), you need an MVCC conflict handler, which can be either a separate service or an ordinary decorator with retry logic around the call that triggers the transaction.

Retry can be implemented with an exponential strategy, but then latency will degrade just as exponentially. So you should use either a randomized retry within certain small limits, or a constant one. Bear in mind that collisions are still possible with the first option.

The next step is to make the client's interaction with the system asynchronous, so that it does not wait the 15, 30, or 10,000,000 seconds that we set as BatchTimeout. At the same time, you need to keep the ability to verify whether or not the changes initiated by the transaction were written to the blockchain.

You can use a database to store transaction status. The easiest option is CouchDB because of its ease of use: the database has a UI out of the box and a REST API, and replication and sharding are easy to configure for it. You can simply create a separate collection in the same CouchDB instance that Fabric uses to store its world state. We need to store documents of this kind:

```
{
    Status: string
    TxID: string
    Error: string
}
```
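The MVCC conflict handler mentioned above, in its "decorator with retry logic" form, could look roughly like the following Go sketch. It uses a constant pause plus a small random jitter rather than exponential backoff. The names `ErrMVCCConflict`, `SubmitFunc` and `WithMVCCRetry` are hypothetical; a real client would have to map the MVCC_READ_CONFLICT validation code reported by the peers onto such an error itself.

```go
package main

import (
	"errors"
	"fmt"
	"math/rand"
	"time"
)

// ErrMVCCConflict is a hypothetical sentinel error standing in for the
// MVCC_READ_CONFLICT validation code reported by peers.
var ErrMVCCConflict = errors.New("mvcc read conflict")

// SubmitFunc is any call that triggers a transaction and returns its ID.
type SubmitFunc func() (txID string, err error)

// WithMVCCRetry decorates submit so that MVCC conflicts are retried up to
// maxAttempts times. The pause between attempts is base plus a random jitter
// in [0, jitter), so that colliding clients do not retry in lockstep.
func WithMVCCRetry(submit SubmitFunc, maxAttempts int, base, jitter time.Duration) SubmitFunc {
	if maxAttempts < 1 {
		maxAttempts = 1
	}
	return func() (string, error) {
		var lastErr error
		for attempt := 1; attempt <= maxAttempts; attempt++ {
			txID, err := submit()
			if err == nil {
				return txID, nil
			}
			if !errors.Is(err, ErrMVCCConflict) {
				return "", err // not a conflict: retrying will not help
			}
			lastErr = err
			if attempt < maxAttempts {
				pause := base
				if jitter > 0 {
					pause += time.Duration(rand.Int63n(int64(jitter)))
				}
				time.Sleep(pause)
			}
		}
		return "", fmt.Errorf("gave up after %d attempts: %w", maxAttempts, lastErr)
	}
}

func main() {
	calls := 0
	// Fake transaction that conflicts twice and then succeeds.
	flaky := func() (string, error) {
		calls++
		if calls < 3 {
			return "", ErrMVCCConflict
		}
		return "tx-42", nil
	}
	submit := WithMVCCRetry(flaky, 5, 10*time.Millisecond, 10*time.Millisecond)
	txID, err := submit()
	fmt.Println(txID, err, calls) // tx-42 <nil> 3
}
```

Because the decorator returns the same function type it accepts, the calling code does not need to know whether retries are enabled.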

The status document is written to the database before the transaction is passed to the peers. If the operation creates something, the entity ID is returned to the user (the same ID is used as the key), and then the Status, TxID and Error fields are updated as the relevant information arrives from the peers.

In this scheme, the user does not wait for the block to finally form, watching a spinning wheel on the screen for 10 seconds; they receive an instant response from the system and continue to work.

We chose BoltDB for storing transaction statuses, because we need to save memory and do not want to spend time on network interaction with a stand-alone database server, especially when that interaction uses a plain-text protocol. By the way, whether you use CouchDB to implement the scheme described above or just to store the world state, in any case it makes sense to optimize the way data is stored in CouchDB. By default, the size of b-tree nodes in CouchDB is 1279 bytes, which is much smaller than the disk sector size, so both reading and rebalancing the tree require more physical disk accesses. The optimal size matches the Advanced Format standard and is 4 kilobytes. To optimize, set the btree_chunk_size parameter to 4096 in the CouchDB configuration file. For BoltDB, no such manual intervention is required.
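The asynchronous flow described in this section (store a status document, answer the user immediately, update the document later) can be sketched in Go as follows. This is only an illustration under assumptions: the in-memory `StatusStore` stands in for BoltDB or CouchDB, and the status values "pending", "committed" and "failed" as well as the `SubmitAsync` helper are hypothetical names, not part of our codebase or of Fabric.

```go
package main

import (
	"fmt"
	"sync"
)

// TxStatus is the status document with the three fields from the text.
type TxStatus struct {
	Status string
	TxID   string
	Error  string
}

// StatusStore is an in-memory stand-in for the status database.
type StatusStore struct {
	mu   sync.Mutex
	docs map[string]TxStatus
}

func NewStatusStore() *StatusStore {
	return &StatusStore{docs: make(map[string]TxStatus)}
}

func (s *StatusStore) Put(id string, doc TxStatus) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.docs[id] = doc
}

func (s *StatusStore) Get(id string) (TxStatus, bool) {
	s.mu.Lock()
	defer s.mu.Unlock()
	doc, ok := s.docs[id]
	return doc, ok
}

// SubmitAsync writes a "pending" document before the transaction is sent,
// runs invoke in the background and returns at once. The returned channel
// is closed after the final status has been stored.
func SubmitAsync(store *StatusStore, entityID string, invoke func() (string, error)) <-chan struct{} {
	store.Put(entityID, TxStatus{Status: "pending"})
	done := make(chan struct{})
	go func() {
		defer close(done)
		txID, err := invoke()
		if err != nil {
			store.Put(entityID, TxStatus{Status: "failed", TxID: txID, Error: err.Error()})
			return
		}
		store.Put(entityID, TxStatus{Status: "committed", TxID: txID})
	}()
	return done
}

func main() {
	store := NewStatusStore()
	release := make(chan struct{}) // simulates waiting for the block to be committed
	done := SubmitAsync(store, "wheelset-1", func() (string, error) {
		<-release
		return "tx-42", nil
	})

	doc, _ := store.Get("wheelset-1")
	fmt.Println(doc.Status) // pending

	close(release)
	<-done
	doc, _ = store.Get("wheelset-1")
	fmt.Println(doc.Status, doc.TxID) // committed tx-42
}
```

The user-facing API only ever calls `Get`, so its response time no longer depends on BatchTimeout.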

Backpressure: buffer strategy

But there can be a lot of messages. More than the system is capable of processing while sharing resources with a dozen other services besides those shown in the diagram, and all of this should work without fail even on machines where launching IntelliJ IDEA is extremely tedious.

The problem of different throughputs of communicating systems, producer and consumer, is solved in different ways. Let's see what we could do.

Dropping: we can declare that we are able to process no more than X transactions in T seconds. All requests that exceed this limit are dropped. It's pretty simple, but then you can forget about UX.

Controlling: the consumer should have some interface through which, depending on the load, it can control the producer's tps. Not bad, but it obliges the developers of the client that creates the load to implement this interface. For us this is unacceptable, since in the future the blockchain will be integrated into a large number of long-existing systems.

Buffering: instead of contriving to resist the input data stream, we can buffer this stream and process it at the required speed. Obviously, this is the best solution if we want to provide a good user experience. We implemented the buffer using a queue in RabbitMQ.

Two new actions were added to the scheme: (1) after the API receives a request, a message with the parameters necessary to call the transaction is queued, and the client receives a message that the transaction was accepted by the system; (2) the backend reads data from the queue at the speed specified in the config, initiates the transaction and updates the data in the status store.

Now you can increase the formation time and block capacity as much as you want, hiding the delays from the user.
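The two steps of the buffering strategy can be sketched in Go like this. Note the substitution: a buffered Go channel stands in for the RabbitMQ queue so that the example stays self-contained, and the names `TxRequest`, `Enqueue`, `Consume` and `ErrQueueFull` are hypothetical. Unlike a broker queue, this in-process buffer is small and not durable, so the sketch rejects requests once it is full.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// TxRequest carries the parameters needed to invoke a transaction.
type TxRequest struct {
	EntityID string
	Args     []string
}

// ErrQueueFull is returned when the buffer itself overflows.
var ErrQueueFull = errors.New("queue full")

// Enqueue is step (1): buffer the request and acknowledge immediately,
// without waiting for the transaction to be processed.
func Enqueue(queue chan<- TxRequest, req TxRequest) error {
	select {
	case queue <- req:
		return nil
	default:
		return ErrQueueFull
	}
}

// Consume is step (2): take requests from the queue no faster than one per
// interval and hand each of them to process. It returns once the queue has
// been closed and drained. The interval must be positive.
func Consume(queue <-chan TxRequest, interval time.Duration, process func(TxRequest)) {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for req := range queue {
		<-ticker.C // wait for the next processing slot
		process(req)
	}
}

func main() {
	queue := make(chan TxRequest, 100)
	for i := 1; i <= 3; i++ {
		req := TxRequest{EntityID: fmt.Sprintf("wheelset-%d", i)}
		if err := Enqueue(queue, req); err != nil {
			fmt.Println("rejected:", err)
		}
	}
	close(queue)

	// The interval plays the role of the "speed specified in the config".
	Consume(queue, 50*time.Millisecond, func(r TxRequest) {
		fmt.Println("submitting", r.EntityID)
	})
}
```

In a real backend, `process` would initiate the transaction and update the status store, and the producer and consumer would run in separate goroutines or processes.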

Other tools

Nothing was said here about the chaincode because, as a rule, there is nothing to optimize in it. Chaincode should be as simple and secure as possible, and that is all that is required of it. The CCKit framework from S7 Techlab and the static analyzer revive^CC help us a lot in writing chaincode simply and safely.

In addition, our team is developing a set of utilities to make working with Fabric simple and enjoyable: a blockchain explorer, a utility for automatically changing the network configuration (adding / deleting organizations and RAFT nodes), and a utility for revoking certificates and removing identities. If you want to contribute, you are welcome.

Conclusion

This approach makes it easy to replace Hyperledger Fabric with Quorum or other private Ethereum networks (PoA or even PoW) and to significantly reduce the real throughput while still maintaining a normal UX (both for users in the browser and for integrated systems). When replacing Fabric with Ethereum in the scheme, you only need to change the logic of the retry service / decorator from handling MVCC conflicts to atomically incrementing the nonce and resending. Buffering and the status store made it possible to decouple the response time from the block formation time. Now you can add thousands of ordering nodes and not be afraid that blocks are formed too often and load the ordering service.

In general, that's all I wanted to share. I would be glad if this helps someone in their work.