Our team at Sberbank is developing a session data service that organizes the exchange of a single Java session context between distributed applications. Our service urgently needs very fast serialization of Java objects, since this is part of our mission-critical task. The first candidates that came to mind were Google Protocol Buffers, Apache Thrift, Apache Avro, CBOR and others. The first three of these libraries require a schema that describes the data of the objects being serialized. CBOR is so low-level that it can only serialize scalar values and collections of them. What we needed was a Java serialization library that "didn't ask too many questions" and didn't force us to manually break serializable objects down "into atoms". We wanted to serialize arbitrary Java objects while knowing practically nothing about them, and we wanted to do it as fast as possible. So we organized a competition among the available open-source solutions to the Java serialization problem.

Competitors

For the competition, we selected the most popular Java serialization libraries, mostly ones that use a binary format, as well as libraries that performed well in other Java serialization reviews. Here we go!

Race

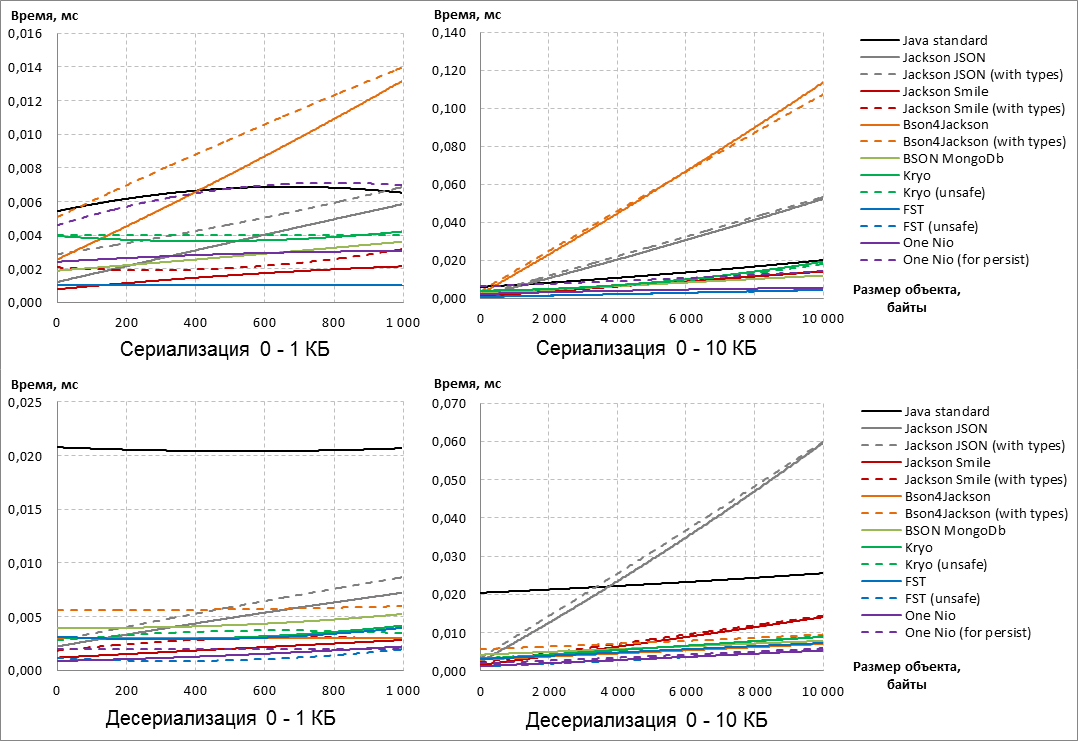

Speed is the main criterion for evaluating the Java serialization libraries taking part in our impromptu competition. To evaluate objectively which library is faster, we took real data from our system's logs and composed synthetic session data of different sizes from it, from 0 to 1 MB. The data consisted of strings and byte arrays.

Note: looking ahead, the winners and losers were already clear for objects from 0 to 10 KB. Increasing the object size further, up to 1 MB, did not change the outcome of the competition.

For clarity, the Java serializer performance graphs below are therefore limited to objects of up to 10 KB.
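The article does not show how the synthetic session data was assembled. As a hypothetical sketch only, a DTO made of strings and byte arrays with a controllable payload size could look like this; `SessionDto` and `SyntheticDtoFactory` are illustrative names, and the filler content stands in for the real log data we used.

```java
import java.io.Serializable;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical session DTO holding only strings and byte arrays,
// mirroring the kind of data described above.
class SessionDto implements Serializable {
    private static final long serialVersionUID = 1L;

    final Map<String, String> attributes = new LinkedHashMap<>();
    final List<byte[]> blobs = new ArrayList<>();

    // Payload size in bytes: UTF-8 length of attribute values plus blob lengths
    // (attribute keys are deliberately not counted).
    int payloadSize() {
        int size = 0;
        for (String value : attributes.values()) {
            size += value.getBytes(StandardCharsets.UTF_8).length;
        }
        for (byte[] blob : blobs) {
            size += blob.length;
        }
        return size;
    }
}

// Hypothetical factory: real log data is replaced by deterministic filler.
final class SyntheticDtoFactory {
    private SyntheticDtoFactory() {}

    // Builds a DTO whose payload is exactly targetBytes long, alternating
    // string attributes and byte-array chunks of at most chunkSize bytes.
    static SessionDto create(int targetBytes, int chunkSize) {
        if (targetBytes < 0 || chunkSize <= 0) {
            throw new IllegalArgumentException("targetBytes >= 0 and chunkSize > 0 required");
        }
        SessionDto dto = new SessionDto();
        int remaining = targetBytes;
        for (int i = 0; remaining > 0; i++) {
            int n = Math.min(chunkSize, remaining);
            if (i % 2 == 0) {
                char[] chars = new char[n];
                Arrays.fill(chars, (char) ('a' + i % 26)); // ASCII: 1 byte per char in UTF-8
                dto.attributes.put("attr" + i, new String(chars));
            } else {
                byte[] blob = new byte[n];
                Arrays.fill(blob, (byte) i);
                dto.blobs.add(blob);
            }
            remaining -= n;
        }
        return dto;
    }
}
```

Sweeping `targetBytes` over 0, 100, 200, ... then yields one DTO per point on the graphs.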

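Important: our applications run on the IBM JRE, where One Nio did not work out of the box. One Nio uses the sun.reflect.MagicAccessorImpl class to write private final fields during deserialization, but the IBM JRE does not support sun.reflect.MagicAccessorImpl the way One Nio expects, which leads to errors at runtime.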

To solve this problem (see also the Serialization FAQ for One Nio), we made a fork of the library that does not use sun.reflect.MagicAccessorImpl. Instead of sun.reflect.MagicAccessorImpl, the fork relies on sun.misc.Unsafe.

In addition, our fork optimizes string serialization: on the IBM JRE, strings are serialized 30-40% faster.

For this reason, all One Nio results in this article were obtained with our fork rather than the original library.
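The fork's source is not part of this article. Assuming it uses sun.misc.Unsafe the way serializers commonly do, the general technique is sketched below: create an instance without running any constructor, then write private final fields directly by memory offset. `Point` and `UnsafeFieldWriter` are hypothetical names; this is not One Nio code.

```java
import java.lang.reflect.Field;
import sun.misc.Unsafe;

// Example class with private final fields and no no-arg constructor.
final class Point {
    private final int x;
    private final String label;

    Point(int x, String label) {
        this.x = x;
        this.label = label;
    }

    int x() { return x; }
    String label() { return label; }
}

final class UnsafeFieldWriter {
    private static final Unsafe UNSAFE = loadUnsafe();

    // Unsafe has no public constructor; the singleton lives in the private static field "theUnsafe".
    private static Unsafe loadUnsafe() {
        try {
            Field f = Unsafe.class.getDeclaredField("theUnsafe");
            f.setAccessible(true);
            return (Unsafe) f.get(null);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("sun.misc.Unsafe is not available", e);
        }
    }

    // What a deserializer needs: an instance created without calling a constructor,
    // whose private final fields are then filled in directly.
    static Point readPoint(int x, String label) throws Exception {
        Point p = (Point) UNSAFE.allocateInstance(Point.class);
        long xOffset = UNSAFE.objectFieldOffset(Point.class.getDeclaredField("x"));
        long labelOffset = UNSAFE.objectFieldOffset(Point.class.getDeclaredField("label"));
        UNSAFE.putInt(p, xOffset, x);
        UNSAFE.putObject(p, labelOffset, label);
        return p;
    }
}
```

Unsafe is an unsupported internal API: javac warns about it, and newer JDKs deprecate its memory-access methods, so this is only a sketch of the approach.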

Serialization and deserialization speed was measured directly with the Java Microbenchmark Harness (JMH), an OpenJDK tool for building and running benchmarks. For each measurement (one point on a graph), 5 seconds were spent warming up the JVM and another 5 seconds on the measurement itself, and the results were then averaged.

UPD: the JMH benchmark code, with some details omitted:

```java
import java.io.IOException;

import org.openjdk.jmh.annotations.Benchmark;
import org.openjdk.jmh.annotations.Level;
import org.openjdk.jmh.annotations.Param;
import org.openjdk.jmh.annotations.Scope;
import org.openjdk.jmh.annotations.Setup;
import org.openjdk.jmh.annotations.State;
import org.openjdk.jmh.annotations.TearDown;

// Serializer, Serializers and DtoFactory are project-specific helpers not shown here
public class SerializationPerformanceBenchmark {

    @State( Scope.Benchmark )
    public static class Parameters {

        // Library/configuration under test
        @Param( {
            "Java standard",
            "Jackson default",
            "Jackson system",
            "JacksonSmile default",
            "JacksonSmile system",
            "Bson4Jackson default",
            "Bson4Jackson system",
            "Bson MongoDb",
            "Kryo default",
            "Kryo unsafe",
            "FST default",
            "FST unsafe",
            "One-Nio default",
            "One-Nio for persist"
        } )
        public String serializer;

        public Serializer serializerInstance;

        // Size of the test DTO, from 0 up to 1 MB
        @Param( { "0", "100", "200", "300", /* ... */ "1000000" } )
        public int sizeOfDto;

        public Object dtoInstance;

        public byte[] serializedDto;

        @Setup( Level.Trial )
        public void setup() throws IOException {
            serializerInstance = Serializers.getMap().get( serializer );
            dtoInstance = DtoFactory.createWorkflowDto( sizeOfDto );
            // Serialize once up front so the deserialization benchmark has input
            serializedDto = serializerInstance.serialize( dtoInstance );
        }

        @TearDown( Level.Trial )
        public void tearDown() {
            serializerInstance = null;
            dtoInstance = null;
            serializedDto = null;
        }
    }

    @Benchmark
    public byte[] serialization( Parameters parameters ) throws IOException {
        return parameters.serializerInstance.serialize(
                parameters.dtoInstance );
    }

    @Benchmark
    public Object unserialization( Parameters parameters ) throws IOException, ClassNotFoundException {
        return parameters.serializerInstance.deserialize(
                parameters.serializedDto,
                parameters.dtoInstance.getClass() );
    }
}
```
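The benchmark depends on `Serializer` and `Serializers`, which the article does not show. The sketch below is a hypothetical reconstruction of that contract, inferred only from how the benchmark calls it, together with one possible adapter for the "Java standard" configuration built on the JDK's `ObjectOutputStream`/`ObjectInputStream`. The other libraries would each need their own adapter.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical contract inferred from the benchmark's calls.
interface Serializer {
    byte[] serialize(Object object) throws IOException;

    Object deserialize(byte[] bytes, Class<?> type) throws IOException, ClassNotFoundException;
}

// "Java standard": the JDK's built-in object streams.
final class JavaStandardSerializer implements Serializer {
    @Override
    public byte[] serialize(Object object) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(object);
        } // closing flushes the object stream into the byte buffer
        return bytes.toByteArray();
    }

    @Override
    public Object deserialize(byte[] bytes, Class<?> type) throws IOException, ClassNotFoundException {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            // The stream carries its own class descriptors; type only checks the result.
            return type.cast(in.readObject());
        }
    }
}

// Hypothetical registry keyed by the @Param names used in the benchmark.
final class Serializers {
    private static final Map<String, Serializer> MAP = new LinkedHashMap<>();

    static {
        MAP.put("Java standard", new JavaStandardSerializer());
        // adapters for the other libraries/configurations would be registered here
    }

    private Serializers() {}

    static Map<String, Serializer> getMap() {
        return MAP;
    }
}
```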

Here's what happened: First, we note that library options that add additional meta-data to the serialization result are slower than the default configurations of the same libraries (see the “with types” and “for persist” configurations). In general, regardless of the configuration, Jackson JSON and Bson4Jackson, who are out of the race, become outsiders according to the results of serialization . In addition, Java Standard drops out of the race based on deserialization results , as for any size of serializable data, deserialization is much slower than competitors. Take a closer look at the remaining participants: According to the results of serialization, the FST library is in confident leaders

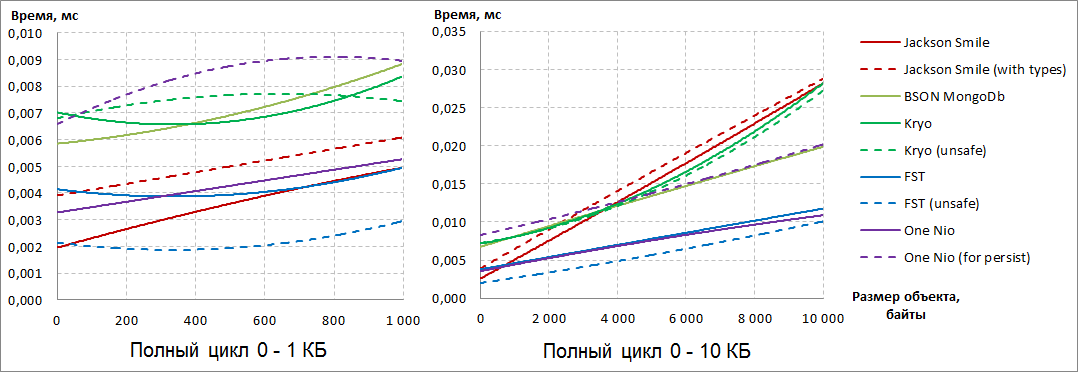

, and with an increase in the size of objects, One Nio “steps on her heels” . Note that for One Nio, the “for persist” option is much slower than the default configuration for serialization speed.If you look at deserialization, we see that One Nio was able to overtake FST with increasing data size . In the latter, on the contrary, the non-standard configuration “unsafe” performs deserialization much faster.In order to put all the points over AND, let's look at the total result of serialization and deserialization: It became obvious that there are two unequivocal leaders: FST (unsafe) and One Nio . If on small objects FST (unsafe)

, and with an increase in the size of objects, One Nio “steps on her heels” . Note that for One Nio, the “for persist” option is much slower than the default configuration for serialization speed.If you look at deserialization, we see that One Nio was able to overtake FST with increasing data size . In the latter, on the contrary, the non-standard configuration “unsafe” performs deserialization much faster.In order to put all the points over AND, let's look at the total result of serialization and deserialization: It became obvious that there are two unequivocal leaders: FST (unsafe) and One Nio . If on small objects FST (unsafe) confidently leads, then with the increase in the size of serializable objects, he begins to concede and, ultimately, inferior to One Nio .The third position with the increase in the size of serializable objects is confidently taken by BSON MongoDb , although it is almost two times ahead of the leaders.

confidently leads, then with the increase in the size of serializable objects, he begins to concede and, ultimately, inferior to One Nio .The third position with the increase in the size of serializable objects is confidently taken by BSON MongoDb , although it is almost two times ahead of the leaders.Weighing

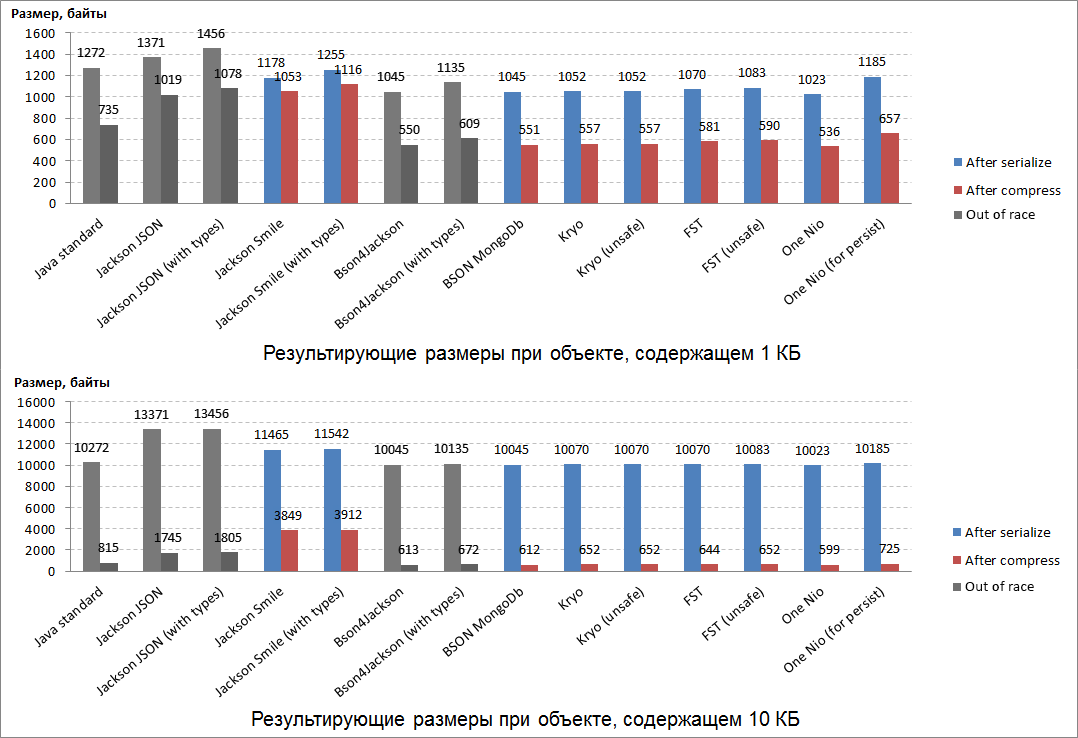

The size of the serialization result is the second most important criterion for evaluating Java serialization libraries. To some extent, serialization/deserialization speed depends on the size of the result: a compact result is faster to produce and process than a bulky one. To "weigh" the serialization results, we used the same Java objects built from real data taken from the system logs (strings and byte arrays).

Another important property of a serialization result is how well it compresses (for example, before storing it in a database or other storage). In our competition, we used the Deflate compression algorithm, which underlies ZIP and gzip.

The results of the "weighing" were as follows. As expected, the most compact results came from one of the race leaders, One Nio. Second place in compactness went to BSON MongoDb (third in the race). Third place in compactness unexpectedly went to Kryo, which had not distinguished itself in the race. The serialization results of these three "weighing" leaders also compress very well (almost twofold). The least compressible turned out to be JSON itself and its binary equivalent, Smile. A curious fact: all the "weighing" winners add the same amount of service data to both small and large serialized objects.
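A compression ratio like the "almost twofold" figure above can be measured with the JDK's built-in `java.util.zip.Deflater`. The sketch below assumes the default compression level, since the article does not say which level we used; `CompressionCheck` is a hypothetical helper name.

```java
import java.util.zip.Deflater;

final class CompressionCheck {
    private CompressionCheck() {}

    // Compresses data with Deflate (the algorithm behind ZIP and gzip)
    // and returns the number of compressed bytes.
    static int deflatedSize(byte[] data) {
        Deflater deflater = new Deflater(Deflater.DEFAULT_COMPRESSION);
        try {
            deflater.setInput(data);
            deflater.finish();
            byte[] buffer = new byte[4096];
            int total = 0;
            while (!deflater.finished()) {
                total += deflater.deflate(buffer); // only the count matters here
            }
            return total;
        } finally {
            deflater.end(); // release native zlib resources
        }
    }

    // Ratio > 1 means the serialized form shrinks (2.0 = half the original size).
    static double compressionRatio(byte[] serialized) {
        return serialized.length == 0 ? 1.0 : (double) serialized.length / deflatedSize(serialized);
    }
}
```

Feeding it the `byte[]` produced by each serializer gives the "raw vs. compressed" pair for every competitor.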

Flexibility

Before making the important decision of choosing a winner, we decided to thoroughly check each serializer's flexibility and usability. For this, we compiled 20 criteria for evaluating the serializers in the competition, so that nothing would slip past us.

Footnotes with explanations:

1 LinkedHashMap.

2 — , — .

3 — , — .

4 sun.reflect.MagicAccessorImpl — : boxing/unboxing, BigInteger/BigDecimal/String. MagicAccessorImpl ( ' fork One Nio) — .

5 ArrayList.

6 ArrayList HashSet .

7 HashMap.

8 — , , /Map-, ( HashMap).

9 -.

10 One Nio — , ' fork- — .

11 .

UPD: One Nio (for persist) received one more point for criterion 13, bringing its total to 19.

This meticulous "examination of the applicants" was perhaps the most time-consuming stage of our "casting", but the resulting comparison shows clearly how convenient each serialization library is to use, so you can use it as a reference.

Disappointingly, our leaders in the race and the weighing, FST (unsafe) and One Nio, turned out to be outsiders in terms of flexibility... However, one curious fact caught our attention: One Nio in the "for persist" configuration (neither the fastest nor the most compact) scored the most flexibility points, 19/20. Making the default (fast and compact) One Nio configuration work just as flexibly also looked very attractive, and there turned out to be a way.

At the very beginning, when we introduced the competitors, we mentioned that One Nio (for persist) includes detailed meta-information about the class of the serialized Java object in the serialization result(*). Using this meta-information during deserialization, the One Nio library knows exactly what the class of the serialized object looked like at the time of serialization. It is this knowledge that makes the One Nio deserialization algorithm flexible enough to provide maximum compatibility for the byte[] produced by serialization.

It turned out that the meta-information (*) can be obtained separately for a given class, serialized to byte[], and sent to the side where Java objects of this class will be deserialized. In code, step by step:

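```java
import one.nio.serial.Repository;

// serializeByDefaultOneNioAlgorithm(...) and deserializeByOneNio(...) are helper
// methods around the default One Nio serialization; they are not shown here.

// Service #1: obtain the meta-information (class description) for SomeDto once
one.nio.serial.Serializer<SomeDto> dtoSerializerWithMeta = Repository.get( SomeDto.class );
byte[] dtoMeta = serializeByDefaultOneNioAlgorithm( dtoSerializerWithMeta );

// Transfer dtoMeta from service #1 to service #2

// Service #2: restore the class description and register it locally
one.nio.serial.Serializer<SomeDto> dtoSerializerWithMeta = deserializeByOneNio( dtoMeta );
Repository.provideSerializer( dtoSerializerWithMeta );

// Service #1: serialize objects with the default (fast and compact) configuration
byte[] bytes1 = serializeByDefaultOneNioAlgorithm( object1 );
byte[] bytes2 = serializeByDefaultOneNioAlgorithm( object2 );
// ...

// Service #2: deserialize them using the registered class description
SomeDto object1 = deserializeByOneNio( bytes1 );
SomeDto object2 = deserializeByOneNio( bytes2 );
// ...
```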
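To illustrate the underlying idea without relying on One Nio internals, here is a plain-Java sketch of "send the class description once, then send values only". It is not One Nio's format or API: `ClassMeta`, `MetaOnceExchange`, `SomeDtoV1` and `SomeDtoV2` are hypothetical, fields are matched by name, and values stay in an `Object[]` instead of bytes to keep it short. `SomeDtoV1` and `SomeDtoV2` play two versions of the same class on the sender and receiver sides.

```java
import java.lang.reflect.Constructor;
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Sender's version still has legacyNote; the receiver dropped it and added addedLater.
class SomeDtoV1 {
    String id;
    int count;
    String legacyNote;
}

class SomeDtoV2 {
    String id;
    int count;
    String addedLater;
}

// Meta-information: the sender's instance field names, in the order values are written.
final class ClassMeta {
    final List<String> fieldNames;

    ClassMeta(List<String> fieldNames) {
        this.fieldNames = Collections.unmodifiableList(new ArrayList<>(fieldNames));
    }
}

final class MetaOnceExchange {
    private MetaOnceExchange() {}

    // Sender, once per class: describe the class layout.
    static ClassMeta describe(Class<?> type) {
        List<String> names = new ArrayList<>();
        for (Field f : type.getDeclaredFields()) {
            if (!Modifier.isStatic(f.getModifiers()) && !f.isSynthetic()) {
                names.add(f.getName());
            }
        }
        return new ClassMeta(names);
    }

    // Sender, per object: values only, no field names.
    static Object[] write(ClassMeta meta, Object obj) throws ReflectiveOperationException {
        Object[] values = new Object[meta.fieldNames.size()];
        for (int i = 0; i < values.length; i++) {
            Field f = obj.getClass().getDeclaredField(meta.fieldNames.get(i));
            f.setAccessible(true);
            values[i] = f.get(obj);
        }
        return values;
    }

    // Receiver, per object: map the sender's fields onto the local class by name.
    static <T> T read(ClassMeta senderMeta, Object[] values, Class<T> localType)
            throws ReflectiveOperationException {
        Constructor<T> ctor = localType.getDeclaredConstructor();
        ctor.setAccessible(true);
        T instance = ctor.newInstance();
        for (int i = 0; i < values.length; i++) {
            Field local;
            try {
                local = localType.getDeclaredField(senderMeta.fieldNames.get(i));
            } catch (NoSuchFieldException removedLocally) {
                continue; // field no longer exists on the receiver: skip its value
            }
            local.setAccessible(true);
            local.set(instance, values[i]); // Field.set unboxes Integer into int fields
        }
        return instance; // fields unknown to the sender keep their default values
    }
}
```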

If services perform this explicit exchange of class meta-information, they can send each other Java objects serialized with the default (fast and compact) One Nio configuration. After all, while the services are running, the class versions on each side do not change, so there is no need to drag the same constant meta-information back and forth inside every serialization result on every interaction. With a little extra work up front, you get the speed and compactness of One Nio together with the flexibility of One Nio (for persist). Exactly what we needed!

As a result, for transferring Java objects between distributed services in serialized form (the task we organized this competition for), One Nio won on flexibility (19/20).

Among the Java serializers that distinguished themselves earlier in the race and the weighing, decent flexibility was also shown by:

- BSON MongoDb (14.5/20),
- Kryo (13/20).

Podium

Let's recall the results of the previous rounds of our Java serialization competition:

- in the race, FST (unsafe) and One Nio shared the top two places, and BSON MongoDb took third;
- One Nio won the weighing, followed by BSON MongoDb and Kryo;
- in flexibility, specifically for our task of exchanging session context between distributed applications, One Nio again came first, and BSON MongoDb and Kryo also did well.

Thus, taking all the results together, our podium looks like this:

- One Nio

In the main event, the race, it shared first place with FST (unsafe), but clearly outweighed its rival in the weighing and the flexibility tests.

- FST (unsafe)

Also a very fast Java serialization library, but it lacks forward and backward compatibility of the byte arrays produced by serialization.

- BSON MongoDB + Kryo

2 3- Java-, . 2- , . Collection Map, BSON MongoDB custom- / (Externalizable ..).

At Sberbank, in our session data service, we adopted One Nio, the winner of our competition. With this library, Java session context data is serialized and transferred between applications. Thanks to this change, session transport became several times faster. Load testing showed that in scenarios close to the actual behavior of Sberbank Online users, this improvement alone yielded a speedup of up to 40%. This means shorter system response times to user actions, which increases our customers' satisfaction.

In the next article, I will try to demonstrate in action the additional speedup One Nio gains from the sun.reflect.MagicAccessorImpl class. Unfortunately, the IBM JRE does not support the most important properties of this class, so the full potential of One Nio on this JRE has not yet been revealed. To be continued.