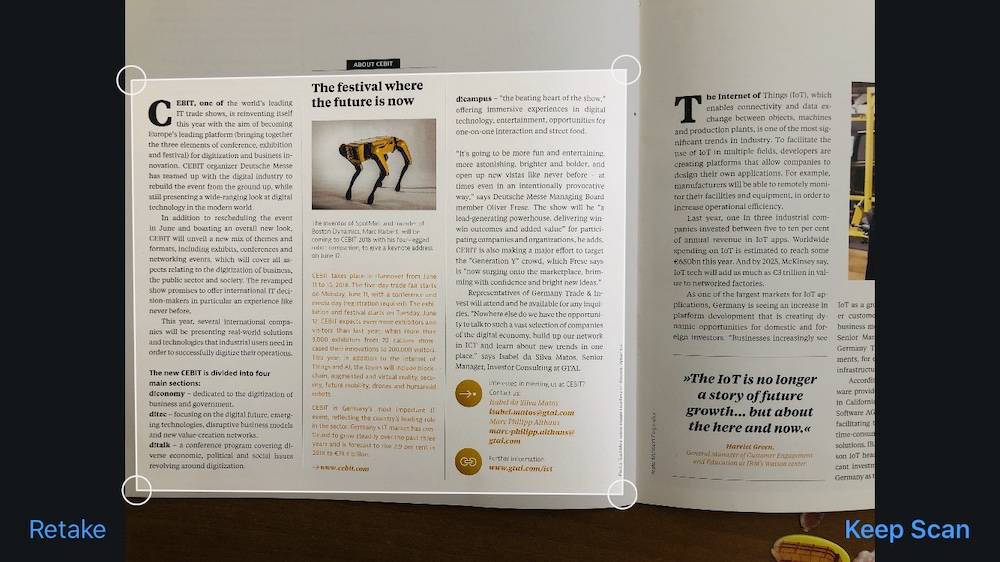

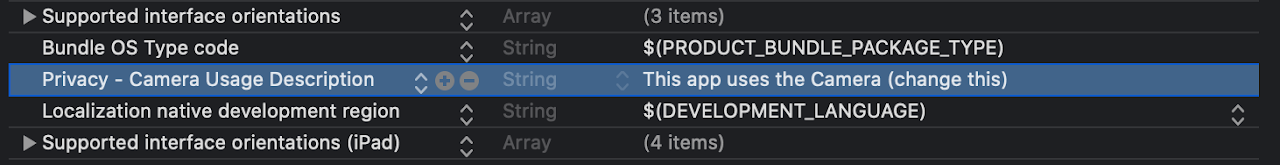

Today we will scan a document and display the text recognized in it. No third-party libraries are needed for this: VisionKit handles the scanning and Vision handles the text recognition.

First, make sure you have Xcode 11 and iOS 13 installed, then create a new project with Storyboard support. We'll scan using the camera, so we need to add NSCameraUsageDescription to Info.plist; without it, the application will crash when it tries to access the camera.
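Because a missing key only shows up as a crash at scan time, it can help to check for it early during development. The sketch below is just an illustration: `hasCameraUsageDescription` is a hypothetical helper, not part of VisionKit, and it takes the dictionary as a parameter so it can be tried without an app bundle.

```swift
import Foundation

/// Hypothetical helper: returns true when the given Info.plist dictionary
/// contains a non-empty NSCameraUsageDescription string.
func hasCameraUsageDescription(in info: [String: Any]?) -> Bool {
    guard let text = info?["NSCameraUsageDescription"] as? String else {
        return false
    }
    // A key that is present but blank is treated as missing.
    return !text.trimmingCharacters(in: .whitespacesAndNewlines).isEmpty
}
```

In an app, this could be called as `assert(hasCameraUsageDescription(in: Bundle.main.infoDictionary))` early in `viewDidLoad`.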

Scanning

For scanning documents, we use the VisionKit framework. To open the scanning screen, create an instance of VNDocumentCameraViewController and present it:

```swift
let scanner = VNDocumentCameraViewController()
scanner.delegate = self
present(scanner, animated: true)
```
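Not every device or environment can show the scanner, so it may be worth checking `VNDocumentCameraViewController.isSupported` before presenting it. A minimal sketch, assuming a hypothetical helper named `presentScannerIfSupported` (it is not part of the original project); the `#if` guard only keeps the snippet compilable on platforms without UIKit or VisionKit:

```swift
#if canImport(UIKit) && canImport(VisionKit)
import UIKit
import VisionKit

@available(iOS 13.0, *)
extension UIViewController {
    /// Hypothetical convenience: presents the document scanner only when
    /// the current device supports it. Returns false when it does not,
    /// so the caller can show an alert or fall back to another flow.
    @discardableResult
    func presentScannerIfSupported(delegate: VNDocumentCameraViewControllerDelegate) -> Bool {
        guard VNDocumentCameraViewController.isSupported else { return false }
        let scanner = VNDocumentCameraViewController()
        scanner.delegate = delegate
        present(scanner, animated: true)
        return true
    }
}
#endif
```

From a view controller that adopts the delegate protocol, the call would look like `presentScannerIfSupported(delegate: self)`.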

Make ViewController conform to VNDocumentCameraViewControllerDelegate:

```swift
class ViewController: UIViewController, VNDocumentCameraViewControllerDelegate {
    ...
}
```

If the user taps “Cancel” or an error occurs, dismiss the scanner:

```swift
func documentCameraViewControllerDidCancel(_ controller: VNDocumentCameraViewController) {
    controller.dismiss(animated: true)
}

func documentCameraViewController(_ controller: VNDocumentCameraViewController, didFailWithError error: Error) {
    controller.dismiss(animated: true)
}
```

After the user finishes scanning and taps “Save”, the following delegate method is called:

```swift
func documentCameraViewController(_ controller: VNDocumentCameraViewController, didFinishWith scan: VNDocumentCameraScan) {
    for i in 0 ..< scan.pageCount {
        // Each scanned page is delivered as a UIImage.
        let img = scan.imageOfPage(at: i)
    }
    controller.dismiss(animated: true)
}
```

Each page can be processed individually.

Text recognition

Now that scanning works, let's extract the text. To keep the UI responsive, we will perform recognition in the background. To do this, create a DispatchQueue:

```swift
lazy var workQueue = {
    return DispatchQueue(label: "workQueue", qos: .userInitiated, attributes: [], autoreleaseFrequency: .workItem)
}()
```
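A queue created this way is serial, because `.concurrent` is not passed in `attributes`, so pages submitted one after another are processed in the order they were submitted. A small framework-free sketch of that behavior; `demoQueue` and `PageLog` are illustrative names, not part of the project:

```swift
import Foundation

/// Illustrative recorder. Access is serialized by the queue below,
/// which is why it is marked @unchecked Sendable.
final class PageLog: @unchecked Sendable {
    private(set) var pages: [Int] = []
    func record(_ page: Int) { pages.append(page) }
}

// A serial queue configured like workQueue (no .concurrent attribute).
let demoQueue = DispatchQueue(label: "demoQueue", qos: .userInitiated,
                              attributes: [], autoreleaseFrequency: .workItem)
let log = PageLog()

// Submit three "pages"; each block stands in for one recognition job.
for page in 0 ..< 3 {
    demoQueue.async {
        log.record(page)
    }
}

// On a serial queue, sync runs only after all previously submitted blocks,
// so it works as a simple wait point here.
demoQueue.sync {}
print(log.pages) // [0, 1, 2]
```

This ordering is what lets the recognized text be appended page by page without extra bookkeeping.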

For recognition, we need a VNImageRequestHandler initialized with the image, and a VNRecognizeTextRequest. The request supports the options recognitionLevel, customWords, and recognitionLanguages, and takes a completion handler that delivers the result as text. In the handler, we take the best candidate for each observation and display the combined text:

```swift
lazy var textRecognitionRequest: VNRecognizeTextRequest = {
    // Capture self weakly: the request is stored on self, so a strong capture would create a retain cycle.
    let req = VNRecognizeTextRequest { [weak self] (request, error) in
        guard let observations = request.results as? [VNRecognizedTextObservation] else { return }
        var resultText = ""
        for observation in observations {
            // Skip an observation without candidates instead of abandoning the whole result.
            guard let topCandidate = observation.topCandidates(1).first else { continue }
            resultText += topCandidate.string
            resultText += "\n"
        }
        // UI updates must happen on the main thread.
        DispatchQueue.main.async {
            self?.txt.text = resultText
        }
    }
    return req
}()
```
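Each candidate returned by `topCandidates(_:)` also carries a `confidence` value between 0 and 1, which could be used to drop unreliable lines before displaying them. The sketch below models that filtering without Vision so it can run anywhere. `RecognizedLine`, `joinLines`, the 0.5 threshold, and the sample strings are all illustrative assumptions; `RecognizedLine` merely stands in for the `string` and `confidence` properties of `VNRecognizedText`.

```swift
/// Stand-in for VNRecognizedText: only the two fields used here.
struct RecognizedLine {
    let string: String
    let confidence: Float
}

/// Keeps lines whose confidence reaches the threshold and joins them
/// with newlines, preserving the order in which they arrived.
func joinLines(_ lines: [RecognizedLine], minConfidence: Float = 0.5) -> String {
    return lines
        .filter { $0.confidence >= minConfidence }
        .map { $0.string }
        .joined(separator: "\n")
}

// Made-up sample data, not real recognition output.
let sample = [
    RecognizedLine(string: "Invoice 2019", confidence: 0.9),
    RecognizedLine(string: "l|l1!", confidence: 0.3),
    RecognizedLine(string: "Total: 42", confidence: 1.0),
]
print(joinLines(sample))
// Invoice 2019
// Total: 42
```

In the real completion handler, the same filter would be applied to `topCandidate.confidence` before appending `topCandidate.string`.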

The request is performed by a VNImageRequestHandler on the background queue:

```swift
func recognizeText(inImage: UIImage) {
    guard let cgImage = inImage.cgImage else { return }
    workQueue.async {
        let requestHandler = VNImageRequestHandler(cgImage: cgImage, options: [:])
        do {
            try requestHandler.perform([self.textRecognitionRequest])
        } catch {
            print(error)
        }
    }
}
```
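The options mentioned earlier (recognitionLevel, customWords, recognitionLanguages) are plain properties that can be set on the request before it is performed. A hedged sketch follows: `makeTextRequest` is a hypothetical factory, and the values chosen are examples rather than settings from the original project. The `#if` guard only keeps the snippet compilable where Vision is unavailable.

```swift
#if canImport(Vision)
import Vision

/// Hypothetical factory that builds a configured text recognition request.
@available(iOS 13.0, macOS 10.15, tvOS 13.0, *)
func makeTextRequest(completion: @escaping VNRequestCompletionHandler) -> VNRecognizeTextRequest {
    let request = VNRecognizeTextRequest(completionHandler: completion)
    // .accurate favors quality over speed; .fast trades accuracy for speed.
    request.recognitionLevel = .accurate
    // Languages to try, in priority order (example value).
    request.recognitionLanguages = ["en-US"]
    // Extra vocabulary for the word-recognition stage (example values).
    // Custom words are only used when language correction is enabled.
    request.customWords = ["VisionKit", "Xcode"]
    request.usesLanguageCorrection = true
    return request
}
#endif
```

The returned request can be passed to `requestHandler.perform(_:)` exactly like `textRecognitionRequest`.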

Complete ViewController

```swift
import UIKit
import Vision
import VisionKit

class ViewController: UIViewController, VNDocumentCameraViewControllerDelegate {

    @IBOutlet weak var txt: UITextView!

    // Serial background queue, so recognition does not block the UI.
    lazy var workQueue = {
        return DispatchQueue(label: "workQueue", qos: .userInitiated, attributes: [], autoreleaseFrequency: .workItem)
    }()

    lazy var textRecognitionRequest: VNRecognizeTextRequest = {
        // Capture self weakly: the request is stored on self, so a strong capture would create a retain cycle.
        let req = VNRecognizeTextRequest { [weak self] (request, error) in
            guard let observations = request.results as? [VNRecognizedTextObservation] else { return }
            var resultText = ""
            for observation in observations {
                // Skip an observation without candidates instead of abandoning the whole result.
                guard let topCandidate = observation.topCandidates(1).first else { continue }
                resultText += topCandidate.string
                resultText += "\n"
            }
            // UI updates must happen on the main thread.
            DispatchQueue.main.async {
                guard let self = self else { return }
                // Append, so that text from every scanned page is kept.
                self.txt.text = self.txt.text + "\n" + resultText
            }
        }
        return req
    }()

    @IBAction func startScan(_ sender: Any) {
        txt.text = ""
        let scanner = VNDocumentCameraViewController()
        scanner.delegate = self
        present(scanner, animated: true)
    }

    func recognizeText(inImage: UIImage) {
        guard let cgImage = inImage.cgImage else { return }
        workQueue.async {
            let requestHandler = VNImageRequestHandler(cgImage: cgImage, options: [:])
            do {
                try requestHandler.perform([self.textRecognitionRequest])
            } catch {
                print(error)
            }
        }
    }

    func documentCameraViewController(_ controller: VNDocumentCameraViewController, didFinishWith scan: VNDocumentCameraScan) {
        for i in 0 ..< scan.pageCount {
            let img = scan.imageOfPage(at: i)
            recognizeText(inImage: img)
        }
        controller.dismiss(animated: true)
    }

    func documentCameraViewControllerDidCancel(_ controller: VNDocumentCameraViewController) {
        controller.dismiss(animated: true)
    }

    func documentCameraViewController(_ controller: VNDocumentCameraViewController, didFailWithError error: Error) {
        print(error)
        controller.dismiss(animated: true)
    }
}
```

What's next?

Documentation: developer.apple.com/documentation/vision and developer.apple.com/documentation/visionkit

WWDC Vision framework videos: developer.apple.com/videos/all-videos/?q=Vision

GitHub project: github.com/usenbekov/vision-demo