Optimizing storage: a case of unification and lower cost of ownership

This article describes how the storage infrastructure of a mid-sized company was optimized. It covers the justification for the transition, briefly describes the setup of the new storage system, and lists the pros and cons of switching to the selected system.

Introduction

The infrastructure of one of our customers consisted of many heterogeneous storage systems of different classes: from SOHO systems (QNAP, Synology) for user data to entry-level and mid-range storage systems (Eternus DX90 and DX600) serving iSCSI and FC for service data and virtualization systems. These systems differed both in generation and in the disks used, and some of them were legacy equipment without vendor support.

Managing free space was a separate problem, since the available disk space was highly fragmented across many systems. The result was inconvenient administration and a high cost of maintaining the fleet of systems.

We faced the task of optimizing the storage infrastructure in order to unify it and reduce the cost of ownership. Our company's experts analyzed the task comprehensively, taking into account the customer's requirements for data availability, IOPS, and RPO/RTO, as well as the possibility of upgrading the existing infrastructure.

Implementation

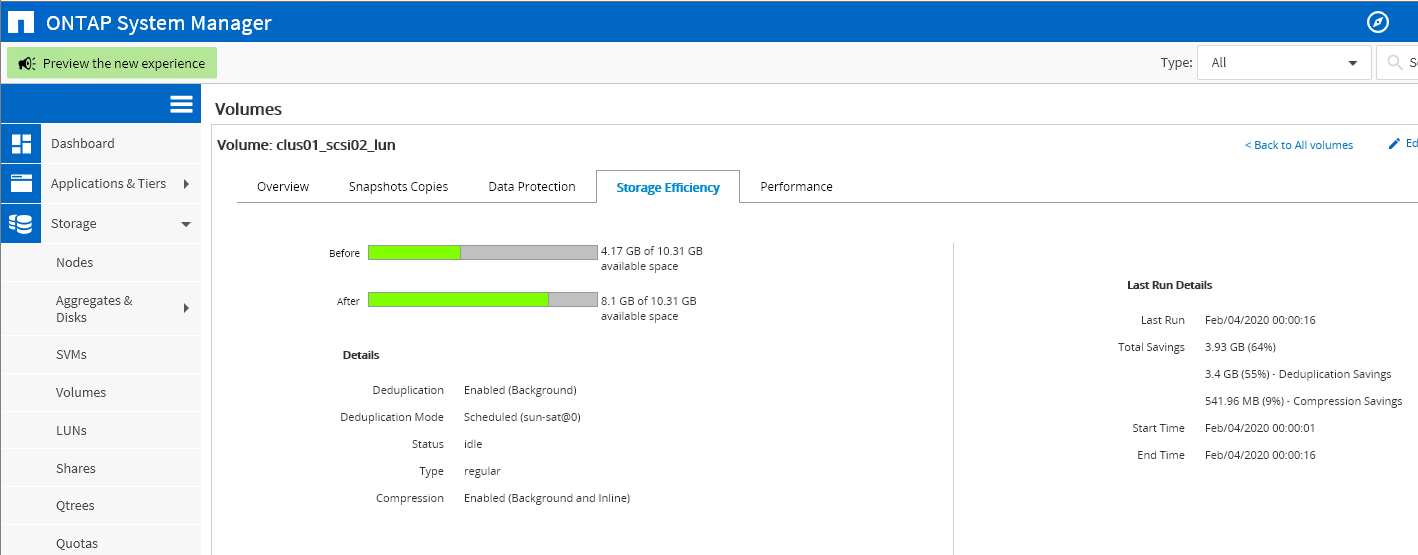

The main players in the market for mid-range storage systems (and above) are IBM with Storwize, Fujitsu with the Eternus line, and NetApp with the FAS series. We considered the following systems as candidates that meet the given requirements: IBM Storwize V7000U, Fujitsu Eternus DX100, and NetApp FAS2620. All three are unified storage systems, that is, they provide both block and file access, and they offer similar performance.

However, in the Storwize V7000U, file access is organized through a separate controller, a file module that connects to the main block controller, which is an additional point of failure. In addition, this system is relatively difficult to manage and does not provide proper isolation of services.

The Eternus DX100, also a unified storage system, has serious limits on the number of file systems that can be created and does not give the necessary isolation. In addition, creating a new file system takes a long time (up to half an hour). Neither of these two systems allows the CIFS/NFS servers in use to be separated at the network level.

Taking into account all parameters, including total cost of ownership, we chose the NetApp FAS2620, which consists of a pair of controllers operating in Active-Active mode and allows the load to be distributed between the controllers. Combined with the built-in inline deduplication and compression mechanisms, it can significantly reduce the space that data occupies on disk.
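
Why consolidation helps deduplication can be shown with a small sketch. It fingerprints fixed-size blocks and compares the number of blocks stored when each system deduplicates only its own data with the number stored in a single deduplication domain. The 4 KiB block size, the SHA-256 fingerprint, and the sample data are assumptions for illustration, not a description of how the storage system implements deduplication.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed fixed block size (4 KiB), for illustration only


def unique_blocks(data):
    """Return the set of fingerprints of the fixed-size blocks in `data`."""
    return {
        hashlib.sha256(data[i:i + BLOCK_SIZE]).hexdigest()
        for i in range(0, len(data), BLOCK_SIZE)
    }


def stored_blocks_separate(systems):
    """Blocks stored when every system deduplicates only its own data."""
    return sum(len(unique_blocks(data)) for data in systems)


def stored_blocks_consolidated(systems):
    """Blocks stored when all data shares one deduplication domain."""
    merged = set()
    for data in systems:
        merged |= unique_blocks(data)
    return len(merged)


# Hypothetical sample data: the same 8-block image kept on two systems,
# plus user data that is unique to each system.
os_image = b"".join(bytes([i]) * BLOCK_SIZE for i in range(8))
system_1 = os_image + b"\xaa" * BLOCK_SIZE * 2
system_2 = os_image + b"\xbb" * BLOCK_SIZE * 3

print(stored_blocks_separate([system_1, system_2]))      # 18
print(stored_blocks_consolidated([system_1, system_2]))  # 10
```

With this sample input, the shared image is stored twice when the systems are separate and only once after consolidation.
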
These mechanisms become much more effective when data is aggregated on one system than in the initial situation, when potentially identical data was spread across different storage systems and could not be deduplicated against each other.

Such a system made it possible to place all types of services under the control of a single failover cluster: SAN in the form of block devices for virtualization, and NAS in the form of CIFS and NFS shares for Windows user data and *nix systems. At the same time, these services can still be safely separated logically thanks to SVM (Storage Virtual Machine) technology: services responsible for different components do not affect their "neighbors" and cannot access them. It also remains possible to isolate services at the disk level, which avoids performance drops under heavy load from the "neighbors".

For services that require fast reads and writes, a hybrid RAID array can be used by adding several SSDs to an HDD aggregate. The system itself places "hot" data on them, reducing read latency for frequently used data. This comes in addition to the NVRAM cache, which, besides high write speed, ensures write atomicity and integrity in case of a sudden power failure: data stays in the battery-backed NVRAM until the file system confirms that it has been written completely.

After data is migrated to the new storage system, the cache disk space can be used more efficiently.

Positive sides

As mentioned above, the use of this system made it possible to solve two problems at once:

- Unification

- . , LUN, .

- . , . , Ethernet Fiber Channel .

- , , . .

—- NetApp SVM (Storage Virtual Machine), , , . SVM, . /.

- .

SVM , , VLAN-. , SVM, VLAN’. , trunk-.

iSCSI-, SAN- , , « » .

- .

- RAID- ( RAID- ), Volume. Volume SVM’, SVM’ . «» , «» SVM’ .

RAID- , .

—- . RAID-, .

- (CPU, RAM). storage-, , IO-, .

- NetApp S3- , on-premise , .

Negative sides

- With all services consolidated under one system, the failure of a single component predictably has a greater impact (1 out of 2 controllers versus 1 out of 10+ in the old infrastructure).
- The storage infrastructure is less distributed. Previously, storage systems could be located on different floors or in different buildings; now everything is concentrated in one rack. This can be offset by purchasing a lower-performance system and using synchronous or asynchronous replication in case of force majeure.

Step by step setup

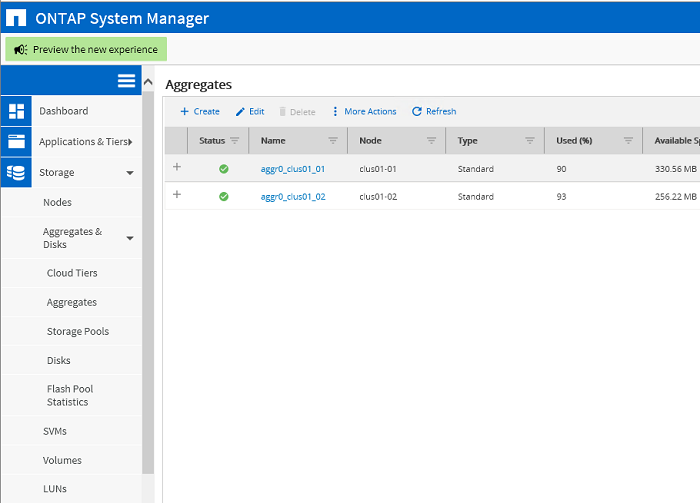

For confidentiality reasons, screenshots from the customer's real environment cannot be shown, so the configuration steps are shown in a test environment; they fully repeat the steps performed in the customer's production environment.

The initial state of the cluster: two aggregates for the root partitions of the corresponding cluster nodes, clus01_01 and clus01_02.

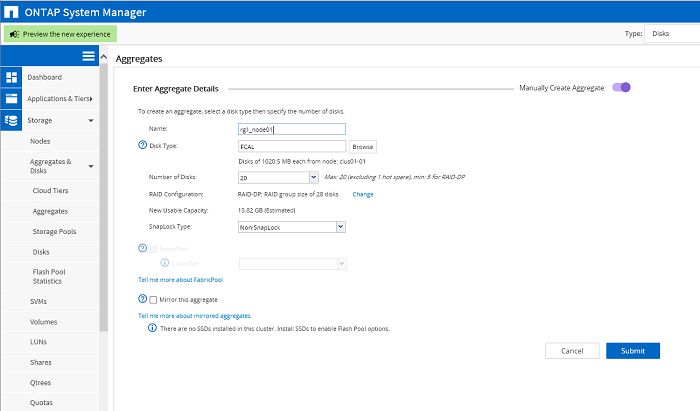

Creating aggregates for data. Each node has its own aggregate consisting of one RAID-DP array.

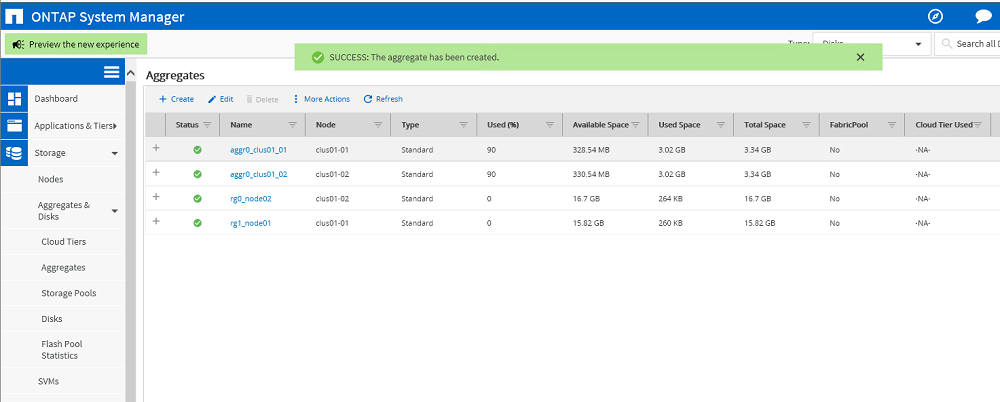

Result: two aggregates were created: rg0_node02, rg1_node01. There is no data on them yet.

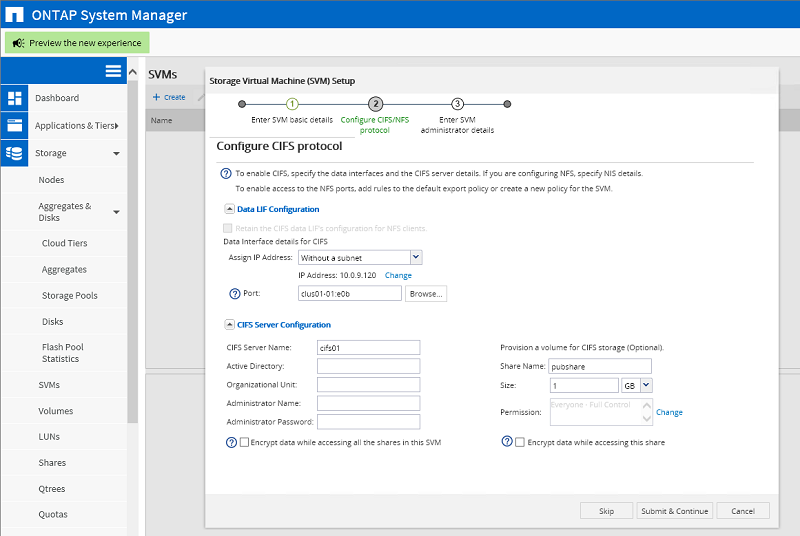

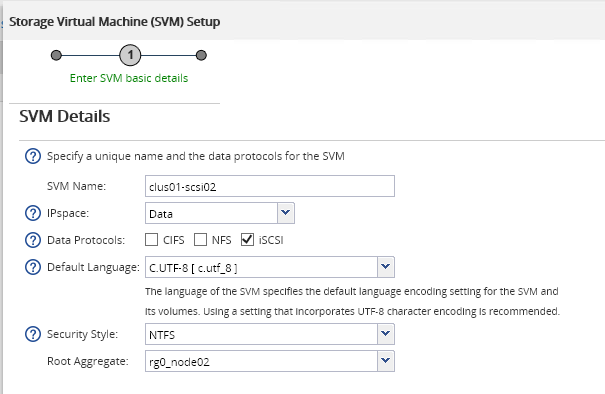

Creating an SVM as a CIFS server. An SVM must have a root volume, for which an aggregate is selected, here rg1_node01. This volume stores the SVM's individual settings.
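
The GUI steps so far (two data aggregates, then an SVM whose root volume is placed on rg1_node01) could also be expressed as ONTAP CLI commands. The sketch below only composes the command strings in Python and does not connect to a cluster. The aggregate and node names come from the text; the pairing of aggregates with nodes, the disk count, the NTFS security style, and the SVM and root volume names are assumptions for illustration.

```python
# Sketch only: builds ONTAP CLI command strings, does not talk to a cluster.

DISK_COUNT = 5  # assumption: the article does not state how many disks were used


def aggregate_create(name, node, disk_count=DISK_COUNT):
    """Compose a command that creates a RAID-DP data aggregate on one node."""
    return (
        f"storage aggregate create -aggregate {name} -node {node} "
        f"-diskcount {disk_count} -raidtype raid_dp"
    )


def svm_create(svm, root_volume, aggregate):
    """Compose a command that creates an SVM with its root volume on `aggregate`."""
    return (
        f"vserver create -vserver {svm} -rootvolume {root_volume} "
        f"-aggregate {aggregate} -rootvolume-security-style ntfs"
    )


commands = [
    # Node pairing is inferred from the aggregate names (assumption).
    aggregate_create("rg1_node01", "clus01_01"),
    aggregate_create("rg0_node02", "clus01_02"),
    # "svm_cifs" and "svm_cifs_root" are hypothetical names.
    svm_create("svm_cifs", "svm_cifs_root", "rg1_node01"),
]

for command in commands:
    print(command)
```
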

CIFS SVM: IP, VLAN, LACP, Volume.


SVM for iSCSI: Root Volume, CIFS, IP, iSCSI, LUN.

LUN 10.

Hyper-V Server IQN.
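
The step above refers to the IQN (iSCSI Qualified Name) of the Hyper-V Server initiator. Since a mistyped IQN is an easy way to end up with a host that cannot see its LUN, a quick format check before entering it can help. A minimal sketch, assuming the `iqn.yyyy-mm.reversed-domain[:identifier]` layout from RFC 3720; the host names in the examples are hypothetical, and the pattern is deliberately simplified.

```python
import re

# Simplified pattern for the "iqn." naming format described in RFC 3720:
# iqn.<year>-<month>.<reversed domain>[:<identifier>]
IQN_PATTERN = re.compile(
    r"^iqn\.\d{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:.+)?$"
)


def is_valid_iqn(name):
    """Return True if `name` looks like a well-formed IQN."""
    return bool(IQN_PATTERN.match(name))


# Hypothetical initiator names for illustration.
print(is_valid_iqn("iqn.1991-05.com.microsoft:hyperv01.example.local"))  # True
print(is_valid_iqn("iqn.1991-13.com.microsoft:hyperv01"))                # False
print(is_valid_iqn("hyperv01"))                                          # False
```
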

Hyper-V Server, LUN, Linux. Volume, LUN.

Source: https://habr.com/ru/post/undefined/
