How Virtual Private Cloud works in Yandex.Cloud and how our users help us implement useful features

Hi, my name is Kostya Kramlikh, and I am a lead developer in the Virtual Private Cloud division at Yandex.Cloud. I work on the virtual network, and, as you might guess, in this article I will talk about how Virtual Private Cloud (VPC) is built in general and how the virtual network works in particular. You will also learn why we, the developers of the service, value feedback from our users. But first things first.

What is a VPC?

Today there are many ways to deploy a service. I am sure someone still keeps a server under the administrator's desk, although I hope there are fewer and fewer such stories.

Services are now moving to public clouds, and that is where they run into VPC. VPC is the part of a public cloud that connects user, infrastructure, platform and other resources together, wherever they are, in our Cloud or outside it. At the same time, VPC lets you avoid exposing these resources to the Internet unnecessarily: they stay inside your isolated network.

How a virtual network looks from the outside

By VPC we primarily mean the overlay network and network services such as VPNaaS, NATaaS, LBaaS, and so on. All of this runs on top of a fault-tolerant network infrastructure, which has already been covered in an excellent article here on Habr.

Let's look more closely at the virtual network and how it is built.

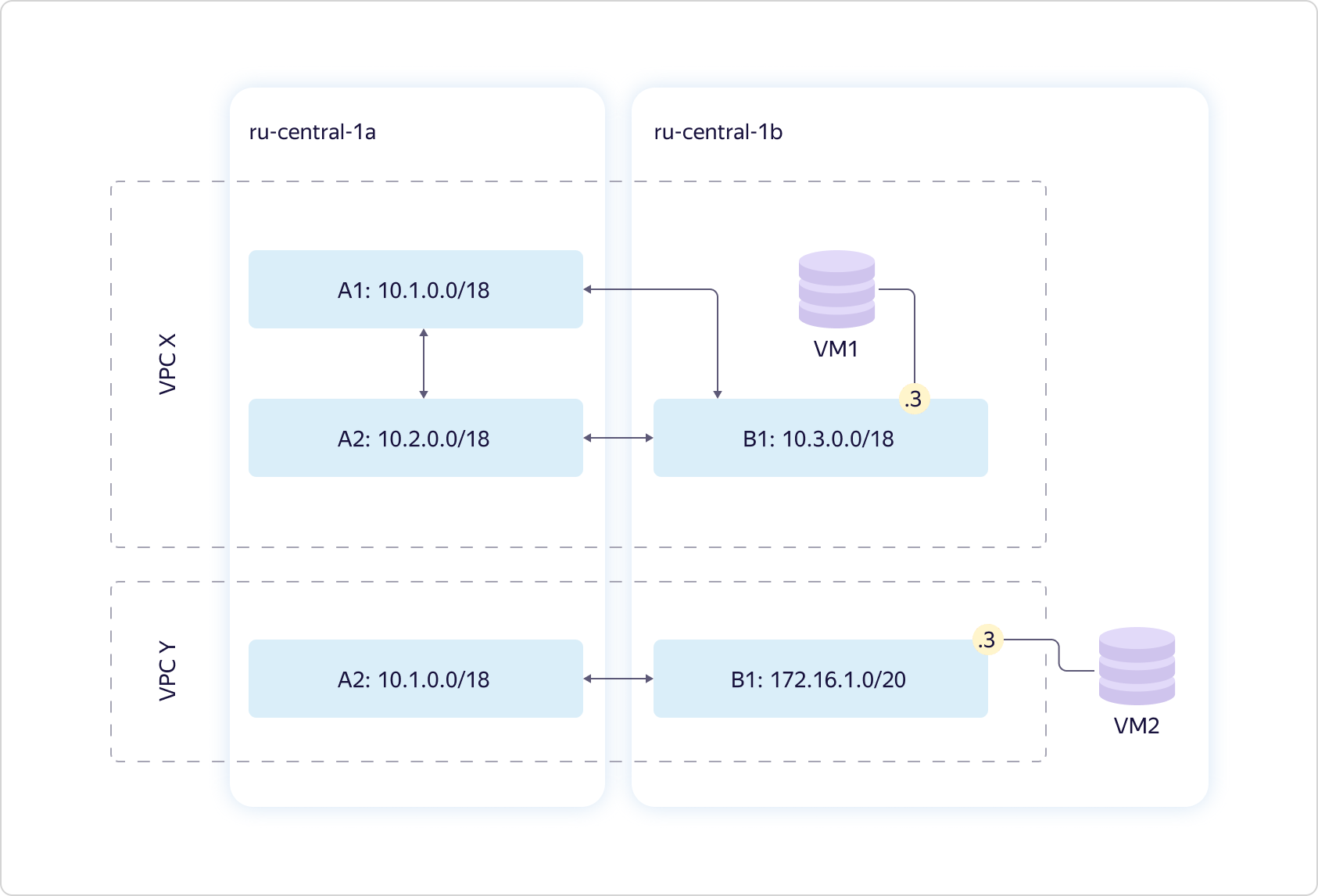

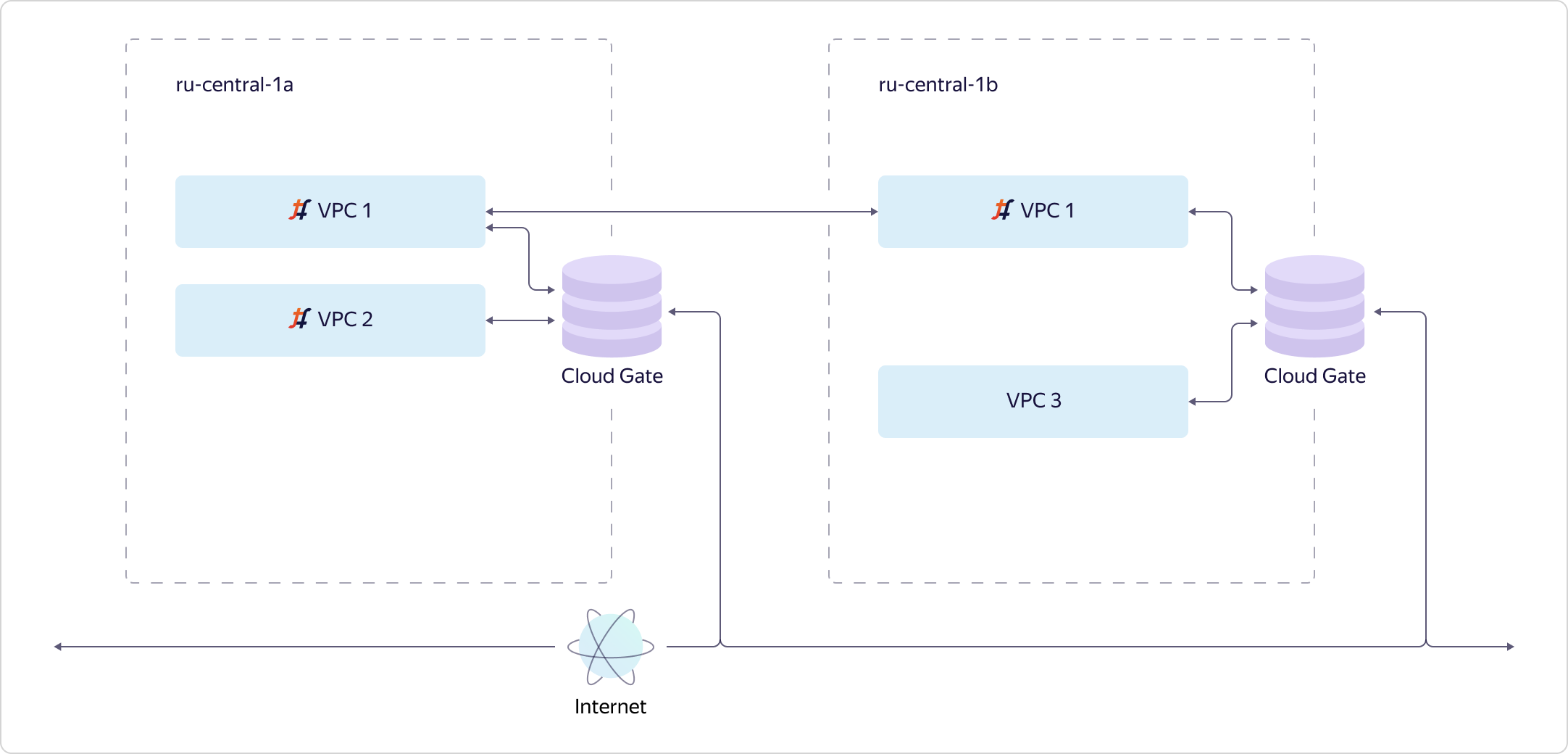

Consider two availability zones. We provide a virtual network, the thing we call a VPC. In effect, it defines the space within which your "gray" (private) addresses are unique. Within each virtual network you fully control the address space that you assign to computing resources.

The network is global. At the same time, it is projected onto each availability zone as an entity called a Subnet. To each Subnet you assign a CIDR block of size /16 or smaller. There can be more than one Subnet in each availability zone, and routing between them is always transparent. This means that all your resources within one VPC can "communicate" with each other even if they are in different availability zones. They do so without going out to the Internet, over our internal links, "thinking" that they are in the same private network.

The diagram above shows a typical situation: two VPCs whose address ranges overlap somewhere. Both may belong to you, for example one for development and another for testing. Or they may belong to different users; in this case it doesn't matter. One virtual machine is attached to each VPC.
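The addressing rules above can be illustrated with a minimal Python sketch. It is not the service's implementation: the `VPC` class, the zone names and the rule that Subnets inside one VPC must not overlap are assumptions made for illustration (the last one follows from addresses being unique within a VPC).

```python
import ipaddress

LARGEST_SUBNET_PREFIX = 16  # a /16 is the largest block a Subnet may take


class VPC:
    """Hypothetical model: one global network projected onto zones as Subnets."""

    def __init__(self, name):
        self.name = name
        self.subnets = []  # list of (zone, ip_network) pairs

    def add_subnet(self, zone, cidr):
        net = ipaddress.ip_network(cidr)
        # A shorter prefix means a bigger block, so /8 is rejected, /24 is fine.
        if net.prefixlen < LARGEST_SUBNET_PREFIX:
            raise ValueError(f"{cidr} is larger than /16")
        # Assumption: addresses are unique inside a VPC, so Subnets must not overlap.
        for _, existing in self.subnets:
            if net.overlaps(existing):
                raise ValueError(f"{cidr} overlaps {existing} in {self.name}")
        self.subnets.append((zone, net))
        return net


dev = VPC("dev")
dev.add_subnet("zone-a", "10.0.0.0/24")
dev.add_subnet("zone-b", "10.0.1.0/24")

test = VPC("test")
test.add_subnet("zone-a", "10.0.0.0/24")  # same range as in "dev": allowed
```

The two VPCs reuse the same range without conflict because each one is its own address space, which is the situation shown in the diagram.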

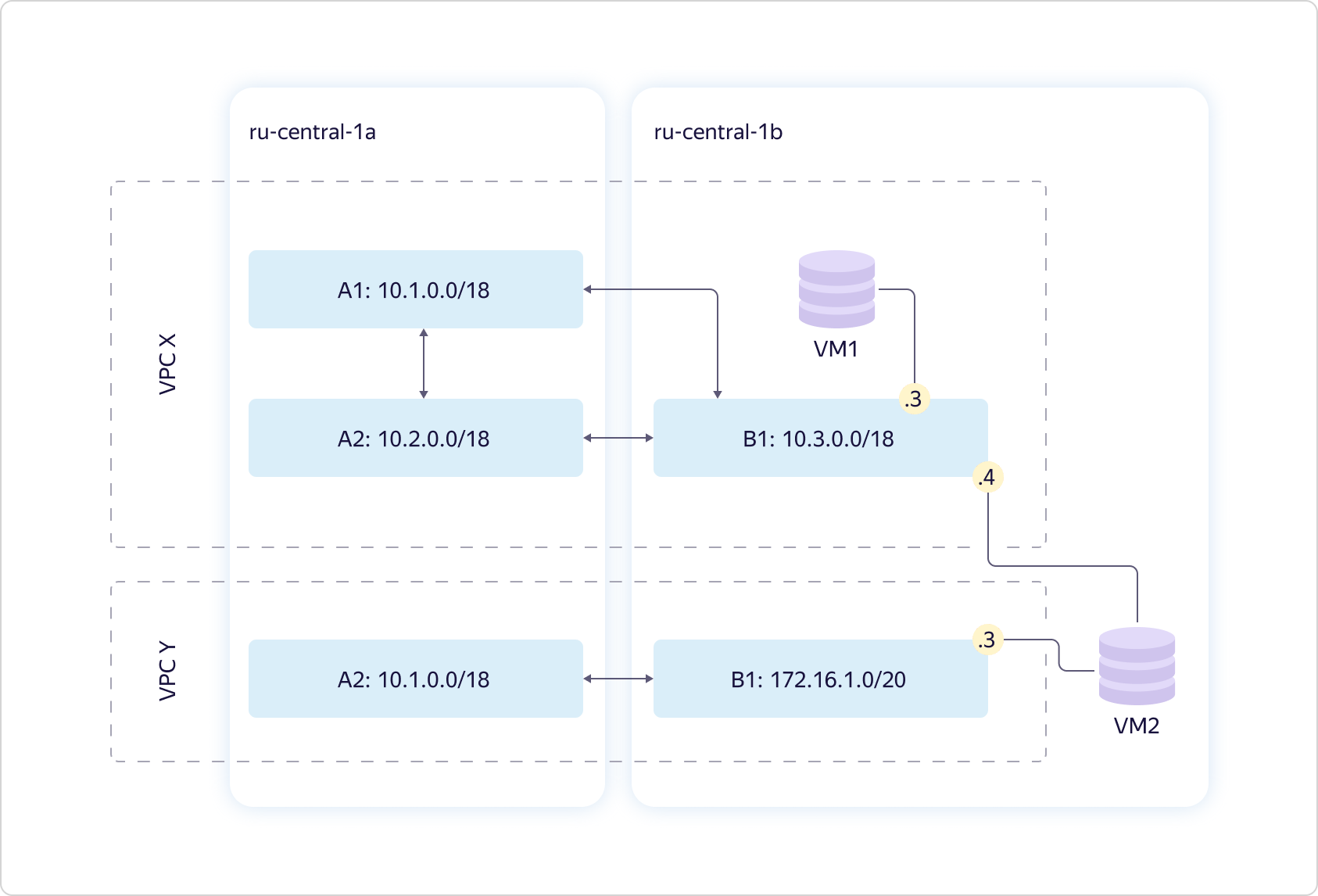

Let's make the scheme more complicated. You can attach one virtual machine to several Subnets at once, and not just to Subnets of one network, but to Subnets in different virtual networks.

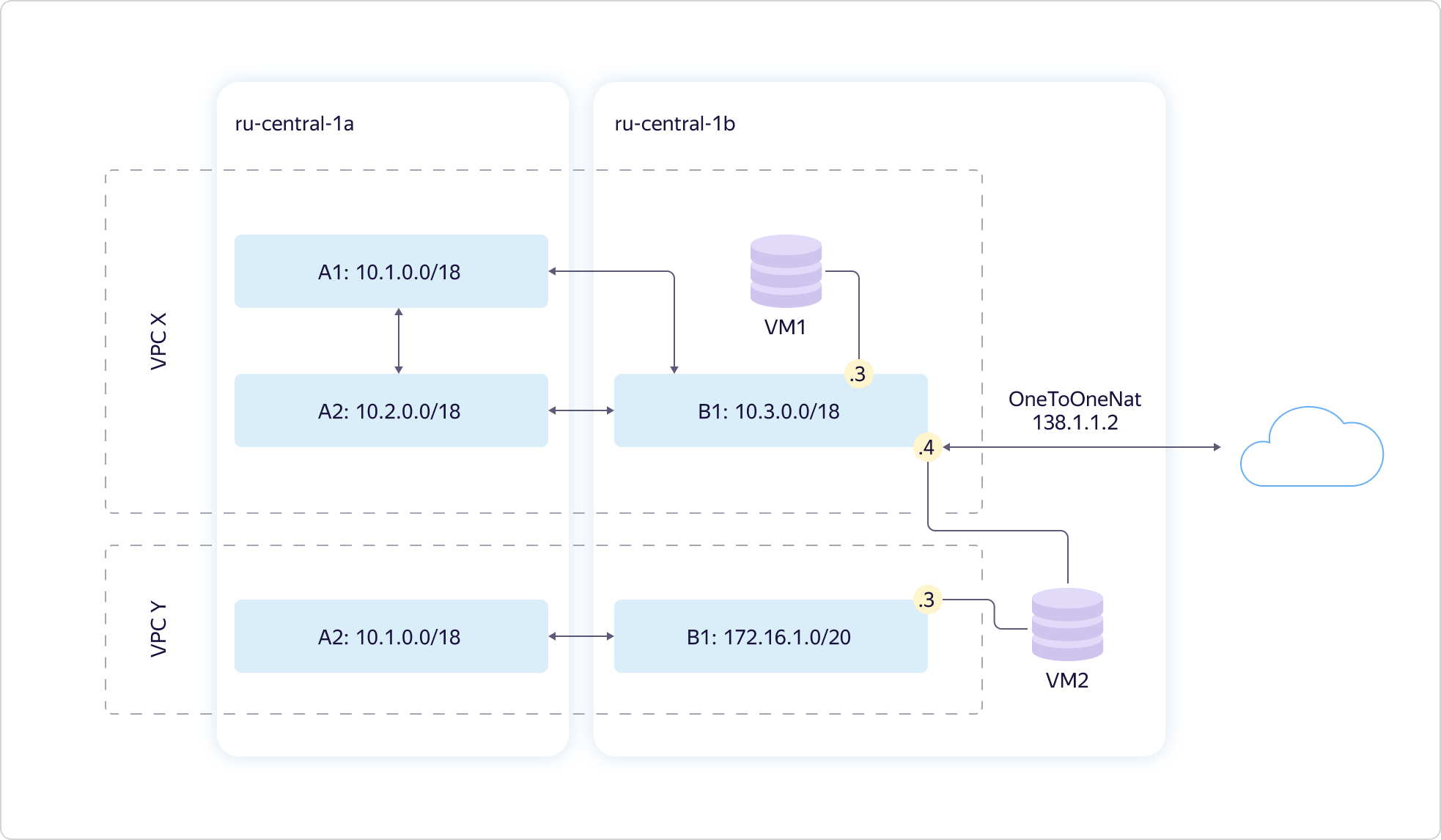

If you need to expose machines to the Internet, you can do it through the API or the UI. To do this, configure NAT translation of your "gray" internal address to a "white" public one. You cannot choose the "white" address; it is assigned randomly from our address pool. As soon as you stop using the external IP, it returns to the pool. You pay only for the time you use the "white" address.
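The lifecycle of a "white" address described above can be sketched as a small pool. Everything here is hypothetical: the class and method names are invented, and the addresses come from the 203.0.113.0/24 documentation range rather than any real pool. The sketch only shows the behavior the text states: random assignment, no user choice, and return to the pool on release.

```python
import random


class PublicAddressPool:
    """Hypothetical pool of "white" addresses; the caller cannot pick one."""

    def __init__(self, addresses, seed=None):
        self._free = set(addresses)
        self._nat = {}  # (vpc, gray address) -> white address
        self._rng = random.Random(seed)

    def enable_nat(self, vpc, gray_ip):
        key = (vpc, gray_ip)  # gray addresses are unique only within a VPC
        if key in self._nat:
            return self._nat[key]
        if not self._free:
            raise RuntimeError("public address pool is exhausted")
        white_ip = self._rng.choice(sorted(self._free))
        self._free.remove(white_ip)
        self._nat[key] = white_ip
        return white_ip

    def disable_nat(self, vpc, gray_ip):
        # The released address becomes available to anyone again.
        self._free.add(self._nat.pop((vpc, gray_ip)))

    def free_count(self):
        return len(self._free)


pool = PublicAddressPool(["203.0.113.10", "203.0.113.11"])
dev_ip = pool.enable_nat("dev", "10.0.0.5")
test_ip = pool.enable_nat("test", "10.0.0.5")  # same gray address, different VPC
pool.disable_nat("dev", "10.0.0.5")            # dev_ip goes back to the pool
```

Keying the translation by VPC as well as by gray address matters because two VPCs may use the same private range.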

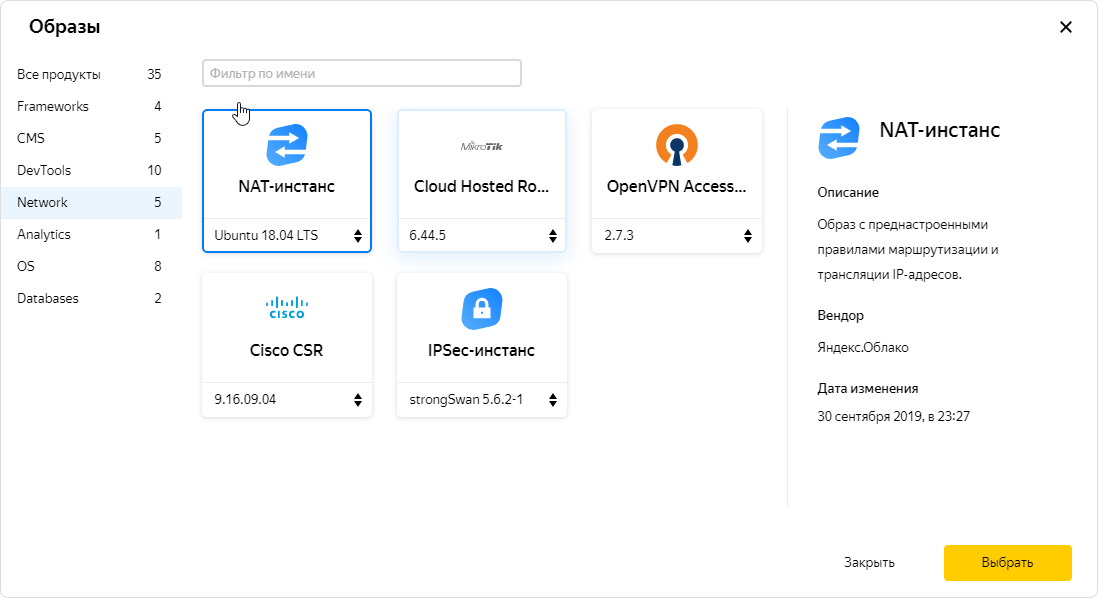

You can also give a machine Internet access through a NAT instance. Traffic can be directed to the instance with a static routing table. We provided for this case because users need it and we know about it. Accordingly, our image catalog contains a specially configured NAT image.
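To show how a static routing table can steer outbound traffic to a NAT instance, here is a small sketch. It assumes the usual longest-prefix-match convention; the article does not say how routes are selected, and the `next_hop` function and all addresses are made up for the example.

```python
import ipaddress


def next_hop(route_table, destination):
    """Return the next hop of the most specific route that matches destination."""
    dst = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in route_table if dst in net]
    if not matches:
        return None
    # Longest prefix wins (assumed convention, not confirmed by the article).
    return max(matches, key=lambda item: item[0].prefixlen)[1]


routes = [
    # Traffic inside the VPC is routed transparently.
    (ipaddress.ip_network("10.0.0.0/16"), "local"),
    # Everything else goes to the NAT instance (hypothetical address).
    (ipaddress.ip_network("0.0.0.0/0"), "10.0.0.100"),
]

inside = next_hop(routes, "10.0.1.7")
outside = next_hop(routes, "198.51.100.7")
```

With this table a packet for another machine in the VPC stays local, while a packet for an external address is handed to the NAT instance.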

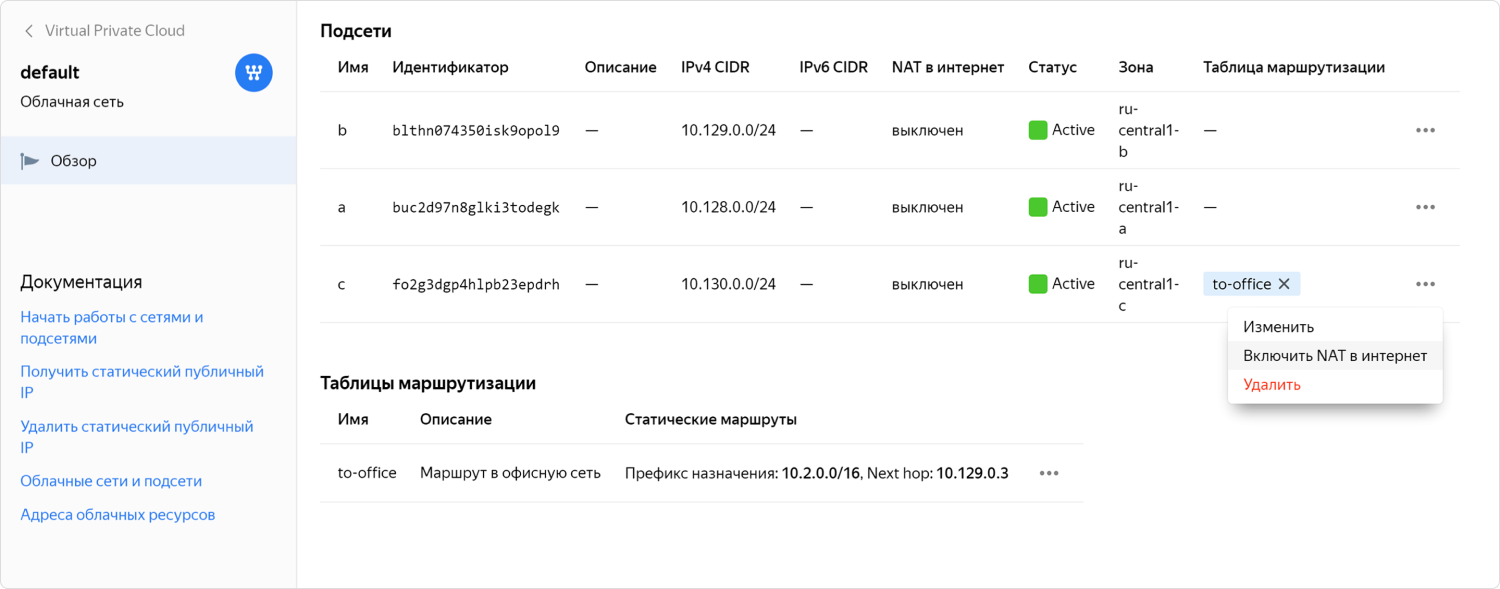

But even with a ready-made NAT image, setup can be complicated. We realized that for some users this is not the most convenient option, so in the end we made it possible to enable NAT for the desired Subnet in one click. This feature is still in private preview, where it is being tested with the help of community members.

How a virtual network works from the inside out

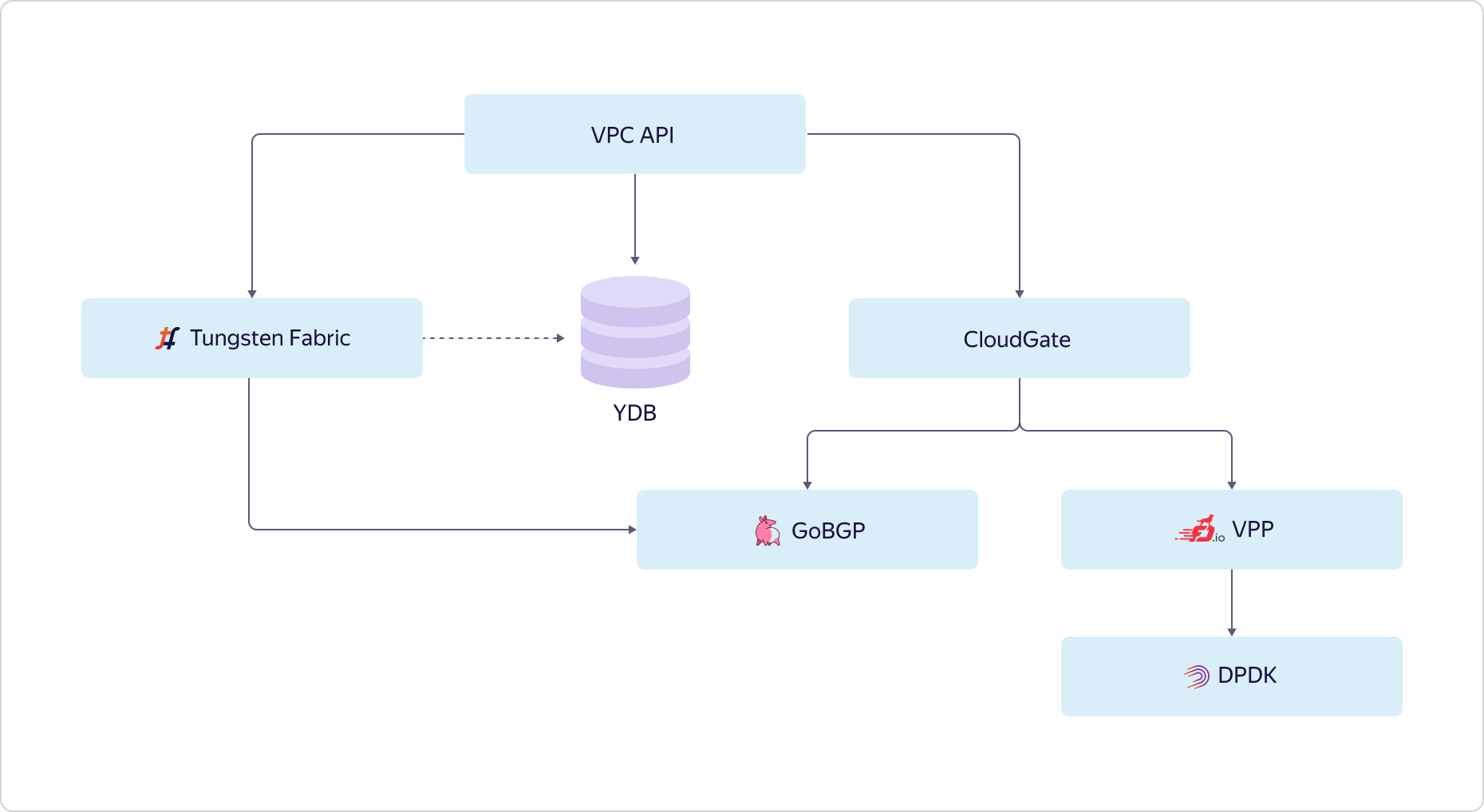

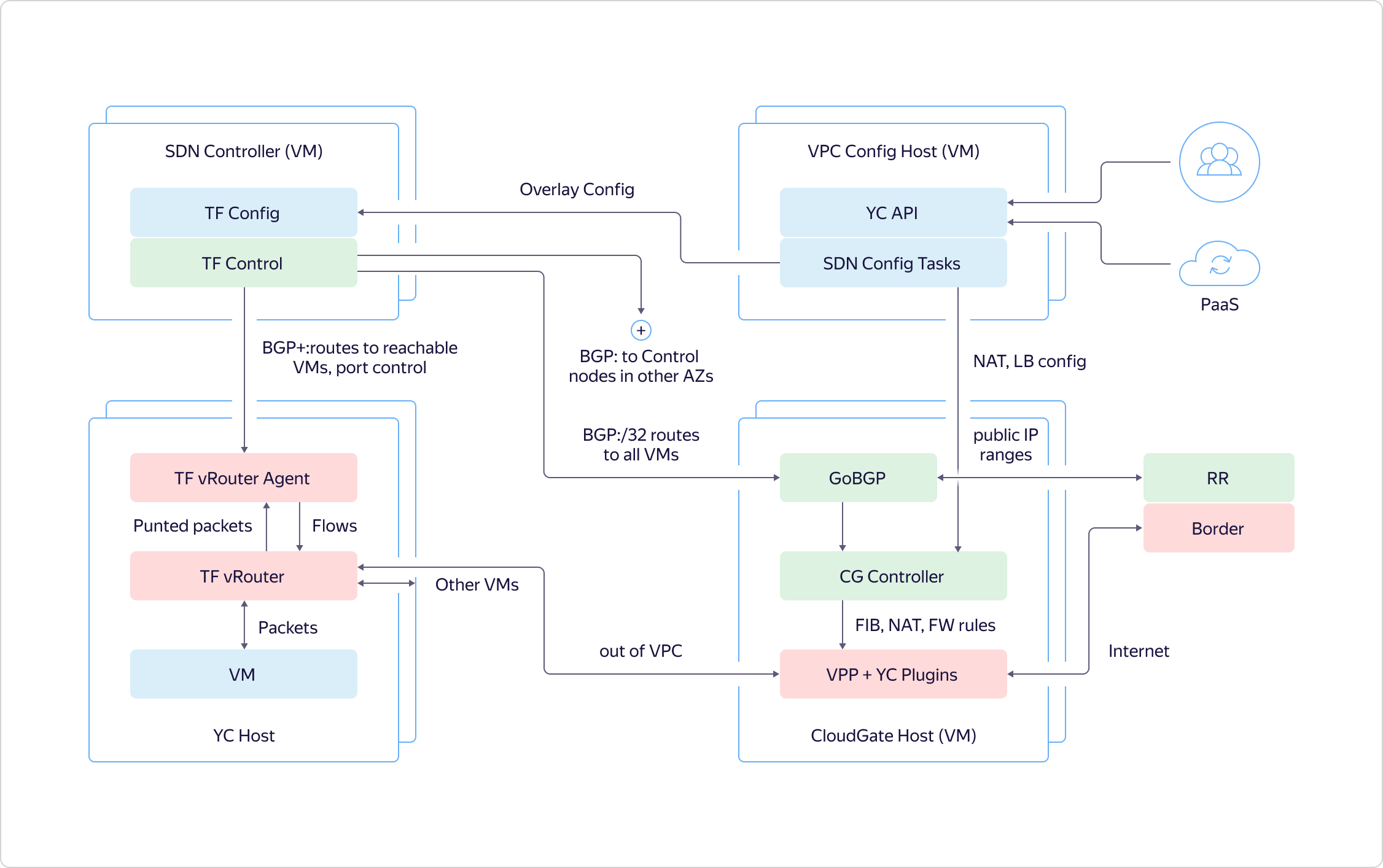

How does a user interact with a virtual network? The network faces the outside world through its API. The user comes to the API and works with the target state. Through the API the user sees how everything should be arranged and configured, along with a status showing how much the actual state differs from the desired one. That is the user's view. What happens inside?

We record the desired state in Yandex Database and go off to configure the different parts of our VPC. The overlay network in Yandex.Cloud is built on selected components of OpenContrail, which was recently renamed Tungsten Fabric. Network services are implemented on a single platform, CloudGate. In CloudGate we also use a number of open source components: GoBGP for exchanging control information, and VPP for implementing a software router that runs on top of DPDK for the data path.

Tungsten Fabric communicates with CloudGate through GoBGP and tells it what is happening in the overlay network. CloudGate, in turn, connects overlay networks to each other and to the Internet.
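The target-state model that the user works with through the API can be sketched as a comparison between a desired and an actual configuration. This is an illustration only: the function names, the resource keys and the status strings `ACTIVE` and `PROVISIONING` are assumptions, not the real API.

```python
def diff_state(desired, actual):
    """Return what must be created, updated and deleted to reach desired."""
    to_create = {k: v for k, v in desired.items() if k not in actual}
    to_update = {
        k: v for k, v in desired.items() if k in actual and actual[k] != v
    }
    to_delete = [k for k in actual if k not in desired]
    return to_create, to_update, to_delete


def status(desired, actual):
    """Summarize how far the actual state is from the target state."""
    create, update, delete = diff_state(desired, actual)
    return "PROVISIONING" if (create or update or delete) else "ACTIVE"


desired = {"subnet-a": "10.0.0.0/24", "subnet-b": "10.0.1.0/24"}
actual = {"subnet-a": "10.0.0.0/24", "subnet-old": "10.0.9.0/24"}

create, update, delete = diff_state(desired, actual)
```

Here `subnet-b` still has to be created and `subnet-old` removed, so the reported status stays `PROVISIONING` until the two states match.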

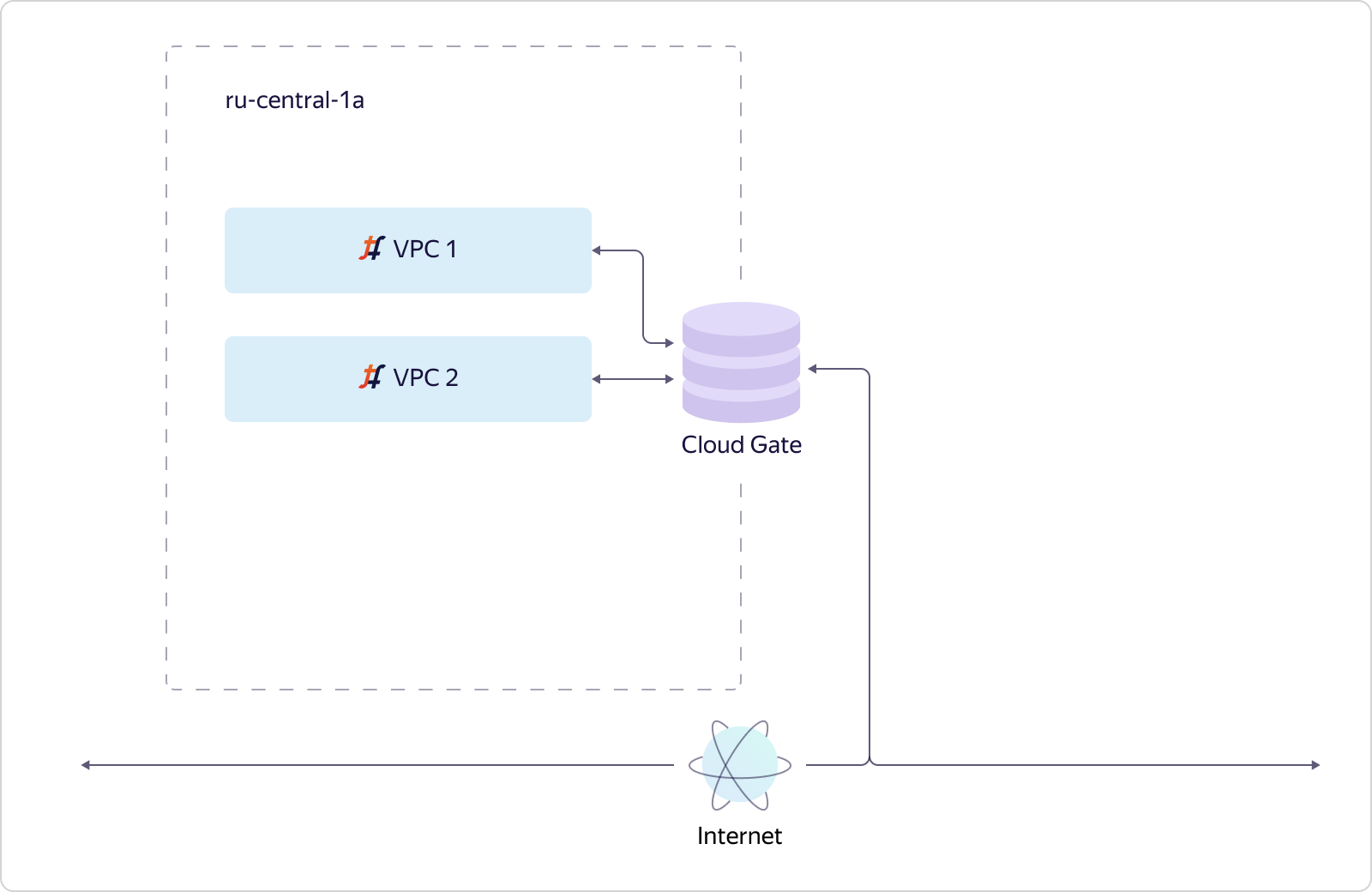

Now let's see how the virtual network handles scalability and availability. Consider a simple case: there is one availability zone, and two VPCs are created in it. We have deployed one instance of Tungsten Fabric, and it handles tens of thousands of networks. The networks communicate with CloudGate, which, as we have said, connects them to each other and to the Internet.

Suppose a second availability zone is added. It must fail completely independently of the first. Therefore we have to deploy a separate Tungsten Fabric instance in the second availability zone. This is a separate system that handles the overlay and knows little about the first system. The appearance that our virtual network is global is in fact created by our VPC API. That is its job.

VPC1 is projected into availability zone B if zone B contains resources attached to VPC1. If there are no VPC2 resources in zone B, we do not materialize VPC2 in that zone. In turn, since VPC3 resources exist only in zone B, VPC3 is absent from zone A. Everything is simple and logical.
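The projection rule for VPCs and availability zones can be written down in a few lines. The sketch below is a hypothetical model: the resource records and the `materialized_vpcs` function are invented, and it only encodes the rule stated in the text, namely that a VPC exists in a zone only when some resource in that zone is attached to it.

```python
from collections import defaultdict


def materialized_vpcs(resources):
    """Map each zone to the set of VPCs that have at least one resource there."""
    by_zone = defaultdict(set)
    for resource in resources:
        # A virtual machine may be attached to several VPCs at once.
        for vpc in resource["vpcs"]:
            by_zone[resource["zone"]].add(vpc)
    return dict(by_zone)


resources = [
    {"name": "vm-1", "zone": "A", "vpcs": ["VPC1"]},
    {"name": "vm-2", "zone": "A", "vpcs": ["VPC2"]},
    {"name": "vm-3", "zone": "B", "vpcs": ["VPC1"]},
    {"name": "vm-4", "zone": "B", "vpcs": ["VPC3"]},
]

projection = materialized_vpcs(resources)
```

With these resources VPC1 appears in both zones, VPC2 only in zone A and VPC3 only in zone B, matching the example in the text.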

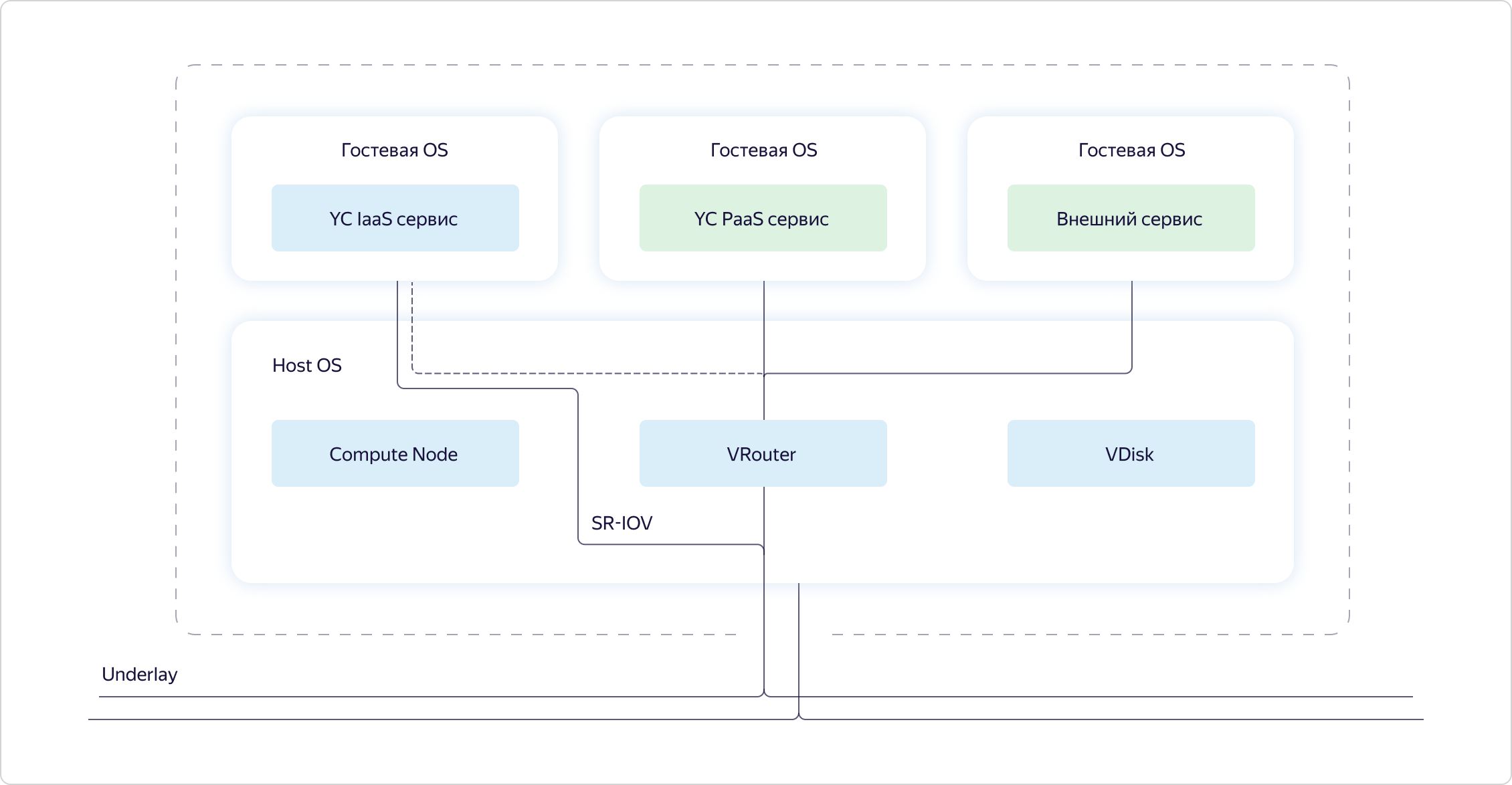

Let's go a little deeper and see how an individual host in Yandex.Cloud is arranged. The main thing I want to note is that all hosts are arranged the same way. We make sure that only the necessary minimum of services runs on the hardware; everything else runs in virtual machines. We build higher-level services on top of basic infrastructure services, and we also use the Cloud to solve some of our own engineering tasks, for example as part of Continuous Integration.

If we look at a specific host, we will see three components running in the host OS:

- Compute is the part responsible for allocating computing resources on the host.

- VRouter is the part of Tungsten Fabric that organizes the overlay, that is, tunnels packets through the underlay.

- VDisk comprises the storage virtualization components.

In addition, services run in virtual machines: Cloud infrastructure services, platform services and customer workloads. Customer workloads and platform services always go to the overlay through VRouter.

Infrastructure services can attach to the overlay, but mostly they want to work in the underlay. They are attached to the underlay by means of SR-IOV. In effect, we split the network card into virtual network cards (virtual functions) and pass them into infrastructure virtual machines so as not to lose performance. For example, CloudGate runs as one of these infrastructure virtual machines.

Now that we have described the global tasks of the virtual network and the arrangement of the basic components of the cloud, let's see exactly how the different parts of the virtual network interact with each other.

We distinguish three layers in our system:

- Config Plane sets the target state of the system. This is what the user configures through the API.

- Control Plane provides the semantics defined by the user, that is, it brings the Data Plane to the state the user described in the Config Plane.

- Data Plane processes user packets directly.

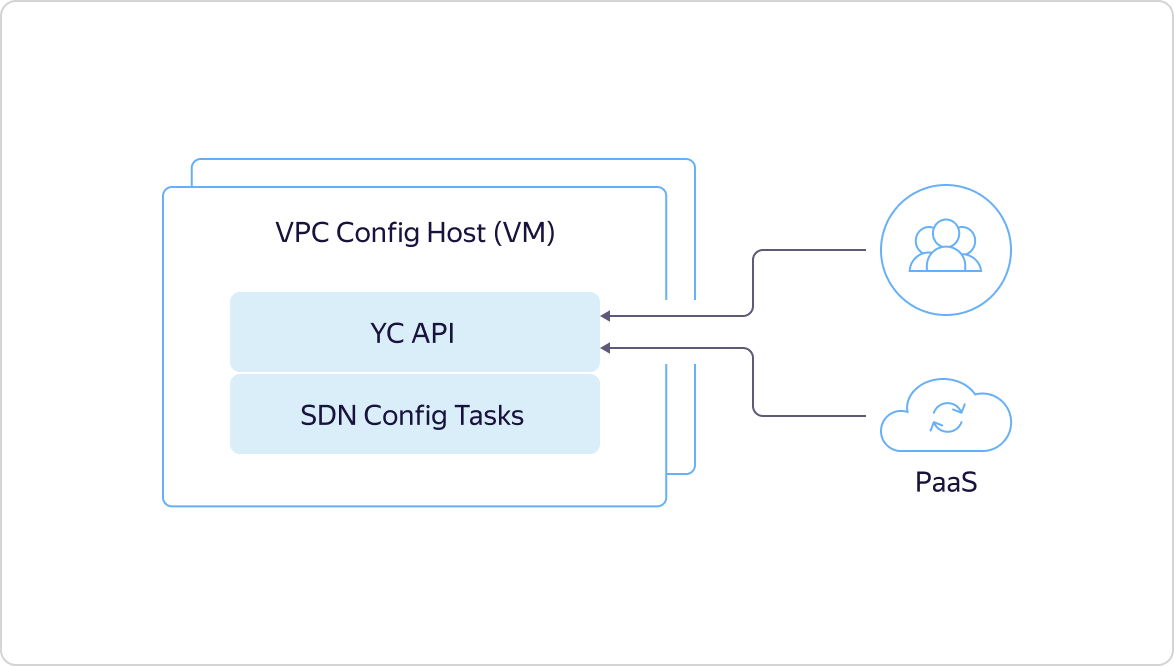

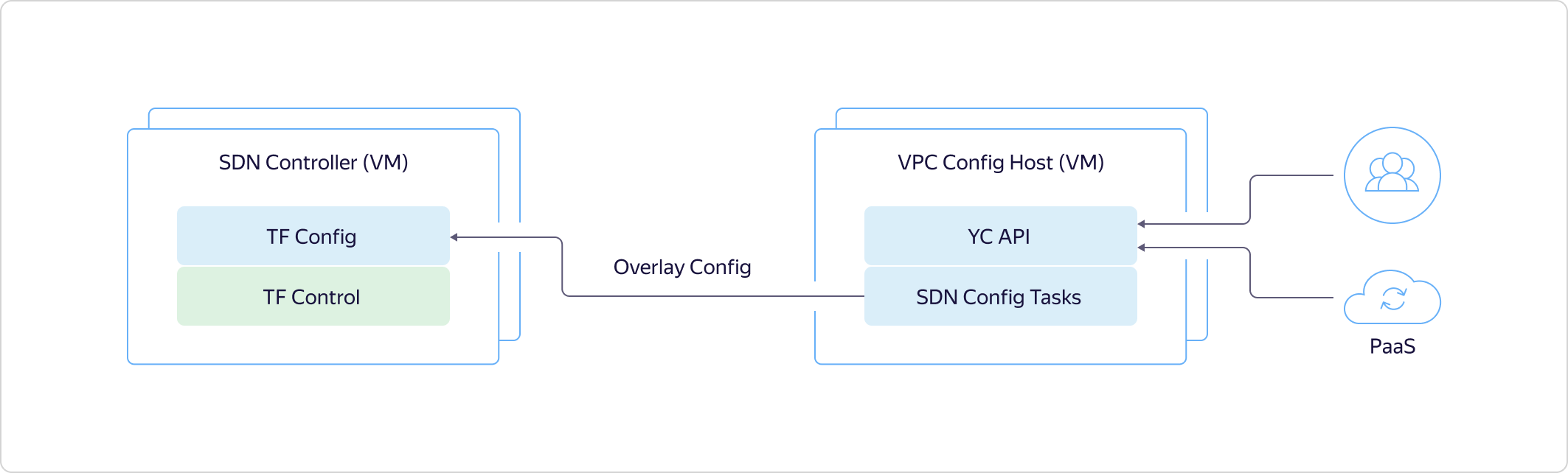

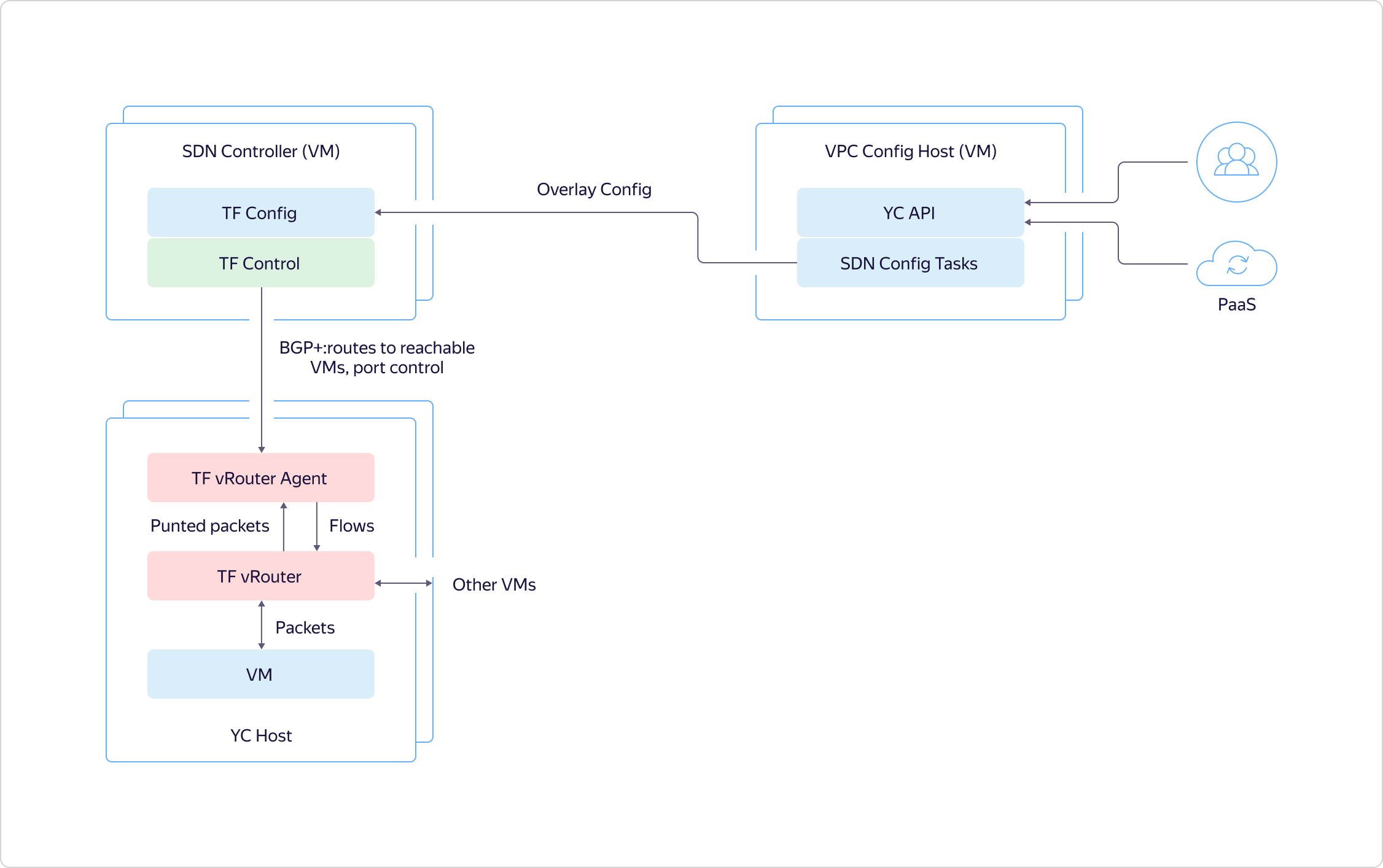

As I said above, it all starts when a user or an internal platform service comes to the API and describes a target state.

This state is immediately written to Yandex Database, the API returns the ID of an asynchronous operation, and our internal machinery starts working to bring about the state the user asked for. Configuration tasks go to the SDN controller and tell Tungsten Fabric what to do in the overlay: for example, they reserve ports, virtual networks, and the like.
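The request flow just described (record the target state, return an operation ID at once, configure in the background) can be sketched with an in-memory stand-in. The `VpcApi` class, its methods and the `RUNNING` and `DONE` statuses are hypothetical; a dictionary plays the role of the database and a plain list plays the role of the SDN controller.

```python
import uuid


class VpcApi:
    """Hypothetical in-memory stand-in for the API front end."""

    def __init__(self):
        self.target_state = {}  # stands in for the database
        self.operations = {}    # operation id -> status
        self.queue = []         # configuration tasks awaiting a worker

    def create_network(self, name):
        op_id = str(uuid.uuid4())
        self.target_state[name] = {"kind": "network"}  # recorded immediately
        self.operations[op_id] = "RUNNING"
        self.queue.append((op_id, name))
        return op_id  # the caller gets the ID before any configuration happens

    def run_worker_once(self, sdn_controller):
        op_id, name = self.queue.pop(0)
        sdn_controller.append(name)  # pretend to configure the overlay
        self.operations[op_id] = "DONE"


api = VpcApi()
configured = []  # stands in for the SDN controller
op = api.create_network("dev-net")
status_before = api.operations[op]
api.run_worker_once(configured)
status_after = api.operations[op]
```

The caller can poll the operation by its ID, which is why the API can answer before the overlay has been touched.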

The Config Plane in Tungsten Fabric ships the required state to the Control Plane. Through it, the Config Plane communicates with the hosts, telling them what will soon be running on them.

Now let's see what the system looks like on the hosts. A virtual machine has a network adapter attached to VRouter. VRouter is a Tungsten Fabric kernel module that inspects packets. If a flow already exists for a packet, the module processes it. If there is no flow, the module performs so-called punting, that is, it sends the packet to a user-mode process. The process parses the packet and either answers it itself, as it does for DHCP and DNS, or tells VRouter what to do with it. After that, VRouter can process the packet.

Traffic between virtual machines within the same virtual network then flows transparently and is not sent through CloudGate. The hosts on which the virtual machines run communicate with each other directly: they tunnel the traffic and forward it to each other through the underlay.
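The flow lookup and punting behavior of VRouter can be modeled roughly as follows. This is a simplified sketch and not Tungsten Fabric code: the class, the three-field flow key and the action strings are assumptions, and a real flow key would also include ports.

```python
class VRouterSketch:
    """Hypothetical fast path with a flow table and a user-mode slow path."""

    def __init__(self, agent):
        self.flows = {}     # flow key -> action
        self.agent = agent  # user-mode process that handles punted packets
        self.punted = 0

    def handle(self, packet):
        key = (packet["src"], packet["dst"], packet["proto"])
        action = self.flows.get(key)
        if action is None:
            # No flow yet: punt the packet to the user-mode process.
            self.punted += 1
            action = self.agent(packet)
            if action == "reply":
                return action  # the process answered itself, no flow installed
            self.flows[key] = action  # later packets take the fast path
        return action


def agent(packet):
    """Answer DHCP and DNS directly, otherwise tell VRouter to forward."""
    return "reply" if packet["proto"] in ("dhcp", "dns") else "forward"


router = VRouterSketch(agent)
tcp = {"src": "10.0.0.5", "dst": "10.0.1.7", "proto": "tcp"}
first = router.handle(tcp)   # punted, flow installed
second = router.handle(tcp)  # served from the flow table
```

Only the first packet of a flow pays the cost of the user-mode round trip; the rest are handled by the table lookup.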

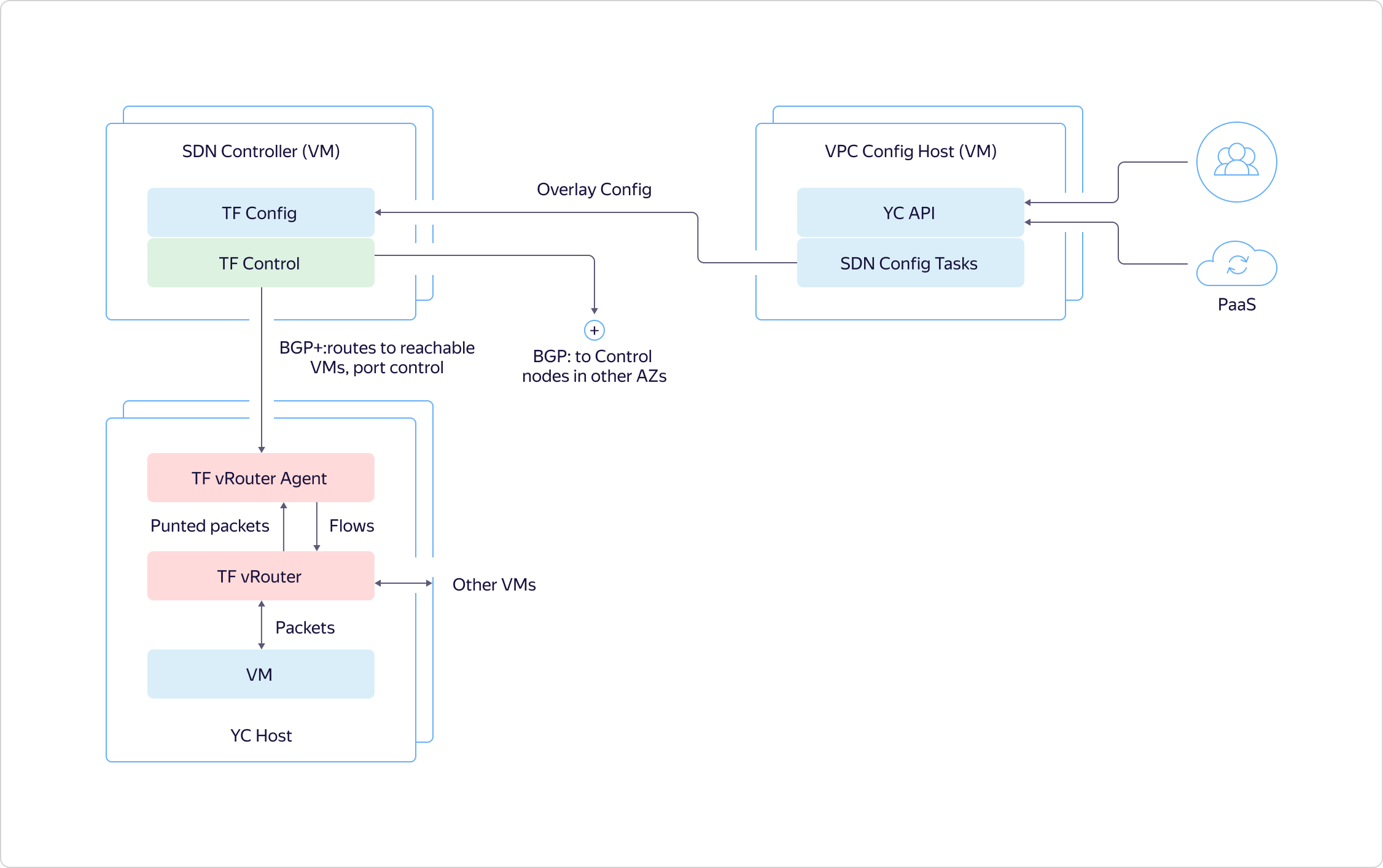

The Control Planes in different availability zones communicate with each other over BGP, just as they would with any other router. They tell each other which machines are running where, so that virtual machines in one zone can interact directly with virtual machines in another.

The Control Plane also communicates with CloudGate. In the same way, it reports which virtual machines are running where and what their addresses are. This makes it possible to direct external traffic and traffic from load balancers to them.

Traffic that leaves a VPC arrives at CloudGate, in the data path, where VPP with our plugins processes it quickly. The traffic is then sent either to other VPCs or outward, to the edge routers, which are configured through CloudGate's Control Plane.

Plans for the near future

To summarize all of the above in a few sentences, VPC in Yandex.Cloud solves two important tasks:

- It provides isolation between different customers.

- It combines resources, infrastructure, platform services, other clouds and on-premise into a single network.

To solve these tasks well, scalability and fault tolerance have to be provided at the level of the internal architecture, which is what VPC does.

VPC is gradually gaining features: we implement new capabilities and try to improve user convenience. Some ideas are voiced by members of our community and make it onto the priority list thanks to them.

Right now our plans for the near future look roughly like this:

- VPN as a service.

- Private DNS.

- DNS.

- A load balancer.

- Switching the "white" IP address on and off for an already created virtual machine.

The load balancer and the ability to switch the IP address of an already created virtual machine are on this list at the request of users. Frankly, without explicit feedback we would have taken up these features somewhat later. As it is, we are already working on the address task.

Initially, a "white" IP address could only be added when creating a machine. If the user forgot to do this, the virtual machine had to be recreated. The same was true if the external IP needed to be removed. Soon it will be possible to turn a public IP on and off without recreating the machine.

Feel free to share your ideas and support the suggestions of other users. You help us make the Cloud better and get important and useful features faster!

Source: https://habr.com/ru/post/undefined/
