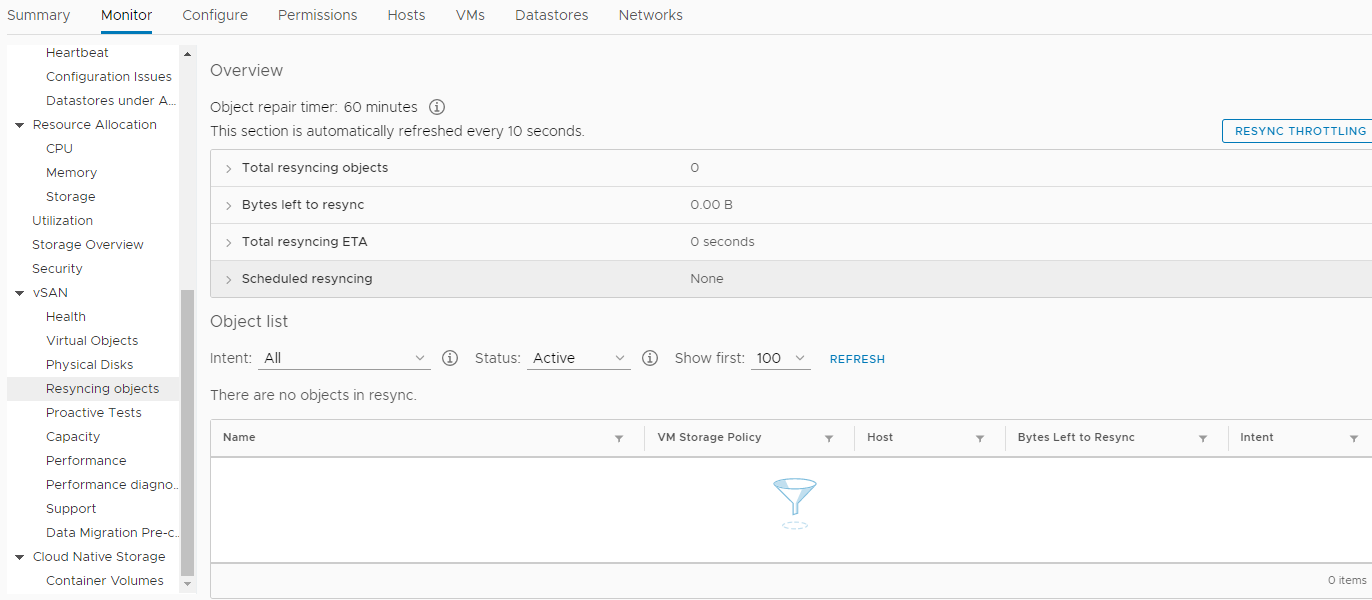

The year 2018 was ending ...Once, on a clear December day, our Company decided to purchase a new hardware. No, of course, this did not happen overnight. The decision was made earlier. Much earlier. But, as usual, not always our desires coincide with the capabilities of shareholders. And there was no money, and we held on. But finally, that joyful moment came when the acquisition was approved at all levels. Everything was fine, the white-collar workers applauded joyfully, they were tired of processing documents for 25 hours monthly on 7-year-old servers, and they very persistently asked the IT Department to come up with something to give them more time for other, equally important things .We promised to reduce the time for processing documents by 3 times, up to 8 hours. For this, a sparrow was fired from a cannon. This option seemed the only one, since our team did not, and never had, a database administrator to apply all kinds of query optimization (DBA).The configuration of the selected equipment was, of course, sky-high. These were three servers from the HPE company - DL560 Gen10. Each of them boasted 4 Intel Xeon Platinum 8164 2.0Ghz processors with 26 cores, 256 DDR4 RAM, as well as 8 SSD 800Gb SAS (SSD 800Gb WD Ultrastar DC SS530 WUSTR6480ASS204) + 8 SSD 1.92Tb (Western Digital Ultrastar DC SS530 )These "pieces of iron" were intended for the VMware cluster (HA + DRS + vSAN). Which has been working with us for almost 3 years on similar servers of the 7th and 8th generations, also from HPE. By the way, there were no problems until HPE refused to support them and upgrade ESXi from version 6.0, even to 6.5, without a tambourine. Well, okay, as a result, it was possible to update. By changing the installation image, removing incompatible problem modules from the installation image, etc. This also added fuel to the fire of our desire to match everything new. Of course, if it weren’t for the new vSAN “tricks”, in the coffin we saw an update of the entire system from version 6.0 to a newer one, and there would be no need to write an article, but we are not looking for easy ways ...So, we purchased this equipment and decided to replace the long-obsolete. We applied the last SPP to each new server, installed in each of them two Ethernet 10G network cards (one for user networks, and the second for SAN, 656596-B21 HP Ethernet 10Gb 2-port 530T). Yes, each new server came with an SFP + network card without modules, but our network infrastructure implied Ethernet (two stacks of DELL 4032N switches for LAN and SAN networks), and the HP distributor in Moscow did not have HPE 813874-B21 modules and we they did not wait.When it came time to install ESXi and incorporate new nodes into a common VMware data center, a “miracle” happened. As it turned out, HPE ESXi Custom ISO version 6.5 and below is not designed to be installed on new Gen10 servers. Hardcore only, only 6.7. And we had to unwittingly follow the precepts of the “virtual company”.A new HA + DRS cluster was created, a vSAN cluster was created, all in strict compliance with VMware HCL and this document . Everything was configured according to Feng Shui and only periodic “alarms” were suspicious in monitoring vSAN about non-zero parameter values in this section: We, with peace of mind, moved all virtual machines (about 50 pieces) to new servers and to a new vSAN storage built on SSD disks, we checked the performance of document processing in the new environment (by the way, it turned out to save a lot more time than we planned) . Until the heaviest base was transferred to the new cluster, the operation, which was mentioned at the beginning of the article, took about 4 hours instead of 25! This was a significant contribution to the New Year mood of all participants in the process. Some probably began to dream of a prize. Then everyone happily left for the New Year holidays.When the weekdays of the new year 2019 began, nothing portended a catastrophe. All services, transferred to new capacities, without exaggeration, took off! Only events in the re-synchronization section of objects became much more. And after a couple of weeks trouble happened. In the early morning, almost all of the key services of the Company (1s, MSSQL, SMB, Exchange, etc.) stopped responding, or began to respond with a long delay. The entire infrastructure plunged into complete chaos, and no one knew what happened and what to do. All virtual machines in vCenter looked “green”, there were no errors in their monitoring. Rebooting did not help. Moreover, after a reboot, some machines could not even boot, displaying various process errors in the console. Hell seemed to come to us and the devil was rubbing his hands in anticipation.Under the pressure of serious stress, it was possible to determine the source of the disaster. This problem turned out to be vSAN distributed storage. Uncontrolled data corruption on virtual machine disks occurred, at first glance - for no reason. At that time, the only solution that seemed rational was to contact VMware technical support with screams: SOS, save-help!And this decision, subsequently, saved the Company from the loss of relevant data, including employee mailboxes, databases and shared files. Together, we are talking about 30+ terabytes of information.He is obliged to pay tribute to the VMware support staff who did not “play football” with the holder of the basic technical support subscription, but included this case in the Enterpise segment, and the process spun around the clock.What happened next:

We, with peace of mind, moved all virtual machines (about 50 pieces) to new servers and to a new vSAN storage built on SSD disks, we checked the performance of document processing in the new environment (by the way, it turned out to save a lot more time than we planned) . Until the heaviest base was transferred to the new cluster, the operation, which was mentioned at the beginning of the article, took about 4 hours instead of 25! This was a significant contribution to the New Year mood of all participants in the process. Some probably began to dream of a prize. Then everyone happily left for the New Year holidays.When the weekdays of the new year 2019 began, nothing portended a catastrophe. All services, transferred to new capacities, without exaggeration, took off! Only events in the re-synchronization section of objects became much more. And after a couple of weeks trouble happened. In the early morning, almost all of the key services of the Company (1s, MSSQL, SMB, Exchange, etc.) stopped responding, or began to respond with a long delay. The entire infrastructure plunged into complete chaos, and no one knew what happened and what to do. All virtual machines in vCenter looked “green”, there were no errors in their monitoring. Rebooting did not help. Moreover, after a reboot, some machines could not even boot, displaying various process errors in the console. Hell seemed to come to us and the devil was rubbing his hands in anticipation.Under the pressure of serious stress, it was possible to determine the source of the disaster. This problem turned out to be vSAN distributed storage. Uncontrolled data corruption on virtual machine disks occurred, at first glance - for no reason. At that time, the only solution that seemed rational was to contact VMware technical support with screams: SOS, save-help!And this decision, subsequently, saved the Company from the loss of relevant data, including employee mailboxes, databases and shared files. Together, we are talking about 30+ terabytes of information.He is obliged to pay tribute to the VMware support staff who did not “play football” with the holder of the basic technical support subscription, but included this case in the Enterpise segment, and the process spun around the clock.What happened next:- VMware technical support posed two main questions: how to recover data and how to solve the problem of “phantom” data corruption in virtual machine disks in the “vSAN” combat cluster. By the way, the data was nowhere to recover, since the additional storage was occupied by backup copies and there was simply nowhere to deploy “combat” services.

- While I, jointly with VMware, tried to put together the “damaged” objects in the vSAN cluster, my colleagues urgently mined a new storage that could accommodate all 30+ terabytes of Company data.

- , , VMware , , «» - - . , ?

- .

- , « » .

- , , «» .

- I had to temporarily (for a couple of days) sacrifice the efficiency of mail, for the sake of an additional 6 terabytes of free space in the store, to launch the key services on which the Company's income depended.

- Thousands of chat lines with English-speaking colleagues from VMware were saved “for memory”, here is a short excerpt from our conversations:

I understood that you are now migrating all the VMs out of vSAN datastore.

May I know, how the migration task is going on.? How many VMs left and how much time is expected to migrate the remaining VMs. ?

There are 6 vms still need to be migrated. 1 of them is fail so far.

How much time is expected to complete the migration for the working VMs..?

I think atleast 2-3 hours

ok

Can you please SSH to vCenter server ?

you on it

/localhost/Datacenter ###CLUB/computers/###Cluster> vsan.check_state .

2019-02-02 05:22:34 +0300: Step 1: Check for inaccessible vSAN objects

Detected 3 objects to be inaccessible

Detected 7aa2265c-6e46-2f49-df40-20677c0084e0 on esxi-dl560-gen10-2.####.lan to be inaccessible

Detected 99c3515c-bee0-9faa-1f13-20677c038dd8 on esxi-dl560-gen10-3.####.lan to be inaccessible

Detected f1ca455c-d47e-11f7-7e90-20677c038de0 on esxi-dl560-gen10-1.####.lan to be inaccessible

2019-02-02 05:22:34 +0300: Step 2: Check for invalid/inaccessible VMs

Detected VM 'i.#####.ru' as being 'inaccessible'

2019-02-02 05:22:34 +0300: Step 3: Check for VMs for which VC/hostd/vmx are out of sync

Did not find VMs for which VC/hostd/vmx are out of sync

/localhost/Datacenter ###CLUB/computers/###Cluster>

Thank you

second vm with issues: sd.####.ru

How this problem manifested itself (in addition to the firmly fallen organization services).Exponential growth of checksum errors (CRC) “out of the blue” during data exchange with disks in HBA mode. How to check this - enter the following command in the console of each ESXi node:while true; do clear; for disk in $(localcli vsan storage list | grep -B10 'ity Tier: tr' |grep "VSAN UUID"|awk '{print $3}'|sort -u);do echo ==DISK==$disk====;vsish -e get /vmkModules/lsom/disks/$disk/checksumErrors | grep -v ':0';done; sleep 3; done

As a result of execution, you can see CRC errors for each disk in the vSAN cluster of this node (zero values will not be displayed). If you have positive values, and moreover, they are constantly growing, then there is a reason for constantly arising tasks in the Monitor -> vSAN -> Resincing objects section of the cluster.How to recover disks of virtual machines that do not clone or migrate by standard means?Who would have thought using the powerful cat command:1. cd vSAN

[root@esxi-dl560-gen10-1:~] cd /vmfs/volumes/vsanDatastore/estaff

2. grep vmdk uuid

[root@esxi-dl560-gen10-1:/vmfs/volumes/vsan:52f53dfd12dddc84-f712dbefac32cd1a/2636a75c-e8f1-d9ca-9a00-20677c0084e0] grep vsan *vmdk

estaff.vmdk:RW 10485760 VMFS "vsan://3836a75c-d2dc-5f5d-879c-20677c0084e0"

estaff_1.vmdk:RW 41943040 VMFS "vsan://3736a75c-e412-a6c8-6ce4-20677c0084e0"

[root@esxi-dl560-gen10-1:/vmfs/volumes/vsan:52f53dfd12dddc84-f712dbefac32cd1a/2636a75c-e8f1-d9ca-9a00-20677c0084e0]

3. VM , :

mkdir /vmfs/volumes/POWERVAULT/estaff

4. vmx

cp *vmx *vmdk /vmfs/volumes/POWERVAULT/estaff

5. , ^_^

/usr/lib/vmware/osfs/bin/objtool open -u 3836a75c-d2dc-5f5d-879c-20677c0084e0; sleep 1; cat /vmfs/devices/vsan/3836a75c-d2dc-5f5d-879c-20677c0084e0 >> /vmfs/volumes/POWERVAULT/estaff/estaff-flat.vmdk

6. cd :

cd /vmfs/volumes/POWERVAULT/estaff

7. - estaff.vmdk

[root@esxi-dl560-gen10-1:/tmp] cat estaff.vmdk

# Disk DescriptorFile

version=4

encoding="UTF-8"

CID=a7bb7cdc

parentCID=ffffffff

createType="vmfs"

# Extent description

RW 10485760 VMFS "vsan://3836a75c-d2dc-5f5d-879c-20677c0084e0" <<<<< "estaff-flat.vmdk"

# The Disk Data Base

#DDB

ddb.adapterType = "ide"

ddb.deletable = "true"

ddb.geometry.cylinders = "10402"

ddb.geometry.heads = "16"

ddb.geometry.sectors = "63"

ddb.longContentID = "6379fa7fdf6009c344bd9a64a7bb7cdc"

ddb.thinProvisioned = "1"

ddb.toolsInstallType = "1"

ddb.toolsVersion = "10252"

ddb.uuid = "60 00 C2 92 c7 97 ca ae-8d da 1c e2 3c df cf a5"

ddb.virtualHWVersion = "8"

[root@esxi-dl560-gen10-1:/tmp]

How to recognize naa.xxxx ... disks in disk groups:[root@esxi-dl560-gen10-1:/vmfs/volumes] vdq -Hi

Mappings:

DiskMapping[0]:

SSD: naa.5000c5003024eb43

MD: naa.5000cca0aa0025f4

MD: naa.5000cca0aa00253c

MD: naa.5000cca0aa0022a8

MD: naa.5000cca0aa002500

DiskMapping[2]:

SSD: naa.5000c5003024eb47

MD: naa.5000cca0aa002698

MD: naa.5000cca0aa0029c4

MD: naa.5000cca0aa002950

MD: naa.5000cca0aa0028cc

DiskMapping[4]:

SSD: naa.5000c5003024eb4f

MD: naa.5000c50030287137

MD: naa.5000c50030287093

MD: naa.5000c50030287027

MD: naa.5000c5003024eb5b

MD: naa.5000c50030287187

How to find out vUAN UUIDs for each naa ....:[root@esxi-dl560-gen10-1:/vmfs/volumes] localcli vsan storage list | grep -B15 'ity Tier: tr' | grep -E '^naa|VSAN UUID'

naa.5000cca0aa002698:

VSAN UUID: 52247b7d-fed5-a2f2-a2e8-5371fa7ef8ed

naa.5000cca0aa0029c4:

VSAN UUID: 52309c55-3ecc-3fe8-f6ec-208701d83813

naa.5000c50030287027:

VSAN UUID: 523d7ea5-a926-3acd-2d58-0c1d5889a401

naa.5000cca0aa0022a8:

VSAN UUID: 524431a2-4291-cb49-7070-8fa1d5fe608d

naa.5000c50030287187:

VSAN UUID: 5255739f-286c-8808-1ab9-812454968734

naa.5000cca0aa0025f4: <<<<<<<

VSAN UUID: 52b1d17e-02cc-164b-17fa-9892df0c1726

naa.5000cca0aa00253c:

VSAN UUID: 52bd28f3-d84e-e1d5-b4dc-54b75456b53f

naa.5000cca0aa002950:

VSAN UUID: 52d6e04f-e1af-cfb2-3230-dd941fd8a032

naa.5000c50030287137:

VSAN UUID: 52df506a-36ea-f113-137d-41866c923901

naa.5000cca0aa002500:

VSAN UUID: 52e2ce99-1836-c825-6600-653e8142e10f

naa.5000cca0aa0028cc:

VSAN UUID: 52e89346-fd30-e96f-3bd6-8dbc9e9b4436

naa.5000c50030287093:

VSAN UUID: 52ecacbe-ef3b-aa6e-eba3-6e713a0eb3b2

naa.5000c5003024eb5b:

VSAN UUID: 52f1eecb-befa-12d6-8457-a031eacc1cab

And the most important thing.The problem turned out to be the incorrect operation of the firmware of the RAID controller and the HPE driver with vSAN.Previously, in VMware 6.7 U1, compatible firmware for the HPE Smart Array P816i-a SR Gen10 controller in vSAN HCL was version 1.98 (which turned out to be fatal for our organization), and now it says 1.65 .Moreover, version 1.99, which solved the problem at that time (January 31, 2019), was already in the HPE bins, but they did not pass it to either VMware or us, referring to the lack of certification, despite our disclaimers and all that , they say, the main thing for us is to solve the problem with the storage and that's it.As a result, the problem was finally solved only after three months, when the firmware version 1.99 for the disk controller was released!What conclusions have I drawn?- ( ), .

- ! .

- «» , «» «» , 30% «».

- HPE, , .

- , :

- HPE - . , Enterprise . , , ).

- I did not foresee a situation where additional disk space may be needed to place copies of all the Company's servers in case of emergency.

- Additionally, in the light of what has happened, for VMware I will no longer buy hardware for large companies, any vendors other than DELL. Why, because DELL, as far as I know, acquired VMware, and now the integration of hardware and software in this direction is expected to be as close as possible.

As they say, burnt in milk, blow into the water.That's all guys. I wish you never to get into such terrible situations.As I recall, I will startle already!