Qrator Labs Annual Network Security and Availability Report

At Qrator Labs we have a tradition: at the beginning of every year (and February is certainly still the beginning) we publish a report on the previous year. Like any long-standing tradition, it has accumulated many related stories. For example, it has already become a "good" omen when, in early January, yet another DDoS attack hits our corporate pages, too late for us to analyze it in the report. 2020 was half an exception: we had managed to describe the attack vector (TCP SYN-ACK amplification) in advance, but the guest (and not just one) came to us, at qrator.net, only on January 18, and brought company right away: 116 Gbps at 26 Mpps.

We must admit that retelling the events of the past year is a peculiar genre. So, while reflecting on it, we decided to focus on what will happen this year, primarily with our team and product; do not be surprised to read about our development plans. We will start with the two topics that interested us most last year: SYN-ACK amplification and BGP “optimizations”.

TCP SYN-ACK amplification and other protocols

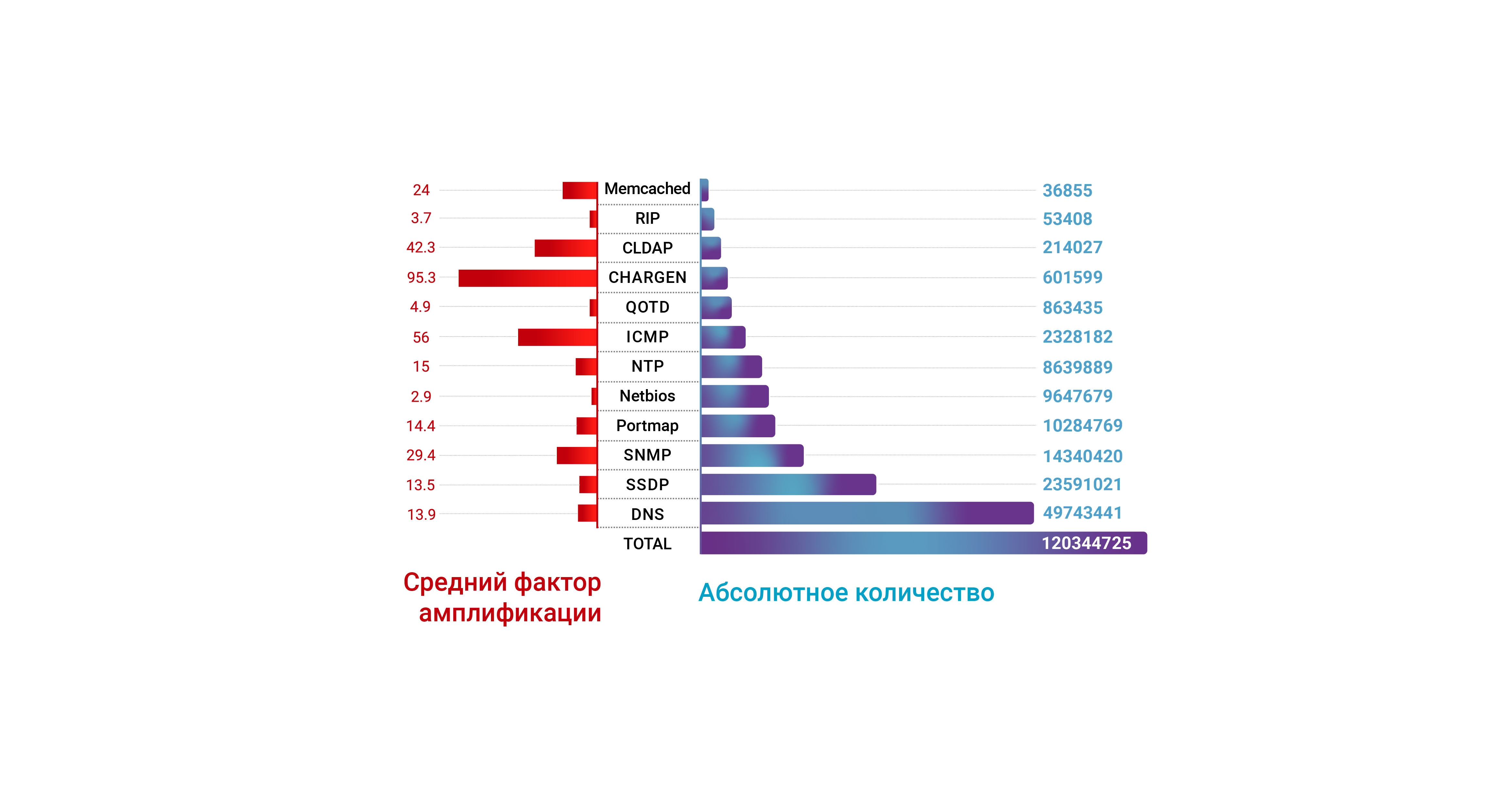

The growth of the IoT market means, among other things, that attackers can exploit vulnerable devices whenever they wish and generate significant attack bandwidth, as happened in the middle of the year, when the WSDD protocol was used to cause visible damage. The Apple ARMS protocol, which was used to achieve an amplification factor of about 35.5, was also visible in attacks on the Qrator Labs filtering network.

During 2019, the public also learned about new amplifiers (PCAP) and saw first-hand the long-known attack vector involving TCP: SYN-ACK. The main difference between this method and typical UDP amplification is that the SYN-ACK vector does not rely on a response larger than the request; instead, the reflecting server simply answers a single TCP request several times, which still produces a noticeable amplification factor. Since public clouds on the Internet respond to packets with spoofed source addresses, attacks using the SYN-ACK amplification vector have become one of the most serious network threats. The culmination came when the major cloud hosting provider Servers.com turned to Qrator Labs for help in mitigating DDoS attacks, including the SYN-ACK vector.

Interestingly, the countermeasure most often used in the past, dropping all UDP traffic, which neutralizes the lion's share of amplification attacks, does not help against the SYN-ACK vector at all. Smaller Internet companies have enormous difficulty neutralizing such threats, since this requires comprehensive DDoS mitigation measures.

Although TCP SYN-ACK amplification is not new, it has so far remained a relatively unknown attack vector. An attacker sends SYN packets to all public TCP services on the Internet, replacing the source address with the victim's address, and each of these services in turn responds several times (usually 3 to 5) in an attempt to establish a connection.
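To make the arithmetic concrete, here is a minimal Python sketch of what a single reflector does with one spoofed SYN. The retry count, the 1-second initial retransmission timeout that doubles on each retry, and the 60-byte packet sizes are illustrative assumptions (real values depend on the operating system and its settings, such as Linux's `net.ipv4.tcp_synack_retries`), and the sketch also assumes that no RST from the victim reaches the reflector to cancel the retries.

```python
from dataclasses import dataclass


@dataclass
class Reflector:
    """Assumed behaviour of one public TCP service hit by a spoofed SYN."""
    synack_retries: int = 4      # retransmissions after the first SYN-ACK (assumption)
    initial_rto_s: float = 1.0   # first retransmission timeout, doubled each time (assumption)
    synack_bytes: int = 60       # on-the-wire size of one SYN-ACK (assumption)


def synack_schedule(r: Reflector) -> list[float]:
    """Seconds after the spoofed SYN at which each SYN-ACK is sent to the victim."""
    times, t, rto = [0.0], 0.0, r.initial_rto_s
    for _ in range(r.synack_retries):
        t += rto
        times.append(t)
        rto *= 2  # exponential backoff between retransmissions
    return times


def amplification(r: Reflector, syn_bytes: int = 60) -> tuple[int, float]:
    """(packet factor, byte factor) for one spoofed SYN, assuming every retry fires."""
    packets = len(synack_schedule(r))
    return packets, packets * r.synack_bytes / syn_bytes


def victim_pps(attacker_syn_pps: float, r: Reflector) -> float:
    """Steady-state SYN-ACK rate at the victim for a constant spoofed SYN rate."""
    return attacker_syn_pps * len(synack_schedule(r))


if __name__ == "__main__":
    r = Reflector()
    print(synack_schedule(r))     # [0.0, 1.0, 3.0, 7.0, 15.0]
    print(amplification(r))       # (5, 5.0)
    print(victim_pps(5e6, r))     # 25000000.0
```

No response is larger than the request here; the factor comes entirely from repetition, which is why it lands in the same 3-5x range the text mentions.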
For a very long time, this attack vector was considered pointless, and only in July 2019 did we see attackers generate enough attack bandwidth to shake even very large and distributed network infrastructures. This is especially unusual given, as already mentioned, the absence of any “response amplification” as such: the attack uses only the reconnection behavior built into the protocol for the case of failure. For anyone interested in other protocols with similar “capabilities”, we can point to QUIC, which already allows the server to answer a client's request with a larger response (although the draft protocol also “recommends” not sending a response more than three times the size of the request).

Amplification no longer needs factors of about 100x to be a threat: clearly, 3-5x is quite enough today. The problem can hardly be solved without eliminating “spoofed” traffic as a category: an attacker should not be able to impersonate someone else's network identity and flood it with traffic from legitimate content sources. BCP38 (the best current practice for network ingress filtering against source address spoofing) does not work at all, and the creators of new transport protocols such as QUIC underestimate the danger posed by even very small amplification capabilities. They are on the other side of the problem. Networks need a reliable way to discard, or at least limit, spoofed traffic, and such a tool must have enough information about the legitimacy of the request source. Cloud networks owned by companies such as Amazon, Akamai, Google and Azure are today an almost perfect target for abuse in TCP amplification: they have a lot of powerful hardware that can serve almost any goal of an attacker.
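The QUIC rule mentioned above is easiest to picture as a byte budget per unvalidated client address (the published QUIC specification, RFC 9000, later made this 3x limit a requirement). The Python sketch below is our own illustration, not code from any QUIC implementation; the class and method names are hypothetical. The 1200-byte figure in the usage is QUIC's minimum size for a client's first (Initial) datagram.

```python
class AntiAmplificationBudget:
    """Bytes a server may still send to a client address it has not yet validated."""

    FACTOR = 3  # "no more than three times the size of the request"

    def __init__(self) -> None:
        self.received = 0
        self.sent = 0
        self.validated = False  # set once the client proves it owns its address

    def on_receive(self, nbytes: int) -> None:
        self.received += nbytes

    def can_send(self, nbytes: int) -> bool:
        if self.validated:
            return True
        return self.sent + nbytes <= self.FACTOR * self.received

    def on_send(self, nbytes: int) -> None:
        if not self.can_send(nbytes):
            raise ValueError("sending would exceed the anti-amplification limit")
        self.sent += nbytes


if __name__ == "__main__":
    budget = AntiAmplificationBudget()
    budget.on_receive(1200)        # one padded client Initial datagram
    budget.on_send(3000)
    print(budget.can_send(600))    # True: 3600 bytes is exactly 3 x 1200
    print(budget.can_send(601))    # False: a spoofed source gets at most 3x back
```

Even with the cap enforced, a spoofed client still gets up to 3x the bytes it sent, which falls squarely in the 3-5x range the text considers sufficient for attackers.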

It is difficult to eliminate the consequences of such attacks on the modern Internet. As already mentioned, modern application frontends and backends, and the libraries used to build them, are tightly interconnected. Relying on open source software hosted inside large clouds in your own development stack can lead to serious trouble after an attack that uses SYN-ACK amplification from that same cloud. Ending up with broken repositories and configuration files that no longer update, because the cloud provider has blocked your address (the spoofed source address of the requests was yours), is a situation nobody wants to be in. During 2019, we faced this situation several times while handling complaints from affected companies, which discovered unexpected critical dependencies for the first time in their existence.

The BGP protocol needs further development so that it can be used to fight spoofing in the TCP/IP stack. Routing tables are fundamentally different from subnet tables, and we need to teach the network to quickly isolate and discard an illegitimate packet; in other words, to provide authentication at the network infrastructure level. Attention should be paid not to the destination address but to the source address, matching it against the information contained in the routing table.
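Matching a packet's source address against the routing table is what the long-standing unicast Reverse Path Forwarding (uRPF) check does. The Python sketch below illustrates that general idea with a toy forwarding table; the prefixes and interface names are hypothetical, and it is an illustration of source-address validation, not the BGP extension the text calls for.

```python
import ipaddress
from typing import Optional


class Fib:
    """Toy forwarding table: prefix -> outgoing interface, with longest-prefix match."""

    def __init__(self, routes: dict[str, str]) -> None:
        self.routes = {ipaddress.ip_network(p): ifc for p, ifc in routes.items()}

    def lookup(self, addr: str) -> Optional[str]:
        ip = ipaddress.ip_address(addr)
        best = None
        for net, ifc in self.routes.items():
            if ip in net and (best is None or net.prefixlen > best[0].prefixlen):
                best = (net, ifc)
        return best[1] if best else None


def strict_urpf_ok(fib: Fib, src: str, in_ifc: str) -> bool:
    """Strict mode: the best route back to the source must leave via the arrival interface."""
    return fib.lookup(src) == in_ifc


def loose_urpf_ok(fib: Fib, src: str) -> bool:
    """Loose mode: any route back to the source is enough."""
    return fib.lookup(src) is not None


if __name__ == "__main__":
    fib = Fib({
        "0.0.0.0/0": "upstream",
        "192.0.2.0/24": "cust1",
        "198.51.100.0/24": "cust2",
    })
    print(strict_urpf_ok(fib, "192.0.2.10", "cust1"))    # True: legitimate source
    print(strict_urpf_ok(fib, "198.51.100.7", "cust1"))  # False: cust1 spoofing cust2
    print(strict_urpf_ok(fib, "203.0.113.5", "cust1"))   # False: route points upstream
    print(loose_urpf_ok(fib, "203.0.113.5"))             # True: the default route matches
```

The last line shows why loose mode offers little protection where a default route exists, while strict mode is known to misfire under asymmetric routing; both limits illustrate why better routing-level information about source legitimacy is needed.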

BGP - “optimizers”

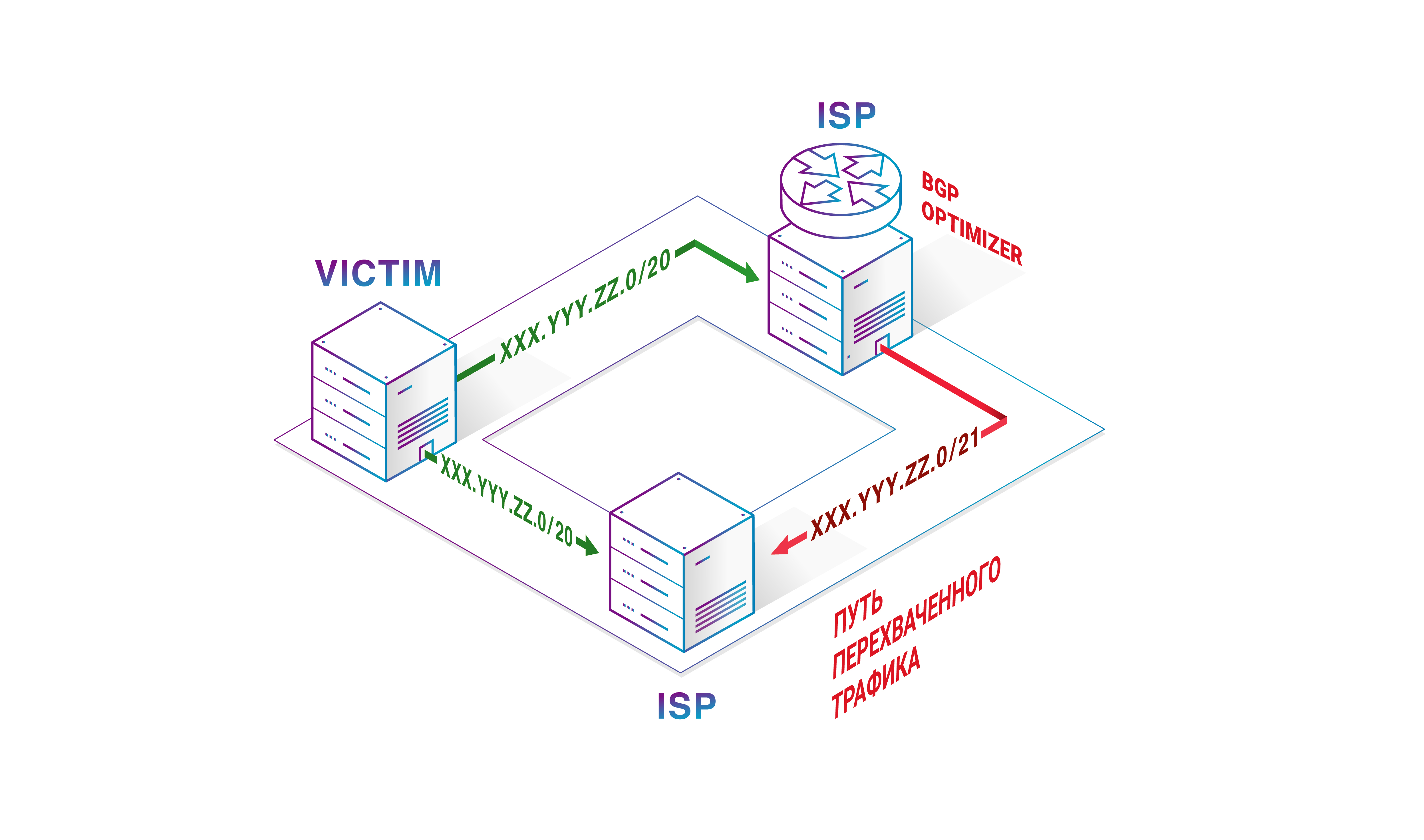

BGP incidents never stop. On the current scale of network incidents, route leaks and hijacks of announced prefixes that spread far enough pose the main danger, both in how far they propagate and in how long it takes to eliminate the consequences. Perhaps this is because the network component itself has developed much more slowly than the rest of the world of new hardware and software. For a long time this was true; it is time to abandon this legacy. We need to invest time and money in network software and hardware, as well as in the people who configure BGP filters.

The 2019 incidents involving BGP optimizers showed that the BGP routing data everyone relies on has a lot of problems. The fact is that in a BGP announcement received from a peer, you can change almost all of the content before announcing it again: the protocol is very flexible. This is exactly what optimizers exploit: more-specific or less-specific network prefixes, combined with missing filters and the local preference attribute. If someone disaggregates a prefix into two smaller ones, they will usually win the competition for the right to carry the traffic. Optimizers take a correct route and announce a smaller part of it, quite straightforwardly. And it works, breaking large parts of the Internet.

BGP optimizers exist because many companies want to control their outgoing traffic flow automatically, without thinking about the traffic as such. Accepting routes that should not exist is a huge mistake, because such routes do not exist at the point they supposedly originate from.

A lot was written in 2019 about the risks of “BGP optimization”, including by our company. In the case of Verizon, of course, creating a new filtering policy for each new customer of the service is unpleasant.
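Why disaggregation wins is a matter of longest-prefix match: routers forward on the most specific matching prefix, however the path looks. This Python sketch uses documentation prefixes and hypothetical labels to show a /24 losing its traffic to two leaked /25 halves.

```python
import ipaddress
from typing import Optional


def disaggregate(prefix: str) -> list[str]:
    """Split a prefix into its two halves, as a BGP optimizer does."""
    return [str(n) for n in ipaddress.ip_network(prefix).subnets(prefixlen_diff=1)]


def best_route(rib: dict[str, str], dst: str) -> Optional[str]:
    """Longest-prefix match: the most specific covering prefix wins."""
    ip = ipaddress.ip_address(dst)
    matches = [(ipaddress.ip_network(p), via) for p, via in rib.items()
               if ip in ipaddress.ip_network(p)]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


if __name__ == "__main__":
    rib = {"198.51.100.0/24": "legitimate origin"}
    print(best_route(rib, "198.51.100.200"))   # legitimate origin
    for half in disaggregate("198.51.100.0/24"):
        rib[half] = "optimizer leak"
    print(best_route(rib, "198.51.100.200"))   # optimizer leak
```

Path length, local preference and the legitimacy of the origin never enter the comparison once a more-specific prefix is present, which is what makes leaked more-specifics so effective.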
As for Verizon, it is known that it had no filters at all, since it accepted hundreds of problematic routes from its own customer AS396531, which is a “stub”: an autonomous system with a single connection. Moreover, the telecom giant had no prefix limit on this session either. There was not even a basic check for other Tier-1 operators in the AS_PATH received from the customer (such a check can be configured once as a default and requires no maintenance or changes).

This incident, involving Verizon and Cloudflare, was discussed quite vigorously in the press. Besides what Verizon could have done, many noted the benefits of RPKI and of a strict maxLength in ROAs. But what about maxLength? It is known that with a strict maxLength, all announcements of more-specific subnets become Invalid during ROA validation. It is also known that there is a policy of dropping Invalid routes. There is also an IETF draft stating that maxLength should be equal to the prefix length.

Cloudflare follows these best practices. However, there is a small problem: Verizon did not drop Invalid routes. Perhaps it did not perform any RPKI validation at all. As a result, all routes for the more-specific subnets spread even further, although they were Invalid from the point of view of Route Origin Validation, and pulled all the traffic onto themselves. At the same time, Cloudflare could not announce the same more-specific routes itself, because its upstream providers would drop them as Invalid.

A route leak can be stopped by simply manipulating the AS_PATH attribute when creating the announcement, in the form ASx AS396531 ASx (where ASx is the origin AS number): the leaking AS then discards the route thanks to BGP loop detection, while the problem is solved in other ways. However, each such manipulation is one more policy that has to be kept in mind. In real life, the standard method is used most often: a complaint, which results in extra hours of waiting. Communication can be painful, and we cannot blame Cloudflare for this: they did everything they could under the circumstances.
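The two basic safeguards that were missing on Verizon's session with its customer, as described above, a prefix limit and a check for Tier-1 networks in the customer's AS_PATH, fit in a few lines. This Python sketch is a simplified model rather than router configuration: the ASNs are documentation numbers, and the Tier-1 set is a hypothetical placeholder to be filled from your own policy.

```python
from dataclasses import dataclass, field


@dataclass
class CustomerSession:
    customer_asn: int
    max_prefixes: int
    tier1_asns: frozenset[int]  # hypothetical: fill from your own policy
    accepted: set[str] = field(default_factory=set)

    def receive(self, prefix: str, as_path: list[int]) -> str:
        """Return 'accept', 'reject' or 'shutdown' for one route learned from the customer."""
        if not as_path or as_path[0] != self.customer_asn:
            return "reject"    # the path must start with the customer itself
        if self.tier1_asns.intersection(as_path):
            return "reject"    # a Tier-1 network behind a customer means a leak
        self.accepted.add(prefix)
        if len(self.accepted) > self.max_prefixes:
            return "shutdown"  # max-prefix exceeded: tear the session down
        return "accept"


if __name__ == "__main__":
    s = CustomerSession(customer_asn=64496, max_prefixes=2, tier1_asns=frozenset({64511}))
    print(s.receive("192.0.2.0/24", [64496]))                   # accept
    print(s.receive("198.51.100.0/24", [64496, 64500, 64511]))  # reject: Tier-1 in path
    print(s.receive("203.0.113.0/24", [64496]))                 # accept
    print(s.receive("203.0.113.0/25", [64496]))                 # shutdown: third prefix
```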

What is the result? We are sometimes asked how difficult it is to use BGP to do something bad. Suppose you are a novice villain. You need to connect to a large telecom operator with poor filter settings. Then pick any target and take over its network prefixes by announcing their more-specific parts. Also, do not forget to drop all transit packets. Congratulations! You have just created a black hole on the Internet for all of this service's transit traffic through your provider. The victim will lose real money because of such a denial of service and may well suffer significant reputational damage. It takes at least an hour to find the cause of such an incident, and another hour to bring everything back to normal, provided the situation is unintentional and all participants are willing to resolve it.

In March 2019, there was another case, which at the time we did not associate with BGP optimization. However, it also deserves attention. Imagine that you are a transit provider announcing the subnets of your own customers. If these customers have several providers and not just you, you receive only part of their traffic. But the more traffic, the greater the profit. So you decide to announce more-specific subnets of these networks with the same AS_PATH attribute to get all of their traffic. And the money along with it, of course.

Will ROA help in this case? Maybe, but only if you decide not to use maxLength at all and you have no ROA records with overlapping prefixes. For some telecom operators, this option is not feasible.

As for other BGP security mechanisms, ASPA would not help against this type of hijack, because the AS_PATH is a correct path. BGPSec is currently ineffective due to lack of support and the remaining possibility of downgrade attacks. So the motive remains: increasing profitability by receiving all the traffic of autonomous systems with several providers, while no protection stands in the way.
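Route Origin Validation itself is a small algorithm (RFC 6811): a route is Valid if some ROA covers it with a matching origin AS and a prefix length within maxLength, Invalid if it is covered but nothing matches, and NotFound otherwise. This Python sketch, with documentation prefixes and ASNs, shows why a /25 announced with the legitimate origin AS, as in the transit-provider scenario above, is caught only when maxLength does not allow it.

```python
import ipaddress
from dataclasses import dataclass
from typing import Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


@dataclass(frozen=True)
class Roa:
    prefix: Network
    max_length: int
    asn: int


def rov_state(roas: list[Roa], route_prefix: str, origin_asn: int) -> str:
    """Origin validation state of one route: 'valid', 'invalid' or 'not-found'."""
    route = ipaddress.ip_network(route_prefix)
    covered = False
    for roa in roas:
        if route.version != roa.prefix.version or not route.subnet_of(roa.prefix):
            continue
        covered = True
        if route.prefixlen <= roa.max_length and origin_asn == roa.asn:
            return "valid"
    return "invalid" if covered else "not-found"


if __name__ == "__main__":
    strict = [Roa(ipaddress.ip_network("198.51.100.0/24"), 24, 64496)]
    loose = [Roa(ipaddress.ip_network("198.51.100.0/24"), 25, 64496)]
    print(rov_state(strict, "198.51.100.0/24", 64496))  # valid
    print(rov_state(strict, "198.51.100.0/25", 64496))  # invalid: longer than maxLength
    print(rov_state(loose, "198.51.100.0/25", 64496))   # valid: the hijack slips through
    print(rov_state(strict, "203.0.113.0/24", 64496))   # not-found: no covering ROA
```

ROV only changes the outcome if the receiving network actually drops Invalid routes, which, as the Verizon case shows, cannot be taken for granted.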

Figure: The total number of static loops in the network.

What else can be done? The obvious and most radical step is to review current routing policies. This will help you divide your address space into the smallest possible non-overlapping parts that you would like to announce. Sign ROAs only for these routes, with maxLength equal to the prefix length. Current ROV validation can then protect you from such an attack. But again, some cannot afford to use only the smallest subnets.

With Radar.Qrator, you can track such events. To do this, we need basic information about your prefixes. You can establish a BGP session with a collector and provide information about your view of the Internet. We also appreciate those who are willing to send us a full routing table (full view), as this helps to track the extent of incidents; but for your own benefit and to start using the tool, a list of routes for your own prefixes is sufficient. If you already have a session with Radar.Qrator, please check that you are sending routes. This information is necessary for automatic detection of, and notification about, attacks on your address space.

To repeat: if such a situation is detected, you can try to counteract it. The first approach is to announce the more-specific prefixes yourself, and to repeat this at the next attack on these prefixes. The second approach is to punish the attacker by denying its autonomous system access to your routes. As described earlier, this is achieved by adding the attacker's AS number to the AS_PATH of your existing routes, which triggers loop protection.
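The loop-detection trick described above relies on a standard BGP rule: a router discards any route whose AS_PATH already contains its own AS number. A minimal Python sketch, with documentation ASNs standing in for your network (ASx) and for the attacker; the function names are hypothetical.

```python
def poisoned_path(origin_asn: int, poison: list[int]) -> list[int]:
    """AS_PATH as seen on the wire: origin, poisoned ASNs, origin again (ASx ATTACKER ASx)."""
    return [origin_asn, *poison, origin_asn]


def accepts(receiver_asn: int, as_path: list[int]) -> bool:
    """Standard eBGP loop detection: reject routes that already contain our own ASN."""
    return receiver_asn not in as_path


if __name__ == "__main__":
    path = poisoned_path(64496, [64511])  # 64511 plays the attacker
    print(path)                           # [64496, 64511, 64496]
    print(accepts(64511, path))           # False: the attacker drops our route
    print(accepts(64500, path))           # True: everyone else still accepts it
```

The trade-off mentioned above remains: the poisoned path is two hops longer, and every such poisoning is an extra policy that someone has to keep track of.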

Banks

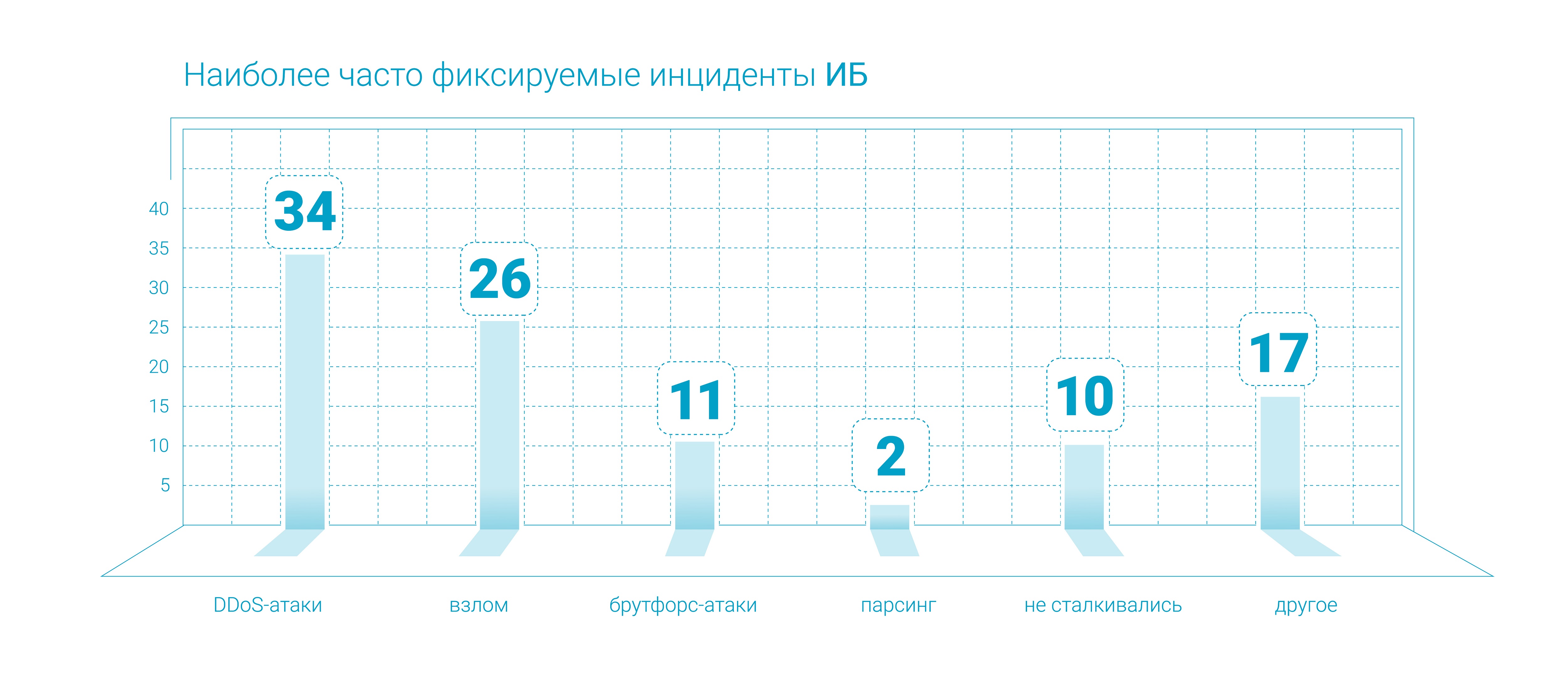

In 2019, we conducted a study in Russia which showed that financial institutions see information security as increasingly important and have begun to give such investments higher priority. The surveyed banks identify financial and reputational damage as the most serious consequences of information security breaches. Most of the financial institutions surveyed consider hybrid solutions to be the most effective means of countering distributed denial-of-service attacks.

The dynamics of the last two years clearly show that information security is growing at an enormous pace: over the past two years, most banks have increased their investment in it. Cybersecurity has already become visible at the level of company management. Business leaders are beginning to pay more attention to the implementation of security policies, and the position of Director of Information Security has acquired a new role. Information security managers are gradually becoming key advisers to the top management of financial organizations, introducing business tactics and security strategies in line with the company's needs.

Electronic commerce

We conducted a similar study in e-commerce and found that DDoS attacks remain a significant threat to Russian retail, especially for its developing digital service channels. The number of attacks in this segment continues to grow. In some market segments, despite overall stabilization and consolidation, trust in the integrity of competitors is still low. At the same time, large online stores mostly trust their customers and do not consider customers' personal motives a serious cause of cyber attacks.

As a rule, medium and large e-commerce businesses learn how ready they are for DDoS attacks only in practice, by passing a “battle test”. The need for a preliminary risk assessment when preparing a project is far from universally recognized, and even fewer companies actually carry out such an assessment. Respondents consider disrupting the store's operation and stealing the user database to be the main goals of hacking.

In general, the maturity of retail approaches to cybersecurity is growing: all respondents use some form of DDoS protection and a WAF. In further studies, we plan to include a representative sample of small online businesses among the respondents and to study this market segment, its risks and its current level of security in detail.

DNS-over-HTTPS vs DNS-over-TLS

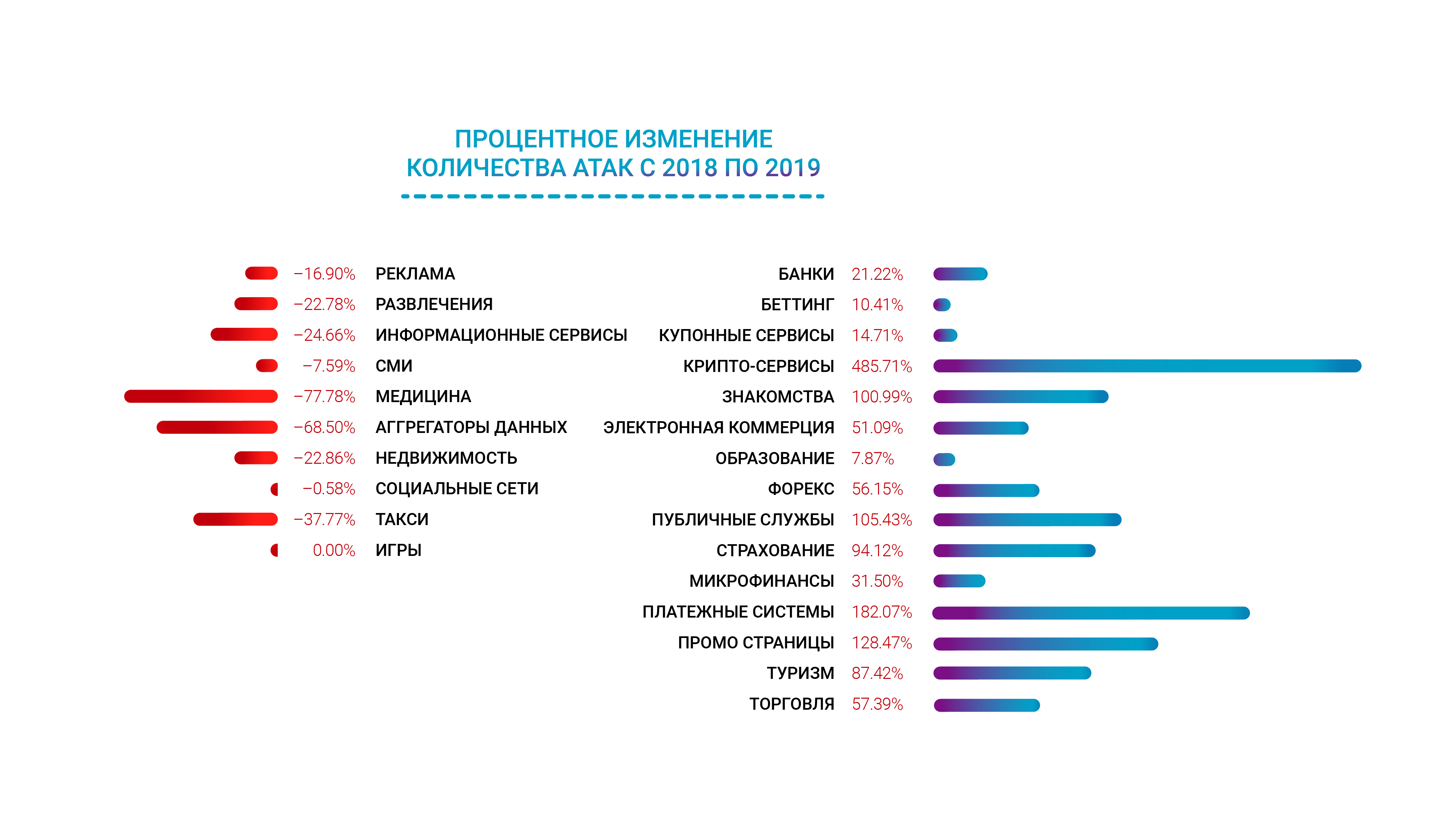

One of the hottest topics of 2019 was the debate over which technology holds the future: DoH or DoT. The disagreement stems both from significant differences between jurisdictions (the EU's GDPR versus federal and state laws in the USA) and from competition in the main browser market: Google Chrome and Mozilla Firefox, as well as Apple Safari. We are not ready to say whether the adoption of either technology will reduce the number of amplifiers on the Internet. However, with DoT this seems more feasible from an architectural point of view, thanks to the secure connections it establishes between DNS resolvers. As for other forecasts, we will wait for the market's decision.

Figure: The most attacked industries in 2019.

TCP acknowledgment vulnerabilities

In June 2019, as reported by Netflix, the first details appeared about serious vulnerabilities in some implementations of the TCP/IP stack. The problem was presumably first discovered in FreeBSD, after which our company received confirmation that the same and other vulnerabilities were present in Linux. The most dangerous is CVE-2019-11477 (SACK Panic), which can allow an attacker to trigger a kernel panic with a sequence of specially crafted packets. Three other vulnerabilities can lead to excessive resource consumption and, as a result, denial of service. Disabling SACK may increase latency, but it protects servers from possible denial-of-service attacks; in the view of Qrator Labs, a temporary decrease in TCP/IP performance is a reasonable price for neutralizing a serious vulnerability. Patches for these vulnerabilities have long been available, and we recommend installing them.

The future of BGP routing

At the end of 2019, Radar.Qrator is the largest BGP global routing collection and analytics platform with more than 650 established sessions.The Radar.Qrator team is working to improve the usability and reliability of the service, making improvements to the BGP relationship model, which is the basis of a paid service for monitoring an autonomous system in real time.In 2019, great efforts were made to accelerate the data processing process and the implementation of SLA, which guarantees the quality of the flow of analytical data. Today Radar is the largest analytical platform and BGP collector in the world, with more than 600 established sessions, and we hope to use the data to the full in order to notify consumers of the paid part of the service about all events occurring in BGP routing without delay.Radar.Qrator is growing faster than expected - both in terms of the number of website visitors and the number of subscribers at the same time. In 2020, thanks to feedback from customers, several significant improvements will be presented at once, one of such things will be a new incident storage for each autonomous system.One of the problems we encountered was the expected response time in the Radar web interface, accessible to every visitor to the site. With the increase in the amount of data, it became necessary to update both the data storage model and the architecture of user requests to it. Radar should be able to quickly ship all data for the requested period to any visitor. The proposed scheme of the ASPA mechanism.We also hope that during this year ASPAwill become RFC - an approved network standard. The need for a broader than the combination of IRR / RPKI, and more lightweight than the BGPSec solution, is obvious to everyone in the industry. In 2019, it became clear how an incorrect BGP configuration can lead to route leaks with terrible consequences in the form of unavailability of a huge number of services involving the largest Internet service providers. 
Surprisingly, these incidents proved once again that there is no silver bullet that covers every possible scenario. The initial push needs the support of the largest Internet providers in the world; the next step is to engage large communities that can help find the source of a route leak. In simple terms, ASPA is a new entity that combines the current capabilities of ROA and RPKI and implements a simple principle: "Know your provider." An AS owner needs to know only its upstream providers to get a reasonably reliable defense against BGP routing incidents.

As with ROA, ASPA lets you filter routes on any type of connection: from an upstream provider, from a peer, or, of course, from a customer. Together, ROA and ASPA can solve the lion's share of network security tasks without fundamental changes to the protocol itself, filtering out both intentional attacks and human error. However, we will also have to wait for support from software and hardware vendors, although we are confident that open-source support will not be long in coming.

One of the main advantages of ASPA is that it is a very simple idea. Work on the draft protocol is planned to be completed in 2020, and if it succeeds, the IETF and the draft's co-authors will reach consensus on consolidating the protocol's status.
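The "know your provider" principle can be illustrated with a minimal sketch. It assumes a hypothetical in-memory table mapping each customer AS to the set of providers it has authorized, and it covers only the simplest case discussed in the ASPA drafts: a route received from a customer or a peer, where every hop from the origin toward us must go "up" to an authorized provider. Routes received from an upstream provider need a more involved check that is not shown here, and the AS numbers are documentation and private-use values, not real networks.

```python
from typing import Dict, List, Set

# Hypothetical ASPA table: customer AS -> ASes it authorizes as its providers.
AspaTable = Dict[int, Set[int]]


def hop_status(aspa: AspaTable, customer: int, provider: int) -> str:
    """Classify one hop of the path, walking from the origin towards us."""
    if customer not in aspa:
        return "no_attestation"      # this AS has published no ASPA
    if provider in aspa[customer]:
        return "provider"            # the next AS is an authorized provider
    return "not_provider"            # an ASPA exists and does not list it


def verify_from_customer_or_peer(aspa: AspaTable, as_path: List[int]) -> str:
    """Simplified check; as_path is in BGP order (neighbor first, origin last)."""
    path: List[int] = []
    for asn in as_path:              # collapse prepending, e.g. 64500 64500 -> 64500
        if not path or path[-1] != asn:
            path.append(asn)
    path.reverse()                   # now origin first, our neighbor last
    statuses = [hop_status(aspa, a, b) for a, b in zip(path, path[1:])]
    if "not_provider" in statuses:
        return "invalid"             # the route went "down" somewhere: a leak
    if "no_attestation" in statuses:
        return "unknown"
    return "valid"


if __name__ == "__main__":
    aspa = {64500: {64510, 64511}, 64511: {64520}, 64530: {64511}}
    # 64500 learned the route from its provider 64511 and leaked it to its other provider 64510.
    print(verify_from_customer_or_peer(aspa, [64510, 64500, 64511, 64530]))  # invalid
```

Note that the AS owner only publishes its own provider set; the leak is caught because 64511's published providers do not include 64500.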

Current and Future Development of Qrator Filtration Network

Filtering logic

Over the previous two years, we have invested a significant amount of time and effort in improving the filtering logic, which is the basis of our attack mitigation service. The new filters now run across the entire network, delivering a significant increase in operational efficiency and a lower memory and CPU load at the points of presence. They also allow us to analyze requests synchronously and to serve a variety of JavaScript challenges that check whether a visitor is legitimate, improving the quality of attack detection.

The main reason for updating the filtering logic is the need to detect a whole class of bots from the very first request. Qrator Labs combines stateful and stateless checks and forwards a request to the protected server only after the user's legitimacy has been confirmed. The filters therefore have to be very fast: modern DDoS attacks reach tens of millions of packets per second, and some of them may consist of one-time, unique requests.

In 2020, as part of this process, we will also significantly change how traffic is "onboarded", that is, how work with a new protected service begins. Every service's traffic is unique, and we strive to ensure that new clients quickly get complete neutralization of all types of attacks, even before the model is well trained. As a result, filtering will be switched on more smoothly when a client connects under attack, with fewer false positives at the start. All this matters when a service, and the people behind it, are fighting for survival under massive network attacks.
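To make the stateful/stateless distinction concrete, here is a minimal sketch under stated assumptions; it is not Qrator's filter. The stateless part is an HMAC-signed, expiring token that a client could receive after passing a JavaScript challenge, so verifying it requires no per-client memory on the node. The stateful part is a per-IP request counter in fixed time windows. The key, token format, TTL, and limits are all hypothetical.

```python
import hashlib
import hmac
from collections import defaultdict
from typing import DefaultDict, Optional, Tuple

# Hypothetical key shared by all filtering nodes; a real system would rotate it.
SECRET = b"example-secret-do-not-use"


def _mac(client_ip: str, expiry: int, secret: bytes) -> str:
    msg = f"{client_ip}|{expiry}".encode()
    return hmac.new(secret, msg, hashlib.sha256).hexdigest()


def issue_token(client_ip: str, now: float, ttl: int = 300, secret: bytes = SECRET) -> str:
    """Stateless: everything needed to verify the token travels inside it."""
    expiry = int(now) + ttl
    return f"{expiry}.{_mac(client_ip, expiry, secret)}"


def verify_token(token: Optional[str], client_ip: str, now: float, secret: bytes = SECRET) -> bool:
    if not token:
        return False
    try:
        expiry_text, mac = token.split(".", 1)
        expiry = int(expiry_text)
    except ValueError:
        return False                     # malformed token
    if now > expiry:
        return False                     # expired
    expected = _mac(client_ip, expiry, secret)
    return hmac.compare_digest(mac.encode(), expected.encode())


class WindowCounter:
    """Stateful: counts requests per client IP in fixed time windows.
    Old windows are never evicted here; a real filter would expire them."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.counts: DefaultDict[Tuple[str, int], int] = defaultdict(int)

    def allow(self, client_ip: str, now: float) -> bool:
        key = (client_ip, int(now // self.window))
        self.counts[key] += 1
        return self.counts[key] <= self.limit


def admit(token: Optional[str], client_ip: str, now: float, counter: WindowCounter) -> bool:
    """Forward to the protected server only if both checks pass."""
    return verify_token(token, client_ip, now) and counter.allow(client_ip, now)
```

The cheap stateless check runs first, so requests without a valid token never consume memory in the counter.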

Distribution of DDoS attacks in 2019 by bandwidth used.

HTTP/2

Problems in implementations of the HTTP/2 protocol (denial-of-service vulnerabilities) were mostly resolved during 2019, which allowed us to enable support for this protocol inside the Qrator filtering network. We are now actively working to provide HTTP/2 protection to every client, completing a long and thorough testing period. Along with HTTP/2 support, eSNI became available with TLS 1.3, together with automated issuance of Let's Encrypt certificates. Currently, HTTP/2 is available on request as an additional option.
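Several of the HTTP/2 denial-of-service issues disclosed in 2019 came down to a peer flooding the server with cheap control frames (PING, SETTINGS, RST_STREAM), each of which costs the server work. One common mitigation, sketched here under our own assumptions rather than as Qrator's implementation, is to parse the fixed 9-byte frame header defined in RFC 7540 and close connections that exceed a per-window budget of such frames. The limit and window values are hypothetical.

```python
from typing import Optional, Tuple

# Frame type codes defined in RFC 7540, section 6.
DATA, HEADERS, RST_STREAM, SETTINGS, PING = 0x0, 0x1, 0x3, 0x4, 0x6
CONTROL_TYPES = {RST_STREAM, SETTINGS, PING}


def parse_frame_header(buf: bytes) -> Tuple[int, int, int, int]:
    """Split the 9-byte HTTP/2 frame header into (length, type, flags, stream id)."""
    if len(buf) < 9:
        raise ValueError("an HTTP/2 frame header is 9 bytes long")
    length = int.from_bytes(buf[0:3], "big")
    frame_type, flags = buf[3], buf[4]
    stream_id = int.from_bytes(buf[5:9], "big") & 0x7FFFFFFF   # ignore the reserved bit
    return length, frame_type, flags, stream_id


class ControlFrameGuard:
    """Hypothetical per-connection budget for control frames within a time window."""

    def __init__(self, limit: int = 100, window: float = 1.0) -> None:
        self.limit = limit
        self.window = window
        self.current: Optional[int] = None
        self.count = 0

    def on_frame(self, header: bytes, now: float) -> bool:
        """Return False when the connection should be closed (e.g. with GOAWAY)."""
        _, frame_type, _, _ = parse_frame_header(header)
        slot = int(now // self.window)
        if slot != self.current:           # a new window starts: reset the counter
            self.current, self.count = slot, 0
        if frame_type in CONTROL_TYPES:
            self.count += 1
        return self.count <= self.limit
```

Only the frame header is inspected, so the check stays cheap even at high packet rates; DATA and HEADERS frames do not consume the budget.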

Containers and Orchestration

Current containerization trends are poorly compatible with our approach to security, so working with Kubernetes means overcoming a variety of challenges. Security and availability are among our highest priorities, which prevents us from relying on practices common among Kubernetes users, above all giving the orchestrator full control over the operating system and all its features; that would leave us entirely at the mercy of the developers of Kubernetes and its add-ons. So far, Kubernetes does not offer all the capabilities we need out of the box, and some are even marked "use as is, no guarantees" and cannot be examined in detail. None of this deters us from continuing to adopt Kubernetes for managing the filtering network, gradually adapting it to our requirements and making it an important part of our internal infrastructure.

Developing distributed, fault-tolerant networks requires the appropriate tools (CI/CD and automated testing among them) and the ability to use them to build and manage a stable and reliable system. As the amount of data keeps growing, monitoring efforts must grow with it. The most natural way to build monitoring is to give applications a safe and easily observable way to communicate. We believe Kubernetes has already proven its versatility and applicability, and specializing it further for the needs of infrastructure that fights DDoS attacks is simply the reality of working with any open-source solution.

Change in the average duration of DDoS attacks from 2018 to 2019.

Yandex.Cloud and Ingress 2.0

In 2019, together with Yandex.Cloud, we presented an updated version of our incoming traffic filtering service, Ingress, significantly expanding its filtering and configuration capabilities and giving end users clear management interfaces. The new version of the service already runs both on the Qrator Labs filtering network and on the Yandex.Cloud network. The Yandex.Cloud team has come a long way with us, combining the two infrastructures by placing Qrator Labs physical nodes inside the partner's infrastructure to process its traffic. Finally, after a period of in-depth testing, the new version of Ingress is ready to use. With Yandex's help, we have built one of the best solutions for filtering incoming traffic, designed specifically for services that generate a lot of content. We also consider it a great success that the new version is intuitive for end users and allows more flexible configuration of filtering parameters.

TLS 1.3 and ECDSA certificates

At the beginning of 2019, Qrator Labs wrote about supporting TLS 1.3 while disabling SSLv3. Because our DDoS mitigation service is also available without disclosing encryption keys, we made additional improvements to that filtering scheme, increasing the speed and reliability of syslog transmission. Let us recall the results of our performance measurements.

As the measurements show, on the same core of an Intel® Xeon® Silver 4114 CPU @ 2.20GHz (released in Q3 2017), ECDSA outperforms the widely used RSA with a 2048-bit key by a total factor of 3.5.

Let's look at the results of running the openssl speed command for ECDSA and RSA on the same processor. They confirm what we wrote earlier about how the costs of signing and verification differ between ECC and RSA. Today, with TLS 1.3, elliptic-curve cryptography gives a significant increase in server performance along with a higher level of protection than RSA. This is the main reason why we at Qrator Labs recommend, and strongly encourage, customers who are ready to do so to use ECDSA certificates as well. On modern CPUs, the performance gain in favor of ECDSA reaches 5x.

Smaller improvements

One of the small and inconspicuous, but nonetheless important, innovations of 2019 was active health checking of upstreams. If something goes wrong with one of a client's upstream servers, the filtering service will be the first to know and will take that upstream out of rotation until the problem is resolved. This relies not only on monitoring errors and traffic patterns but also on a dedicated availability-check interface that is set up together with the customer.

At the end of 2019, we made a major update to the filtering service's user interface. Although the old version of the personal account is still available, new features, such as TLS certificate management, are being developed in the updated part, which offers wider functionality. While the old personal account used server-side rendering, the new version, in the best traditions of the modern web, is a JavaScript application that runs on the client and communicates with the server via a REST API. This approach lets us deliver new features quickly while giving each customer deeper options for customizing their personal account. One example is the Analytics section, where individual metrics can be customized, and the number of available metrics keeps growing.
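The active upstream checks described at the start of this section can be pictured as a small state machine per upstream. This is a minimal sketch assuming a pluggable probe function and "rise/fall" thresholds borrowed from common load-balancer practice; the names and default values are hypothetical, not Qrator's configuration.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Upstream:
    """One origin server of a protected client, with hysteresis on its health state."""
    name: str
    probe: Callable[[], bool]   # hypothetical check, e.g. a request to an agreed check URL
    fall: int = 3               # consecutive failures before leaving rotation
    rise: int = 2               # consecutive successes before returning
    healthy: bool = True
    _fails: int = field(default=0, init=False, repr=False)
    _oks: int = field(default=0, init=False, repr=False)

    def check(self) -> None:
        try:
            ok = bool(self.probe())
        except Exception:
            ok = False          # timeouts and connection errors count as failures
        if ok:
            self._fails, self._oks = 0, self._oks + 1
            if not self.healthy and self._oks >= self.rise:
                self.healthy = True
        else:
            self._oks, self._fails = 0, self._fails + 1
            if self.healthy and self._fails >= self.fall:
                self.healthy = False


def in_rotation(pool: List[Upstream]) -> List[str]:
    """Names of upstreams that should currently receive filtered traffic."""
    return [u.name for u in pool if u.healthy]
```

The thresholds keep a single lost probe from flapping an upstream in and out of rotation, at the cost of reacting a few probe intervals later.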

Comparison of the two main classification groups of DDoS attacks in 2019.

IPv6

Qrator Labs is actively preparing to start using IPv6 in its traffic filtering services. For any cybersecurity company, such a transition cannot be simple. Because of the very nature of DDoS attacks, mitigating network attacks over IPv6 requires a radical change of approach: it is no longer possible to rely on any form of "list" when facing a theoretical space of 2^128 IP addresses. And that is only TCP, not counting UDP.

For us, 2020 will be the year of IPv6. With free IPv4 address space exhausted, there is no other way to build a network that meets future requirements. We hope to answer effectively all the challenges involved in mitigating IPv6 attacks. First of all, this means we will be able to announce customers' IPv6 subnets for filtering through our network while offering a first-class cybersecurity service.

Antibot

In 2019, the industry witnessed a fierce battle between fingerprinting (visitor identification) and attempts to limit it. The desire of Internet service owners and developers to restrict automated requests from bots, or to separate them from requests by legitimate users, is understandable. On the other hand, there is nothing strange in browser developers wanting to protect their users. Qrator Labs has reminded the market for years that if some information is publicly available and no authorization is required to obtain it, the probability of protecting it from automated scraping tends to zero. At the same time, we remind digital business owners that they have the right to decide what to do with requests arriving at their servers. In certain situations, a proactive approach helps assess and identify the threat.

As bots generate an ever larger share of traffic, we can expect that at some point large services will create special interfaces for good bots, giving them legitimate access to information, while everything else is blocked. Even now, at the beginning of 2020, a user who does not look 100% legitimate may be unable to access a resource immediately; many sites will not let you in if, say, you simply change your browser's user-agent to an arbitrary one.

Inside Qrator Labs, the antibot service has been under active development for several years, and in 2020 we expect to offer it to our first customers as a beta. The service will operate in semi-automatic and automatic modes, making it possible to identify automated requests from bots and set security rules against them.
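One established way to let "good bots" in without trusting a spoofable user-agent is forward-confirmed reverse DNS: resolve the client IP to a hostname, check that the hostname belongs to the crawler operator's domain, then resolve that hostname forward and make sure it maps back to the same IP. The sketch below assumes the allowed domain suffixes are supplied by the caller (the one in the usage note is hypothetical) and lets the resolvers be injected for testing; by default it uses Python's `socket.gethostbyaddr` and `socket.gethostbyname_ex`, the latter covering IPv4 only. It illustrates the general technique and does not describe Qrator's antibot service.

```python
import socket
from typing import Callable, Iterable, List, Tuple

Resolver = Callable[[str], Tuple[str, List[str], List[str]]]


def is_verified_crawler(ip: str, allowed_suffixes: Iterable[str],
                        reverse: Resolver = socket.gethostbyaddr,
                        forward: Resolver = socket.gethostbyname_ex) -> bool:
    try:
        hostname = reverse(ip)[0].rstrip(".").lower()     # PTR lookup
    except OSError:
        return False                                       # no PTR record
    suffixes = [s.lower() for s in allowed_suffixes]
    if not any(hostname == s or hostname.endswith("." + s) for s in suffixes):
        return False                                       # not the operator's domain
    try:
        addresses = forward(hostname)[2]                   # A records for that name
    except OSError:
        return False
    return ip in addresses                                 # must map back to the same IP
```

For example, `is_verified_crawler(client_ip, ["crawler.example.com"])` returns `True` only when both lookups agree; changing the user-agent string alone cannot pass it.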

Equipment

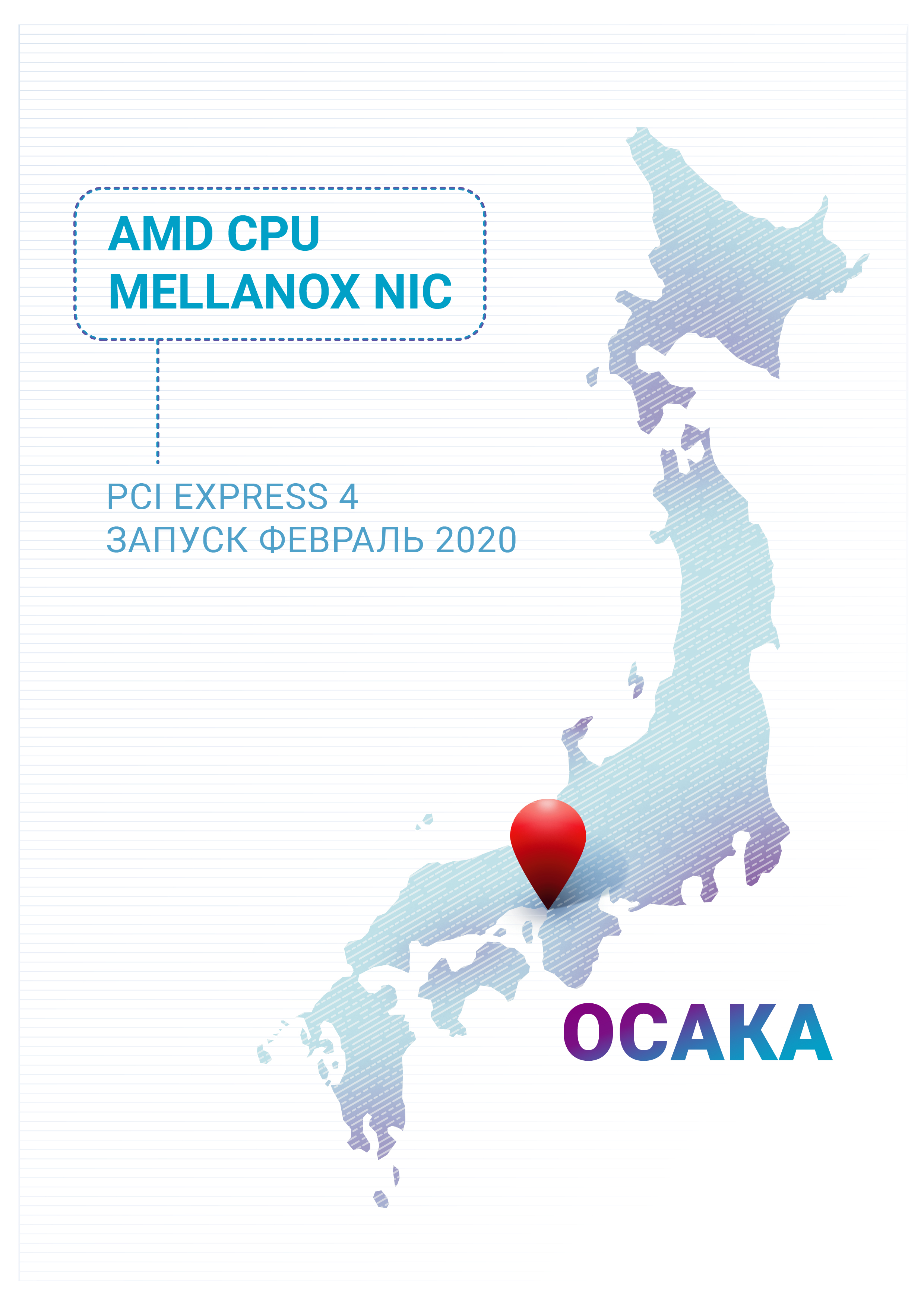

Having tested the new AMD processors, the company's engineers were so intrigued that in the first quarter of 2020 Qrator Labs will commission a new point of presence in Osaka, Japan, built entirely on AMD processors. PCI Express 4.0, available with AMD CPUs, promises to double the bandwidth between the CPU and the network interface. During the year, we will test and evaluate this combination in the most demanding scenarios. As the volume of data transmitted over networks grows, the link between the network interface and the processor is gradually becoming a bottleneck. The combination of additional cores, a larger cache, and the new version of PCI Express promises good results.

Another reason for using AMD CPUs is the need to diversify the network architecture, although this is the first Qrator Labs point of presence built entirely on the new processors. We are really looking forward to bringing the new equipment online and will report its launch separately at the beginning of 2020. We hope that AMD processors combined with high-performance PCI Express 4.0 network cards will meet our requirements. The Asian market is one of the fastest growing, and we want to assess the further prospects for mitigating DDoS attacks there with the new equipment.

At the same time, a new generation of Intel processors, Ice Lake, is also expected. Whether we use them in our infrastructure will largely depend on the results of testing and comparison. Beyond processors, the whole industry is awaiting the release of Intel 800 series network cards.
In 2020, we will try to find applications for them inside the filtering network infrastructure. As for switches, Qrator Labs continues to work with Mellanox, which has repeatedly proved to be a reliable supplier and partner. It is not easy to say, but while Broadcom dominates the network equipment market, we hope that the merger with NVIDIA will give Mellanox a chance to compete on equal terms.

Future improvements to the filtering network

During 2020, we expect to significantly deepen our partnerships with providers of a wide variety of services, such as Web Application Firewalls and Content Delivery Networks, within the filtering network. We have always tried to integrate a variety of providers into our infrastructure to offer consumers the best combinations of services. By the end of 2020, we plan to announce the availability of a number of new service providers in conjunction with Qrator.

2019 also raised a number of questions about protecting WebSockets. The technology's prevalence and popularity keep growing, while protecting it properly is no less complex. During 2020, we expect to work with some of our customers who use WebSockets to find the best way to protect it, even when arbitrary (unknown) data is transmitted.

What we do is neither know-how nor a revelation. The company entered this market later than many competitors, and the team makes great efforts to build something unique that works. Part of what makes this possible is the genuine interest of the company's employees in academic and scientific research in our main areas of work, which allows us to be prepared for "sudden" events.

Since you have read to the end, here is the .pdf for distribution. Do not forget about network security yourself, and do not let others forget it either.

Source: https://habr.com/ru/post/undefined/
