The task of detecting objects in the image today is one of the leading in the field of machine vision. Its essence is not only to classify the object in the picture, but also to indicate its exact location.The results of object detection can be supplemented with information on how far this object is located. The distance measurement problem can be solved using the Intel RealSense D435 Depth Camera , which measures depth at each point.In this article, we will solve the problem of measuring the distance to an object in real time using the OpenCV library and RealSense technology.

Requirements

RealSense CameraTo measure distance, we need a RealSense D435 Depth Camera . For this example, there is no need to install the RealSense SDK .Pyrealsense2 libraryAll the code, for ease of demonstration, we will write in Python 3.7 , so we need the pyrealsense2 package .pip install pyrealsense2

OpenCV libraryAlso, we need the OpenCV library (any version starting from 3.4 is suitable). The library installation manual can be viewed on the official OpenCV website .Together with OpenCV , one more necessary library will be installed - numpy .Object Detection

The first step is to select objects in the video. To detect objects in real time, the so-called one-stage models (for example, Retina Net, SSD, YOLO ), which win in speed of operation, are perfect .For simplicity of the experiment, we will take the trained model SSDLite Mobilenet v2 . Instead, any other model can be used that returns the coordinates of the object in one form or another.You can download the archive with the model files in the official TensorFlow repository .The archive contains a frozen calculation graph in binary .pb format, as well as various configs. But to use the model in OpenCV, you must also have a graph of calculations in the text format .pbtxt . It is not in the archive, so it must be generated manually.Copy two scripts from the OpenCV repository :- github.com/opencv/opencv/blob/master/samples/dnn/tf_text_graph_ssd.py

- github.com/opencv/opencv/blob/master/samples/dnn/tf_text_graph_common.py

Then, execute the following command, specifying the necessary paths:python tf_text_graph_ssd.py --input /path/to/model.pb --config /path/to/example.config --output /path/to/graph.pbtxt

The output is a graph.pbtxt file .We put the existing model files in the project folder. In my case, this is the models / folder .Development

Now everything is ready for development. Let's start writing code.We import the necessary libraries and make a stub on the draw_predictions () method , which we will need later:import pyrealsense2 as rs

import numpy as np

import cv2 as cv

def draw_predictions(pred_img, color_img, depth_image):

pass

Create the main objects:pipeline = rs.pipeline()

config = rs.config()

colorizer = rs.colorizer()

spatial = rs.spatial_filter()

spatial.set_option(rs.option.holes_fill, 3)

We initialize the ssd model. Here, the .pbtxt file that we generated above is useful to us :

model = cv.dnn.readNetFromTensorflow(

"models/ssdlite_mobilenet_v2_coco_2018_05_09/frozen_inference_graph.pb",

"models/ssdlite_mobilenet_v2_coco_2018_05_09/graph.pbtxt"

)

We start camera streams:

config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)

config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)

profile = pipeline.start(config)

note that we capture the color stream in BGR format , since this is the format that uses OpenCV by default.We start a cycle in which we will sequentially capture and process frames:cv.namedWindow("RealSense object detection", cv.WINDOW_AUTOSIZE)

try:

while True:

frames = pipeline.wait_for_frames()

depth_frame = frames.get_depth_frame()

color_frame = frames.get_color_frame()

if not depth_frame or not color_frame:

continue

For further processing of frames, we represent them in the form of numpy arrays:

depth_image = np.asanyarray(depth_frame.get_data())

color_image = np.asanyarray(color_frame.get_data())

We use the ssd model to detect:

model.setInput(cv.dnn.blobFromImage(color_image, size=(300, 300), swapRB=True, crop=False))

pred = model.forward()

draw_predictions(pred, color_image, depth_image)

We draw the result in the window:

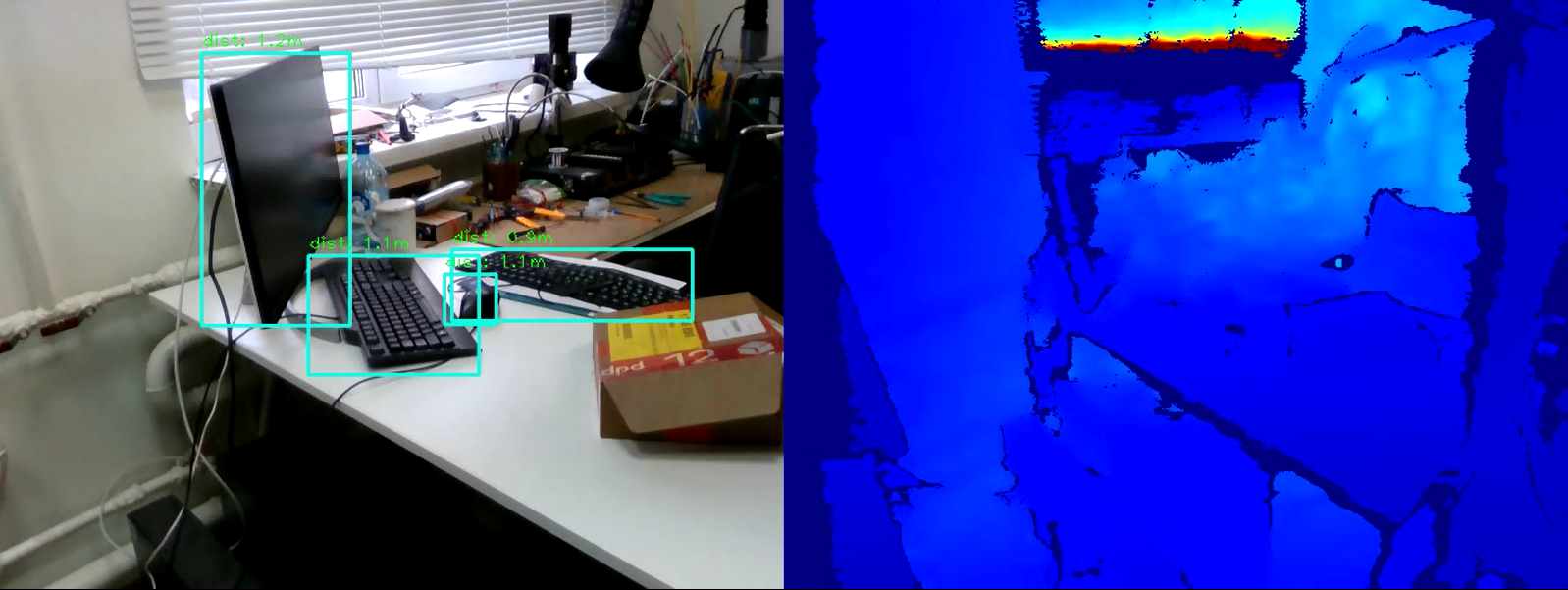

colorized_depth = cv.applyColorMap(cv.convertScaleAbs(depth_image, alpha=0.03), cv.COLORMAP_JET)

images = np.hstack((color_image, colorized_depth))

cv.imshow("RealSense object detection", images)

We write the condition for exiting the loop:

key = cv.waitKey(1) & 0xFF

if (key == 27) or (key == ord('q')):

cv.destroyWindow("RealSense object detection")

break

Close the stream:finally:

pipeline.stop()

And the last one. We fill in the draw_predictions () rendering method that we created at the very beginning. In it, we will consider the distance to objects. I decided to calculate the distance as follows: take the average of all distance points in the frame of the found object:def draw_predictions(pred_img, color_img, depth_image):

for detection in pred_img[0,0,:,:]:

score = float(detection[2])

if score > 0.5:

left = detection[3] * color_img.shape[1]

top = detection[4] * color_img.shape[0]

right = detection[5] * color_img.shape[1]

bottom = detection[6] * color_img.shape[0]

cv.rectangle(color_img, (int(left), int(top)), (int(right), int(bottom)), (210, 230, 23), 2)

depth = depth_image[int(left):int(right), int(top):int(bottom)].astype(float)

depth_scale = profile.get_device().first_depth_sensor().get_depth_scale()

depth = depth * depth_scale

dist,_,_,_ = cv.mean(depth)

dist = round(dist, 1)

cv.putText(color_img, "dist: "+str(dist)+"m", (int(left), int(top)-5), cv.FONT_HERSHEY_PLAIN, 1, (0, 255, 0), 1)

Full codeimport pyrealsense2 as rs

import numpy as np

import cv2 as cv

def draw_predictions(pred_img, color_img, depth_image):

for detection in pred_img[0,0,:,:]:

score = float(detection[2])

if score > 0.5:

left = detection[3] * color_img.shape[1]

top = detection[4] * color_img.shape[0]

right = detection[5] * color_img.shape[1]

bottom = detection[6] * color_img.shape[0]

cv.rectangle(color_img, (int(left), int(top)), (int(right), int(bottom)), (210, 230, 23), 2)

depth = depth_image[int(left):int(right), int(top):int(bottom)].astype(float)

depth_scale = profile.get_device().first_depth_sensor().get_depth_scale()

depth = depth * depth_scale

dist,_,_,_ = cv.mean(depth)

dist = round(dist, 1)

cv.putText(color_img, "dist: "+str(dist)+"m", (int(left), int(top)-5), cv.FONT_HERSHEY_PLAIN, 1, (0, 255, 0), 1)

pipeline = rs.pipeline()

config = rs.config()

colorizer = rs.colorizer()

model = cv.dnn.readNetFromTensorflow(

"models/ssdlite_mobilenet_v2_coco_2018_05_09/frozen_inference_graph.pb",

"models/ssdlite_mobilenet_v2_coco_2018_05_09/graph.pbtxt"

)

config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)

config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)

profile = pipeline.start(config)

cv.namedWindow("RealSense object detection", cv.WINDOW_AUTOSIZE)

try:

while True:

frames = pipeline.wait_for_frames()

depth_frame = frames.get_depth_frame()

color_frame = frames.get_color_frame()

if not depth_frame or not color_frame:

continue

depth_image = np.asanyarray(depth_frame.get_data())

color_image = np.asanyarray(color_frame.get_data())

model.setInput(cv.dnn.blobFromImage(color_image, size=(300, 300), swapRB=True, crop=False))

pred = model.forward()

draw_predictions(pred, color_image, depth_image)

colorized_depth = cv.applyColorMap(cv.convertScaleAbs(depth_image, alpha=0.03), cv.COLORMAP_JET)

images = np.hstack((color_image, colorized_depth))

cv.imshow("RealSense object detection", images)

key = cv.waitKey(1) & 0xFF

if (key == 27) or (key == ord('q')):

cv.destroyWindow("RealSense object detection")

break

finally:

pipeline.stop()

Conclusion

Thus, we have a simple script to estimate the distance to the selected object. The algorithm may make a mistake of about 50 cm, but overall works well within four meters. To increase the accuracy of the measurement, you can apply additional filters embedded in pyrealsense2 to the depth map to increase the image quality, or modify the depth calculation algorithm itself (for example, calculate a weighted average or measure the distance in one central area). As you can see, the task is not difficult, but interesting.