2018 was a turning point for machine learning models that handle text (or, more precisely, for Natural Language Processing, NLP). Our conceptual understanding of how best to represent words and sentences so as to capture their underlying meanings and the relationships between them is evolving rapidly. Moreover, the NLP community has been releasing incredibly powerful components that anyone can download for free and use in their own models and pipelines. This turning point has been called NLP's ImageNet moment, a reference to the moment several years earlier when similar developments significantly accelerated machine learning progress in computer vision.

(ULM-FiT has nothing to do with Korzhik, the Cookie Monster, but nothing better came to mind.)

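One of the latest milestones in this development is the release of BERT, an event described as the beginning of a new era in NLP. BERT is a model that broke several records for how well models can handle language-based NLP tasks. Soon after publishing the paper describing BERT, the team also open-sourced the model's code and made available versions of the model already pre-trained on massive datasets. This is a momentous development, since it lets anyone building a machine learning model that involves language processing use this powerful tool as a ready-made component, saving the time, energy, knowledge, and resources that would otherwise go into training a language-processing model from scratch.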

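Two stages of developing BERT. Step 1: the model is pre-trained (on unlabeled data), and this pre-trained model can simply be downloaded; step 2: the model is fine-tuned for a specific task.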

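BERT builds on a number of clever ideas that have recently emerged in the NLP community, including, but not limited to: Semi-supervised Sequence Learning (by Andrew Dai and Quoc Le), ELMo (by Matthew Peters and researchers from AI2 and UW CSE), ULMFiT (by fast.ai founder Jeremy Howard and Sebastian Ruder), the OpenAI Transformer (by OpenAI researchers Radford, Narasimhan, Salimans, and Sutskever), and the Transformer (Vaswani et al).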

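There are a number of concepts you need to know to fully understand what BERT is. So let's start by looking at how BERT can be used, before examining the concepts behind the model itself.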

Example: sentence classification

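The most straightforward way to use BERT is to classify a single piece of text. In that case the model consists of BERT with a classifier on top.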

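To train such a model, you mainly train the classifier, with minimal changes to the BERT model itself during training. This training process is called fine-tuning, and it has its roots in Semi-supervised Sequence Learning and ULMFiT.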

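For those unfamiliar with the topic: since we are talking about classifiers, we are in the supervised learning domain of machine learning. This means we need a labeled dataset to train such a model. For the spam classifier example, the labeled dataset would be a list of email messages, each with a label («spam» or «not spam»).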

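Other examples of using BERT:

- Sentiment analysis

- Fact-checking:

  - Input: a sentence. Output: «claim» (Claim) or «not a claim» (Not Claim)

  - A more ambitious, futuristic example:

    - Input: a claim (Claim sentence). Output: «true» or «false»

  - Full Fact is an organization that builds automatic fact-checking tools for the benefit of the public. Part of its pipeline is a classifier that reads news articles and detects claims (classifying text as «claim» or «not a claim»), which can later be fact-checked (by humans for now, and hopefully by machine learning later)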


Now that you have an example of how BERT can be used, let's take a closer look at how it works.

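The paper presents two model sizes for BERT:

- BERT BASE: comparable in size to the OpenAI Transformer, so that their performance can be compared;

- BERT LARGE: a huge model that achieved the state-of-the-art results reported in the paper.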

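In short, BERT is a trained stack of Transformer encoders. This is a good moment to suggest reading the earlier article on the Transformer, which explains the Transformer model, the foundational concept for BERT and for the ideas we will discuss next.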

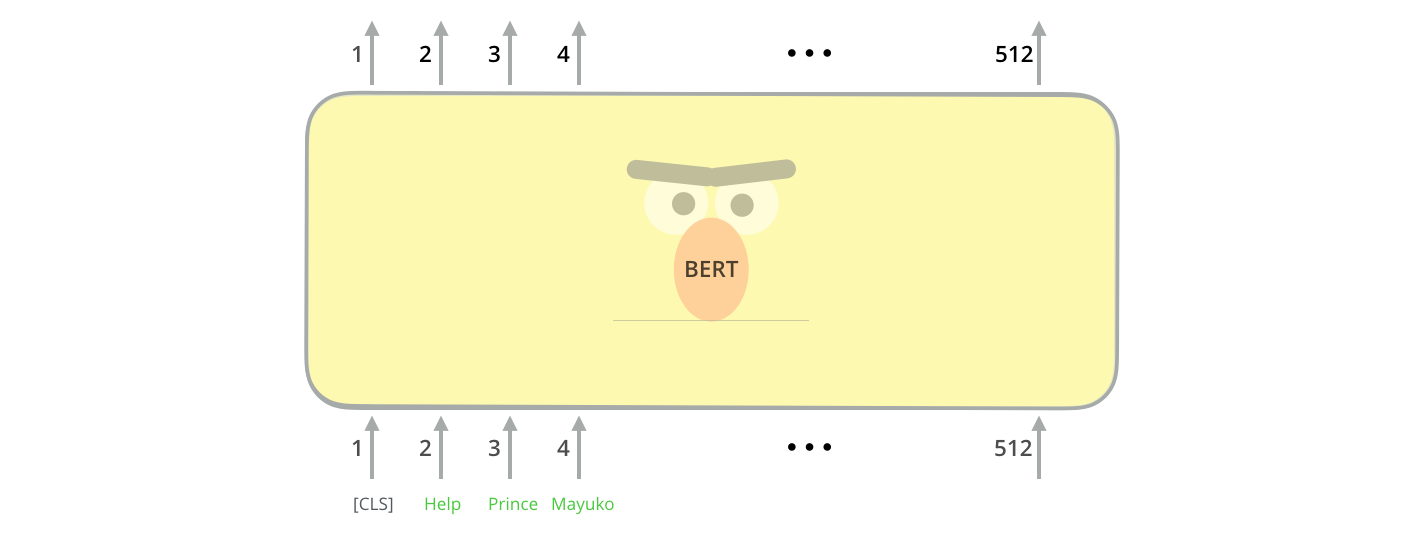

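Both BERT model sizes have a large number of encoder layers (which the paper calls «Transformer Blocks»): 12 for the Base version and 24 for the Large version. They also have larger hidden sizes (768 and 1024 units, respectively) and more attention heads (12 and 16, respectively) than the default configuration of the Transformer reference implementation in the original paper (6 encoder layers, 512 hidden units, and 8 attention heads).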
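To make the two sizes concrete, here is a minimal sketch that encodes both configurations and counts the parameters of the encoder stack. Two numbers in it are assumptions taken from the publicly released checkpoints rather than from the text above: the feed-forward inner width (3072 for Base and 4096 for Large, four times the hidden size) and the WordPiece vocabulary size of 30522 used by the English uncased models. The count covers the embeddings, the encoder layers, and the pooler applied to the [CLS] vector, but no task-specific head.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EncoderConfig:
    """Size hyperparameters of a BERT-style Transformer encoder stack."""
    num_layers: int            # encoder layers ("Transformer Blocks")
    hidden_size: int           # width of every token vector
    num_heads: int             # attention heads per layer
    ffn_size: int              # assumption: inner width of the feed-forward sublayer
    vocab_size: int = 30522    # assumption: WordPiece vocab of the English uncased checkpoints
    max_positions: int = 512   # learned position embeddings
    type_vocab_size: int = 2   # segment A / segment B embeddings

    @property
    def head_dim(self) -> int:
        return self.hidden_size // self.num_heads


BERT_BASE = EncoderConfig(num_layers=12, hidden_size=768, num_heads=12, ffn_size=3072)
BERT_LARGE = EncoderConfig(num_layers=24, hidden_size=1024, num_heads=16, ffn_size=4096)


def count_parameters(cfg: EncoderConfig) -> int:
    h, f = cfg.hidden_size, cfg.ffn_size
    layer_norm = 2 * h                                   # gain + bias
    embeddings = (cfg.vocab_size + cfg.max_positions + cfg.type_vocab_size) * h + layer_norm
    attention = 4 * (h * h + h)                          # Q, K, V and output projections
    feed_forward = (h * f + f) + (f * h + h)             # two dense layers
    per_layer = attention + feed_forward + 2 * layer_norm
    pooler = h * h + h                                   # dense layer on the [CLS] vector
    return embeddings + cfg.num_layers * per_layer + pooler
```

Under these assumptions `count_parameters(BERT_BASE)` comes to 109,482,240 (about 110M) and `count_parameters(BERT_LARGE)` to 335,141,888; both sizes keep 64 dimensions per attention head.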

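The first input token is a special [CLS] token, for reasons that will become clear later. CLS here stands for Classification.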

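Just like the vanilla Transformer encoder, BERT takes a sequence of words as input, which keeps flowing up the stack. Each layer applies self-attention, passes its result through a feed-forward network, and then hands it to the next encoder.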
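The self-attention step can be illustrated with a single attention head in plain Python. This is a sketch under simplifying assumptions: one head instead of 12 or 16, tiny hand-picked projection matrices instead of learned ones, and no residual connections, layer normalization, or feed-forward sublayer. Each output row is a weighted average of the value vectors, with weights given by a softmax over scaled dot products between queries and keys; no position is masked, so every token sees the whole sequence.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (n x m) and b (m x p)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """One unmasked attention head: every position may attend to every position."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))  # sqrt(d_k)
    scores = [[sum(a * b for a, b in zip(q_row, k_row)) / scale for k_row in k] for q_row in q]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights


# Three toy token vectors of width 2; identity projections keep the example readable.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, attention_weights = self_attention(tokens, identity, identity, identity)
```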

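In terms of architecture, everything so far is identical to the Transformer (apart from the sizes, which are just configurable settings). It is at the output that the differences first appear.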

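Each position outputs a vector of size hidden_size (768 in BERT Base). For the sentence classification example above, we focus only on the output of the first position (the one we passed the special [CLS] token to).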

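That vector can now be used as the input to a classifier of our choosing. The paper achieves great results using just a single-layer neural network as the classifier.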

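If you have more labels (for example, if you are an email service that tags emails as «spam», «not spam», «social», «promotion», and so on), you just tweak the classifier so that it has more output neurons, which are then passed through softmax.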
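A single-layer classifier over the [CLS] output can be sketched as one output neuron per label followed by softmax. The 3-dimensional [CLS] vector and the weights below are hypothetical stand-ins: BERT Base would produce 768 dimensions, and the weights would be learned during fine-tuning rather than set by hand.

```python
import math
from typing import Dict, List

LABELS = ["spam", "not spam", "social", "promotion"]


def classify(cls_vector: List[float], weights: List[List[float]], bias: List[float]) -> Dict[str, float]:
    """Single-layer classifier: logit_j = w_j . h + b_j for each label, then softmax."""
    logits = [sum(w * h for w, h in zip(row, cls_vector)) + b for row, b in zip(weights, bias)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(LABELS, exps)}


# Hypothetical toy [CLS] output and hand-set weights (one row per label).
cls_output = [2.0, 0.5, 0.1]
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [-1.0, -1.0, -1.0]]
b = [0.0, 0.0, 0.0, 0.0]
probs = classify(cls_output, W, b)
```

Supporting another label only means adding one row to `W`, one entry to `b`, and one name to `LABELS`; the BERT part of the model is unchanged.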

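For those with a computer vision background, this hand-off of a vector should be reminiscent of what happens between the convolutional part of a network like VGGNet and the fully connected classification layers at its end.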

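These new developments bring with them a shift in how words are encoded. Until now, word embeddings have been the main way leading NLP models handle language. Methods such as Word2Vec and GloVe have been widely used for this. Let's recall how they are used before looking at what has changed.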

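For words to be processed by machine learning models, they need some numeric representation that the models can use in their calculations. Word2Vec showed that we can use a vector (a list of numbers) to represent words in a way that captures semantic, meaning-related relationships (e.g., whether words are similar or opposites, or that the pair «Stockholm» and «Sweden» has the same relationship as «Cairo» and «Egypt»), as well as syntactic, grammar-based relationships (e.g., the relationship between «had» and «has» is the same as between «was» and «is»).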
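The «Stockholm is to Sweden as Cairo is to Egypt» relationship is usually tested with vector arithmetic: compute Sweden − Stockholm + Cairo and look for the nearest word by cosine similarity. The 3-dimensional vectors below are hypothetical and hand-made so that the capital-to-country offset is the same for both pairs; real Word2Vec or GloVe vectors have hundreds of learned dimensions.

```python
import math
from typing import Dict, List

# Hypothetical toy vectors (not real Word2Vec/GloVe values); "stick" is a distractor.
VECTORS: Dict[str, List[float]] = {
    "Stockholm": [0.9, 0.1, 0.2],
    "Sweden":    [0.9, 0.8, 0.2],
    "Cairo":     [0.1, 0.1, 0.9],
    "Egypt":     [0.1, 0.8, 0.9],
    "stick":     [0.5, 0.3, 0.4],
}


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a: str, b: str, c: str) -> str:
    """Solve 'a is to b as c is to ?' by finding the word closest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))
```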

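The field quickly realized that it is a great idea to use embeddings pre-trained on vast amounts of text rather than training them alongside the model on what was often a small dataset. So it became possible to download a list of words with their embeddings, produced by pre-training with Word2Vec or GloVe. Here is an example: the GloVe embedding of the word «stick» (embedding vector size: 200).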

The GloVe embedding of the word «stick»: a vector of 200 floating-point numbers (rounded to two decimal places).
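Pre-trained GloVe vectors are distributed as plain text, one word per line followed by its space-separated vector components, so loading them takes only a few lines. In this sketch an in-memory string with two made-up 4-dimensional rows stands in for a real 200-dimensional file (the numbers are not real GloVe values); with an actual download you would pass an opened file instead. The `embed` helper is a hypothetical illustration of the key limitation: a static lookup returns the same vector for a word whatever its context.

```python
import io
from typing import Dict, Iterable, List


def load_glove(lines: Iterable[str]) -> Dict[str, List[float]]:
    """Parse GloVe's text format: 'word v1 v2 ... vn' on each line."""
    embeddings: Dict[str, List[float]] = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        embeddings[parts[0]] = [float(v) for v in parts[1:]]
    return embeddings


def embed(sentence: str, table: Dict[str, List[float]]) -> List[List[float]]:
    # Static lookup: the same word always maps to the same vector, regardless of context.
    return [table[w] for w in sentence.lower().split() if w in table]


# Made-up rows standing in for a real GloVe file.
sample = io.StringIO("stick 0.12 -0.53 0.08 0.91\nstone 0.30 -0.11 0.47 0.02\n")
vectors = load_glove(sample)
```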

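Since these vectors are long and full of numbers, the figures in this article depict vectors with a simple shape instead.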

ELMo: context matters

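If we use this GloVe representation, the word «stick» is represented by the same vector regardless of context. «Wait a minute», said a number of NLP researchers (Peters et al., 2017, McCann et al., 2017, and again Peters et al., 2018 in the ELMo paper). «The word stick has multiple meanings depending on where it is used. Why not give it an embedding based on the context it appears in, capturing both the meaning of the word in that context and other contextual information?» And so contextualized word embeddings were born.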

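Contextualized word embeddings can give a word different embeddings depending on the meaning it carries in the context of the sentence.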

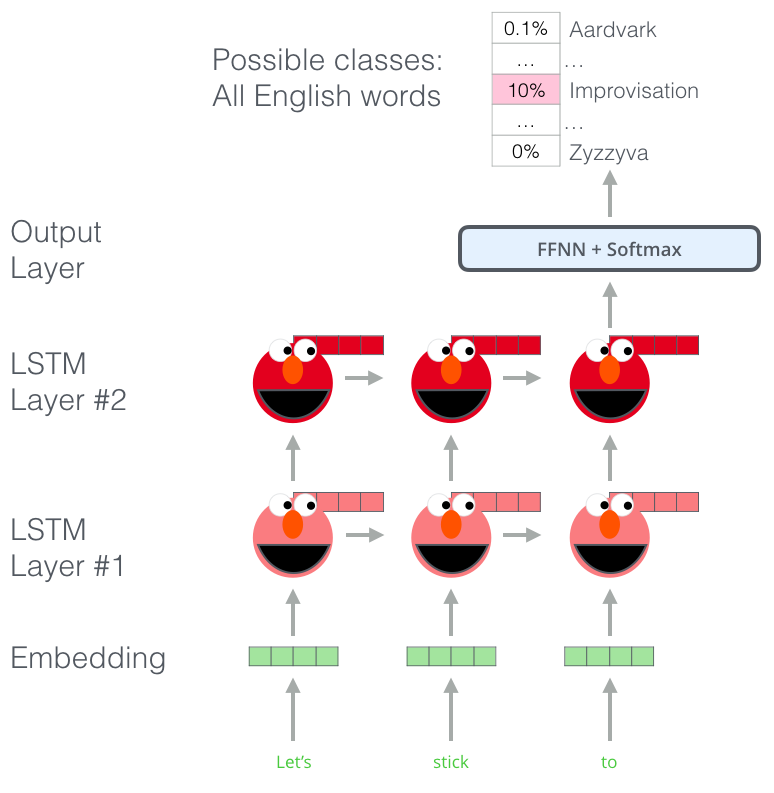

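Instead of using a fixed embedding for each word, ELMo looks at the entire sentence before assigning an embedding to each word in it. It uses a bi-directional LSTM trained on a specific task to create these embeddings.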

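ELMo was a significant step toward pre-training in NLP. The ELMo LSTM is trained on a massive dataset in the language of our data, and can then be used as a component in other models that need to process language.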

What is ELMo's secret?

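ELMo gained its understanding of language by being trained to predict the next word in a sequence of words, a task called language modeling. This is convenient, because there are vast amounts of text that such a model can learn from without any labels.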

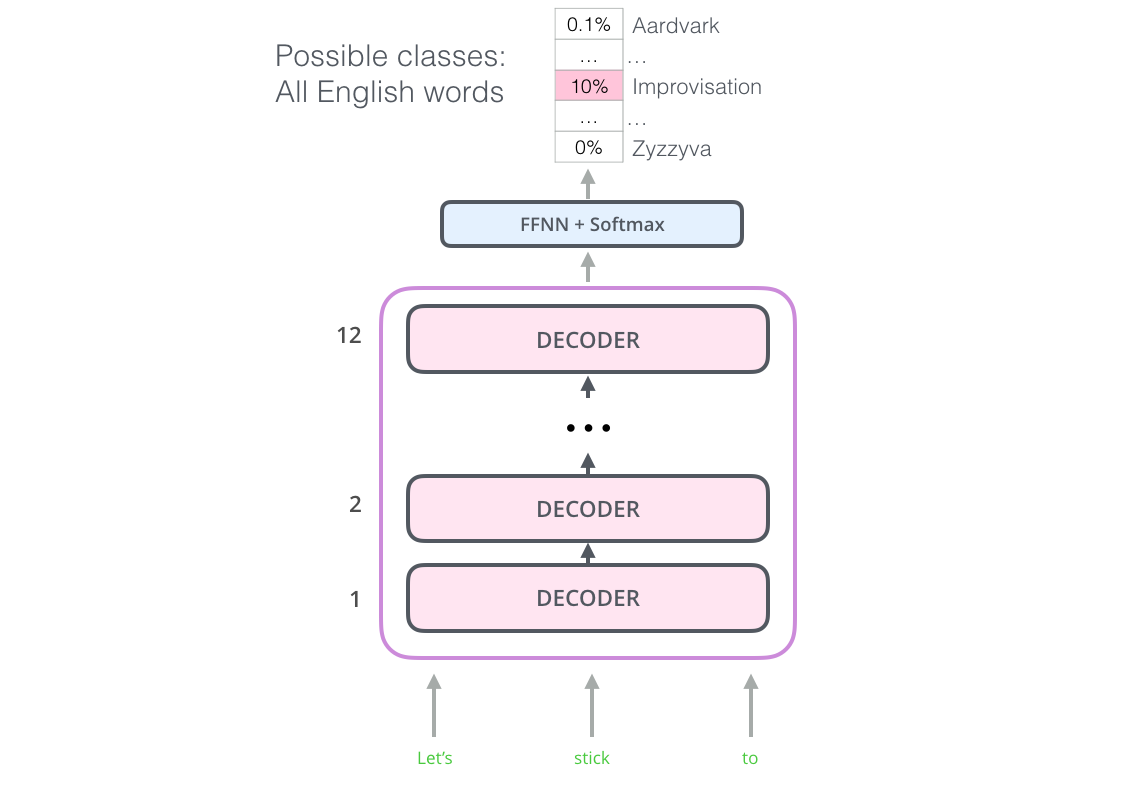

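A step in ELMo's pre-training: given the input «Let's stick to», predict the most likely next word. This is a language modeling task. When trained on a large dataset, the model starts to pick up on language patterns. It is unlikely to guess the next word accurately in this example. More realistically, after a word such as «hang», it will assign a higher probability to a word like «out» (forming «hang out») than to «camera».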

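We can see the hidden state of each unrolled LSTM step peeking out from behind ELMo's head. These come in handy in the embedding process after pre-training is done.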

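ELMo actually goes a step further and trains a bidirectional LSTM, so that its language model has a sense not only of the next word but also of the previous one.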
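ELMo learns this task with a bidirectional LSTM; the sketch below swaps in a much simpler technique, a count-based bigram model, purely to show what «predict the next word» means as a training signal that needs no labels. The tiny corpus is made up for illustration. Training the same counter on the reversed word sequence gives a backward model that predicts the previous word, which is the intuition behind ELMo's second direction.

```python
from collections import Counter, defaultdict
from typing import Dict


def train_bigram_lm(corpus: str) -> Dict[str, Counter]:
    """Count how often each word follows each other word (a count-based stand-in for an LSTM)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    words = corpus.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probability(counts: Dict[str, Counter], prev: str, nxt: str) -> float:
    following = counts.get(prev.lower())
    if not following:
        return 0.0
    return following[nxt.lower()] / sum(following.values())


# Tiny made-up corpus; real language models train on billions of words.
corpus = "let's hang out tonight . we hang out a lot . i hang the camera on a hook ."
forward_lm = train_bigram_lm(corpus)
# Backward direction: the same counts over reversed text predict the PREVIOUS word.
backward_lm = train_bigram_lm(" ".join(reversed(corpus.split())))
```

Here `next_word_probability(forward_lm, "hang", "out")` is 2/3 while «camera» never directly follows «hang», and the backward model says «out» is always preceded by «hang».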

Great slides on ELMo

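ELMo produces a contextualized embedding by combining the hidden states (and the initial embedding) in a certain way (concatenation followed by weighted summation).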
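Following the ELMo paper, the combination for token k can be written as ELMo_k = γ · Σ_j s_j · h_(k,j), where h_(k,j) is the token's concatenated forward and backward state at layer j (layer 0 being the initial, context-independent embedding), s = softmax(w) are layer weights, and γ is a scale; both w and γ are learned per downstream task. The sketch below applies this to one token; the 1-dimensional layer values and the weights are toy assumptions.

```python
import math
from typing import List

Vector = List[float]


def softmax(ws: List[float]) -> List[float]:
    m = max(ws)
    exps = [math.exp(w - m) for w in ws]
    total = sum(exps)
    return [e / total for e in exps]


def elmo_embedding(forward_states: List[Vector], backward_states: List[Vector],
                   layer_weights: List[float], gamma: float = 1.0) -> Vector:
    """Contextual vector for ONE token from its per-layer forward/backward states."""
    # Step 1: concatenate the forward and backward state of each layer.
    layers = [f + b for f, b in zip(forward_states, backward_states)]
    # Step 2: softmax-normalized weighted sum over layers, scaled by gamma.
    s = softmax(layer_weights)
    dim = len(layers[0])
    return [gamma * sum(s_j * layer[i] for s_j, layer in zip(s, layers)) for i in range(dim)]


# Toy states for a 2-layer stack (layer 0 = initial embedding, layer 1 = LSTM output).
vec = elmo_embedding([[1.0], [3.0]], [[1.0], [5.0]], layer_weights=[0.0, 0.0])
```

With equal raw weights the softmax gives each layer 0.5, so `vec` is simply the average of the two concatenated layers.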

ULM-FiT: nailing down transfer learning in NLP

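ULM-FiT introduced methods for effectively using much of what the model learns during pre-training: more than just embeddings, and more than contextualized embeddings. ULM-FiT introduced a language model and a process for effectively fine-tuning that language model for various tasks.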

NLP finally had a way to do transfer learning probably as well as computer vision could.

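The release of the Transformer paper and code, and the results it achieved on tasks such as machine translation, led some NLP researchers to see Transformers as a replacement for LSTMs. This was reinforced by the fact that Transformers handle long-term dependencies better.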

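The Transformer's encoder-decoder structure made it perfect for machine translation. But how would you use it for sentence classification? How would you use it to pre-train a language model that can be fine-tuned for other tasks (downstream tasks, i.e., supervised learning tasks that make use of a pre-trained model or component)?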

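It turns out we don't need the entire Transformer to apply transfer learning and a fine-tunable language model to NLP tasks. The Transformer's decoder alone is enough. The decoder is a natural choice for language modeling (predicting the next word), since it is built to mask future tokens (a valuable property when generating a translation word by word).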

The OpenAI Transformer consists of the Transformer's decoder stack

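The model stacks 12 decoder layers. Since there is no encoder in this setup, these decoder layers lack the encoder-decoder attention sublayer that vanilla Transformer decoder layers have. They do still have the self-attention layer, however (masked so that it cannot peek at future tokens).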
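The masking that keeps the decoder from «peeking» at future tokens can be sketched in a few lines: position i may only attend to positions j ≤ i, and blocked scores are set to minus infinity before the softmax so they receive exactly zero weight. This is a plain-Python illustration of the idea, not the OpenAI implementation; BERT's encoder, by contrast, applies no such causal mask.

```python
import math
from typing import List


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j, i.e. j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores: List[List[float]], mask: List[List[bool]]) -> List[List[float]]:
    weights = []
    for row, allowed in zip(scores, mask):
        # Blocked positions get -inf, so exp() turns them into exactly 0.
        masked = [s if ok else float("-inf") for s, ok in zip(row, allowed)]
        m = max(masked)  # finite, because the diagonal is always allowed
        exps = [math.exp(s - m) for s in masked]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Equal raw scores, so each position spreads attention evenly over the positions it may see.
weights = masked_softmax([[0.0] * 3 for _ in range(3)], causal_mask(3))
```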

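With this structure, we can train the model on the same language modeling task: predicting the next word using massive unlabeled datasets. Just throw the text of 7,000 books at it and let it learn! Books are great for this kind of task, because they let the model learn to associate related information even when it is separated by a lot of text, something you don't get when training on, say, tweets or articles.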

The OpenAI Transformer is ready to be trained to predict the next word on a dataset of 7,000 books

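Now that the OpenAI Transformer is pre-trained and its layers have been tuned to handle language reasonably well, we can start using it for downstream tasks. Let's first look at sentence classification (classifying an email message as «spam» or «not spam»).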

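The OpenAI paper outlines a number of input transformations for handling the inputs of different types of tasks. A figure in the paper shows the model structures and input transformations used for the different tasks.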
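The input transformations can be sketched as simple list manipulations: every task is turned into one or more token sequences the pre-trained decoder can read. The spellings `<start>`, `<delim>`, and `<extract>` are hypothetical placeholders (the paper adds special tokens for these roles, but these strings are invented here). For similarity, where the two sentences have no natural order, the paper processes both orders and adds the two resulting representations before the output layer; for multiple choice, each answer gets its own sequence.

```python
from typing import List

Tokens = List[str]

# Hypothetical placeholder spellings for the special tokens.
START, DELIM, EXTRACT = "<start>", "<delim>", "<extract>"


def classification_input(text: Tokens) -> Tokens:
    return [START, *text, EXTRACT]


def entailment_input(premise: Tokens, hypothesis: Tokens) -> Tokens:
    return [START, *premise, DELIM, *hypothesis, EXTRACT]


def similarity_inputs(a: Tokens, b: Tokens) -> List[Tokens]:
    # Both orders are built; the model's outputs for them are combined afterwards.
    return [entailment_input(a, b), entailment_input(b, a)]


def multiple_choice_inputs(context: Tokens, answers: List[Tokens]) -> List[Tokens]:
    # One sequence per candidate answer; their scores are compared with a softmax.
    return [entailment_input(context, answer) for answer in answers]
```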

Clever, isn't it?

BERT: from decoders to encoders

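The OpenAI Transformer gave us a fine-tunable pre-trained model based on the Transformer. But something was lost in the transition from LSTMs to Transformers. ELMo's language model was bidirectional, whereas the OpenAI Transformer trains only a forward language model. Could we build a Transformer-based model whose language model looks both forward and backward (in technical terms, «is conditioned on both left and right context»)?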

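«Hold my beer», said Bert.

Masked language model

«We'll use Transformer encoders», said Bert.

«This is madness!» replied Ernie. «Everyone knows that bidirectional conditioning would allow each word to indirectly see itself in a multi-layered context.»

«We'll use masks», Bert said confidently.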

BERT «masks» 15% of the words in the input and asks the model to predict the missing words.

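Finding the right task to train a stack of Transformer encoders is a complex hurdle. BERT resolves it by adopting the «masked language model» concept from earlier literature (where it is called the Cloze task).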

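Beyond masking 15% of the input, BERT also mixes things up a bit to improve how the model fine-tunes later: sometimes a selected word is replaced with a random word instead of the mask, and the model must still predict the correct word at that position. According to the BERT paper, of the selected 15% of tokens, 80% are replaced with [MASK], 10% with a random token, and 10% are left unchanged.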
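The corruption procedure can be sketched as follows: pick 15% of the positions; replace 80% of those with [MASK], 10% with a random token, and leave 10% unchanged; the model is trained to predict the original token only at the picked positions. This is a simplified sketch: it works on whole words rather than WordPieces, does not exclude special tokens such as [CLS] and [SEP], and uses the sentence itself as a toy vocabulary for random replacements.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(tokens: List[str], vocab: List[str], rng: random.Random,
                mask_rate: float = 0.15) -> Tuple[List[str], List[Optional[str]]]:
    """Return (corrupted input, labels); labels[i] holds the original token only where
    the model must make a prediction, and None elsewhere."""
    n_to_predict = max(1, round(len(tokens) * mask_rate))
    positions = rng.sample(range(len(tokens)), n_to_predict)
    corrupted = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i in positions:
        labels[i] = tokens[i]
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return corrupted, labels


sentence = "my dog is hairy and likes to play fetch in the park".split()
corrupted, labels = mask_tokens(sentence, vocab=sentence, rng=random.Random(0))
```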

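If you look back at the input transformations the OpenAI Transformer uses for different tasks, you'll notice that some tasks require the model to say something intelligent about two sentences (e.g., is one simply a paraphrase of the other? Given a Wikipedia article as one input and a question about it as another, can we answer the question?).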

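To make BERT better at handling relationships between multiple sentences, pre-training includes an additional task: given two sentences (A and B), is B likely to be the sentence that follows A, or not?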
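A minimal sketch of building one such training pair: the input is [CLS] A [SEP] B [SEP], segment ids are 0 for the first sentence (including [CLS] and the first [SEP]) and 1 for the second, and the label is IsNext or NotNext with equal probability. As a simplifying assumption, the negative sentence here is drawn from the same short list; BERT draws it at random from the corpus. The three-sentence document is illustrative.

```python
import random
from typing import List, Tuple


def make_nsp_example(sentences: List[List[str]], index: int,
                     rng: random.Random) -> Tuple[List[str], List[int], str]:
    """Build one next-sentence-prediction example starting from sentence `index`."""
    first = sentences[index]
    if index + 1 < len(sentences) and rng.random() < 0.5:
        second, label = sentences[index + 1], "IsNext"
    else:
        # Simplification: negatives come from the same list, skipping the true successor.
        candidates = [s for j, s in enumerate(sentences) if j not in (index, index + 1)]
        second, label = rng.choice(candidates), "NotNext"
    tokens = ["[CLS]", *first, "[SEP]", *second, "[SEP]"]
    segment_ids = [0] * (len(first) + 2) + [1] * (len(second) + 1)
    return tokens, segment_ids, label


document = [
    "the man went to the store".split(),
    "he bought a gallon of milk".split(),
    "penguins are flightless birds".split(),
]
examples = [make_nsp_example(document, 0, random.Random(seed)) for seed in range(10)]
```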

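The second task BERT is pre-trained on is two-sentence classification. Tokenization is oversimplified in this figure, since BERT actually uses WordPieces as tokens rather than whole words, so some words are broken into smaller pieces.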
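WordPiece splitting can be sketched as greedy longest-match-first: repeatedly take the longest prefix of the remaining characters that is in the vocabulary, marking non-initial pieces with ##, and fall back to [UNK] when nothing matches. This mirrors the approach of BERT's reference tokenizer, but the tiny vocabulary below is invented, and the sketch skips the lowercasing and punctuation splitting that happen before this step.

```python
from typing import List, Set


def wordpiece(word: str, vocab: Set[str], unk: str = "[UNK]") -> List[str]:
    """Greedy longest-match-first split of one word; non-initial pieces get a '##' prefix."""
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:  # shrink the candidate until it is in the vocabulary
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits: the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces


# Made-up tiny vocabulary for illustration.
VOCAB = {"em", "##bed", "##ding", "##s", "play", "##ing"}
```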

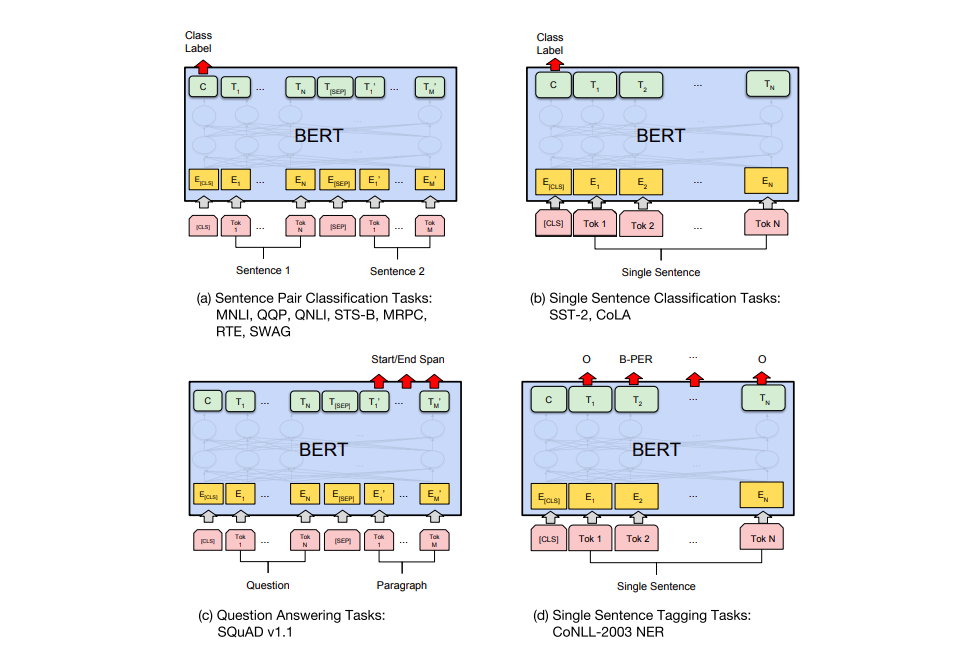

Task-specific models: ways of using BERT for different tasks.

a) sentence pair classification: MNLI, QQP, QNLI, STS-B, MRPC, RTE, SWAG; b) single sentence classification: SST-2, CoLA; c) question answering: SQuAD v1.1; d) single sentence tagging: CoNLL-2003 NER.

BERT for feature extraction

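Fine-tuning is not the only way to use BERT. Just like ELMo, you can use a pre-trained BERT to create contextualized word embeddings. You can then feed these embeddings into your existing model; the paper shows that this approach yields results not far behind fine-tuning BERT on a task such as named-entity recognition.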

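Which vector works best as a contextualized embedding? It probably depends on the task. The paper examines six options, comparing them with the fine-tuned model, which scored 96.4.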
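The options compared in the paper include the embedding layer, the last hidden layer, the second-to-last hidden layer, the sum and the concatenation of the last four hidden layers, and a sum over all 12 layers. Here they are sketched as operations on one token's per-layer outputs from BERT Base (embedding layer plus 12 encoder layers). The 2-dimensional toy vectors are assumptions for readability, plain sums are used where the paper lists weighted variants of some sums, and no scores are implied.

```python
from typing import Dict, List

Vector = List[float]


def elementwise_sum(vectors: List[Vector]) -> Vector:
    return [sum(values) for values in zip(*vectors)]


def feature_options(layers: List[Vector]) -> Dict[str, Vector]:
    """layers[0]: embedding-layer output; layers[1:]: outputs of the 12 encoder layers (one token)."""
    return {
        "embedding_layer": layers[0],
        "last_hidden": layers[-1],
        "second_to_last_hidden": layers[-2],
        "sum_last_four_hidden": elementwise_sum(layers[-4:]),
        "concat_last_four_hidden": [x for layer in layers[-4:] for x in layer],
        "sum_all_12_layers": elementwise_sum(layers[1:]),
    }


# Toy per-layer outputs for one token: layer i is the vector [i, i] (13 layers in total).
toy_layers = [[float(i), float(i)] for i in range(13)]
options = feature_options(toy_layers)
```

Note that concatenation quadruples the feature width (4 × 768 = 3072 for real BERT Base outputs), while the sums keep it at 768.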

Test-driving BERT

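The best way to try BERT is the BERT FineTuning with Cloud TPUs notebook hosted on Google Colab. If you have never used Cloud TPUs, it is also a good starting point for trying them, since the BERT code runs on TPUs as well as on CPUs and GPUs.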

The next step is to look at the code in the BERT repository.

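You can also check out the PyTorch implementation of BERT. The AllenNLP library uses this implementation to let you use BERT embeddings with any model.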