Programs that use artificial neural networks (ANNs) are growing in popularity, and with them a large number of technologies has appeared to simplify working with such networks. This article describes one possible way to build an application using these technologies.

What will our application do?

It will recognize two hand positions, fist and palm, and change elements of the application interface depending on which one it sees.

What do we need?

To train our neural network we will use the Keras library, we will implement the interface in the Swift programming language, and to connect the two we will use CoreML, the Apple framework for working with machine learning that was presented at WWDC'17.

Let's start with the model of our ANN and its training

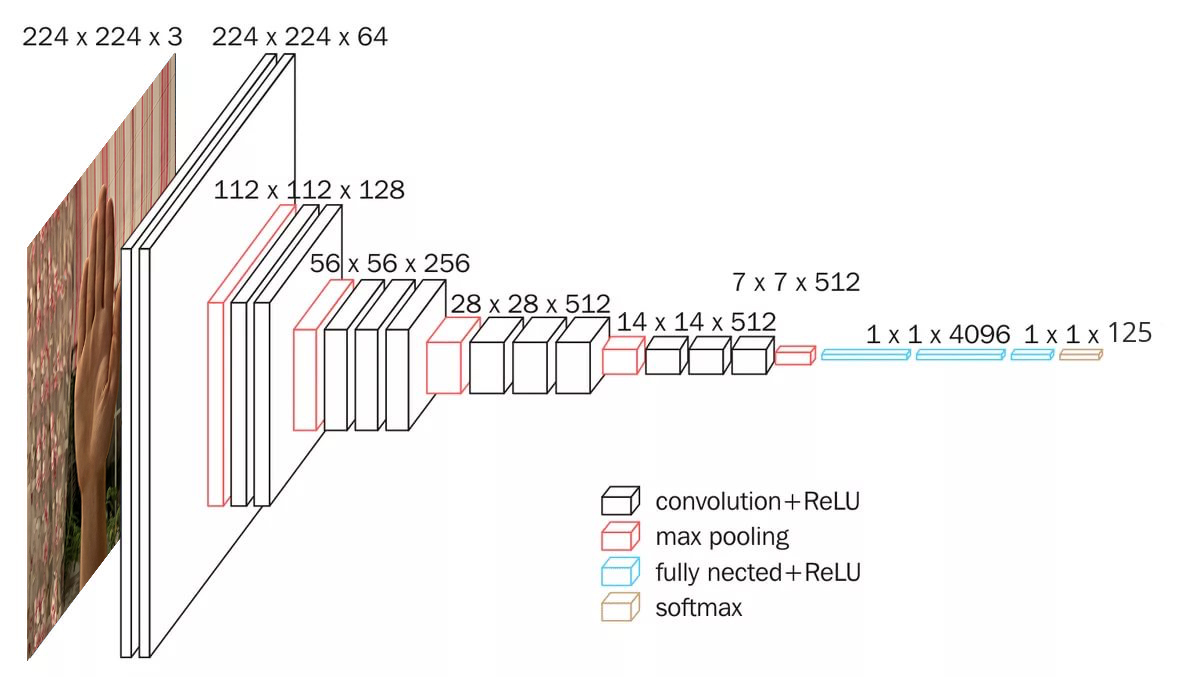

Since our data are images, we need a convolutional base. Keras includes many convolutional models pre-trained on ImageNet, such as Xception, ResNet50, MobileNetV2, DenseNet, NASNet, InceptionV3, VGG16, and VGG19. Our project will use the VGG16 model, which looks like this:

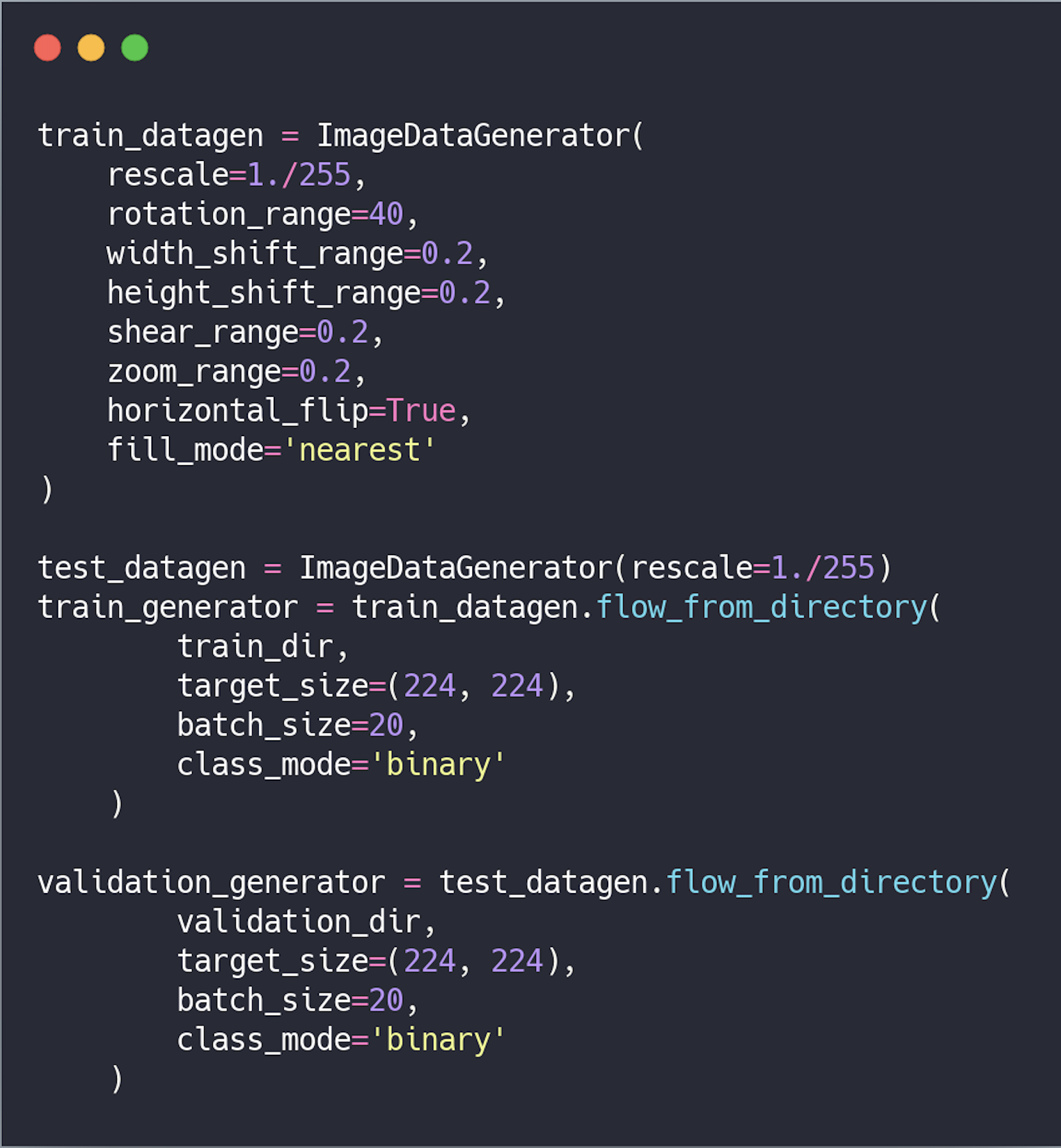

Let's create it:
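Creating such a model might look like the sketch below. The input size, the 256-unit dense layer, and the single sigmoid output are assumptions made for illustration (the article's own values are not shown in the text), and the code assumes the Keras bundled with TensorFlow. The small helper shows how large the feature map coming out of the convolutional base is.

```python
# Sketch only: the input size and classifier head are assumptions.
INPUT_SHAPE = (224, 224, 3)  # VGG16's default input size (assumed here)


def conv_base_output_shape(input_shape=INPUT_SHAPE):
    """Shape of VGG16's last feature map: five 2x2 poolings, 512 channels."""
    height, width, _ = input_shape
    return (height // 32, width // 32, 512)


def build_model(input_shape=INPUT_SHAPE):
    """VGG16 convolutional base with a small binary classifier on top."""
    # Imported here so the helper above works without TensorFlow installed.
    from tensorflow.keras import layers, models
    from tensorflow.keras.applications import VGG16

    conv_base = VGG16(weights="imagenet", include_top=False,
                      input_shape=input_shape)
    conv_base.trainable = False  # keep the pre-trained ImageNet features fixed

    return models.Sequential([
        conv_base,
        layers.Flatten(),
        layers.Dense(256, activation="relu"),
        layers.Dense(1, activation="sigmoid"),  # one output: fist vs. palm
    ])


print(conv_base_output_shape())  # (7, 7, 512)
```

Freezing the convolutional base means that only the small classifier on top is trained, which is usually enough when there are only two classes and few images.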

Data preprocessing. Before being fed to the network, the data must be converted into tensors of floating-point numbers. At the moment the data is stored as image files, so it has to be prepared for the network in the following steps:

- Read the image files;

- Decode the .PNG or .JPG content into RGB pixel grids;

- Convert them into floating-point tensors;

- Normalize values.
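The last two steps can be illustrated without any libraries. The sketch below starts from already decoded 8-bit RGB bytes (reading and decoding the file is left to an image library) and produces a nested list of floats in the range [0, 1]. The function name is hypothetical and only serves this example.

```python
def rgb_bytes_to_tensor(raw, width, height):
    """Turn decoded 8-bit RGB bytes into a height x width x 3 list of floats in [0, 1]."""
    if len(raw) != width * height * 3:
        raise ValueError("expected width * height * 3 bytes")
    tensor = []
    for y in range(height):
        row = []
        for x in range(width):
            start = (y * width + x) * 3
            # Normalize each channel from [0, 255] to [0.0, 1.0].
            row.append([value / 255.0 for value in raw[start:start + 3]])
        tensor.append(row)
    return tensor


# A 2x1 image: one white pixel followed by one black pixel.
pixels = rgb_bytes_to_tensor(bytes([255, 255, 255, 0, 0, 0]), width=2, height=1)
print(pixels)  # [[[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]]]
```

In practice Keras can perform all four steps for a whole directory of images, for example with an ImageDataGenerator created with rescale=1/255.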

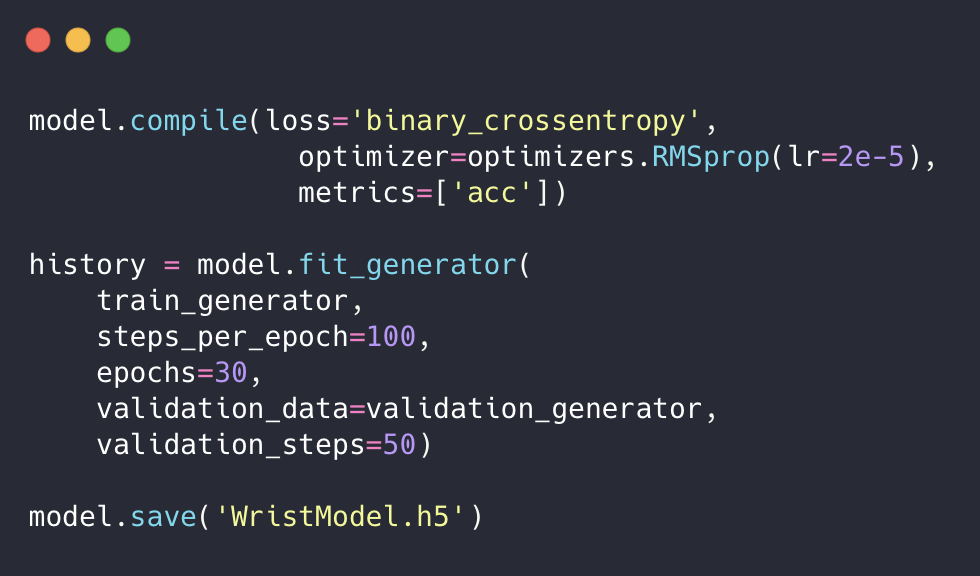

We will also use data augmentation to keep the network from overfitting and to increase its accuracy.
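A minimal sketch of such augmentation with the Keras ImageDataGenerator follows. The concrete ranges, the image size, and the directory arguments are assumptions for illustration, not the settings used in the article.

```python
# Assumed augmentation settings; tune them for your own fist/palm photos.
AUGMENTATION = {
    "rescale": 1.0 / 255,        # same normalization as for validation data
    "rotation_range": 40,        # random rotations, in degrees
    "width_shift_range": 0.2,    # horizontal shift, as a fraction of width
    "height_shift_range": 0.2,   # vertical shift, as a fraction of height
    "zoom_range": 0.2,           # random zoom in or out
    "horizontal_flip": True,     # mirror images left to right
    "fill_mode": "nearest",      # how to fill pixels exposed by a transform
}


def make_generators(train_dir, validation_dir,
                    target_size=(224, 224), batch_size=20):
    """Augmented generator for training, plain rescaling for validation."""
    # Imported here so the settings above can be inspected without TensorFlow.
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    train_datagen = ImageDataGenerator(**AUGMENTATION)
    # Validation images are only rescaled, never augmented.
    validation_datagen = ImageDataGenerator(rescale=1.0 / 255)

    flow_options = dict(target_size=target_size, batch_size=batch_size,
                        class_mode="binary")
    train = train_datagen.flow_from_directory(train_dir, **flow_options)
    validation = validation_datagen.flow_from_directory(validation_dir,
                                                        **flow_options)
    return train, validation
```

Each directory is expected to contain one subfolder per class (for example, one for fists and one for palms); Keras assigns the labels from the folder names.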

Then we configure the model for training, train it using the data generators, and save it.
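These three steps could be sketched as follows. The optimizer, the number of epochs, and the file name wrist_model.h5 are assumptions; the function only relies on the standard compile, fit, and save methods of a Keras model.

```python
def train_and_save(model, train_generator, validation_generator,
                   path="wrist_model.h5", epochs=30):
    """Configure, train, and save a binary classifier (hypothetical helper)."""
    # Binary cross-entropy matches a single sigmoid output (fist vs. palm).
    model.compile(optimizer="rmsprop",
                  loss="binary_crossentropy",
                  metrics=["accuracy"])

    # Keras pulls batches from the generators for the given number of epochs.
    history = model.fit(train_generator,
                        epochs=epochs,
                        validation_data=validation_generator)

    # The .h5 extension makes Keras write the model in HDF5 format.
    model.save(path)
    return history
```

The returned history object holds the per-epoch loss and accuracy, which is handy for checking whether the network has started to overfit.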

We have trained our network, but the .h5 format is not suitable for our task. We therefore need to convert it to a format that CoreML accepts, namely .mlmodel. To do this, install coremltools:

$ pip install --upgrade coremltools

And convert our model:
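With a recent coremltools the conversion might look like the sketch below. It assumes coremltools 5 or later, a model that loads with the Keras bundled with TensorFlow, the hypothetical file name wrist_model.h5, and a 224x224 RGB input. The article was written against an older coremltools release, so the original call may well differ.

```python
H5_PATH = "wrist_model.h5"            # assumed name of the saved Keras model
MLMODEL_PATH = "WristModel.mlmodel"   # model name used later in the Xcode project
INPUT_SHAPE = (1, 224, 224, 3)        # batch, height, width, channels (assumed)


def convert_to_coreml(h5_path=H5_PATH, mlmodel_path=MLMODEL_PATH,
                      input_shape=INPUT_SHAPE):
    """Convert a saved Keras model to the .mlmodel format."""
    # Imported here so the constants above can be used without these packages.
    import coremltools as ct
    from tensorflow.keras.models import load_model

    keras_model = load_model(h5_path)

    # Declare the input as an image and apply the same 1/255 scaling that
    # was used during training, so the app can pass camera frames directly.
    image_input = ct.ImageType(shape=input_shape, scale=1.0 / 255)

    # "neuralnetwork" produces a model that can be saved as .mlmodel.
    mlmodel = ct.convert(keras_model, inputs=[image_input],
                         convert_to="neuralnetwork")
    mlmodel.save(mlmodel_path)
    return mlmodel_path
```

Building the scaling into the converted model keeps the preprocessing in one place, so the Swift side does not have to normalize pixel values itself.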

Writing the application

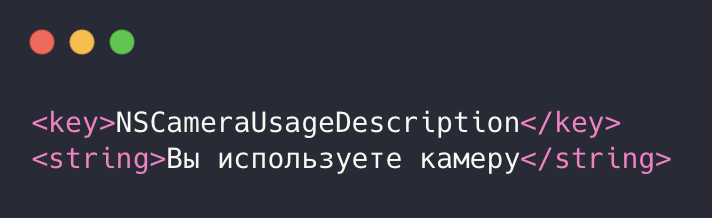

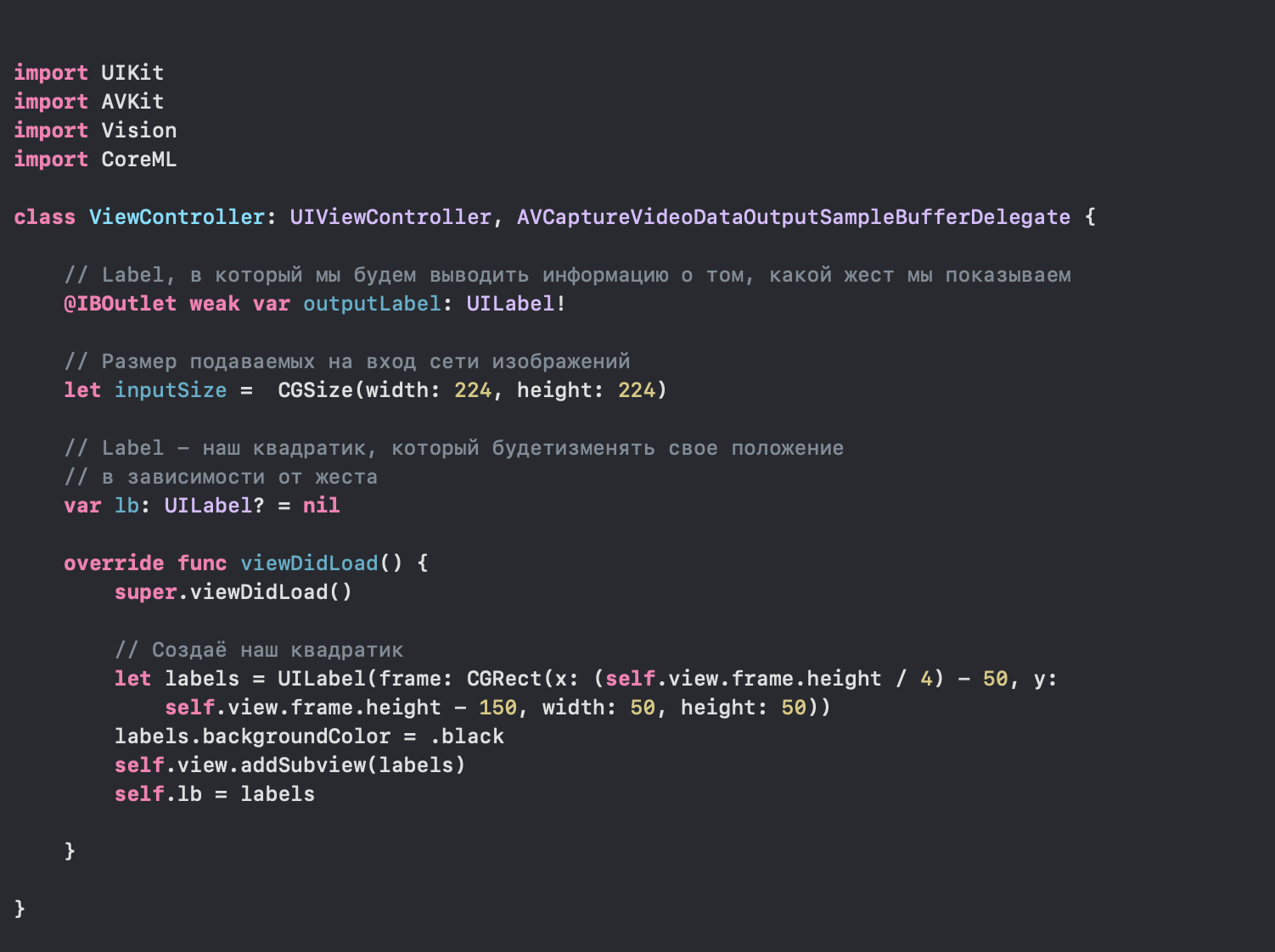

Open Xcode, create an application, and first of all give it permission to use the camera. To do this, open Info.plist and add the following lines to the XML:

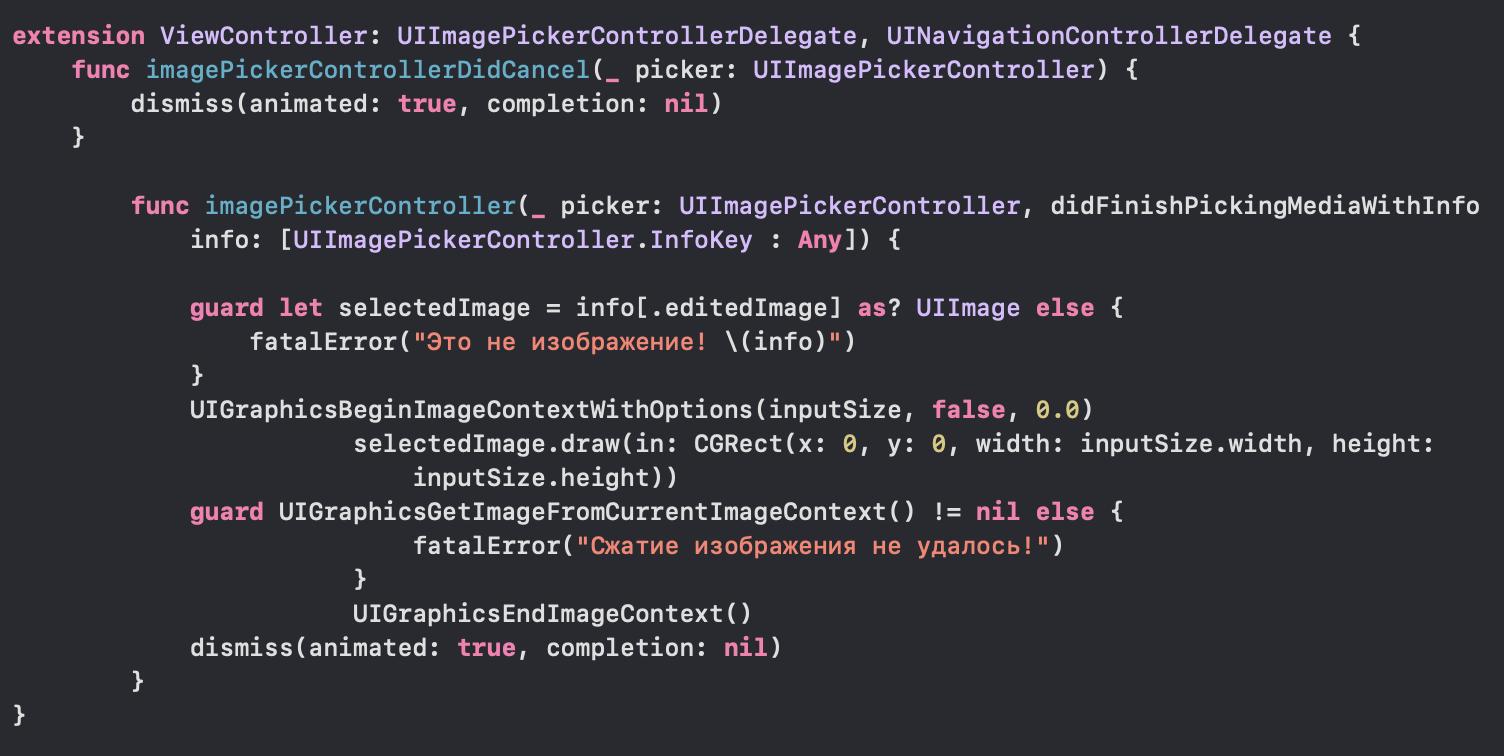

After that, go to the ViewController.swift file.

Next, configure cameraInput and cameraOutput, add them to the session, and start it to receive the stream of frames.

Earlier we added AVCaptureVideoDataOutputSampleBufferDelegate, whose delegate method is called with every new frame received from the camera. Now we create the request in that method and execute it.

In this method, we load the WristModel.mlmodel model, which we added to our project:

We also check that the model has loaded and asynchronously change the position of our square depending on the coefficient the model returns.

But our application will not work without preprocessing the images that enter the network, so we add it:

Launch the application:

Hurray, we have managed to control the interface of our application with gestures. Now you can use the full potential of ANNs in your iOS applications. Thank you for your attention!

List of sources and literature

1) keras.io

2) apple.imtqy.com/coremltools

3) habr.com/en/company/mobileup/blog/332500

4) developer.apple.com/documentation/coreml