Why Discord Is Switching from Go to Rust

Rust is becoming a first-class language in a wide range of domains. At Discord, we have used it successfully on both the client and the server. For example, on the client side we use it in the video encoding pipeline for Go Live, and on the server side for Elixir NIFs (Native Implemented Functions). We recently improved the performance of one service dramatically by rewriting it from Go to Rust. This article explains why it made sense to reimplement the service, how we did it, and how much performance improved.

Read State Tracking Service (Read States)

Discord is a product-focused company, so let's start with some context on what exactly we moved from Go to Rust: the Read States service. Its sole purpose is to keep track of which channels and messages you have read. Read States is accessed every time you connect to Discord, every time you send a message, and every time you read a message. In short, Read States is constantly on the hot path. We want Discord to always feel fast, so Read States has to be fast too.

The Go implementation of the service did not meet all of our requirements. Most of the time it was fast, but every few minutes we saw large latency spikes that users could notice. After investigating, we determined that the spikes were caused by core features of Go: its memory model and garbage collector (GC).

Why Go Doesn't Meet Our Performance Goals

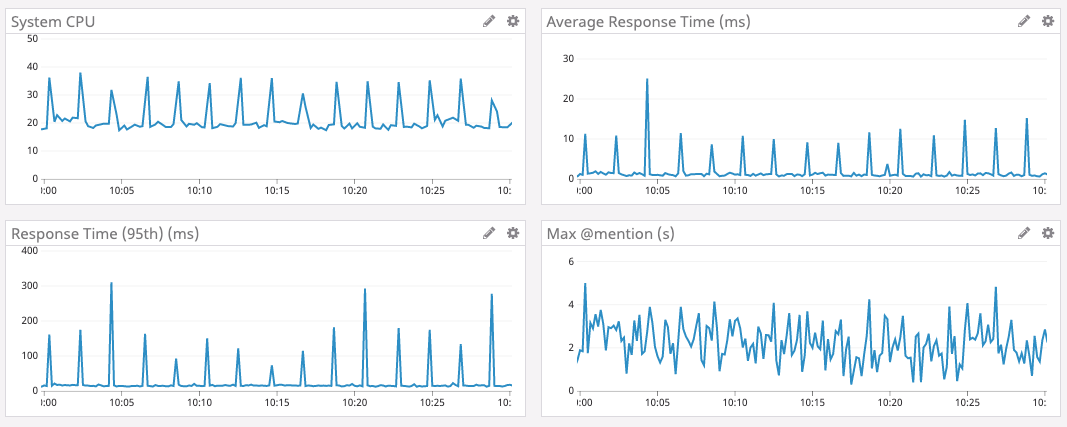

To explain why Go did not meet our performance targets, we first need to discuss the data structures, scale, access patterns, and architecture of the service.

To store read state information, we use a data structure called a Read State. Discord has billions of them: one for each user in each channel. Each Read State has several counters that must be updated atomically and are frequently reset to zero. For example, one of the counters is the number of @mentions in the channel.

To update these atomic counters quickly, each Read States server keeps a Least Recently Used (LRU) cache of Read States. Each cache holds millions of users and tens of millions of Read States, and it is updated hundreds of thousands of times per second.

For persistence, the cache is backed by a Cassandra database cluster. When a key is evicted from the cache, we write that user's Read States to the database. We also schedule a database write 30 seconds in the future whenever a Read State is updated. This adds up to tens of thousands of database writes per second.

The graph below shows response time and CPU usage during a peak period for the Go service [1]. Latency and CPU spikes occur roughly every two minutes.
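To make the Read State counters described above more concrete, here is a minimal Rust sketch of a structure whose counters are updated atomically and reset to zero. The `ReadState` struct, its fields (`mention_count`, `last_message_id`), and the `ack` method are hypothetical and are not taken from Discord's code. It uses only standard-library atomics: `fetch_add` increments a counter without a lock, and `swap(0, ordering)` resets it while returning the previous value in a single atomic step.

```rust
use std::sync::atomic::{AtomicU64, Ordering};
use std::sync::Arc;
use std::thread;

/// Hypothetical per-user, per-channel Read State; field names are illustrative.
struct ReadState {
    /// Number of unread @mentions in the channel.
    mention_count: AtomicU64,
    /// ID of the last message the user has read.
    last_message_id: AtomicU64,
}

impl ReadState {
    fn new() -> Self {
        ReadState {
            mention_count: AtomicU64::new(0),
            last_message_id: AtomicU64::new(0),
        }
    }

    /// Called when someone @mentions the user in this channel.
    fn record_mention(&self) {
        self.mention_count.fetch_add(1, Ordering::Relaxed);
    }

    /// Called when the user reads up to `message_id`: advance the read pointer
    /// (never backwards) and atomically reset the mention counter,
    /// returning how many mentions were cleared.
    fn ack(&self, message_id: u64) -> u64 {
        self.last_message_id.fetch_max(message_id, Ordering::Relaxed);
        self.mention_count.swap(0, Ordering::Relaxed)
    }
}

fn main() {
    let state = Arc::new(ReadState::new());

    // Eight threads record 1_000 mentions each, concurrently and without locks.
    let handles: Vec<_> = (0..8)
        .map(|_| {
            let s = Arc::clone(&state);
            thread::spawn(move || {
                for _ in 0..1_000 {
                    s.record_mention();
                }
            })
        })
        .collect();
    for h in handles {
        h.join().unwrap();
    }

    let cleared = state.ack(42);
    println!(
        "cleared {} mentions, last read = {}",
        cleared,
        state.last_message_id.load(Ordering::Relaxed)
    );
}
```

`Relaxed` ordering is enough in this sketch because each counter is independent and the threads are joined before the final read; a service that publishes these values to other components may need stronger ordering.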

So where do the latency spikes every two minutes come from?

In Go, memory is not freed immediately when a key is evicted from the cache. Instead, the garbage collector runs periodically to find memory that is no longer referenced and reclaim it. That is a lot of work, and it can slow a program down.

These periodic slowdowns were very likely caused by garbage collection. But we had written very efficient Go code with minimal allocations, so there should not have been much garbage to collect. What was going on?

Digging through the Go source code, we learned that Go forces a garbage collection at least every two minutes. Regardless of heap size, if a GC has not run for two minutes, Go forces one.

We figured that running GC more often might prevent these large spikes, so we added an endpoint to the service for changing the GC Percent value on the fly. Unfortunately, no matter how we configured GC Percent, nothing changed. How could that be? It turned out that GC was not running more often because we were not allocating memory often enough to trigger it.

We kept digging. It turned out the spikes were large not because of a huge amount of memory ready to be freed, but because the garbage collector had to scan the entire LRU cache to determine whether that memory was still referenced. So we reasoned that a smaller LRU cache would mean less memory to scan. We added another setting to the service for changing the LRU cache size, and changed the architecture to split the LRU into many separate LRU caches per server.

That worked: with smaller caches, the latency spikes were smaller.

Unfortunately, the trade-off of shrinking the LRU cache raised our 99th percentile latency (the latency that 99% of requests stay under). A smaller cache makes it less likely that a user's Read State is in the cache, and when it is not, we have to load it from the database.

After a lot of load testing with different cache sizes, we found a setting that was acceptable. It was not ideal, but it was good enough, so we left the service running that way for quite a while.

Meanwhile, we had been adopting Rust very successfully in other Discord systems, and we collectively decided to build the frameworks and libraries for new services in Rust only. This service looked like a great candidate for porting to Rust: it was small and self-contained, and we hoped Rust would fix the latency spikes and ultimately make the service more pleasant for users [2].

Memory Management in Rust

"Rust is blazingly fast and memory-efficient: with no runtime or garbage collector, it can power performance-critical services, run on embedded devices, and easily integrate with other languages." [3]
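As a minimal illustration of what "no garbage collector" means for a cache, the Rust sketch below uses the standard `Drop` trait to observe exactly when memory is released. The `ReadState` type and its `freed` counter are hypothetical, and a plain `HashMap` stands in for the LRU cache. Removing an entry hands ownership of the value back to the caller; because the returned value is not kept, it is dropped and its memory is released at the end of that statement, with no background process involved.

```rust
use std::cell::Cell;
use std::collections::HashMap;
use std::rc::Rc;

/// Hypothetical cached Read State. `freed` is a shared counter that lets us
/// observe exactly when an instance is dropped.
struct ReadState {
    channel_id: u64,
    freed: Rc<Cell<u32>>,
}

impl Drop for ReadState {
    // Runs automatically when the value's owner goes away; no collector is involved.
    fn drop(&mut self) {
        self.freed.set(self.freed.get() + 1);
        println!("freed Read State for channel {}", self.channel_id);
    }
}

fn main() {
    let freed = Rc::new(Cell::new(0));
    let mut cache: HashMap<u64, ReadState> = HashMap::new();
    for channel_id in 1..=3 {
        cache.insert(channel_id, ReadState { channel_id, freed: Rc::clone(&freed) });
    }

    // "Evict" channel 2: `remove` returns ownership of the value, and since we
    // do not keep it, it is dropped at the end of this statement.
    cache.remove(&2);
    assert_eq!(freed.get(), 1);

    // Dropping the cache itself frees the two remaining entries.
    drop(cache);
    assert_eq!(freed.get(), 3);
}
```

The point of the sketch is timing: the drop happens at a point the compiler determines statically, rather than whenever a collector next scans the heap.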

Rust does not have a garbage collector, so we figured it would not have the same latency spikes Go had.

Rust takes a fairly unique approach to memory management, built around the idea of memory "ownership". In short, Rust keeps track of who has the right to read from and write to a piece of memory. It knows when the program is using memory and frees it as soon as that memory is no longer needed. Rust enforces its memory rules at compile time, which makes runtime memory bugs virtually impossible [4]. You do not need to track memory manually; the compiler takes care of it.

So in the Rust version of the service, when a Read State is evicted from the LRU cache, its memory is freed immediately. That memory does not sit around waiting for the garbage collector: Rust knows it is no longer in use and releases it right away. There is no runtime process scanning for memory to free.

Asynchronous Rust

But there was one problem with the Rust ecosystem. At the time we implemented this service, stable Rust had no good support for asynchronous programming. For a networked service, async programming is a must. The community had developed several libraries, but they required non-trivial setup and produced very obtuse error messages. Fortunately, the Rust team was working hard to make asynchronous programming easier, and it was already available on the unstable (nightly) channel.

Discord has never been afraid of adopting promising new technologies. For example, we were early adopters of Elixir, React, React Native, and Scylla. If a technology looks promising and gives us an advantage, we are willing to deal with the inevitable difficulties of adoption and the instability of cutting-edge tools. This is one of the reasons we reached an audience of 250 million users so quickly with fewer than 50 engineers on staff.

Adopting the new async features from nightly Rust is another example of our willingness to embrace a promising new technology. The engineering team decided to use them without waiting for support in stable Rust. Together with other members of the community, we worked through the problems that came up, and async Rust is now available on the stable channel. Our bet paid off.

Implementation, load testing, and launch

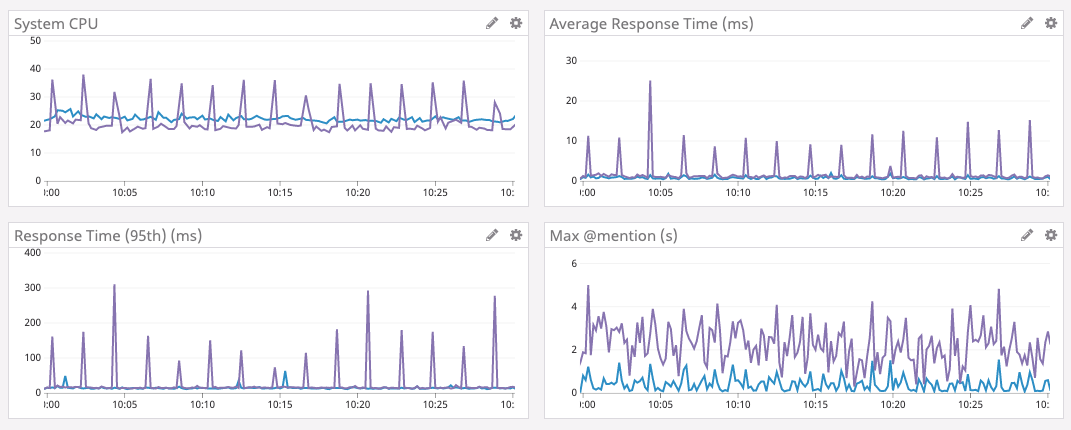

The actual rewrite was fairly straightforward. We started with a rough translation of the code, then slimmed it down where it made sense. For example, Rust has a great type system with extensive support for generics (code that works with data of any type), so we could simply throw away the Go code that existed only to compensate for the lack of generics. In addition, Rust's memory model reasons about memory safety across threads, so we could drop the manual cross-goroutine memory protection the Go version required.

Load testing immediately showed excellent results. The Rust service performed just as well as the Go version, but without the latency spikes!

Notably, we had barely optimized the Rust version. Even with only the most basic optimizations, Rust outperformed the carefully hand-tuned Go version. This is strong evidence of how much easier it is to write efficient programs in Rust than to dig deep into Go's internals.

But we were not content with merely matching Go's performance. After a bit of profiling and optimization, we beat Go on every metric: latency, CPU, and memory were all better in the Rust version.

Rust performance optimizations included:

- Switching from a HashMap to a BTreeMap in the LRU cache to optimize memory usage.

- Replacing the original metrics library with one that supports modern Rust concurrency.

- Reducing the number of memory copies.
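The post reports that switching from a HashMap to a BTreeMap inside the LRU cache reduced memory usage, but it does not describe how the map was used. The Rust sketch below is therefore only one plausible layout, assuming `u64` keys: one `BTreeMap` stores each entry together with a logical access tick, and a second `BTreeMap` indexes keys by tick, so the least recently used key is always the first element. `BTreeLru` and its fields are hypothetical names, and the sketch does not attempt to reproduce the memory measurements.

```rust
use std::collections::BTreeMap;

/// Minimal LRU keyed by u64 (e.g. a hypothetical user ID).
/// Illustrative layout only, not Discord's actual cache.
struct BTreeLru<V> {
    capacity: usize,
    clock: u64,                       // monotonically increasing access counter
    entries: BTreeMap<u64, (V, u64)>, // key -> (value, last-access tick)
    recency: BTreeMap<u64, u64>,      // last-access tick -> key, oldest first
}

impl<V> BTreeLru<V> {
    fn new(capacity: usize) -> Self {
        BTreeLru { capacity, clock: 0, entries: BTreeMap::new(), recency: BTreeMap::new() }
    }

    fn next_tick(&mut self) -> u64 {
        self.clock += 1;
        self.clock
    }

    /// Looks up a key and marks it as most recently used.
    fn get(&mut self, key: u64) -> Option<&V> {
        let tick = self.next_tick();
        let entry = self.entries.get_mut(&key)?;
        // Move the key to the most-recent end of the recency index.
        self.recency.remove(&entry.1);
        entry.1 = tick;
        self.recency.insert(tick, key);
        Some(&entry.0)
    }

    /// Inserts or updates a key, evicting the least recently used key if full.
    fn put(&mut self, key: u64, value: V) {
        let tick = self.next_tick();
        if let Some((_, old_tick)) = self.entries.insert(key, (value, tick)) {
            // Key already existed: remove its stale recency slot.
            self.recency.remove(&old_tick);
        }
        self.recency.insert(tick, key);
        if self.entries.len() > self.capacity {
            // The smallest tick is the least recently used key; removing it
            // from `entries` drops (frees) its value right here.
            if let Some((_, oldest)) = self.recency.pop_first() {
                self.entries.remove(&oldest);
            }
        }
    }
}

fn main() {
    let mut lru = BTreeLru::new(2);
    lru.put(1, "general");
    lru.put(2, "random");
    lru.get(1); // key 1 becomes most recently used
    lru.put(3, "memes"); // over capacity: evicts key 2
    assert!(lru.get(2).is_none());
    assert_eq!(lru.get(1), Some(&"general"));
    assert_eq!(lru.get(3), Some(&"memes"));
}
```

Both lookups and evictions cost O(log n) here; `BTreeMap::pop_first` requires Rust 1.66 or later.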

Satisfied, we decided to deploy the service. The launch went fairly smoothly because we had load tested it. We put the service on a single canary node, found and fixed a few edge cases, and soon after rolled the new version out to the entire fleet.

The results are shown below. The purple graph is Go and the blue graph is Rust.

Increase cache size

After the service had run successfully for a few days, we decided to raise the LRU cache capacity again. As mentioned above, this was not possible in the Go version, because garbage collection time grew with the cache. Since we no longer had garbage collection, we could increase the cache and expect even better performance. So we added more memory to the servers, optimized the data structure to use less memory (for fun), and increased the cache capacity to 8 million Read States.

The results below speak for themselves. Note that the average time is now measured in microseconds, and the maximum @mention latency is measured in milliseconds.

Ecosystem development

Finally, Rust has a wonderful, rapidly growing ecosystem. For example, a new version of the async runtime we use, Tokio 0.2, was released recently. We upgraded and, with no effort on our part, got lower CPU usage. In the graph below, you can see the CPU load drop starting around January 16.

Final thoughts

Discord currently uses Rust in many parts of its software stack: the GameSDK, video capture and encoding for Go Live, Elixir NIFs, several backend services, and more. When starting a new project or software component, we definitely consider using Rust, of course only where it makes sense.

Beyond performance, Rust offers developers many other benefits. For example, its type safety and borrow checker make it much easier to refactor code as product requirements change or as new language features are introduced. The ecosystem and tooling are excellent and improving rapidly.

Fun fact: the Rust team also uses Discord to coordinate. There is even a very helpful Rust community server where we sometimes hang out.

Footnotes

1. The charts are from Go 1.9.2. We also tried versions 1.8, 1.9, and 1.10, with no improvement. The initial migration from Go to Rust was completed in May 2019.

2. To be clear, we do not recommend rewriting everything in Rust for no reason.

3. Quoted from the official Rust website.

4. Unless, of course, you use unsafe.

Source: https://habr.com/ru/post/undefined/
