Most likely, no one needs to be told what webhooks are. But just in case: webhooks are a mechanism for reporting events in an external system, for example a purchase in an online store through an online cash register, a code push to a GitHub repository, or user actions in chats. With a typical API, you have to keep polling the server to find out whether the user wrote something in the chat. With webhooks, you "subscribe" to notifications, and the server itself sends an HTTP request when an event occurs. This is more convenient and faster than constantly requesting new data from the server.

ManyChat is a platform that helps businesses communicate with their customers via chat in instant messengers. Webhooks are one of the important parts of ManyChat, because it is through them that businesses communicate with customers. And they communicate a lot: through the system, businesses send billions of messages a month to their customers.

Most messages are sent via Facebook Messenger. It has one peculiarity: a slow API. When a customer writes a message to order pizza, Facebook sends a webhook to ManyChat. The platform processes it, sends a request back, and the user receives a message. Because of the slow API, some requests take a few seconds. But when the platform does not respond for a long time, the business loses the customer, and Facebook may disconnect the application from webhooks.

Therefore, processing webhooks is one of the main engineering tasks of the platform. To solve it, ManyChat changed its processing architecture several times over the course of three years, from a simple controller in Yii to a distributed system with Galaxies. Read more about this below.

Dmitry Kushnikov (cancellarius) leads development at ManyChat and has been programming professionally in PHP since 2001. Dmitry will tell you how the architecture changed as the service and the load grew, what solutions and technologies were applied at different stages, how webhook processing evolved, and how the platform manages to cope with a huge load using modest resources in PHP.

Note. The article is based on Dmitry's talk "The evolution of Facebook webhook processing: from zero to 12,500 per second" at PHP Russia 2019. But while he was preparing, the figures rose to 25,000.

What is ManyChat

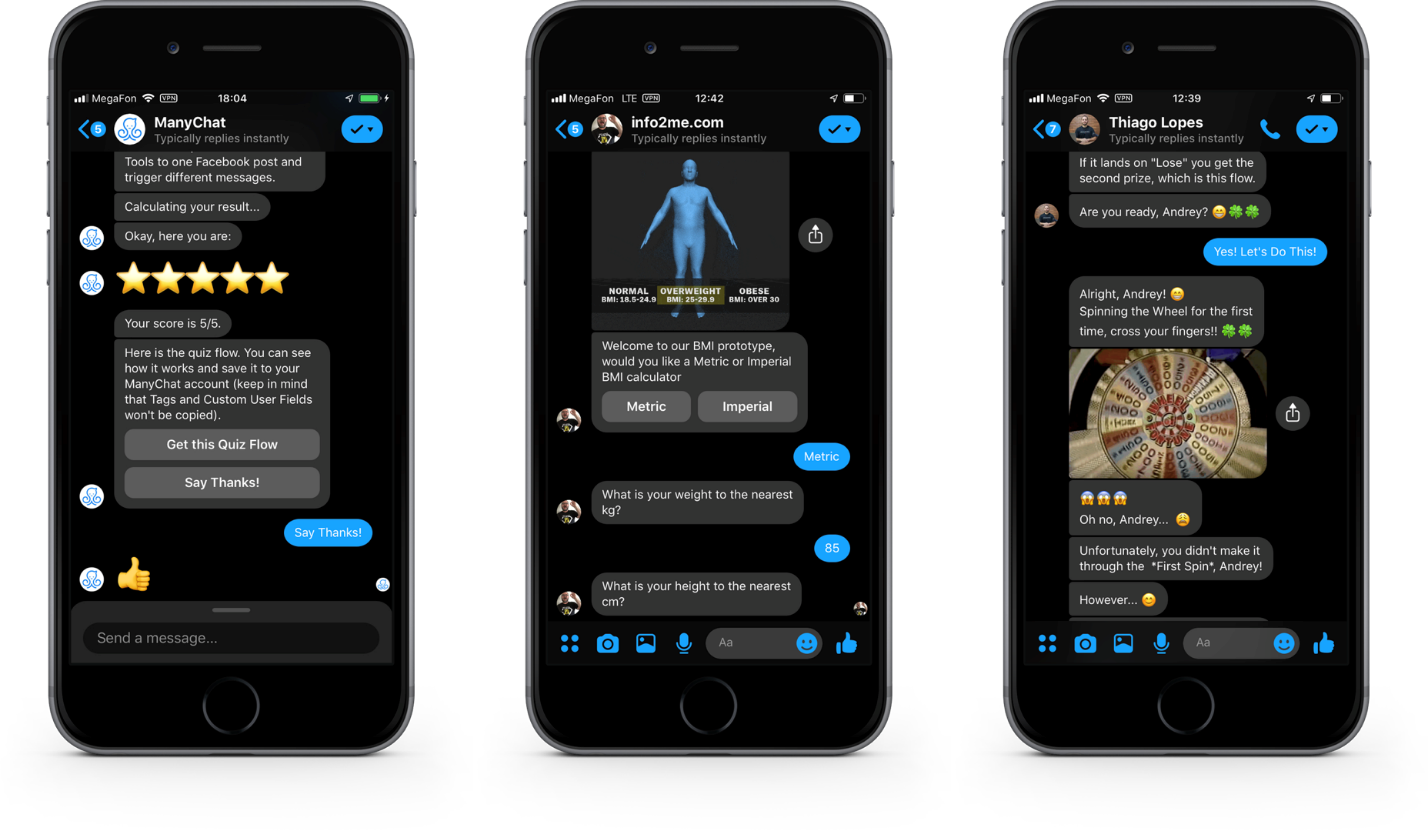

First, I'll give you the context of our tasks. ManyChat is a service that helps businesses use instant messengers for marketing, sales, and support. The main product is a platform for Messenger Marketing on Facebook Messenger. In three years, more than 1 million businesses from 100 countries have used the service to communicate with 700 million of their customers.

On the client side, it looks like this.

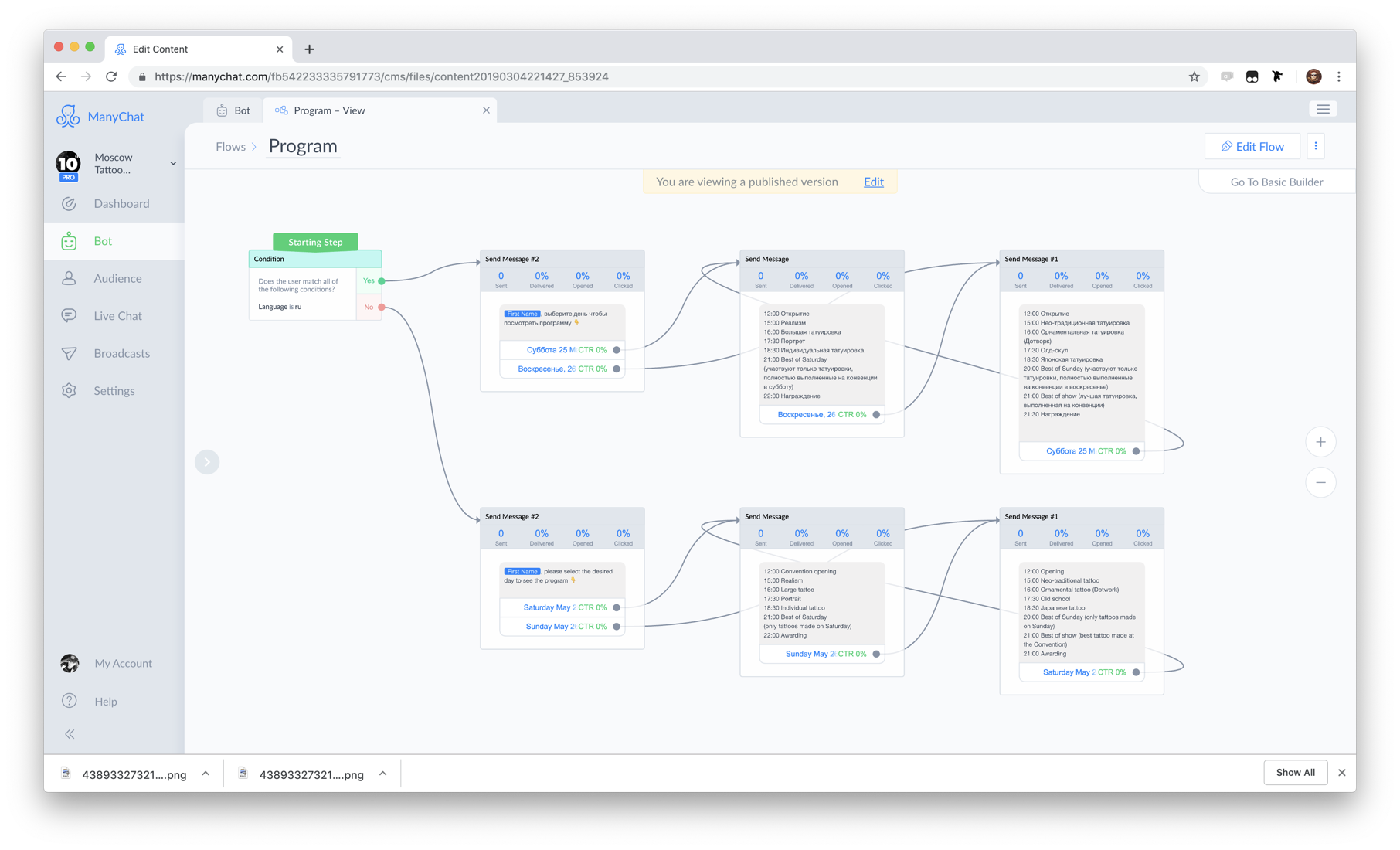

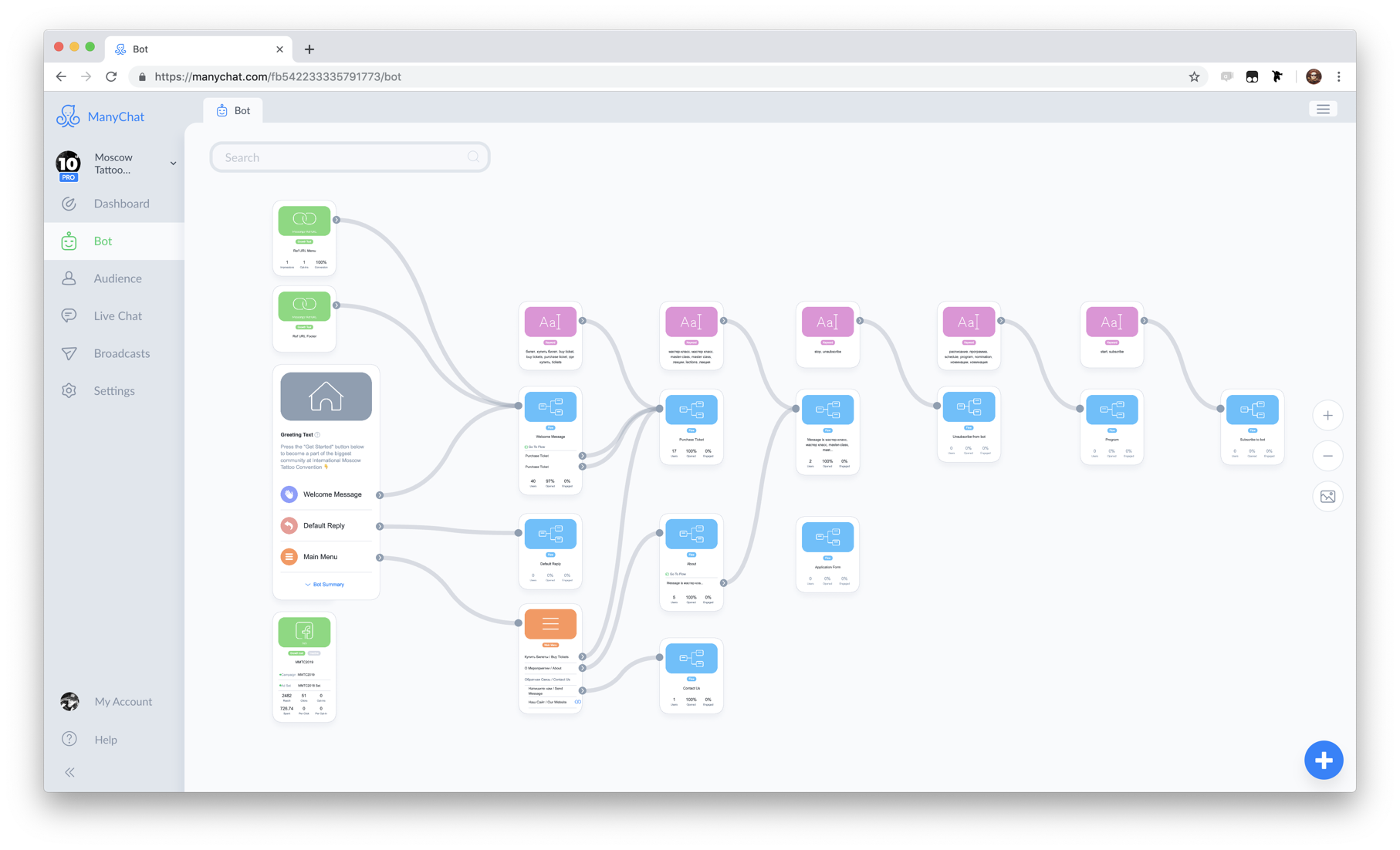

Buttons, pictures and galleries in the dialogs in Facebook Messenger.

This is the Facebook Messenger interface. In addition to text messages, you can send interactive elements in it to interact with customers, engage them in dialogue, increase interest in your products, and sell.

From the business side, everything looks different. This is the interface of our web application, where business representatives create and program dialogue scripts using a visual interface. The picture shows one example of a scenario.

The heart of our system is the Flow Builder component.

We call the set of scripts and automation rules a bot. So, to simplify, we can say that ManyChat is a bot builder.

An example of a bot.

The customer of the business who takes part in the dialogue is called a subscriber, because the customer subscribes to the bot in order to interact with it.

Why Facebook

Why Facebook Messenger, when we are from the country where Telegram survived? There are reasons for this.

- Telegram , №1 Facebook. 1,5 , Telegram 200-300 .

- Facebook , . , Facebook - .

- Facebook F8 - 300 . Facebook Messenger. 20 . ManyChat 40%.

Facebook

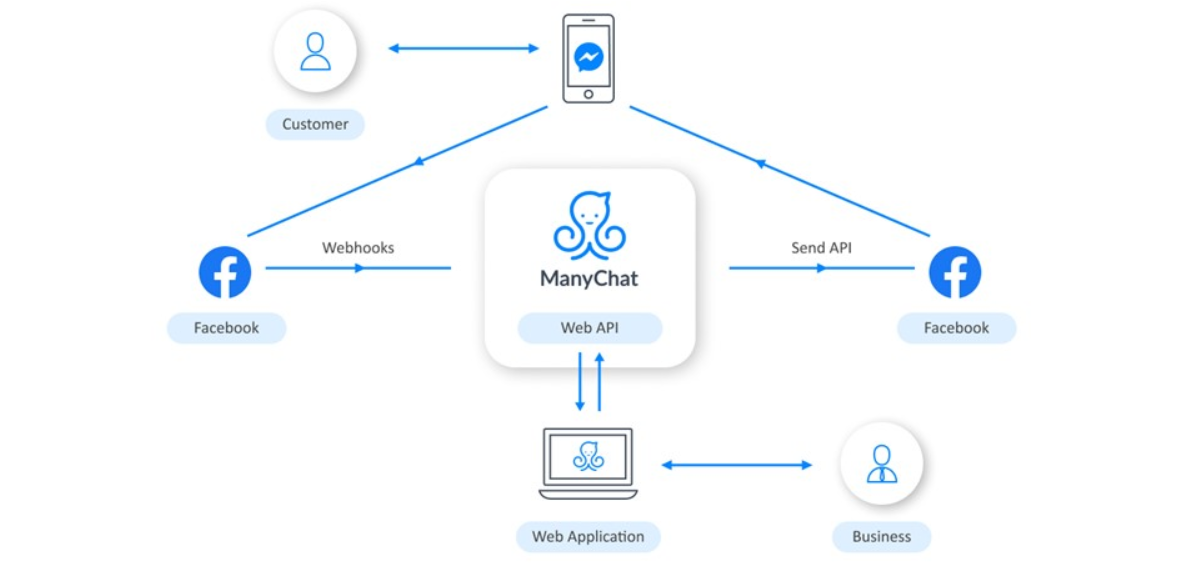

Interaction with Facebook is organized like this:

- A business uses the web application to configure the logic of the bot.

- When a customer interacts with the bot from a phone, Facebook receives information about this and sends us a webhook.

- ManyChat processes it according to the logic programmed by the business and sends a request back.

- Facebook then delivers the message to the user's phone.

Technological stack

We do all this on a modest stack. At the core, of course, is PHP. The web server is Nginx, the main database is PostgreSQL, and there are also Redis and Elasticsearch. It all runs in the Amazon Web Services cloud.

Handling Facebook Webhooks

This is what a Facebook webhook looks like: a request with a payload in JSON format.

    {
      "object": "page",
      "entry": [
        {
          "id": "<PAGE_ID>",
          "time": 1458692752478,
          "messaging": [
            {
              "sender": {
                "id": "<PSID>"
              },
              "recipient": {
                "id": "<PAGE_ID>"
              },
              ...
            }
          ]
        }
      ]
    }
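To make the payload structure concrete, here is a minimal Python sketch that walks the `entry` and `messaging` arrays and pulls out the page and sender identifiers. The function name `extract_events` and the sample values are illustrative assumptions, not part of ManyChat's code.

```python
import json


def extract_events(raw_body: str):
    """Return (page_id, sender_id) pairs found in a webhook payload."""
    payload = json.loads(raw_body)
    events = []
    for entry in payload.get("entry", []):
        page_id = entry.get("id")
        for messaging in entry.get("messaging", []):
            sender_id = messaging.get("sender", {}).get("id")
            events.append((page_id, sender_id))
    return events


# A payload shaped like the example above (placeholder ids kept as-is).
sample = json.dumps({
    "object": "page",
    "entry": [{
        "id": "<PAGE_ID>",
        "time": 1458692752478,
        "messaging": [{
            "sender": {"id": "<PSID>"},
            "recipient": {"id": "<PAGE_ID>"},
        }],
    }],
})

print(extract_events(sample))
```

One request can carry several entries and several messaging events, which is why the sketch returns a list rather than a single pair.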

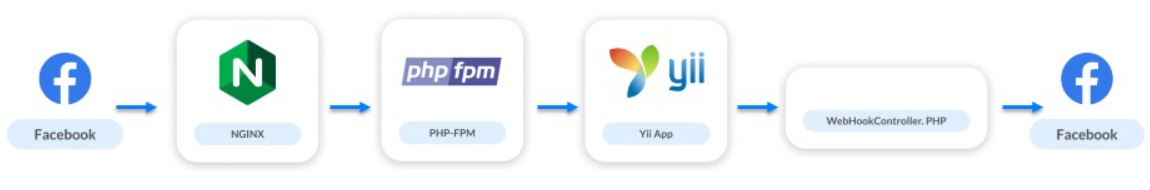

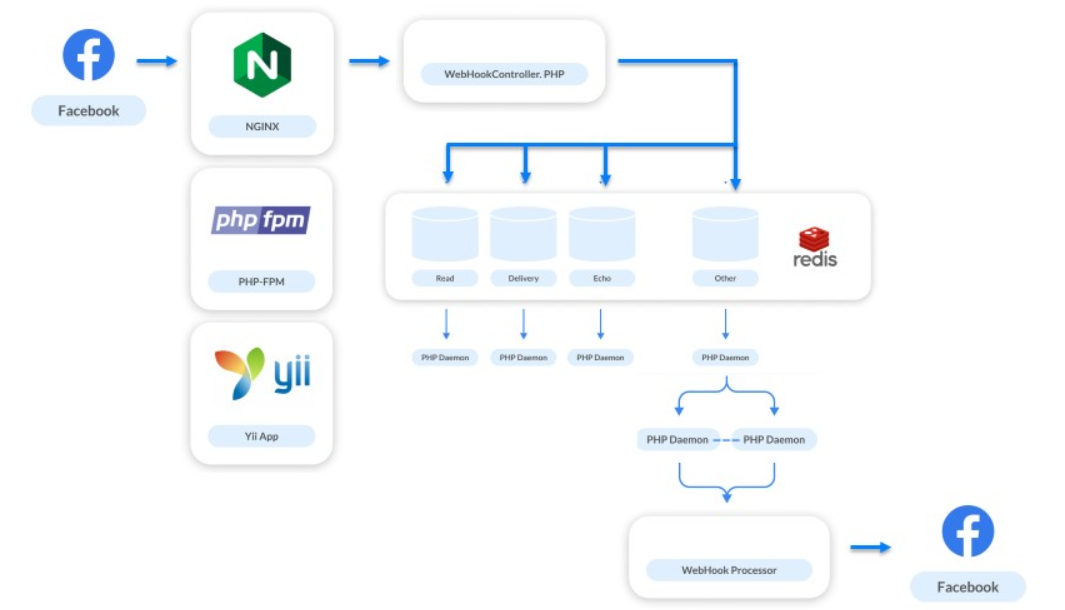

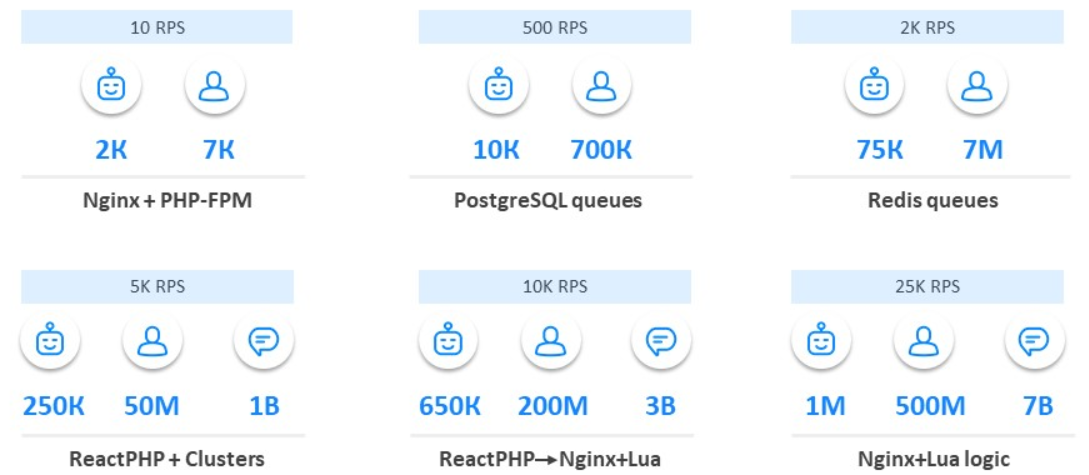

Webhooks are only 10% of our load, but they are the most important part of the system. Through them, the business communicates with users. If messages slow down or are not sent, the user stops interacting with the bot, and the business loses the customer.

Let's take a look at the evolution of our architecture since the launch of the product.

May 2016. We had just launched our service: 20 bots, 10 of them test ones, and 20 subscribers. The load was 0 RPS.

The interaction scheme looked like this:

- The request goes to Nginx.

- Nginx passes it to PHP-FPM.

- PHP-FPM boots the Yii application.

- The webhook controller runs the bot logic and sends requests to Facebook accordingly.

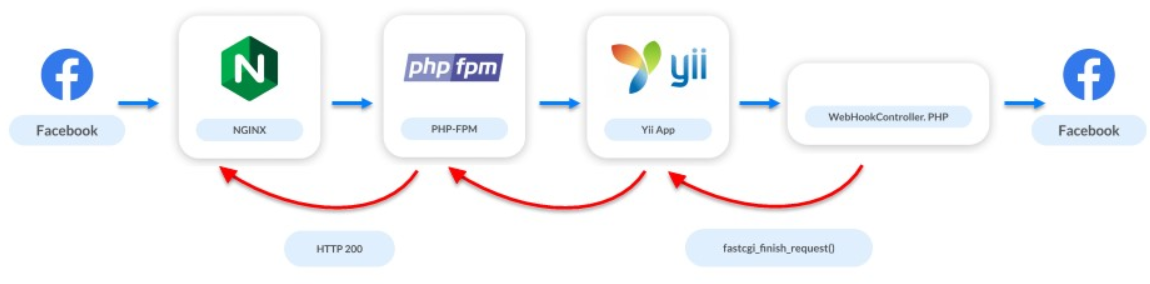

The Nginx and PHP-FPM combination

June 2016. A month later, we announced ManyChat on ProductHunt, and the number of bots increased to 2 thousand. The number of subscribers increased to 7 thousand.

At this point, the first problem appeared in the system. The Facebook API is not very fast: a single request can take several seconds, and several requests can take tens of seconds. But the server that sends webhooks expects us to respond quickly. Because of the slow API, we took a long time to respond: the server first complains, and then it can disconnect the application from webhooks completely.

We had few users, we were still developing the application and looking for our market and audience, and the load problem had already appeared. But a simple solution saved us: as soon as the controller starts, we close the connection with Facebook. We tell Facebook that everything is fine, and process the webhook and the outgoing requests in the background.
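The talk does not show the code for this step. In PHP-FPM the usual way to answer first and keep working is to call `fastcgi_finish_request()` before the slow part; whether ManyChat did exactly that is an assumption. The Python sketch below models the same idea with an in-process queue and a worker thread. All names (`handle_webhook`, `slow_processing`) are hypothetical.

```python
import queue
import threading

pending = queue.Queue()   # webhooks accepted but not yet processed
processed = []            # results of background processing


def handle_webhook(body: str) -> int:
    """Accept the webhook and acknowledge it without waiting for processing."""
    pending.put(body)
    return 200  # the sender sees a fast "everything is fine"


def slow_processing(body: str) -> None:
    """Stand-in for bot logic and slow outgoing API calls (hypothetical)."""
    processed.append(body.upper())


def worker() -> None:
    while True:
        body = pending.get()
        if body is None:          # sentinel: stop the worker
            pending.task_done()
            break
        slow_processing(body)
        pending.task_done()


thread = threading.Thread(target=worker, daemon=True)
thread.start()

status = handle_webhook("hello")  # returns immediately
pending.join()                    # wait here only so the demo can inspect the result
pending.put(None)
thread.join()
```

The response time seen by the sender no longer depends on how long the outgoing API calls take, which is the whole point of the change.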

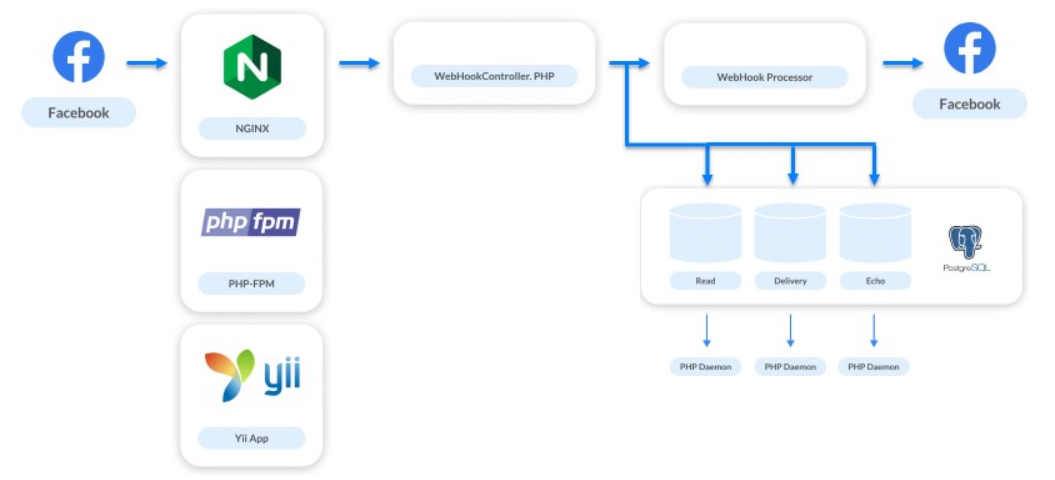

Queues on PostgreSQL

December 2016. The service grew 5-10 times: 10 thousand bots and 700 thousand subscribers.

At the same time, we worked on new tasks: displaying statistics, message delivery, conversion of impressions and clicks. We also implemented Live Chat. In addition to automating interactions, it lets a business write messages directly to its subscriber.

Solving these tasks increased the number of webhooks we tracked by 4 times. For each message we sent, we received 3 additional webhooks. The processing system needed to be improved again. We were a small platform, only two people worked on the backend, so we chose the simplest solution: queues on PostgreSQL.

We did not want to introduce complex systems yet, so we simply split the processing flows. Webhooks that must be processed quickly so that the user receives a response are processed synchronously. All the rest are sent to queues for asynchronous processing.
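The split between the two flows can be sketched as a small routing function. This is an illustration under assumptions: the event keys (`message`, `postback`, `delivery`, `read`) follow the Messenger webhook event fields as I understand them, and which of them ManyChat treated as urgent is a guess. A plain list stands in for the PostgreSQL queue.

```python
# Assumed: the user is waiting for a reply to these events.
FAST_EVENT_KEYS = ("message", "postback")


def route(event: dict) -> str:
    """Decide whether an event is handled right away or queued."""
    if any(key in event for key in FAST_EVENT_KEYS):
        return "sync"
    return "async"


handled_now = []   # processed in the request itself
async_queue = []   # stand-in for the PostgreSQL-backed queue

incoming = [
    {"sender": {"id": "1"}, "message": {"text": "hi"}},
    {"sender": {"id": "1"}, "delivery": {"watermark": 1}},
    {"sender": {"id": "1"}, "read": {"watermark": 1}},
]

for event in incoming:
    if route(event) == "sync":
        handled_now.append(event)
    else:
        async_queue.append(event)
```

With three statistics-style webhooks arriving for every message sent, most traffic ends up on the asynchronous side, which keeps the synchronous path short.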

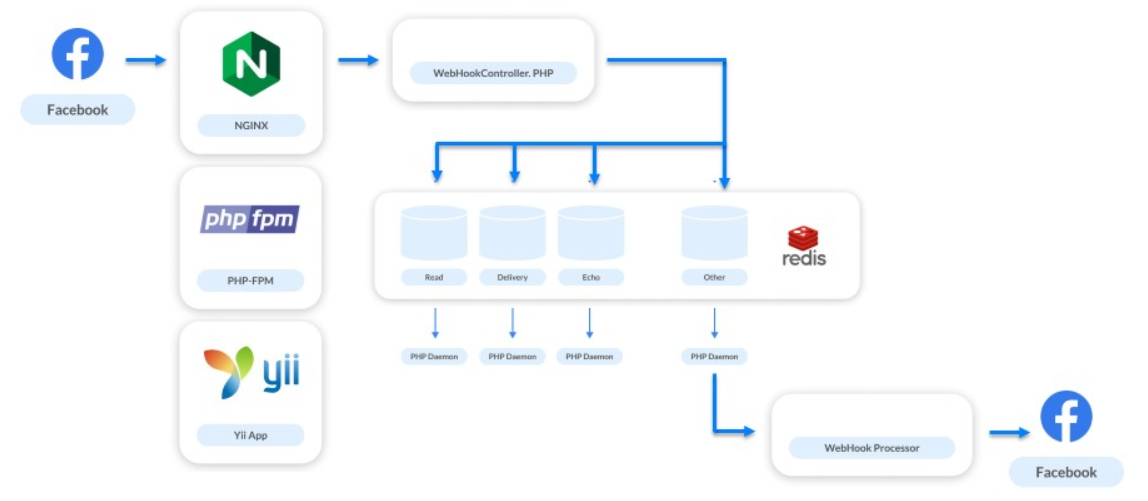

Queues on Redis

June 2017. The service is growing: 75 thousand bots, 7 million subscribers.

We were implementing another new feature. Until then, all the webhooks we processed concerned only communication in the messenger. Now we decided to let businesses communicate with subscribers of their business pages, and began to process new types of webhooks: those that relate to the feed of the page itself.

Business page feeds are updated infrequently. Marketers post something, then follow every like and count them. There is no huge traffic on business pages. But there are opposite situations, for example, Katy Perry Day.

Katy Perry is a famous American singer with a huge number of fans around the world. There are 64 million subscribers in her Facebook group alone. At some point, the singer's marketers decided to make a bot on Facebook Messenger and chose our platform. The moment they published a post calling on fans to subscribe to the bot, our load increased 3-4 times.

This situation helped us understand that without a proper implementation of queues we would get nowhere. As a solution, we chose Redis.

Choosing Redis for queues was a fantastically good decision.

It helped solve a huge number of problems. Now 1 million different requests pass through our Redis cluster every second. We use it not only for all the cascading queues, but also for other tasks, for example, monitoring.

Queues on Redis did not work on the first try. When we started by simply putting webhooks into Redis and processing them in a single process, we widened the funnel at the top: more incoming webhooks were accepted, but the processing itself still took time. This first solution was unsuccessful.

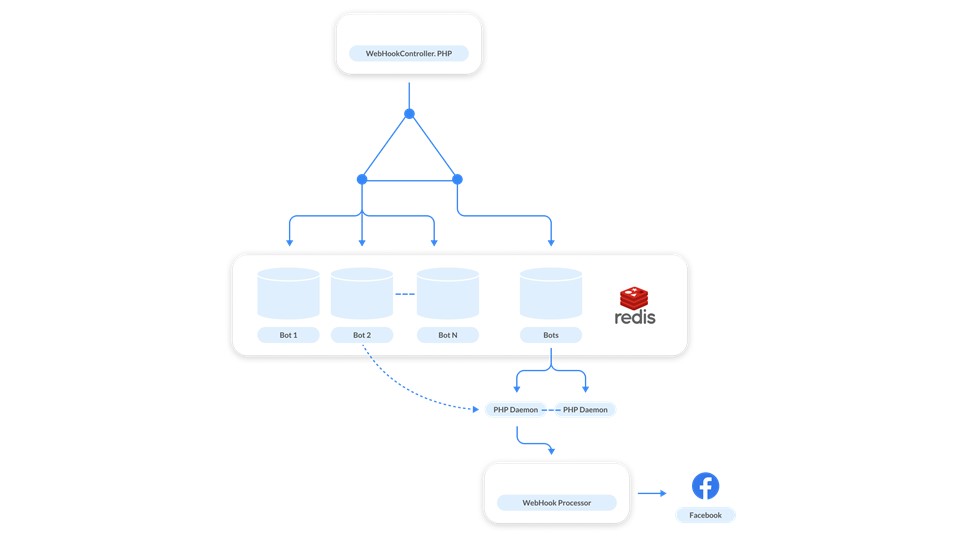

When we tried to scale the processing, a small collapse occurred. The queue accumulates requests from different pages, but requests from one page can come in a row. If one handler is slow, requests from one subscriber and one bot get processed in the wrong order. The user sends messages and performs actions with the bot, but receives the responses in random order.

This seems to be a rare case, but testing on our workloads showed that it would happen frequently.

We started looking for another solution. Here the simplicity and power of Redis came to the rescue: we decided to make a queue for each bot.

How does it work? Messages that relate to a bot are added to that bot's queue. So as not to run a handler for every queue, we made a control queue. It works like this. Each time a request arrives for a bot, two messages are published in Redis: one in the bot's queue, the other in the control queue. The handler watches the control queue and starts a daemon each time there is a task to process a bot. The daemon drains the queue of the corresponding bot.

In addition to the main task, we solved the problem of "noisy neighbors". This is when one bot generates a huge mass of webhooks and slows down the system, because other pages are waiting for processing. To solve the problem, it is enough to scale: when the control queue fills up, we add new handlers.

In addition, the queues are virtual. They are just cells in Redis memory. When a queue is empty, it does not exist and takes up no space.
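The per-bot queue plus control queue scheme can be modelled in a few lines. This is a single-process Python sketch under assumptions: a dict of deques stands in for Redis lists, and the names `publish`, `drain_bot` and `run_handler` are hypothetical. It does not show how a real deployment keeps two workers from draining the same bot at once, since the talk does not describe that.

```python
from collections import defaultdict, deque

bot_queues = defaultdict(deque)  # one queue per bot, like one Redis list per key
control_queue = deque()          # bot ids that have work waiting


def publish(bot_id: str, webhook: str) -> None:
    """Store the webhook in the bot's queue and signal the control queue."""
    bot_queues[bot_id].append(webhook)
    control_queue.append(bot_id)


def drain_bot(bot_id: str, process) -> None:
    """Process everything queued for one bot, strictly in arrival order."""
    pending = bot_queues.get(bot_id)
    while pending:
        process(bot_id, pending.popleft())
    bot_queues.pop(bot_id, None)  # an empty queue simply disappears


def run_handler(process) -> None:
    """Watch the control queue and drain the bot named by each signal."""
    while control_queue:
        drain_bot(control_queue.popleft(), process)


publish("bot_a", "a1")
publish("bot_b", "b1")
publish("bot_a", "a2")

handled = []
run_handler(lambda bot_id, webhook: handled.append((bot_id, webhook)))
print(handled)
```

A later control signal for a bot whose queue was already drained finds nothing to do and returns at once, so duplicate signals are harmless in this model.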

ReactPHP

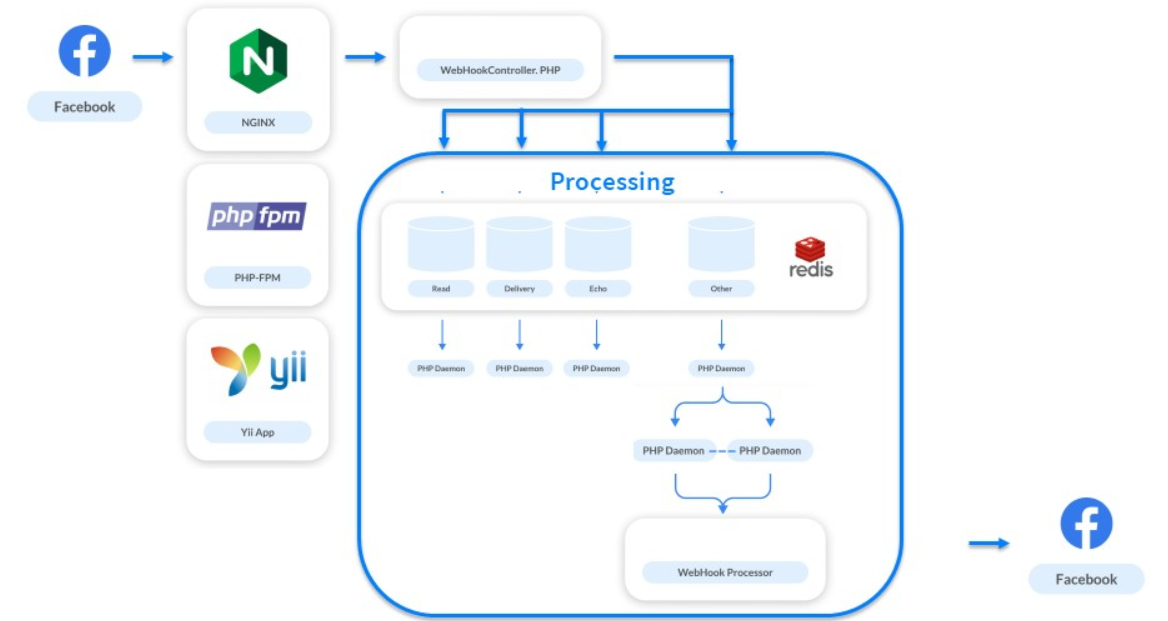

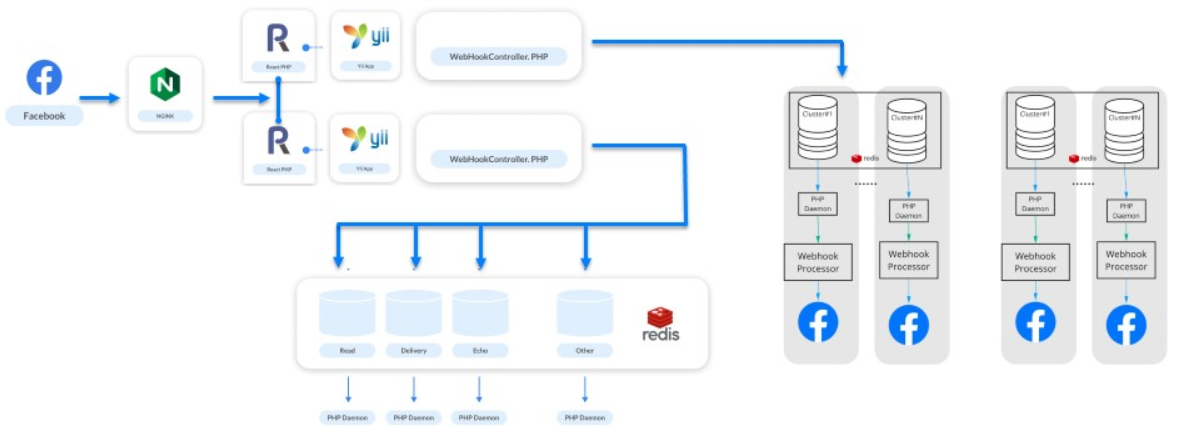

January 2018. We reached 1 billion messages per month.

The load was 5 thousand RPS on the system. This is not the peak load but the normal one. When bots of famous singers appear, everything grows several times from this figure. But that is not a problem. The problem is PHP-FPM: it can no longer withstand a load of 5 thousand RPS.

At that time everyone was talking about fashionable asynchronous processing. We took a closer look, found ReactPHP, ran quick tests, replaced PHP-FPM with it and instantly got a 4-fold gain. We did not rewrite our processing code: ReactPHP booted the Yii framework. First we ran 4 ReactPHP services, and later we reached 30. We lived on them for a long time, and the framework coped with the load.

As soon as we widened the funnel, another collapse occurred: once the intake got wider, processing began to suffer again. To solve this problem, we decided to split processing into clusters.

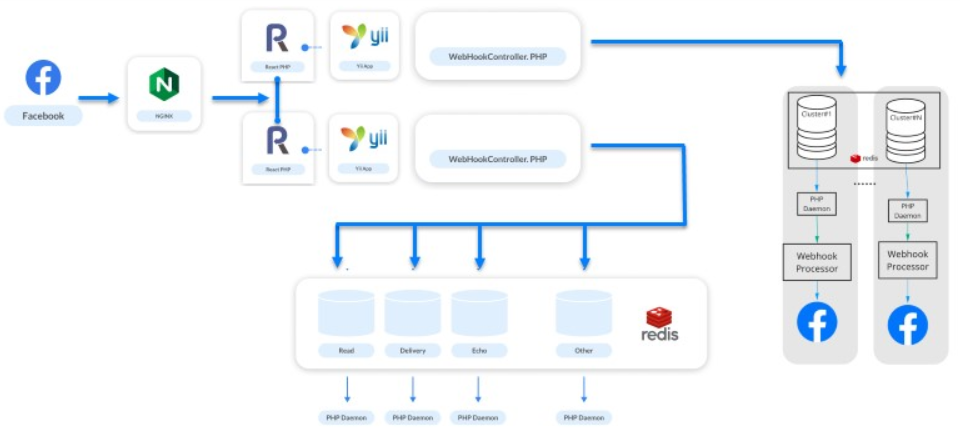

Clusters

We took the bots, distributed them across clusters, and built logical chains out of Redis, Postgres and a handler.

As a result, we arrived at the concept of a "Galaxy": a logical and physical abstraction over processing. It consists of instances: Redis, PostgreSQL and a set of PHP services. Each bot belongs to a particular cluster, and ReactPHP knows which cluster a message for this bot must be placed in. From there, the scheme described above applies.

The Universe is expanding, and so is the universe of our systems; when that happens, we add a new "Galaxy".

Galaxies are our way of scaling.
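The routing idea behind a Galaxy can be sketched as a lookup from bot to cluster. This Python sketch is illustrative only: the `Galaxy` fields, host names and the in-memory mapping are assumptions, and the talk does not say how the bot-to-cluster assignment is stored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Galaxy:
    """One self-contained processing cluster (hypothetical fields)."""
    name: str
    redis_host: str
    postgres_host: str


# Hypothetical clusters; adding capacity means adding an entry here.
GALAXIES = {
    "galaxy-1": Galaxy("galaxy-1", "redis-1.internal", "pg-1.internal"),
    "galaxy-2": Galaxy("galaxy-2", "redis-2.internal", "pg-2.internal"),
}

# Hypothetical assignment of bots to clusters.
BOT_TO_GALAXY = {
    "bot_a": "galaxy-1",
    "bot_b": "galaxy-2",
}


def galaxy_for(bot_id: str) -> Galaxy:
    """Find the cluster whose Redis should receive this bot's webhooks."""
    return GALAXIES[BOT_TO_GALAXY[bot_id]]


print(galaxy_for("bot_b").redis_host)
```

Because each bot lives entirely inside one cluster, a heavy bot affects only its own Galaxy, and new bots can be assigned to a freshly added one.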

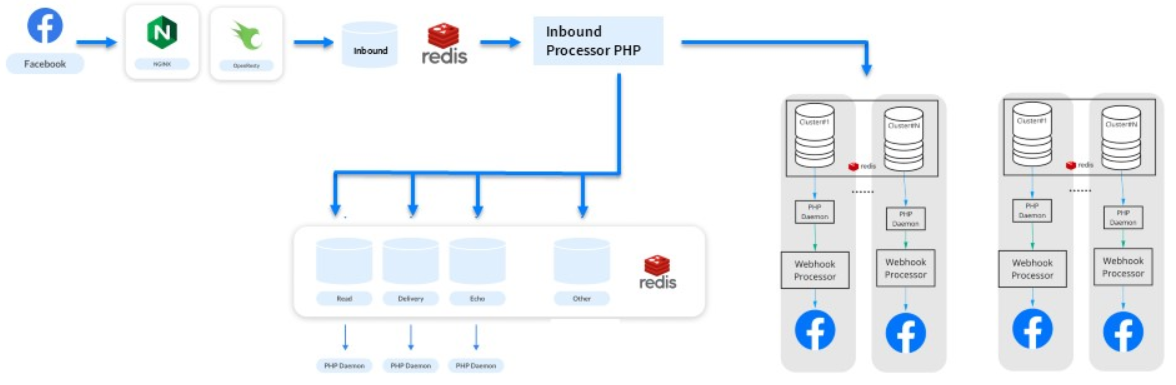

Replacing ReactPHP with an Nginx and Lua combination

For the next six months we continued to grow: 200 million subscribers and 3 billion messages per month. Imagine a site with 200 million registered users: it is the same load.

A new problem arose. Webhooks are small tasks of the same type, and PHP is not well suited to them. Even ReactPHP no longer helped.

- It could not cope with a load of 10 thousand RPS: the load had grown since ReactPHP was introduced.

- It had to be restarted on every deployment, and sequentially at that, because the processing of incoming webhooks must not be interrupted. Facebook disables the application when it notices problems with it. For ManyChat this would be a disaster: 650 thousand actively operating businesses would not forgive us.

Therefore, we gradually carved various pieces of logic out of ReactPHP, handed them over to the processing services, and split off new queues. In the process, we noticed that ReactPHP was doing one simple job: it takes a webhook and puts it in a queue. Everything else is done by the processing services. Are there alternatives for such a simple task?

We remembered that Nginx has modules and noticed OpenResty. In addition to supporting the Lua programming language, it had a module for working with Redis. A test written in 3 hours showed that all the work of the 30 ReactPHP services could be done directly on the Nginx side.

This is how it turned out: we handle an endpoint, read the request body and push it directly to Redis.

    location / {
        error_log /var/log/nginx/error.log;
        # the resolver address is missing in the source
        resolver
        content_by_lua '
            -- read the raw webhook body
            ngx.req.read_body()
            local mybody = ngx.req.get_body_data()
            if not mybody then
                return ngx.exit(400)
            end

            -- pick an inbound shard from a hash of the body
            local hash = ngx.crc32_long(mybody)
            local cluster = hash % ###wh_inbound_shards### + 1

            local redis = require "resty.redis"
            local red = redis:new()
            red:set_timeout(3000)
            local ok, err = red:connect("###redisConnectionWh2.server.host###", 6379)
            if not ok then
                ngx.log(ngx.ERR, err, "Redis failed to connect")
                return ngx.exit(403)
            end

            -- queuesuffix is not defined in this excerpt
            local ok, err = red:rpush("###wh_inbound_queue###" .. queuesuffix .. cluster, mybody)
            if not ok then
                ngx.log(ngx.ERR, err, "Failed to write data", mybody)
                return ngx.exit(500)
            end

            -- return the connection to the keepalive pool
            local ok, err = red:set_keepalive(10000, 100)
            ngx.say("ok")
        ';
    }
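The shard selection in the Lua handler (hash of the body, modulo the number of shards, plus one) can be tried out in isolation. The Python sketch below uses `zlib.crc32` as a stand-in for `ngx.crc32_long`; the function names, the queue prefix and the shard count are illustrative assumptions.

```python
import zlib


def shard_for(body: bytes, shard_count: int) -> int:
    """Map a webhook body to a shard number in the range 1..shard_count."""
    return zlib.crc32(body) % shard_count + 1


def queue_name(prefix: str, body: bytes, shard_count: int) -> str:
    """Build the name of the inbound queue for this body."""
    return f"{prefix}{shard_for(body, shard_count)}"


bodies = [f'{{"n": {i}}}'.encode() for i in range(1000)]
shards = [shard_for(body, 4) for body in bodies]

# How many of the sample bodies landed in each shard.
print({shard: shards.count(shard) for shard in sorted(set(shards))})
```

Hashing the body spreads inbound webhooks across the inbound queues without parsing the JSON, which keeps the Nginx side as cheap as possible; routing by bot happens later in processing.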

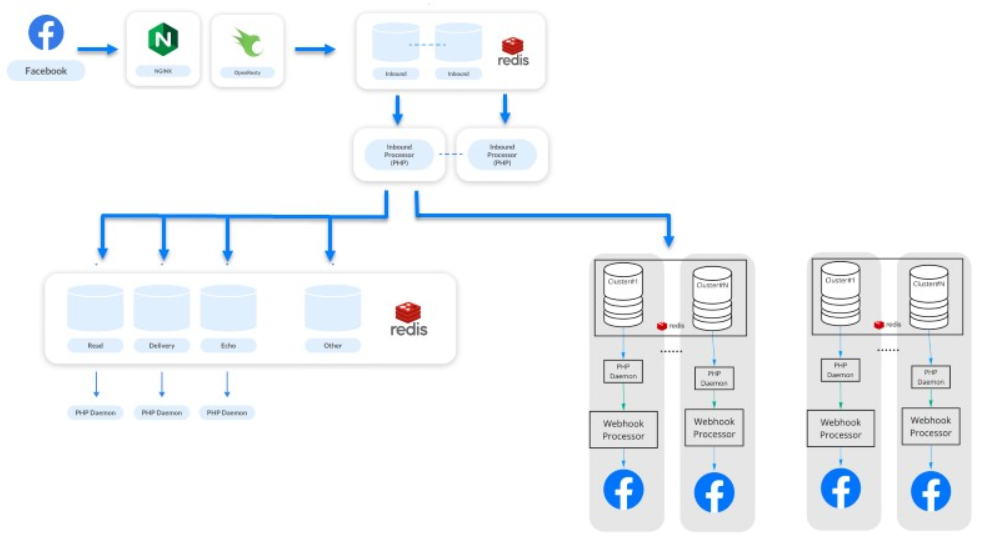

OpenResty and Lua helped increase throughput. We keep coping with our workload, the service lives on, everyone is happy.

Improving the solution on Lua

The last stage (note: at the time of the talk) is February 2019. 500 million subscribers send and receive 7 billion messages from a million bots every month.

This stage is about improving our solution on Lua. We gradually carve logic out of the queues and move the primary work of distributing webhooks between systems into Lua. Now our systems are more productive and less dependent on each other.

We maintain the main processing and the asynchronous processing separately. The latter handles statistics and other things; it is now a completely different system.

The system seems simple, but it is not. Under the hood, there are 500 services that process their requests. The whole system runs on 50 Amazon instances: Redis, PostgreSQL, and the PHP handlers themselves.

Processing Evolution

Highload can be cool to do in PHP.

Let's briefly recall how we did this as the system developed.

- Started with regular Nginx and PHP-FPM.

- Added queues on PostgreSQL, and then on Redis.

- Added clustering.

- Implemented ReactPHP.

- Replaced ReactPHP with the Nginx and Lua combination, and later moved more logic into it.

From our experience, we learned that you can grow and build an architecture by successively replacing its weak parts, using simple, well-known approaches, and without expanding the stack.
