Processes of human neurons show an unexpected ability to compute

Dendrites, the branching processes of some neurons in the human brain, can perform logical computations that were previously thought to require whole neural networks.

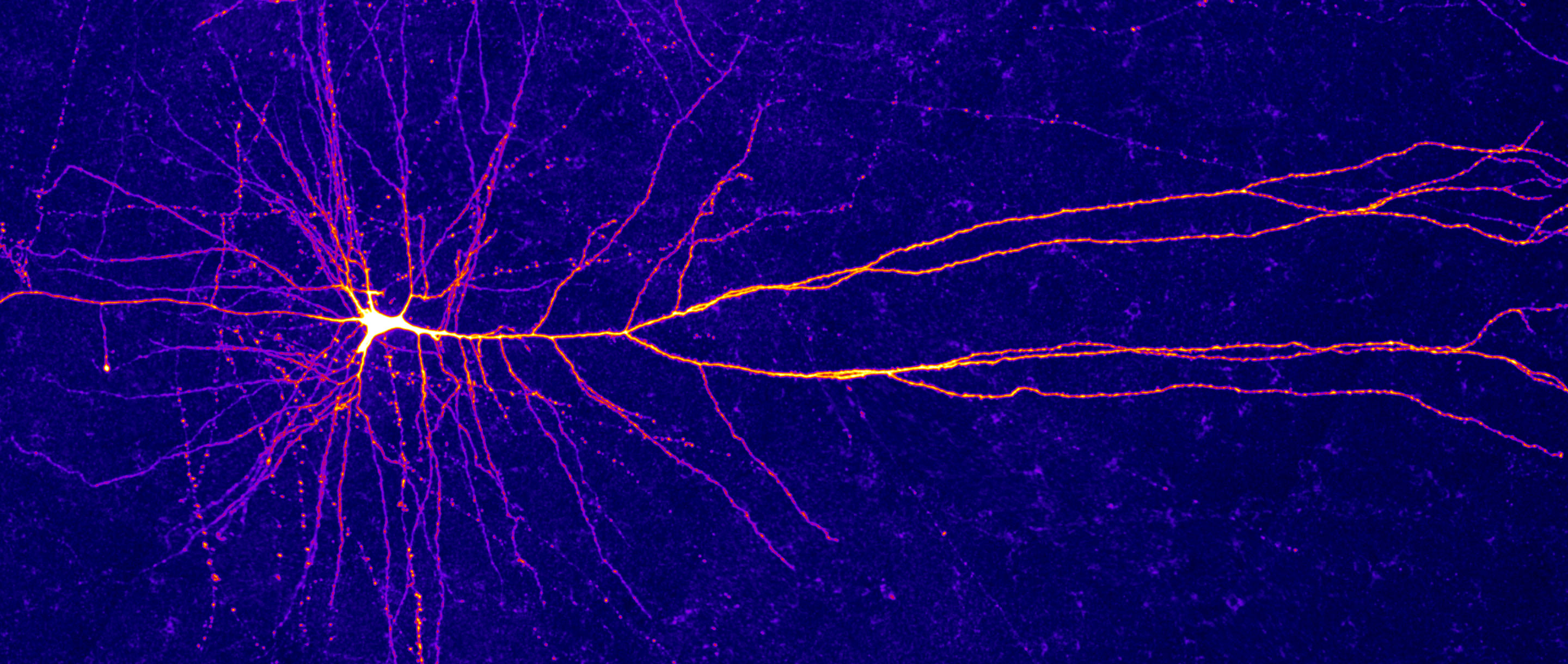

Thin dendrites, resembling the roots of a plant, radiate in all directions from the cell body of this cortical neuron. Individual dendrites can independently process the signals they receive from neighboring neurons before passing them on as input to the cell.

We are often told that the brain's ability to process information lies in the trillions of connections that link its neurons into a network. But over the past few decades, a growing body of research has gradually shifted attention to individual neurons, which appear to shoulder much more of the computational load than previously imagined.

The latest piece of evidence comes from the discovery of a new type of electrical signal in the upper layers of the human cerebral cortex. Laboratory and modeling studies had already shown that tiny compartments in the dendrites of cortical neurons can each perform complex operations in mathematical logic. It now appears, however, that individual dendritic compartments can also perform a particular operation, “exclusive OR” (XOR), that was previously thought to be beyond the reach of a single neuron.

“I think we are only scratching the surface of what these neurons are actually doing,” said Albert Gidon, a postdoc at Humboldt University of Berlin and lead author of the paper in the journal Science that describes the discovery.

The discovery underscores the growing need for studies of the nervous system to treat individual neurons as complex information processors. “The brain may be far more complex than we thought,” said Konrad Kording, a computational neuroscientist at the University of Pennsylvania who was not involved in the work. The discovery may also prompt computer scientists to rethink the strategies behind artificial neural networks, in which neurons have traditionally been treated as simple switches.

Limitations of the “dumb” neuron model

In the 1940s and '50s, one idea came to dominate neuroscience: the “dumb” neuron as a simple integrator, a point in a network that merely sums its inputs. The cell's branching processes, the dendrites, receive thousands of signals from neighboring neurons, some excitatory and others inhibitory. In the body of the neuron, all these signals are weighted and summed, and if the total exceeds a certain threshold, the neuron fires a series of electrical pulses (action potentials) that drive the stimulation of neighboring neurons.

At about the same time, researchers realized that a single neuron could also work as a logic gate, like those that make up digital circuits (although it is not yet clear how the brain performs such computations when processing information). A neuron was effectively an AND gate, for example, if it fired only after receiving a sufficient number of inputs.

Thus, a network of neurons could theoretically carry out any computation. Yet this model of the neuron was limited. Its computational metaphors were too simplistic, and for decades experimenters lacked the means to record the activity of the various components of a single neuron. “It essentially squeezed the neuron down to a point in space,” said Bartlett Mel, a computational neuroscientist at the University of Southern California. “It had no internal manifestation of activity.” The model ignored the fact that the thousands of signals entering a neuron arrive at different points along its various dendrites. It ignored the idea (later confirmed) that individual dendrites can work in different ways. And it ignored the possibility that different internal structures of the neuron could carry out different computations.

However, in the 1980s, things began to change. Modeling work by neuroscientist Christof Koch and others, later confirmed in experiments, demonstrated that a single neuron does not produce a single or uniform voltage signal.
Instead, voltage signals weakened as they traveled along the dendrites toward the cell body, and often contributed nothing to the cell's final output.

Such isolation of signals means that individual dendrites can process information independently of one another. “This was at odds with the point-neuron hypothesis, in which a neuron simply added everything up regardless of location,” Mel said.

This prompted Koch and other neuroscientists, including Gordon Shepherd of the Yale School of Medicine, to model how the structure of dendrites could in principle allow a neuron to work not as a simple logic gate but as a complex, multi-component signal processing system. They simulated dendritic trees that hosted numerous logic operations, implemented through hypothetical mechanisms.

Later, Mel and his colleagues studied in more detail how a cell might handle multiple incoming signals on separate dendrites. What they found surprised them: the dendrites generated local spikes, had their own nonlinear input/output curves, and had their own activation thresholds, distinct from those of the neuron as a whole. The dendrites themselves could work as AND gates, or host other computing devices.

Mel, together with his former graduate student Yiota Poirazi (now a computational neuroscientist at the Institute of Molecular Biology and Biotechnology in Greece), realized that this meant a single neuron could be regarded as a two-layer network. The dendrites serve as nonlinear computing subunits that collect inputs and produce intermediate outputs. Those signals are then combined in the cell body, which determines how the neuron as a whole will respond.

Whether activity at the level of the dendrites actually influenced the firing of the neuron and the activity of neighboring neurons was not clear.
But in any case, such local processing can prepare or condition the system to respond differently to future incoming signals, according to Shepherd.

Be that as it may, “the trend was this: okay, be careful, the neuron may be more capable than we thought,” Mel said.

Shepherd agrees. “A significant amount of the computation taking place in the cortex happens below the firing threshold,” he said. “A single-neuron system can be more than just a single integrator. There may be two layers, or even more.” In theory, almost any computation can be performed by one neuron with enough dendrites, each capable of performing its own nonlinear operation.

In the recent paper in the journal Science, researchers took this idea even further. They suggested that a single dendritic compartment could perform these complex operations on its own.
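
As a rough illustration of the two models described above, the following Python sketch contrasts a point neuron with a toy two-layer neuron. The function names, unit weights, and thresholds are assumptions chosen for illustration; they are not taken from the work of Mel, Poirazi, or the other researchers mentioned here.

```python
def point_neuron(inputs, weights, threshold):
    """Point-neuron model: weigh all inputs, sum them, compare with one threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def two_layer_neuron(branches, branch_threshold, soma_threshold):
    """Toy two-layer neuron (hypothetical): each dendritic branch thresholds
    its own inputs, then the cell body sums the branch outputs and applies
    a second threshold."""
    branch_outputs = [1 if sum(branch) >= branch_threshold else 0 for branch in branches]
    return 1 if sum(branch_outputs) >= soma_threshold else 0


# A point neuron with unit weights and threshold 2 behaves like an AND gate.
and_table = {(a, b): point_neuron((a, b), (1, 1), 2) for a in (0, 1) for b in (0, 1)}

# Two active inputs on the same branch versus spread across two branches.
clustered = two_layer_neuron([(1, 1), (0, 0)], branch_threshold=2, soma_threshold=1)
scattered = two_layer_neuron([(1, 0), (1, 0)], branch_threshold=2, soma_threshold=1)
print(and_table, clustered, scattered)
```

In this toy model, two active inputs fire the cell when they arrive on the same branch but not when they are spread across two branches, a distinction that a point neuron weighing all four inputs equally cannot make.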

Unexpected spikes and old obstacles

Matthew Larkum, a neuroscientist at Humboldt, and his team began to study dendrites from a different angle. The activity of dendrites had mainly been studied in rodents, and the researchers wanted to know how signal propagation might differ in human neurons, whose dendrites are much longer. They obtained slices of brain tissue from layers 2 and 3 of the human cortex, which contain especially large neurons with many dendrites. When they began to stimulate these dendrites with electric current, they noticed something strange.

They saw unexpected, repeated spikes that were completely unlike other known neural signals. The spikes were especially fast and brief, like action potentials, and arose from calcium ions. This was noteworthy because conventional action potentials are generated by sodium and potassium ions. And although calcium-induced signals had already been observed in rodent dendrites, those spikes lasted much longer.

Stranger still, increasing the intensity of the electrical stimulation reduced the neurons' response instead of raising it. “Suddenly, we were stimulating more and getting less,” Gidon said. “That got our attention.”

To understand what these new spikes might do, the scientists teamed up with Poirazi and a researcher in her lab in Greece, Athanasia Papoutsi, to create a model that reflects the neurons' behavior.

The model showed that a dendrite spiked in response to either of two inputs separately, but did not spike when the two inputs were combined. This is equivalent to a nonlinear computation known as exclusive OR, or XOR, which yields 1 if one, and only one, of the inputs is 1.

This discovery immediately resonated among computer scientists. For many years, a single neuron was believed to be incapable of computing the XOR function.
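
The XOR behavior can be made concrete with a small Python sketch. It assumes a hypothetical dendritic compartment that responds to moderate input but falls silent when the input is too strong, loosely mirroring the observation that more stimulation produced less response. The function names, the `low` and `high` bounds, and the weight grid are illustrative choices, not values from the study. For comparison, the sketch also searches a coarse grid of weights and thresholds for a conventional threshold unit that reproduces XOR.

```python
from itertools import product

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
XOR = [a ^ b for a, b in INPUTS]


def threshold_unit(a, b, w1, w2, theta):
    """Classic point neuron: fires when the weighted sum reaches the threshold."""
    return 1 if w1 * a + w2 * b >= theta else 0


def dendrite_unit(a, b, low=0.5, high=1.5):
    """Hypothetical dendritic compartment: fires for moderate drive only,
    and falls silent again when the summed input is too strong."""
    drive = a + b
    return 1 if low <= drive < high else 0


# Search a coarse grid of weights and thresholds for a point neuron matching XOR.
grid = [x / 2 for x in range(-6, 7)]  # -3.0, -2.5, ..., 3.0
point_solutions = [
    (w1, w2, theta)
    for w1, w2, theta in product(grid, repeat=3)
    if [threshold_unit(a, b, w1, w2, theta) for a, b in INPUTS] == XOR
]

dendrite_table = [dendrite_unit(a, b) for a, b in INPUTS]
print(len(point_solutions), dendrite_table)  # prints: 0 [0, 1, 1, 0]
```
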
In their 1969 book Perceptrons, computer scientists Marvin Minsky and Seymour Papert offered a proof that single-layer artificial neural networks could not compute XOR. That conclusion was such a blow that many computer scientists attribute to it the stagnation in which neural network research remained until the 1980s.

Neural network researchers eventually found ways to overcome the obstacle that Minsky and Papert had identified, and neuroscientists found examples of those solutions in nature. For example, Poirazi already knew that a single neuron can compute XOR: just two dendrites together are enough. But in the new experiments, she and her colleagues proposed a plausible biophysical mechanism for performing such a computation in a single dendrite.

“For me, this is another degree of flexibility in the system,” Poirazi said. “It shows that the system has many ways of doing its computations.” Still, she points out that if a single neuron can already solve this problem, “why then should the system go to the trouble of creating more complex modules inside the neuron?”

Processors inside processors

Of course, not all neurons are like that. Gidon says that other parts of the brain contain plenty of smaller, point-like neurons. Presumably, this kind of neuronal complexity exists for a reason. So why would parts of a neuron need the capacity to do what a whole neuron, or a small network of neurons, can already do? The obvious possibility is that a neuron behaving like a multilayered network can process more information, and can therefore learn and store more. “Perhaps we have a whole deep network within a single neuron,” Poirazi said. “And that is a much more powerful apparatus for learning difficult problems.”

Perhaps, Kording added, “a single neuron may be able to compute truly complex functions. For example, it might be able to recognize an object all by itself.” Having such powerful individual neurons might also help the brain save energy, according to Poirazi.

The Larkum group plans to look for similar signals in the dendrites of rodents and other animals to determine whether this computational ability is unique to humans. They also want to move beyond the model and relate the observed neural activity to actual behavior. Poirazi hopes to compare the computations in dendrites with what happens in networks of neurons, to understand what advantages the former might offer. This will include testing other logic operations and exploring how they might contribute to learning or memory. “Until we map all of this out, we cannot appreciate the importance of the discovery,” Poirazi said.

Although there is still much work to be done, the researchers believe these discoveries demonstrate the need to rethink how the brain is modeled. Perhaps it is not enough to focus only on the connectivity between neurons and brain regions.

The new results should also raise new questions in machine learning and artificial intelligence.
Artificial neural networks rely on point neurons, treating them as nodes that sum their inputs and pass the sum through an activation function. “Very few people have taken seriously the idea that a single neuron could be a sophisticated computing device,” said Gary Marcus, a cognitive scientist at New York University who has been very skeptical of some of the claims made for deep learning.

Although the Science paper is just one finding in a long history of work demonstrating this idea, computer scientists may respond to it more actively because it deals with the XOR problem, which dogged neural network research for so long. “It is saying that we need to think about this seriously,” Marcus said. “This whole game, getting smart reasoning out of dumb neurons, may be wrong.”

“And it demonstrates that point very clearly,” he added. “It will cut through all the background noise.”

Source: https://habr.com/ru/post/undefined/
