In a previous article, we examined the attention mechanism, an extremely common method in modern deep learning models that can improve the performance indicators of neural machine translation applications. In this article, we will look at Transformer, a model that uses the attention mechanism to increase learning speed. Moreover, for a number of tasks, Transformers outperform Google’s neural machine translation model. However, the biggest advantage of Transformers is their high efficiency in parallelization conditions. Even Google Cloud recommends using Transformer as a model when working on Cloud TPU . Let's try to figure out what the model consists of and what functions it performs.

The Transformer model was first proposed in the article Attention is All You Need . An implementation on TensorFlow is available as part of the Tensor2Tensor package , in addition, a group of NLP researchers from Harvard created a guide annotation of the article with an implementation on PyTorch . In this same guide, we will try to most simply and consistently outline the main ideas and concepts, which, we hope, will help people who do not have a deep knowledge of the subject area to understand this model.

High Level Review

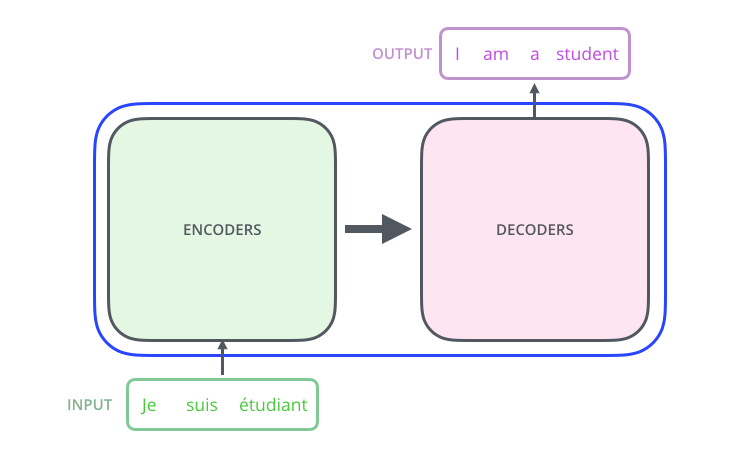

Let's look at the model as a kind of black box. In machine translation applications, it accepts a sentence in one language as input and displays a sentence in another.

, , , .

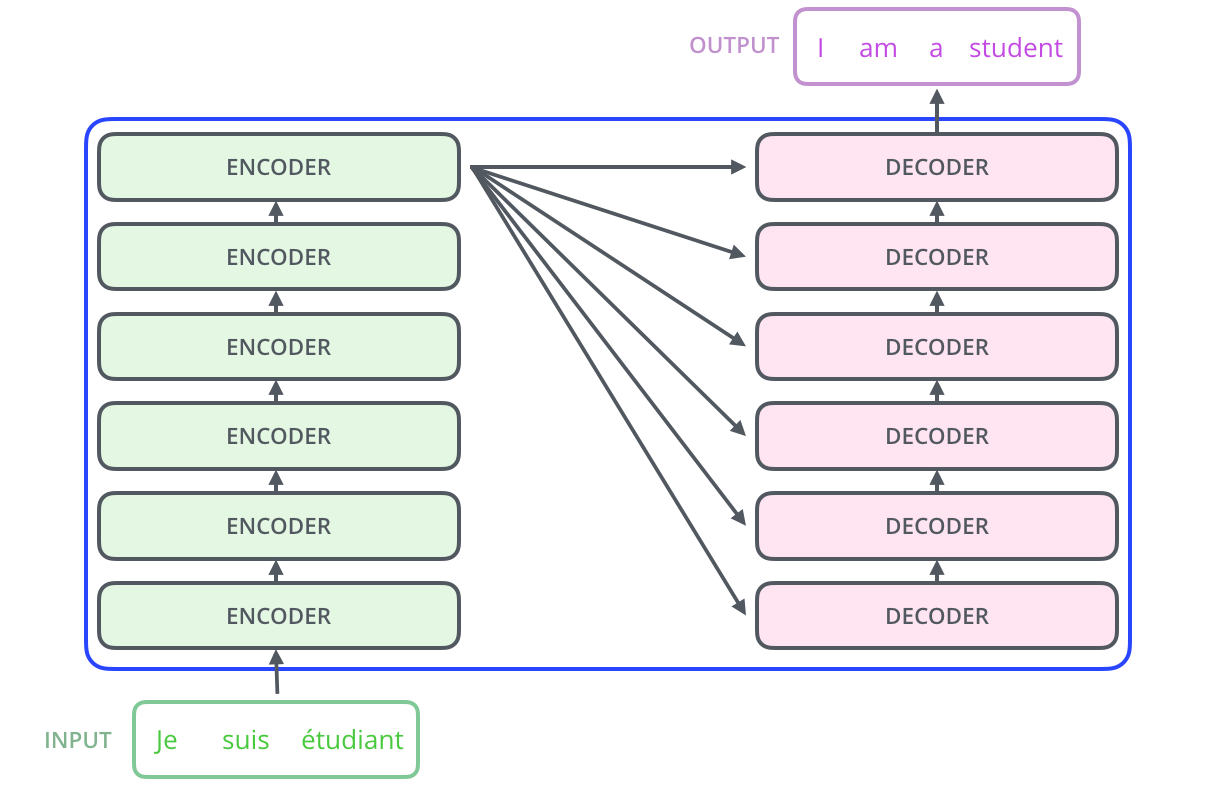

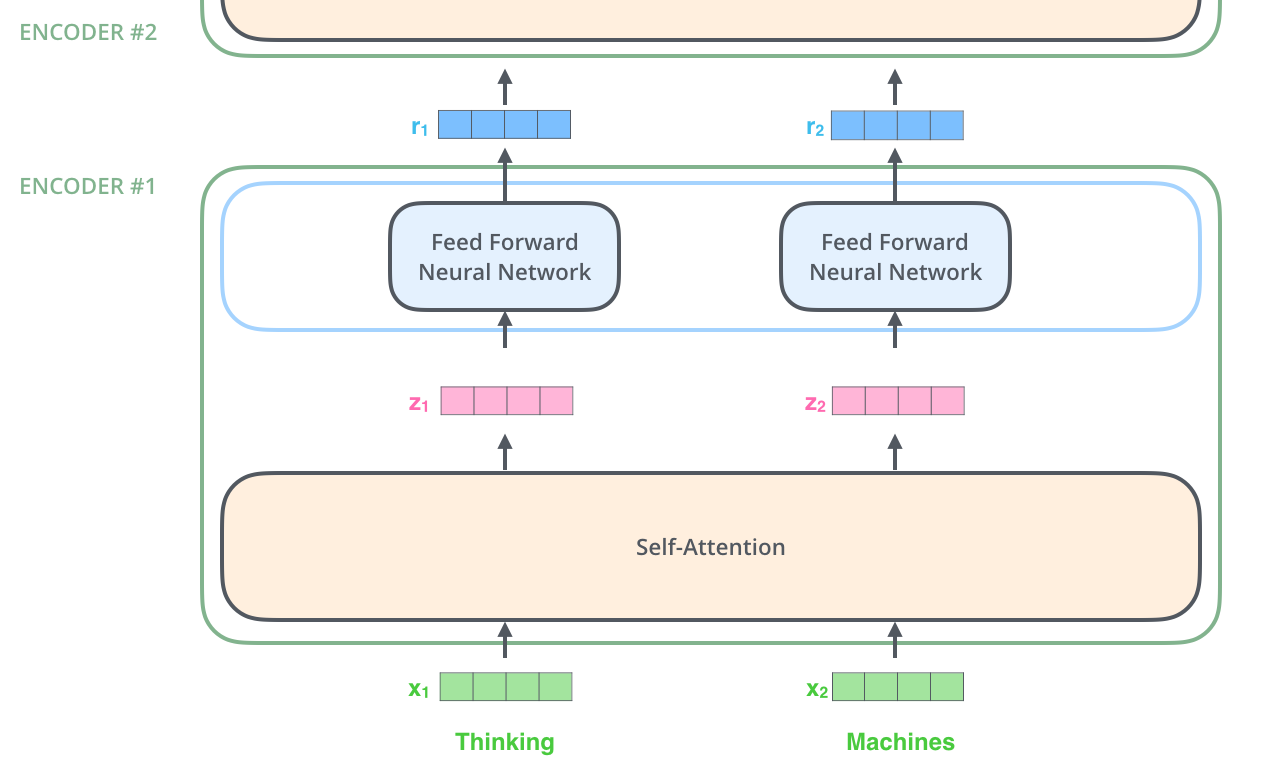

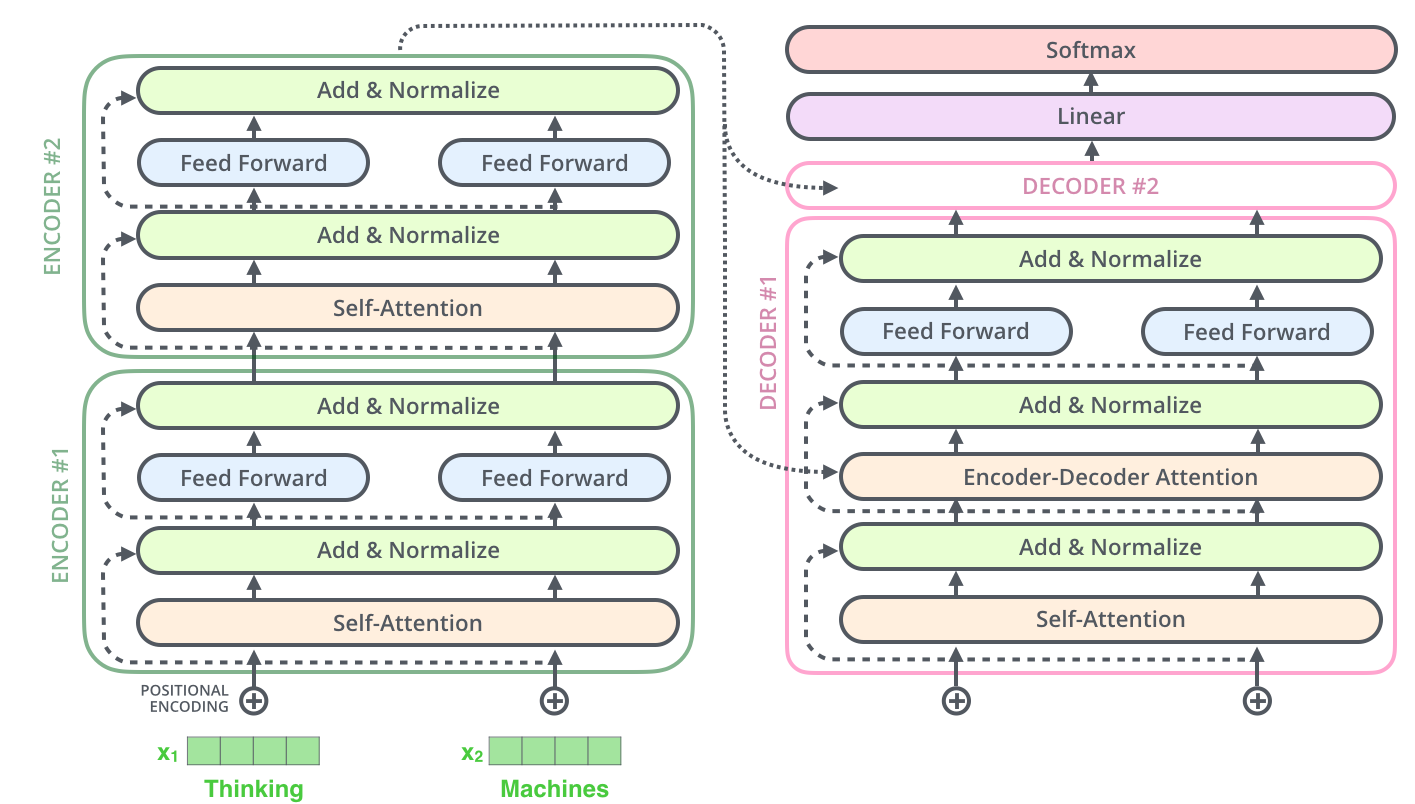

– ; 6 , ( 6 , ). – , .

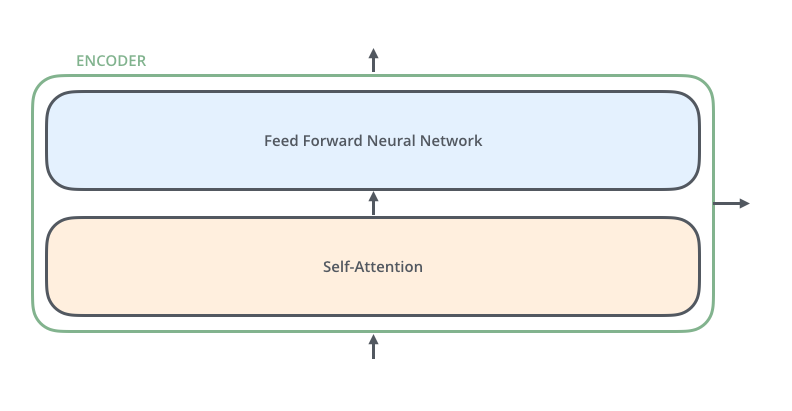

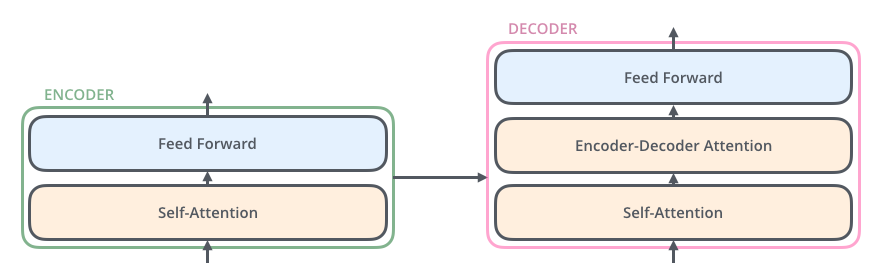

, . :

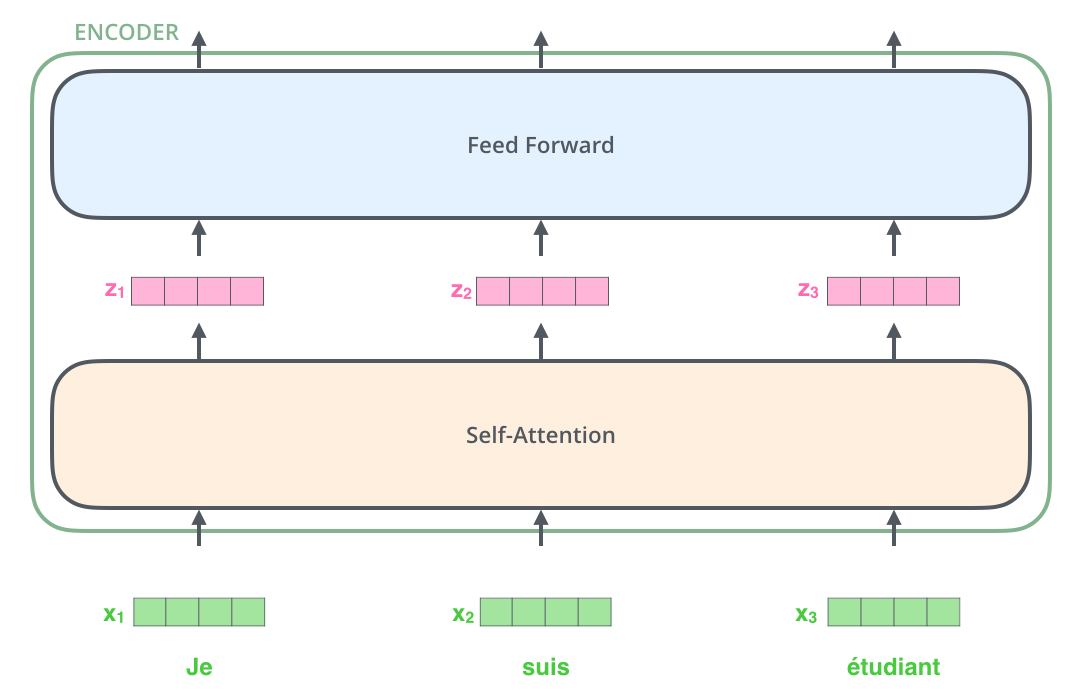

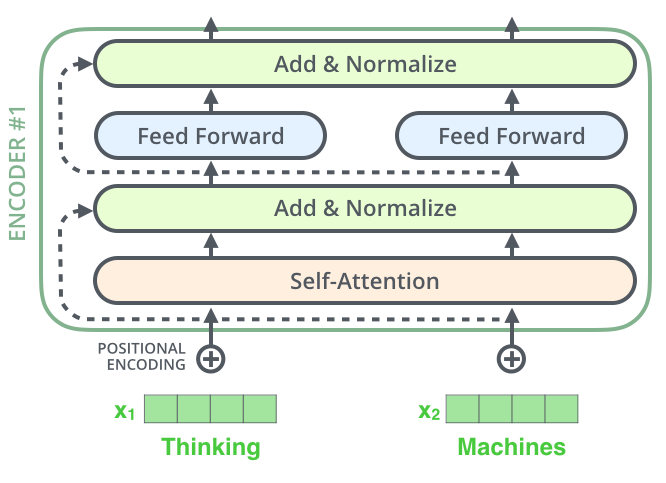

, , (self-attention), . .

(feed-forward neural network). .

, , ( , seq2seq).

, , /, , .

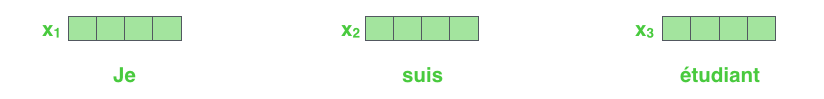

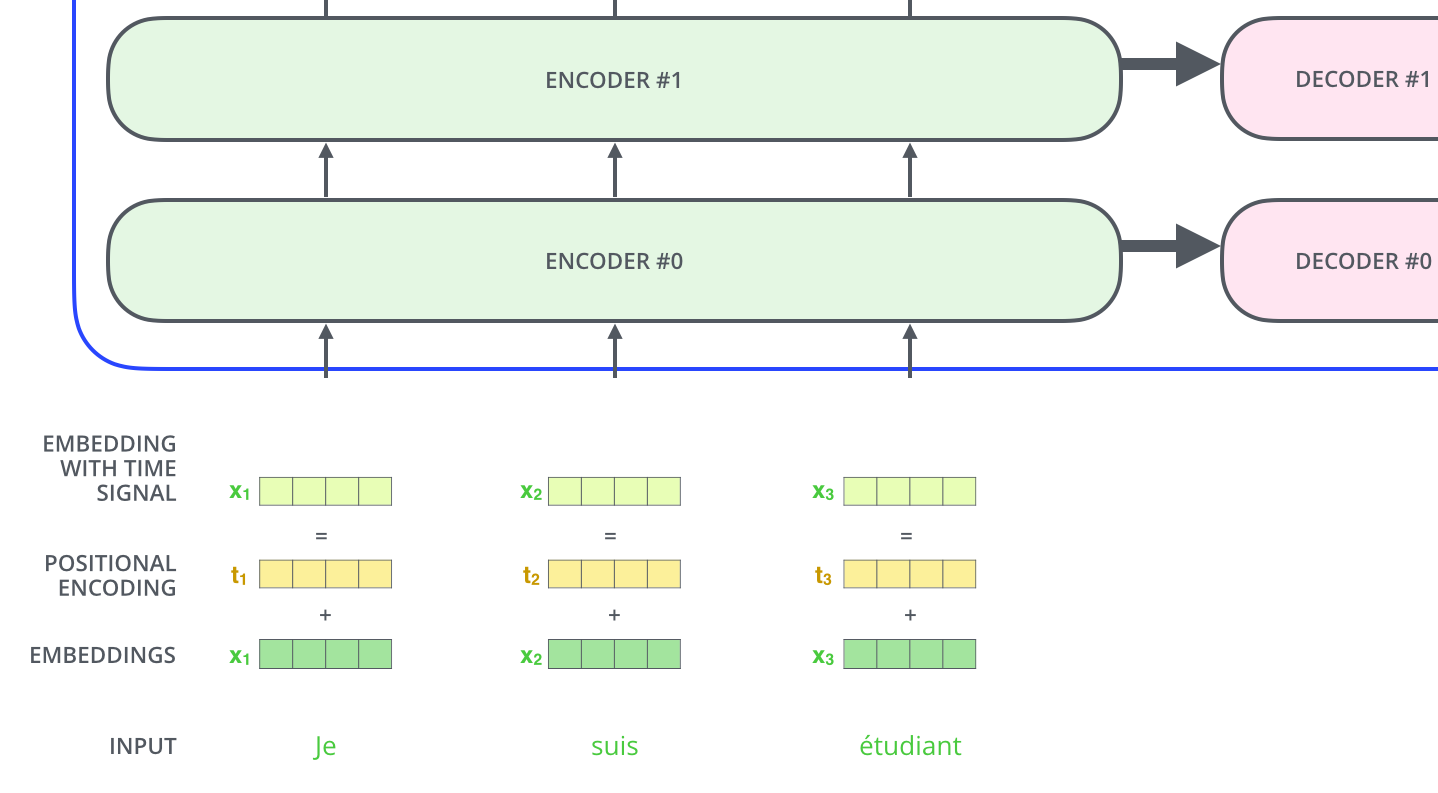

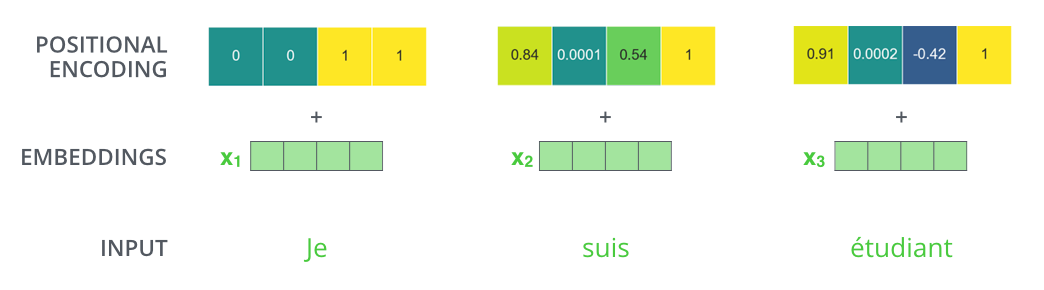

NLP-, , , (word embeddings).

512. .

. , , : 512 ( , – ). , , , , .

, .

: . , , , .

, .

!

, , – , , , .

. , .

, « » -, . , «Attention is All You Need». , .

– , :

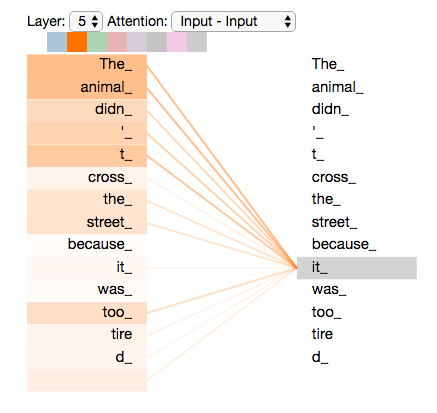

”The animal didn't cross the street because it was too tired”

«it» ? (street) (animal)? .

«it», , «it» «animal».

( ), , .

(RNN), , RNN /, , . – , , «» .

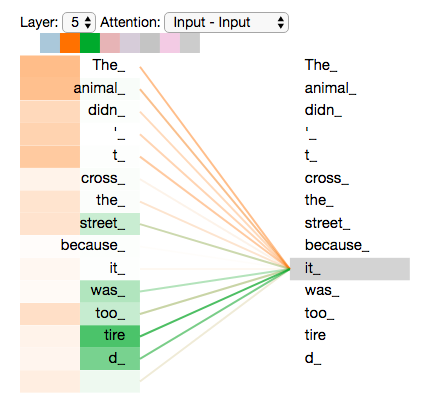

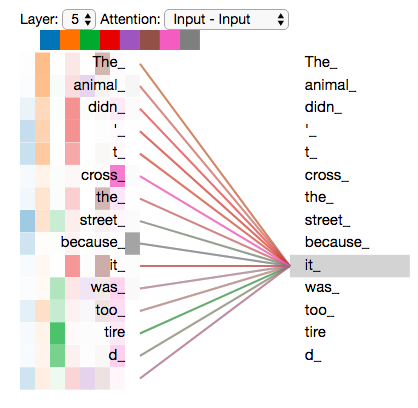

«it» #5 ( ), «The animal» «it».

Tensor2Tensor, , .

, , , .

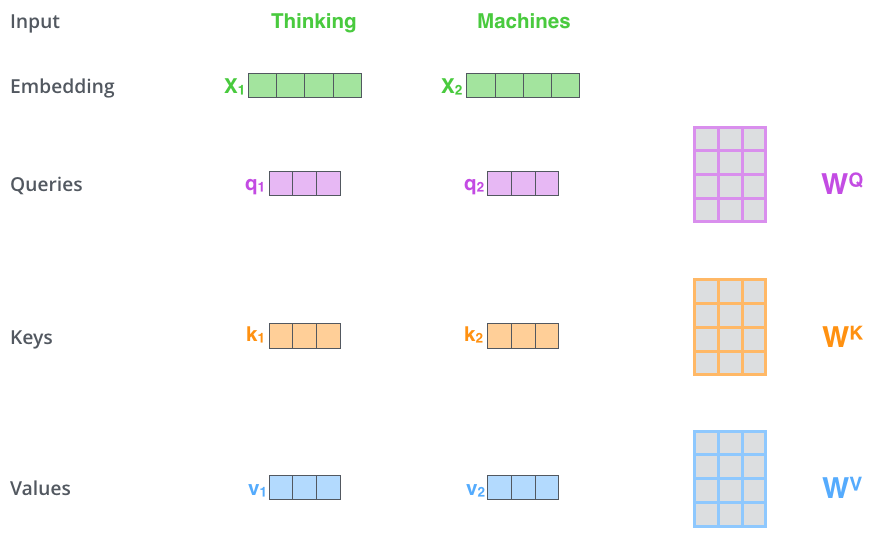

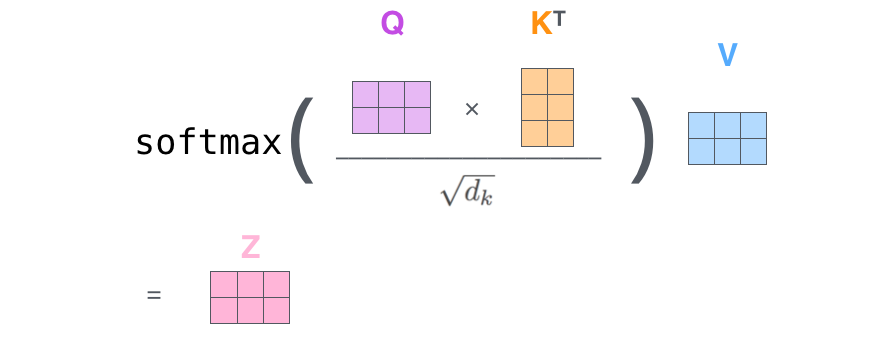

– ( – ): (Query vector), (Key vector) (Value vector). , .

, , . 64, / 512. , (multi-head attention) .

x1 WQ q1, «», . «», «» «» .

«», «» «»?

, . , , , .

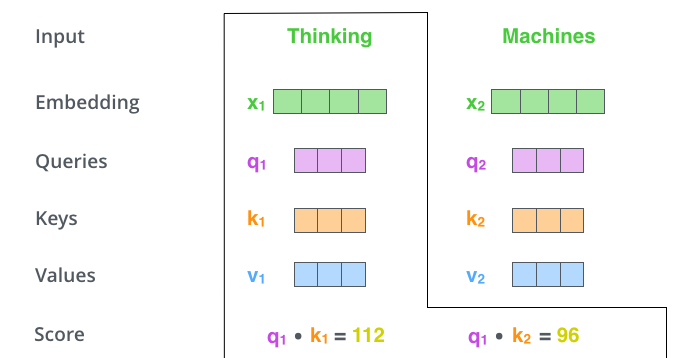

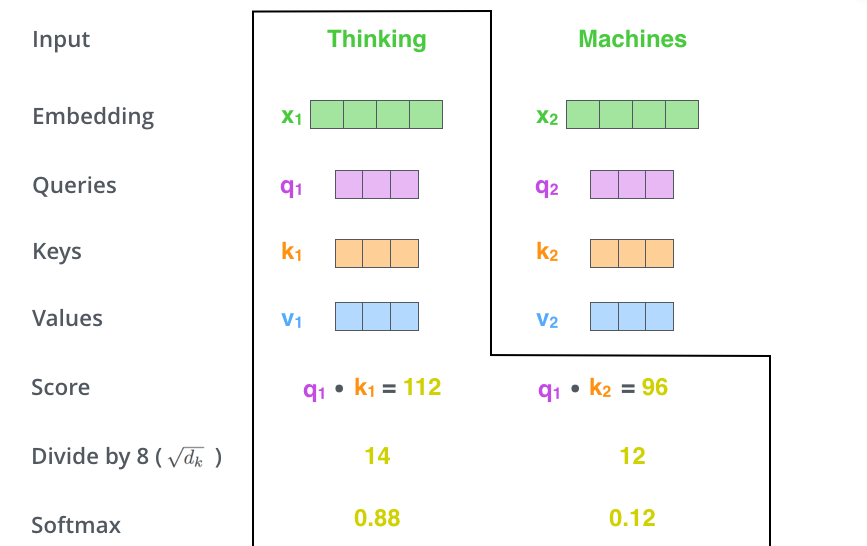

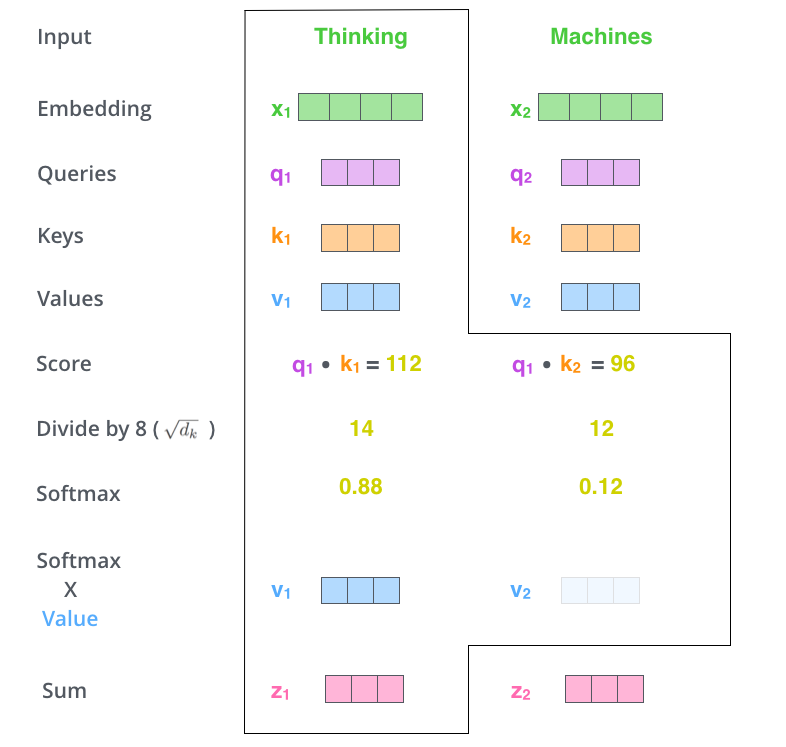

– (score). , – «Thinking». . , .

. , #1, q1 k1, — q1 k2.

– 8 ( , – 64; , ), (softmax). , 1.

- (softmax score) , . , -, , .

– - ( ). : , , ( , , 0.001).

– . ( ).

. , . , , . , , .

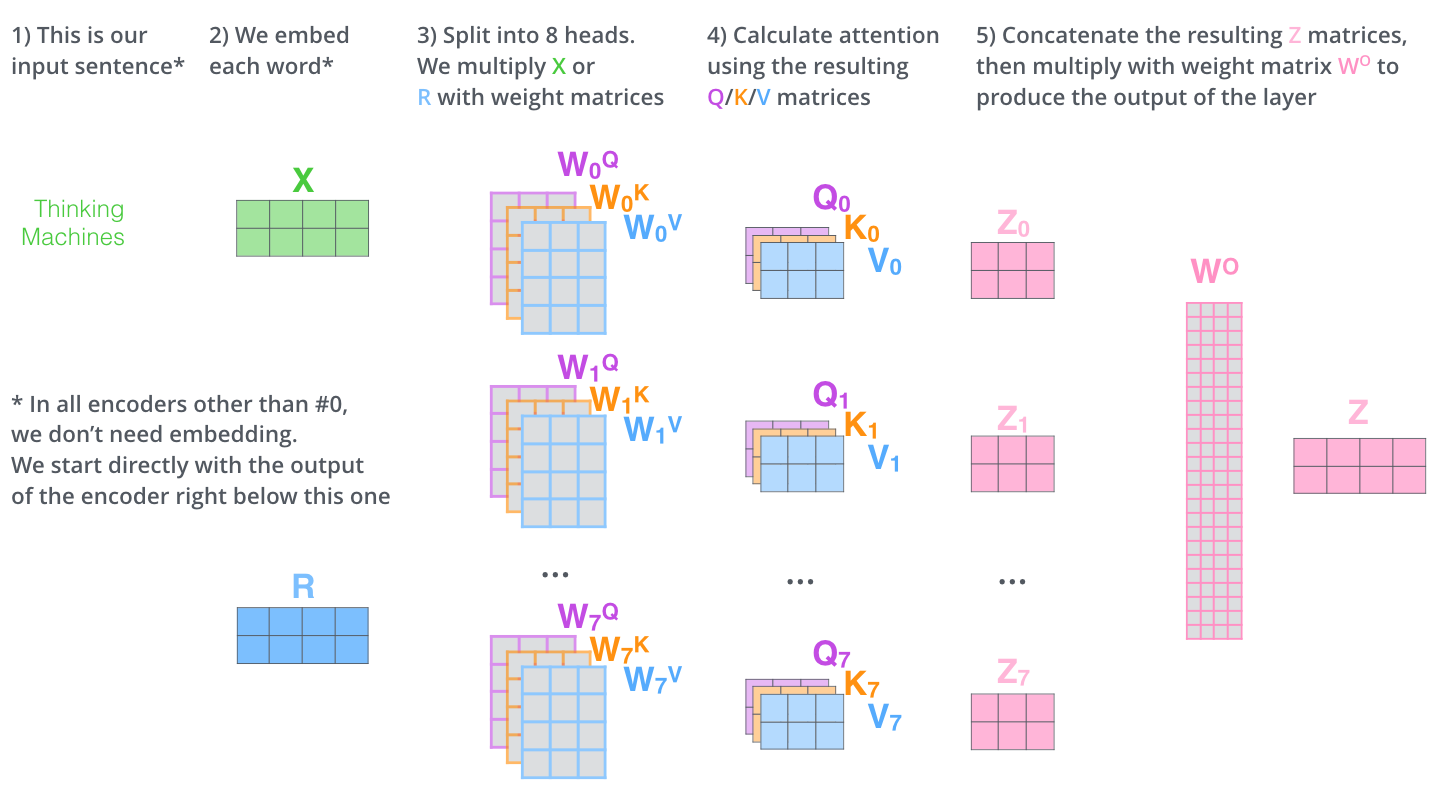

– , . X , (WQ, WK, WV).

. (512, 4 ) q/k/v (64, 3 ).

, , 2-6 .

.

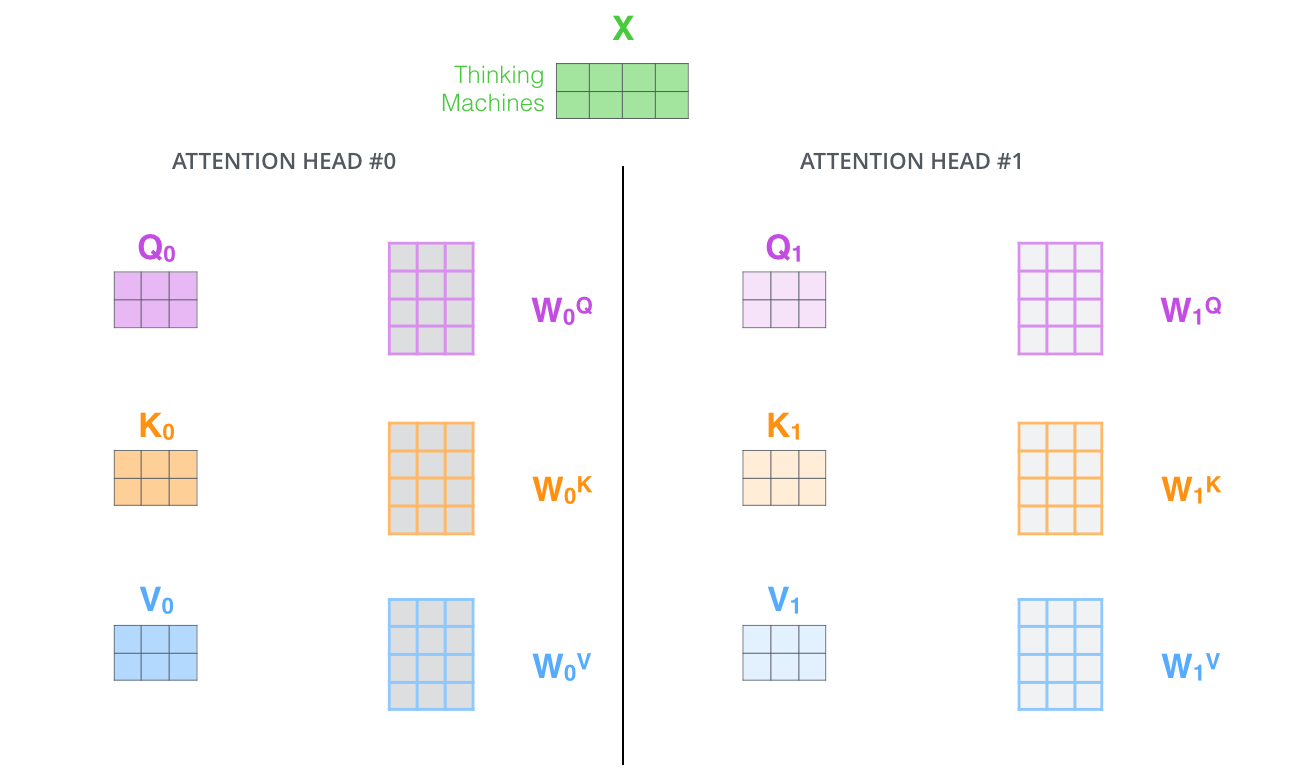

, (multi-head attention). :

- . , , z1 , . «The animal didn’t cross the street because it was too tired», , «it».

- « » (representation subspaces). , , // ( 8 «» , 8 /). . ( /) .

, WQ/WK/WV «», Q/K/V . , WQ/WK/WV Q/K/V .

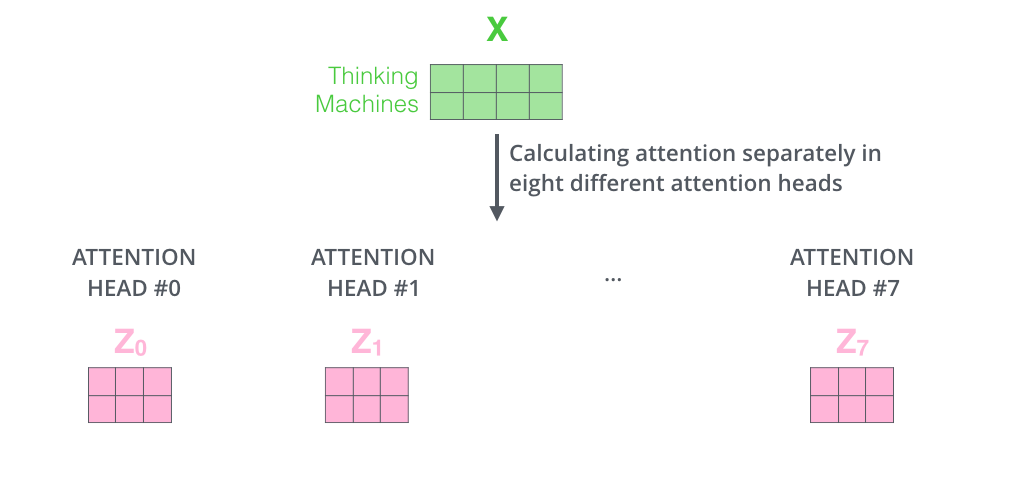

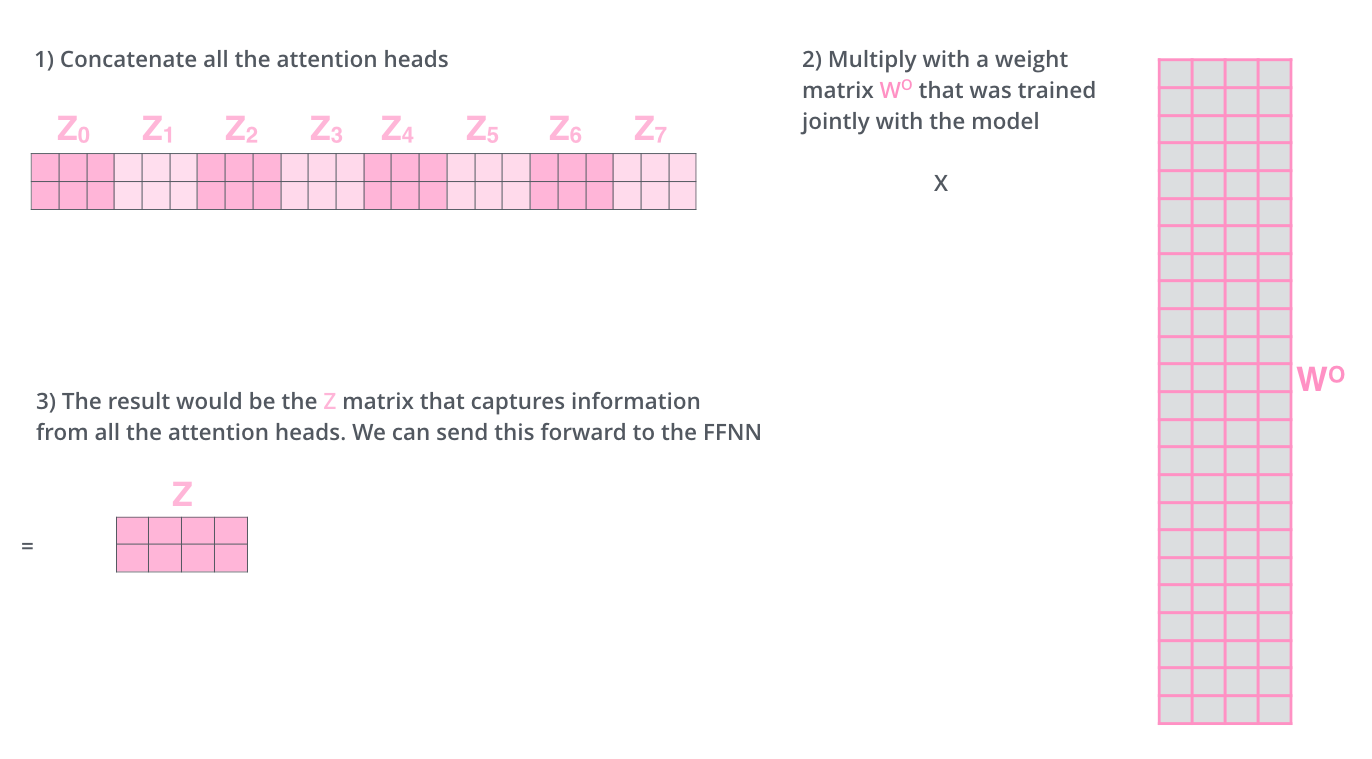

, , 8 , 8 Z .

. , 8 – ( ), Z .

? WO.

, , . , . , .

, «» , , , «» «it» :

«it», «» «the animal», — «tired». , «it» «animal» «tired».

«» , , .

— .

. , . , Q/K/V .

, , , .

, 4, :

?

: , , , — .. 512 -1 1. , .

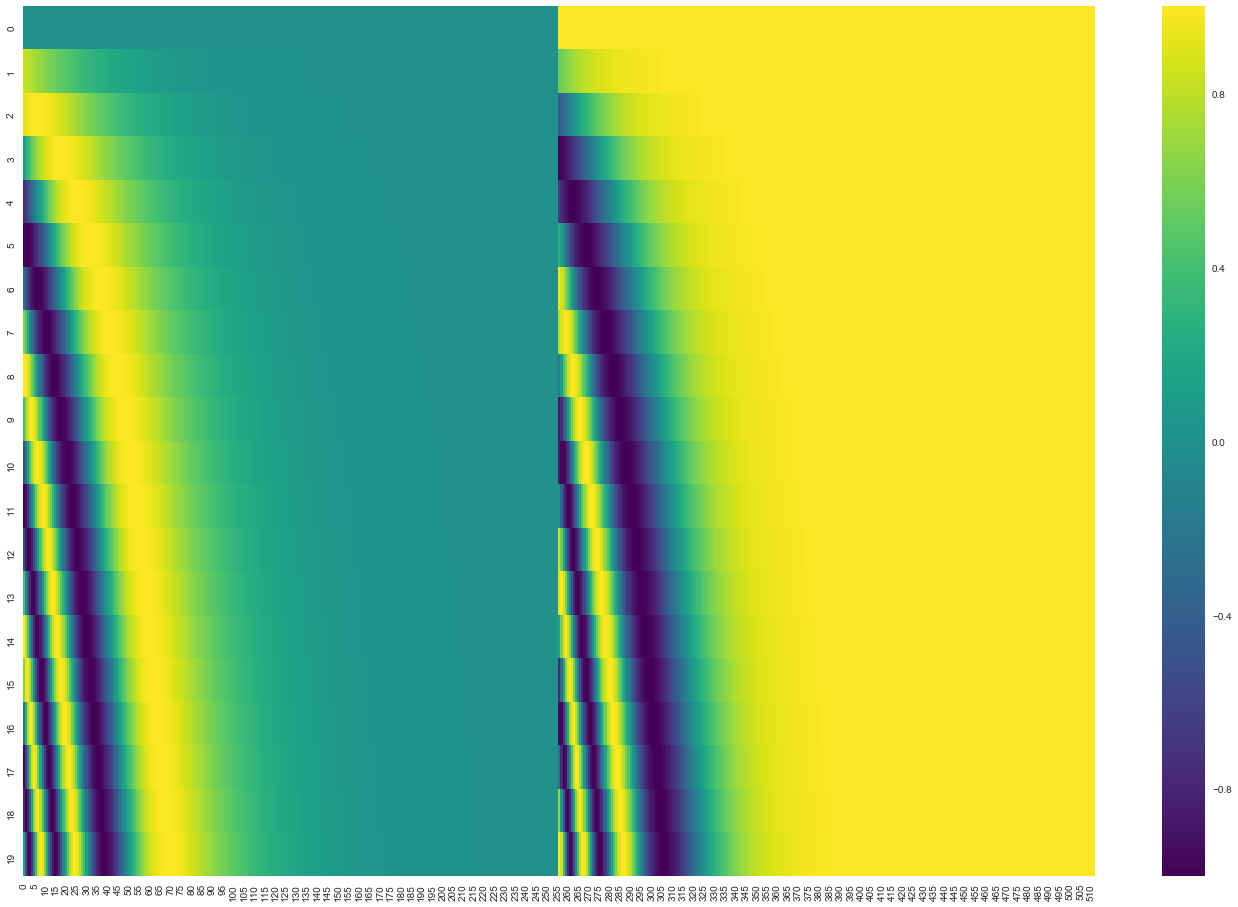

20 () 512 (). , : ( ), – ( ). .

( 3.5). get_timing_signal_1d(). , , (, , , ).

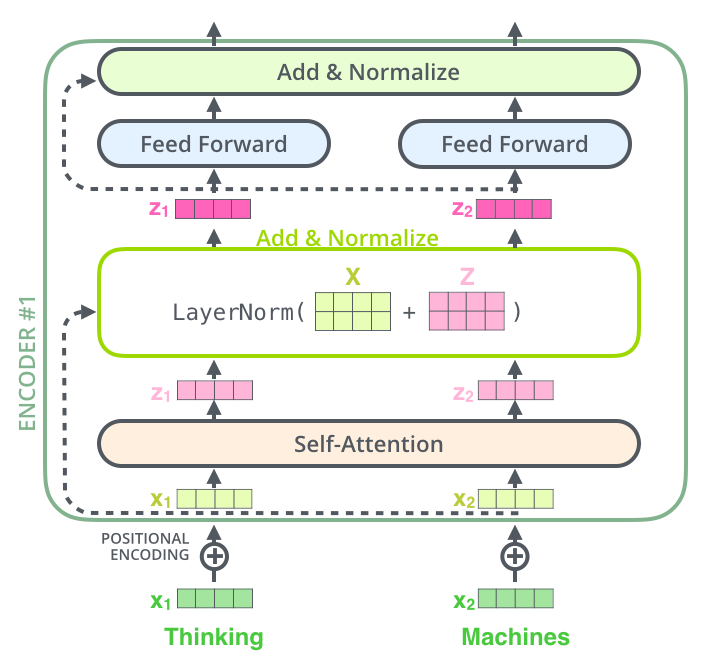

, , , , ( , ) , (layer-normalization step).

, , :

. , :

, , , . , .

. K V. «-» , :

. ( – ).

, , . , , . , , , .

.

. ( –inf) .

«-» , , , , .

. ? .

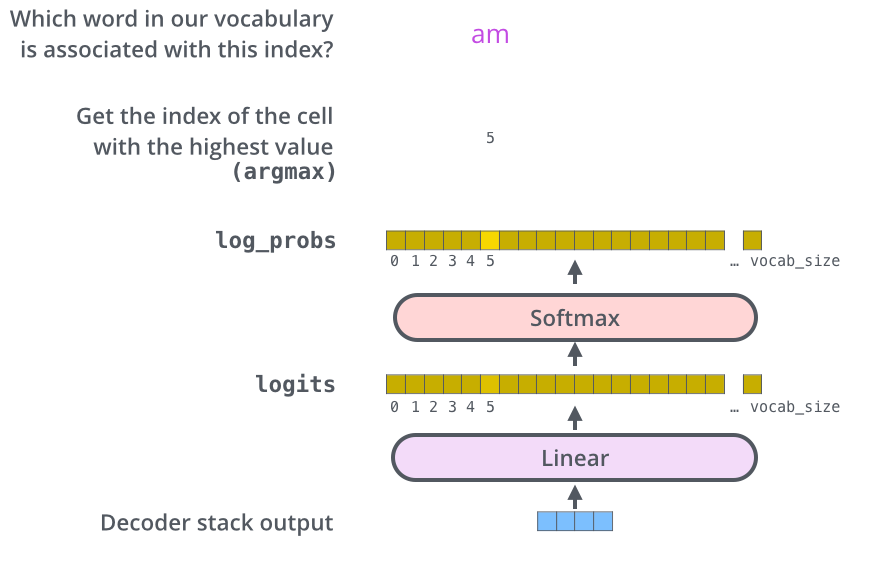

– , , , , (logits vector).

10 (« » ), . , 10 000 – . .

( , 1). .

, , .

, , , , .

, . .. , .

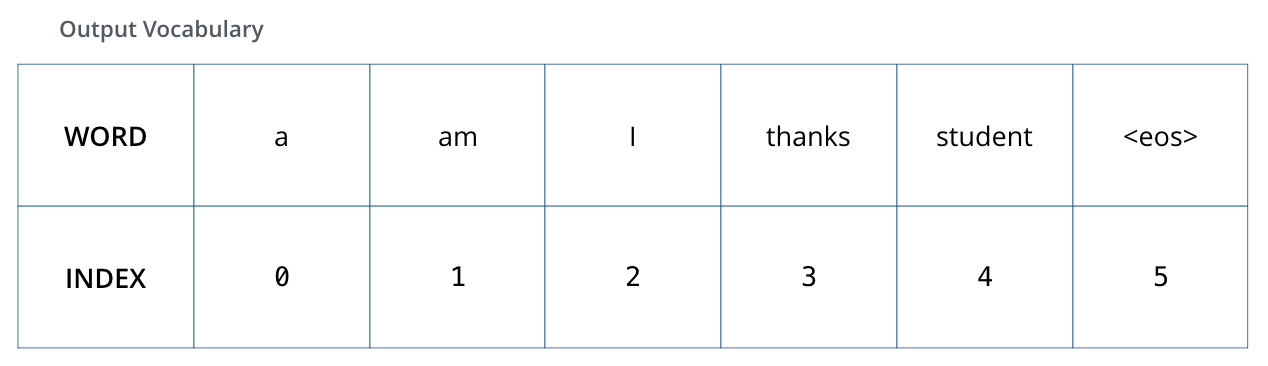

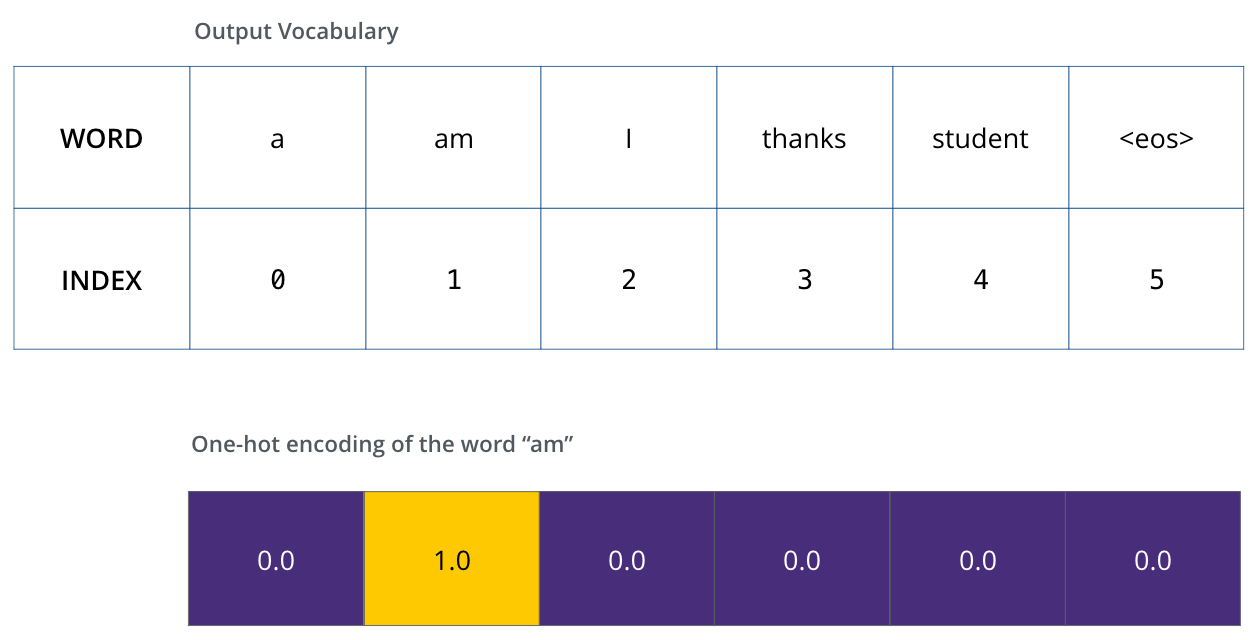

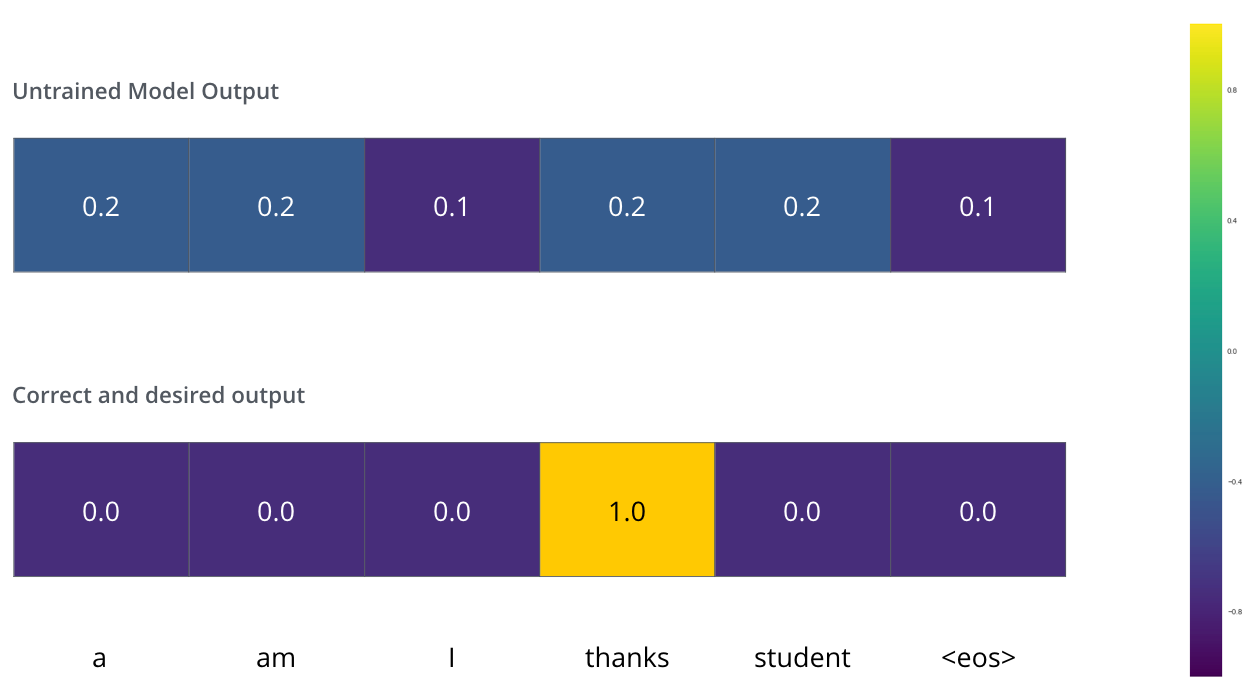

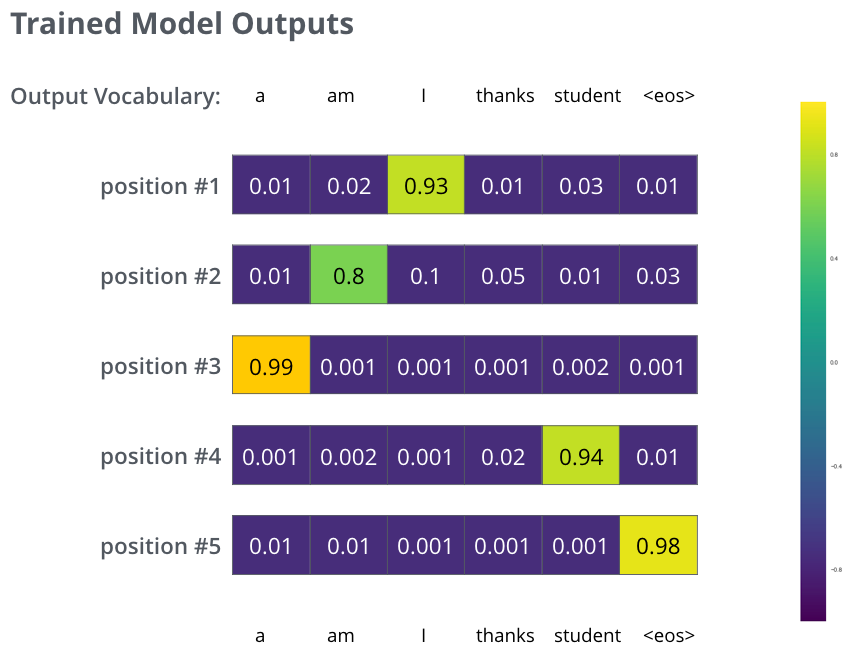

, 6 («a», «am», «i», «thanks», «student» «<eos>» (« »).

.

, (, one-hot-). , «am», :

: one-hot- .

(loss function) – , , , .

, . – «merci» «thanks».

, , , «thanks». .. , .

() , /. , , , .

? . , . -.

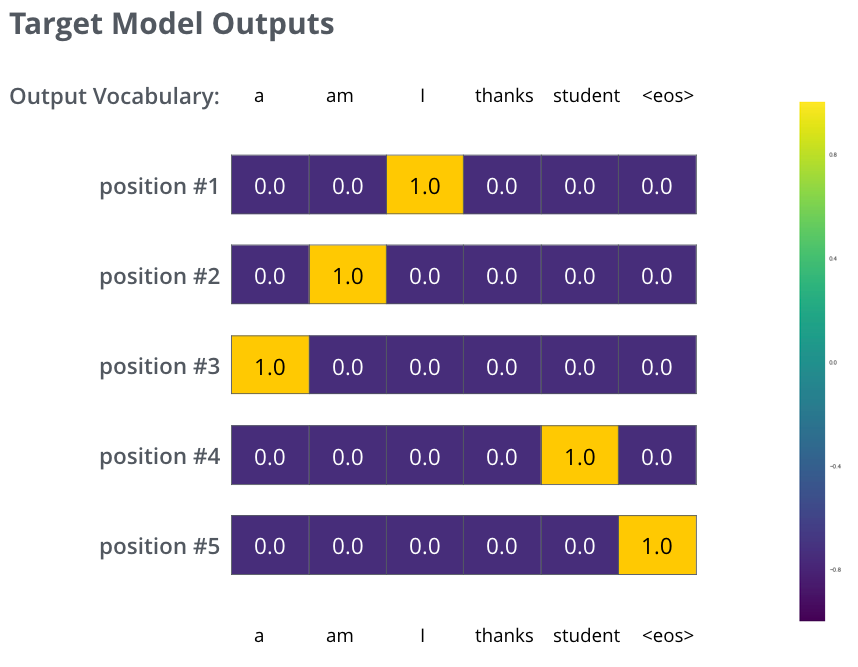

, . . , «je suis étudiant» – «I am a student». , , , :

- (6 , – 3000 10000);

- , «i»;

- , «am»;

- .. , .

, :

, . , , (.: ). , , , – , .

, , , , . , (greedy decoding). – , , 2 ( , «I» «a») , , : , «I», , , «a». , , . #2 #3 .. « » (beam search). (beam_size) (.. #1 #2), - (top_beams) ( ). .

, . , :

:

Authors