Hello, Habr! I present to you the translation of the article "Visualizing A Neural Machine Translation Model (Mechanics of Seq2seq Models With Attention)" by Jay Alammar.

Sequence-to-sequence (seq2seq) models are deep learning models that have achieved great success in tasks such as machine translation, text summarization, and image captioning. For example, in late 2016 such a model was built into Google Translate. The foundations of seq2seq models were laid in 2014 by two papers: Sutskever et al., 2014 and Cho et al., 2014.

To understand these models well enough to use them, a few concepts need to be clarified first. The visualizations in this article are meant as a good complement to the papers mentioned above.

A sequence-to-sequence model takes a sequence of elements (words, letters, image features, etc.) as input and returns another sequence of elements. A trained model works as follows:

In neural machine translation, the input sequence is a series of words, processed one after another. The output is likewise a series of words:

Take a look under the hood

Under the hood, the model consists of an encoder and a decoder.

The encoder processes each element of the input sequence and compiles the information it captures into a vector called the context. After processing the entire input sequence, the encoder sends the context to the decoder, which then begins to generate the output sequence element by element.

The same thing happens with machine translation.

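In machine translation the context is a vector (an array of numbers), and the encoder and decoder, in turn, are most often recurrent neural networks (for an introduction to RNNs, see A friendly introduction to Recurrent Neural Networks).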

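The context is a vector of floating-point numbers. Later in the article, vectors are visualized in color, with brighter colors assigned to cells with higher values.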

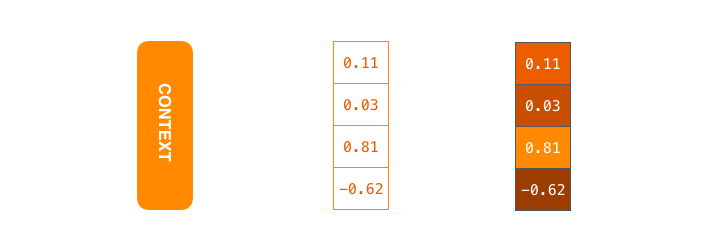

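The size of the context vector can be set when the model is defined: it is the number of hidden units in the encoder RNN. These visualizations show a vector of size 4, but in real applications the context vector has a size such as 256, 512, or 1024.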

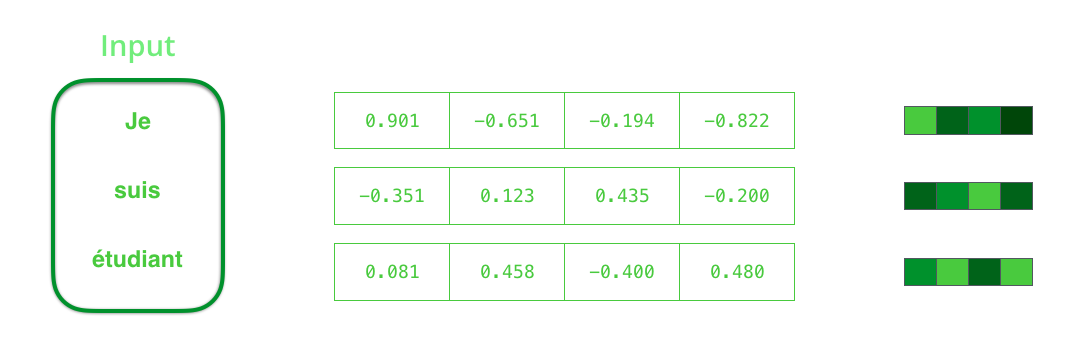

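By design, an RNN takes two inputs at each time step: an input element (in the case of the encoder, one word of the input sentence) and a hidden state. The word, however, must be represented as a vector. To turn a word into a vector, we use a class of methods called "word embeddings". They map words into a vector space that captures much of their meaning and semantic information (for example, "king" - "man" + "woman" = "queen").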

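Before the input words can be processed, they have to be turned into vectors. This is done with a word embedding algorithm. We can use pre-trained embeddings or train our own on our dataset. Embedding vectors of size 200-300 are typical; for simplicity, the vectors shown here have size 4.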
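The embedding lookup can be sketched in a few lines. This is a minimal illustration, not the method of any particular library: the three-word vocabulary, the `embed` helper, and the random matrix values are all assumptions made for the example, and a real model would learn the rows or load pre-trained ones.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

EMBEDDING_SIZE = 4  # toy size used in the figures; 200-300 is typical in practice

# Hypothetical three-word vocabulary: word -> row index in the matrix.
vocab = {"je": 0, "suis": 1, "étudiant": 2}

# One row per word. The rows are random here; a real model would learn
# them during training or load pre-trained embeddings.
embedding_matrix = [
    [random.uniform(-1.0, 1.0) for _ in range(EMBEDDING_SIZE)]
    for _ in vocab
]

def embed(sentence):
    """Map a list of words to a list of embedding vectors (row lookup)."""
    return [embedding_matrix[vocab[word]] for word in sentence]

vectors = embed(["je", "suis", "étudiant"])
```

Each word becomes one vector of size 4, and these vectors are what the encoder consumes one time step at a time.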

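Now that we have introduced the main vectors/tensors, let's recap how an RNN works and set up a visual language for describing these models: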

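At the next step, the RNN takes the second input vector and hidden state #1 to produce the output of that time step. Later in the article, a similar animation is used to describe the vectors inside a neural machine translation model.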

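In the following visualization, each pulse of the encoder or decoder is that RNN processing its inputs and generating an output for one time step. Since both the encoder and the decoder are RNNs, at every time step the network does some processing and updates its hidden state based on the current input and the inputs it has seen before.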

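Looking at the encoder's hidden states, note that the last hidden state is in fact the context that is passed to the decoder. (The decoder also maintains a hidden state that it passes from one time step to the next; it is simply not shown in this visualization.)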
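The encoder mechanics can be sketched as follows: one RNN step combines the current input vector with the previous hidden state, and the last hidden state serves as the context. This is a rough sketch that assumes a vanilla (Elman) RNN cell with `tanh` and random, untrained weights; the article does not say which cell type is used, and the names `rnn_step`, `encode`, `W_xh`, and `W_hh` are hypothetical.

```python
import math
import random

random.seed(1)

INPUT_SIZE = 4   # embedding size used in the figures
HIDDEN_SIZE = 4  # context size in the figures; 256-1024 in real models

def random_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

# Hypothetical, untrained parameters of a vanilla RNN cell (an assumption).
W_xh = random_matrix(HIDDEN_SIZE, INPUT_SIZE)
W_hh = random_matrix(HIDDEN_SIZE, HIDDEN_SIZE)
b_h = [0.0] * HIDDEN_SIZE

def rnn_step(x, h_prev):
    """One time step: combine the input vector with the previous hidden state."""
    from_input = matvec(W_xh, x)
    from_hidden = matvec(W_hh, h_prev)
    return [math.tanh(a + b + c) for a, b, c in zip(from_input, from_hidden, b_h)]

def encode(input_vectors):
    """Run the RNN over the sequence; return all hidden states and the context."""
    h = [0.0] * HIDDEN_SIZE  # initial hidden state
    hidden_states = []
    for x in input_vectors:
        h = rnn_step(x, h)
        hidden_states.append(h)
    # Without attention, only the last hidden state is handed to the decoder.
    return hidden_states, hidden_states[-1]

# Three stand-in word embeddings (random, for illustration only).
inputs = [[random.uniform(-1.0, 1.0) for _ in range(INPUT_SIZE)] for _ in range(3)]
hidden_states, context = encode(inputs)
```

Whatever the length of the input sentence, `context` keeps the same fixed size, which is the property the attention mechanism later addresses.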

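Let's now look at another way to visualize a sequence-to-sequence model. This animation should make it easier to read the static graphics that describe these models: it is the so-called unrolled view, where instead of a single decoder we show a copy of it for each time step. This way we can look at the inputs and outputs of every time step.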
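The unrolled view corresponds to a simple loop over time steps. The sketch below is only an illustration under several assumptions: a vanilla RNN decoder with random, untrained weights, a hypothetical five-word vocabulary, greedy word selection, and `<END>` used as both the first input and the stop signal. Because the weights are untrained, the generated words are meaningless; only the control flow matters.

```python
import math
import random

random.seed(3)

HIDDEN_SIZE = 4
VOCAB = ["<END>", "I", "am", "a", "student"]  # hypothetical output vocabulary
MAX_STEPS = 6  # safety cap: untrained weights may never emit <END>

def random_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

# Hypothetical, untrained decoder parameters.
W_eh = random_matrix(HIDDEN_SIZE, len(VOCAB))   # previous word (one-hot) -> hidden
W_hh = random_matrix(HIDDEN_SIZE, HIDDEN_SIZE)  # previous hidden -> hidden
W_out = random_matrix(len(VOCAB), HIDDEN_SIZE)  # hidden -> one score per word

def decoder_step(prev_word_id, h_prev):
    """One unrolled copy of the decoder: previous word + hidden state -> next word."""
    one_hot = [1.0 if i == prev_word_id else 0.0 for i in range(len(VOCAB))]
    h = [math.tanh(a + b)
         for a, b in zip(matvec(W_eh, one_hot), matvec(W_hh, h_prev))]
    scores = matvec(W_out, h)
    word_id = max(range(len(VOCAB)), key=lambda i: scores[i])  # greedy choice
    return word_id, h

def decode(context):
    """Generate words step by step, starting from the encoder's context."""
    h = context
    word_id = VOCAB.index("<END>")  # first input token
    steps = []
    for t in range(MAX_STEPS):
        word_id, h = decoder_step(word_id, h)
        steps.append((t + 1, VOCAB[word_id]))
        if VOCAB[word_id] == "<END>":
            break
    return steps

steps = decode([0.1, -0.2, 0.3, 0.0])  # stand-in context vector
```

Each tuple in `steps` is one column of the unrolled diagram: the time step and the word produced at it.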

Let's pay attention now!

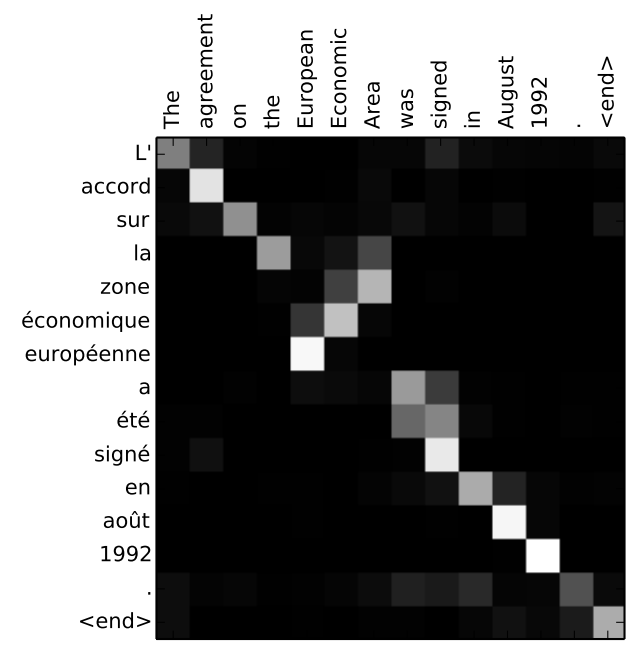

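As it turned out, the context vector is a bottleneck for models of this type, making it hard for them to handle long sentences. A solution was proposed in Bahdanau et al., 2014 and Luong et al., 2015, which introduced and refined a technique called "attention". Attention greatly improves the quality of machine translation systems by letting the model focus on the relevant parts of the input sequence as needed.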

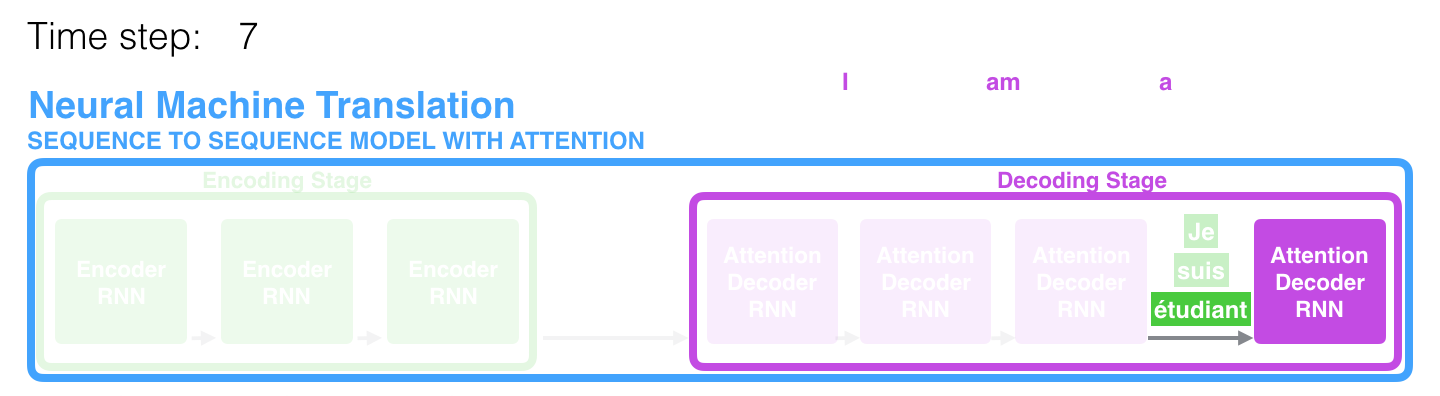

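At time step 7, the attention mechanism lets the decoder focus on the word "étudiant" ("student" in French) before it generates the English translation. This ability to amplify the signal from the relevant part of the input sequence is what lets attention models produce better results than models without attention.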

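Staying at this high level of abstraction, a model with attention differs from the classic sequence-to-sequence model in two main ways.

First, the encoder passes much more data to the decoder: instead of only the last hidden state, the encoder passes all of its hidden states:

Second, the attention decoder performs an extra step before producing its output. To focus on the parts of the input that are relevant to the current decoding time step, the decoder does the following: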

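- looks at the set of encoder hidden states it received, each of which is most closely associated with a particular word of the input sentence;

- gives each hidden state a score (we ignore how the scoring is done for now);

- multiplies each hidden state by its softmax-normalized score, thereby amplifying hidden states with high scores and drowning out hidden states with low scores.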

This "scoring exercise" is repeated at every time step on the decoder side.
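The score, softmax, and weighting steps can be sketched as below. The article deliberately leaves the scoring function unspecified, so the dot product used here is an assumption (one of several possible scoring functions), as are the helper names and the toy vectors. Summing the weighted hidden states into a single context vector is the usual final step and is included for completeness.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(decoder_hidden, encoder_hidden_states):
    # 1. Score every encoder hidden state against the decoder state
    #    (assumption: plain dot-product scoring).
    scores = [dot(decoder_hidden, h) for h in encoder_hidden_states]
    # 2. Normalize the scores with softmax.
    weights = softmax(scores)
    # 3. Multiply each hidden state by its weight and sum the results.
    size = len(decoder_hidden)
    context = [
        sum(w * h[i] for w, h in zip(weights, encoder_hidden_states))
        for i in range(size)
    ]
    return weights, context

# Toy encoder states, one per input word (made-up values).
encoder_hidden_states = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
decoder_hidden = [0.0, 0.0, 5.0, 0.0]  # most similar to the third state

weights, context = attend(decoder_hidden, encoder_hidden_states)
```

With these toy values almost all of the weight lands on the third encoder state, so the context vector is dominated by it while the other two states are nearly drowned out.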

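Now let's bring everything together in the following visualization and look at how the attention process works: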

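- The attention decoder RNN takes in the embedding of the <END> token and an initial decoder hidden state.

- The RNN processes its inputs, producing an output and a new hidden state vector (h4). The output is discarded.

- The attention step: the encoder hidden states and the vector h4 are used to compute a context vector (C4) for this time step.

- The vectors h4 and C4 are concatenated into one vector.

- This vector is passed through a feedforward neural network (FFN) that is trained jointly with the model.

- The output of the FFN indicates the output word of this time step.

- The process is repeated for the next time steps.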
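One decoding step with attention, from h4 to an output word, can be sketched as follows. This is an illustration under stated assumptions: the RNN update that produces h4 is skipped and h4 is given directly, scoring uses a dot product, the feedforward network is a single untrained linear layer followed by softmax, and the vocabulary, weights, and function names are hypothetical.

```python
import math
import random

random.seed(2)

HIDDEN_SIZE = 4
VOCAB = ["I", "am", "a", "student", "<END>"]  # hypothetical output vocabulary

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(matrix, vector):
    return [dot(row, vector) for row in matrix]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical, untrained feedforward layer: [h4; C4] -> one score per word.
W_out = [[random.uniform(-0.5, 0.5) for _ in range(2 * HIDDEN_SIZE)]
         for _ in VOCAB]

def attention_context(h_dec, encoder_states):
    """Attention step: weighted sum of encoder states (dot-product scores)."""
    weights = softmax([dot(h_dec, h) for h in encoder_states])
    return [sum(w * h[i] for w, h in zip(weights, encoder_states))
            for i in range(len(h_dec))]

def decoder_output(h4, encoder_states):
    c4 = attention_context(h4, encoder_states)  # context vector C4
    combined = h4 + c4                          # concatenate h4 and C4
    probs = softmax(matvec(W_out, combined))    # feedforward layer + softmax
    best = max(range(len(VOCAB)), key=lambda i: probs[i])
    return VOCAB[best], probs

encoder_states = [[random.uniform(-1.0, 1.0) for _ in range(HIDDEN_SIZE)]
                  for _ in range(3)]
h4 = [0.2, -0.1, 0.4, 0.3]  # stand-in for the decoder's new hidden state

word, probs = decoder_output(h4, encoder_states)
```

In a trained model the same computation is repeated at every time step, with the chosen word fed back in as the next decoder input.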

Here is another way to see which part of the input sentence receives attention at each decoding step:

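Note that the model does not mindlessly align the first output word with the first input word. During training it actually learned how to align words in this language pair (French and English in our example). An example of how precise this mechanism can be is given in the attention papers mentioned above.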

If you feel ready to study an implementation, see the Neural Machine Translation (seq2seq) tutorial for TensorFlow.