3D , 3D . , , . , , , . , 3D ML , .

.

, , .

IT- “VR/AR & AI” — PHYGITALISM.

, [1] . . , , :

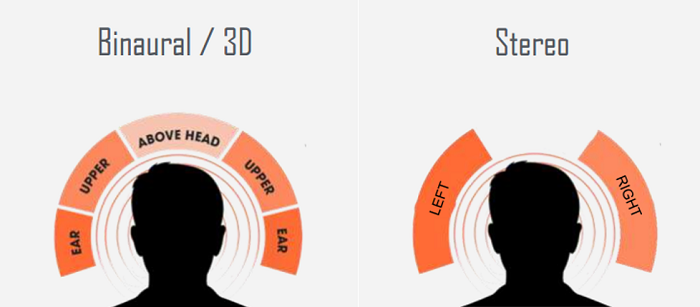

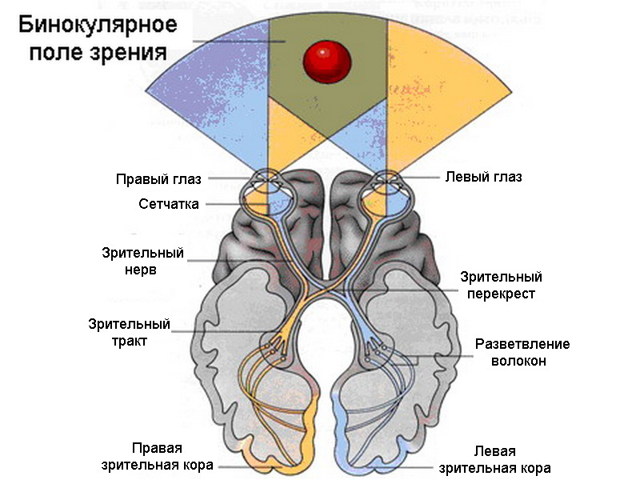

, “” “”. , , , .

. , . , , , , .

, . , , , . , . , , . , , , .

, , , . , , RGB-D , Intel Realsense Microsoft Azure Kinect, RGB-D [ (RGB + (depth map)], , , . , , .

, :

, -, . : , ? , , , RGB , ?

. “ ” (black box), , , / , , , 3D ML (three dimensional data machine learning problems) , Geometrical deep learning, .

, 3D ML :

- ( 3D ),

- 3D ( )

- 3D RGB (2D-to-3D),

.

Fig. 1. 3D ML, IDEF0 scheme.

3D ML . , . , / , 3D . [2] — , ( 3D ).

, , “Characterizing Structural Relationships in Scenes Using Graph Kernels” [3] , “Fast human pose estimation using 3D Zernike descriptors” [4] . 3D ML, . .

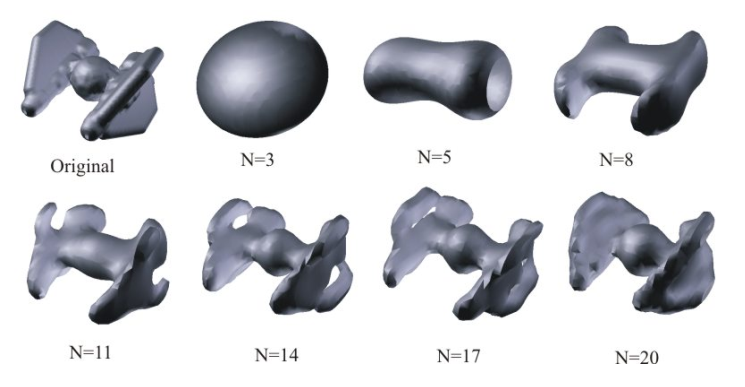

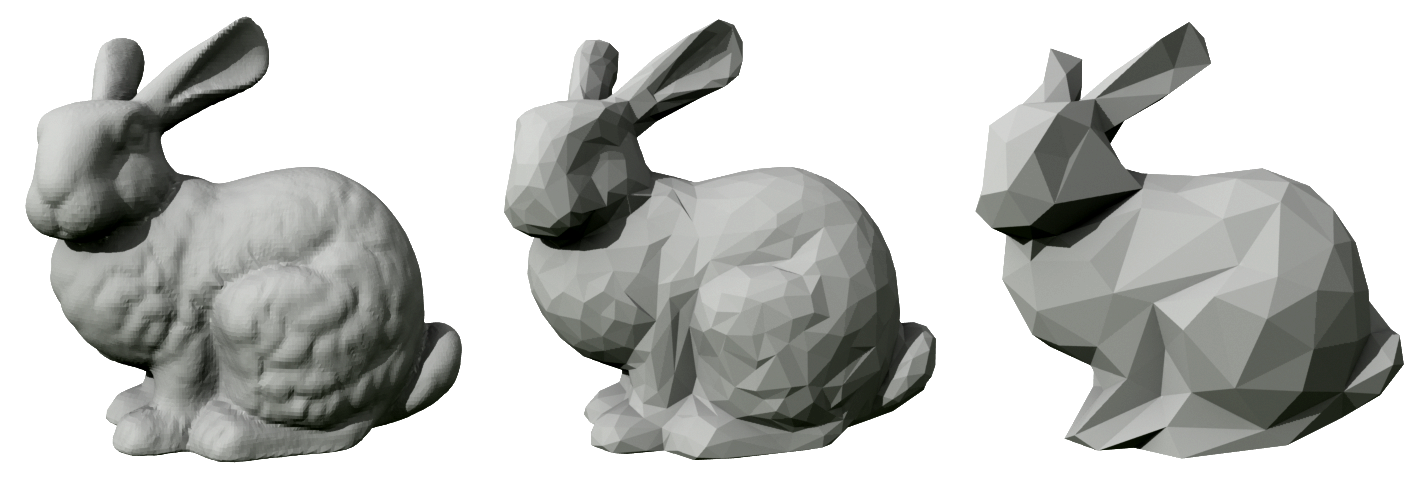

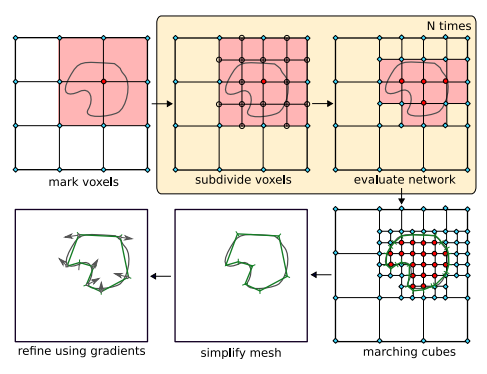

.2 — 3D . — , , . N , . [2].

3D ML, , . 3D ML, single image 2D-to-3D, .

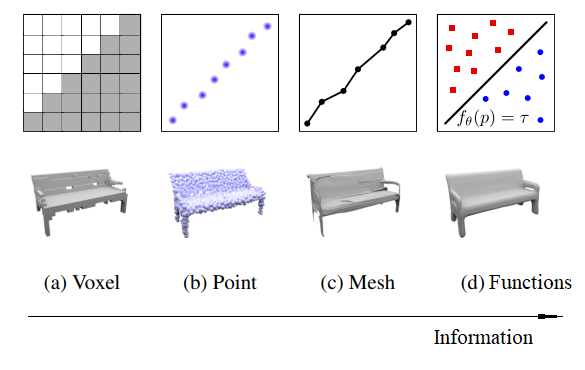

, 3D .

— . , , . , , , . .

, , . , . , , , , , .

, , , .

, RGB , .. . , , .. , — , .

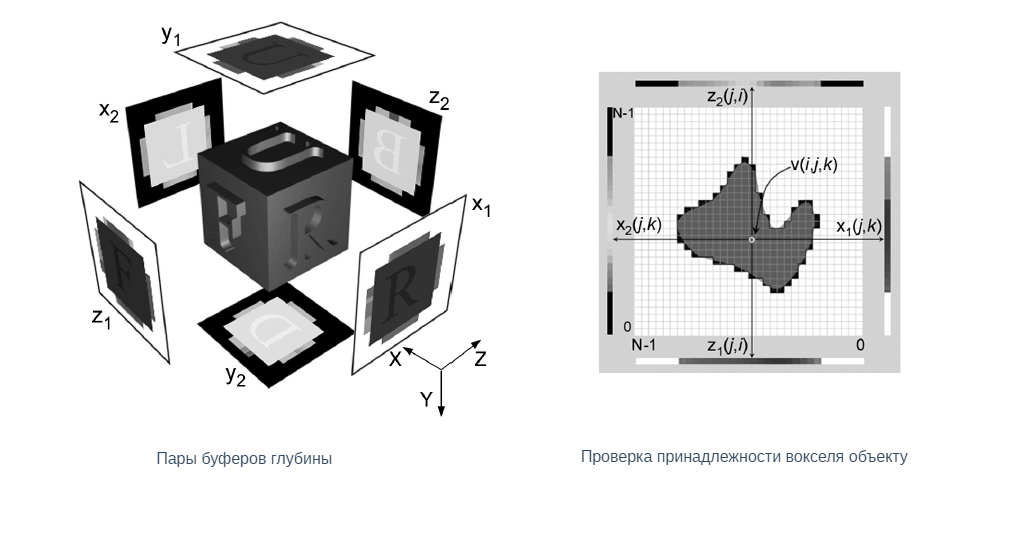

.3 3D : 4 , 2D .

3D ML, , , .

Python, 3D:

GitHub.

, , , pytorch CUDA .

import os

os.environ['PYOPENGL_PLATFORM'] = 'egl'

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

%matplotlib inline

import torch

from pytorch3d.utils import ico_sphere

from pytorch3d.loss import (

chamfer_distance,

mesh_edge_loss,

mesh_laplacian_smoothing,

mesh_normal_consistency

)

from pytorch3d.io import load_obj

from pytorch3d.ops import sample_points_from_meshes

from pytorch3d.structures import Meshes, Textures

from pytorch3d.renderer import (

look_at_view_transform,

OpenGLPerspectiveCameras,

DirectionalLights,

RasterizationSettings,

MeshRenderer,

MeshRasterizer,

HardPhongShader

)

from mesh_to_sdf import mesh_to_sdf, sample_sdf_near_surface

import trimesh

from trimesh.voxel.creation import voxelize

if torch.cuda.is_available():

    device = torch.device('cuda:0')

    torch.cuda.set_device(device)

else:

    device = torch.device('cpu')

, [5], .

Polygonal models (mesh)

— . , FBX, glTF, glb. .obj . , .. : , , , .

.obj ASCII ( .obj ), , , , , .

obj

v -3.4101800e-003 1.3031957e-001 2.1754370e-002

v -8.1719160e-002 1.5250145e-001 2.9656090e-002

v -3.0543480e-002 1.2477885e-001 1.0983400e-003

v -2.4901590e-002 1.1211138e-001 3.7560240e-002

...

f 1069 1647 1578

f 1058 909 939

f 421 1176 238

f 1055 1101 1042

f 238 1059 1126

f 1254 30 1261

f 1065 1071 1

f 1037 1130 1120

f 1570 2381 1585

f 2434 2502 2473

f 1632 1654 1646

...
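The excerpt above contains only two record types: `v` lines (vertex coordinates) and `f` lines (1-based vertex indices of a triangle). As a minimal sketch of how such a file maps to arrays, the hypothetical helper `parse_obj` below reads just these two record types. It assumes triangular faces and ignores normals, texture coordinates, materials and negative indices, so it is an illustration and not a replacement for `trimesh.load` or `load_obj`.

```python
import numpy as np


def parse_obj(text):
    """Collect 'v' and 'f' records from OBJ text (triangles only)."""
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices.append([float(x) for x in parts[1:4]])
        elif parts[0] == "f":
            # OBJ indices are 1-based; for "i/j/k" tokens keep only the vertex index.
            faces.append([int(tok.split("/")[0]) - 1 for tok in parts[1:4]])
    return np.array(vertices, dtype=np.float64), np.array(faces, dtype=np.int64)


# A tetrahedron written in the same style as the excerpt above.
sample = """
v 0.0 0.0 0.0
v 1.0 0.0 0.0
v 0.0 1.0 0.0
v 0.0 0.0 1.0
f 1 2 3
f 1 2 4
f 1 3 4
f 2 3 4
"""
verts, faces = parse_obj(sample)
print(verts.shape, faces.shape)
```

The result is the usual pair of arrays: an `(N, 3)` float array of vertices and an `(M, 3)` integer array of zero-based face indices.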

:

:

phygital- , , Unity 3D.

, , 3D.

sphere.obj 4 subdivision:

trimesh_sphere = trimesh.primitives.Sphere(subdivisions= 4)

sphere_mesh = ico_sphere(4, device)

verts_rgb = torch.ones_like(sphere_mesh.verts_list()[0])[None]

sphere_mesh.textures = Textures(verts_rgb=verts_rgb.to(device))

bunny.obj:

path_to_model = os.path.join("/data","bunny.obj")

bunny_trimesh = trimesh.load(path_to_model)

if isinstance(bunny_trimesh, trimesh.Scene):

    bunny_trimesh = bunny_trimesh.dump(concatenate=True)

bunny_trimesh.vertices -= bunny_trimesh.center_mass

scaling = 2 / bunny_trimesh.scale

bunny_trimesh.apply_scale(scaling=scaling)

verts, faces_idx, _ = load_obj(path_to_model)

faces = faces_idx.verts_idx

center = verts.mean(0)

verts = verts - center

scale = max(verts.abs().max(0)[0])

verts = verts / scale

verts_rgb = torch.ones_like(verts)[None]

textures = Textures(verts_rgb=verts_rgb.to(device))

bunny_mesh = Meshes(

verts=[verts.to(device)],

faces=[faces.to(device)],

textures=textures

)

— , , , , .

:

cameras = OpenGLPerspectiveCameras(device=device)

raster_settings = RasterizationSettings(

image_size=1024,

blur_radius=0,

faces_per_pixel=1,

)

ambient_color = torch.FloatTensor([[0.0, 0.0, 0.0]]).to(device)

diffuse_color = torch.FloatTensor([[1.0, 1.0, 1.0]]).to(device)

specular_color = torch.FloatTensor([[0.1, 0.1, 0.1]]).to(device)

direction = torch.FloatTensor([[1, 1, 1]]).to(device)

lights = DirectionalLights(ambient_color=ambient_color,

diffuse_color=diffuse_color,

specular_color=specular_color,

direction=direction,

device=device)

phong_renderer = MeshRenderer(

rasterizer=MeshRasterizer(

cameras=cameras,

raster_settings=raster_settings

),

shader=HardPhongShader(

device=device,

cameras=cameras,

lights=lights

)

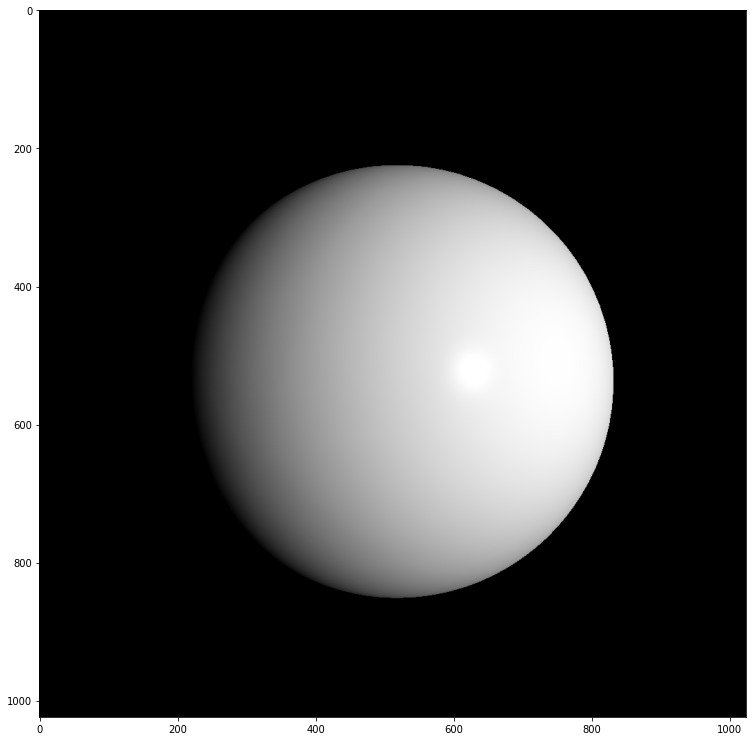

)

:

distance = 2.0

elevation = 40.0

azimuth = 0.0

R, T = look_at_view_transform(distance, elevation, azimuth, device=device,at=((-0.02,0.1,0.0),))

image_bunny = phong_renderer(meshes_world=bunny_mesh, R=R, T=T)

image_bunny = image_bunny.cpu().numpy()

plt.figure(figsize=(13, 13))

plt.imshow(image_bunny.squeeze())

plt.grid(False)

distance = 3.0

elevation = 40.0

azimuth = 0.0

R, T = look_at_view_transform(distance, elevation, azimuth, device=device,at=((-0.02,0.1,0.0),))

image_sphere = phong_renderer(meshes_world=sphere_mesh, R=R, T=T)

image_sphere = image_sphere.cpu().numpy()

plt.figure(figsize=(13, 13))

plt.imshow(image_sphere.squeeze())

plt.grid(False)

, , , , ( , ). trimesh :

bunny_trimesh.show()

, , , , . , , (watertight mesh) , .

print(

    "Euler characteristic of bunny Xi = V - E + F =",

    bunny_trimesh.euler_number

)

print(

    "Euler characteristic of sphere Xi = V - E + F =",

    trimesh_sphere.euler_number

)

print("Is bunny mesh watertight:", bunny_trimesh.is_watertight)

print("Is sphere mesh watertight:", trimesh_sphere.is_watertight)

print("Volume of bunny.obj:", bunny_trimesh.volume)

print("Volume of sphere.obj:", trimesh_sphere.volume)

(4/3)*np.pi

Out:

>> Euler characteristic of bunny Xi = V - E + F = -2

>> Euler characteristic of sphere Xi = V - E + F = 2

>> Is bunny mesh watertight: False

>> Is sphere mesh watertight: True

>> Volume of bunny.obj: 0.3876657353353089

>> Volume of sphere.obj: 4.1887902047863905

>> 4.1887902047863905
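To make the quantity Xi = V - E + F printed above concrete, here is a minimal sketch that computes it directly from a face array with NumPy. The function name `euler_characteristic` is introduced only for this illustration; `trimesh` exposes the same number as `euler_number`. For a closed, connected, orientable surface Xi = 2 - 2g, where g is the genus, so a sphere-like mesh gives 2. For a surface with b boundary loops the relation becomes Xi = 2 - 2g - b, which is one reason a non-watertight mesh can report a value different from 2.

```python
import numpy as np


def euler_characteristic(faces, num_vertices):
    """Return V - E + F for a triangle mesh given as an (M, 3) index array."""
    faces = np.asarray(faces)
    # Each triangle contributes three edges; sort the endpoints so that an
    # edge shared by two triangles is counted once.
    edges = np.concatenate([faces[:, [0, 1]], faces[:, [1, 2]], faces[:, [2, 0]]])
    edges = np.unique(np.sort(edges, axis=1), axis=0)
    return num_vertices - len(edges) + len(faces)


# Closed tetrahedron: V = 4, E = 6, F = 4.
tetra_faces = [[0, 1, 2], [0, 1, 3], [0, 2, 3], [1, 2, 3]]
chi_closed = euler_characteristic(tetra_faces, 4)

# A single triangle is a disk with one boundary loop: V = 3, E = 3, F = 1.
chi_open = euler_characteristic([[0, 1, 2]], 3)

print(chi_closed, chi_open)
```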

Voxels

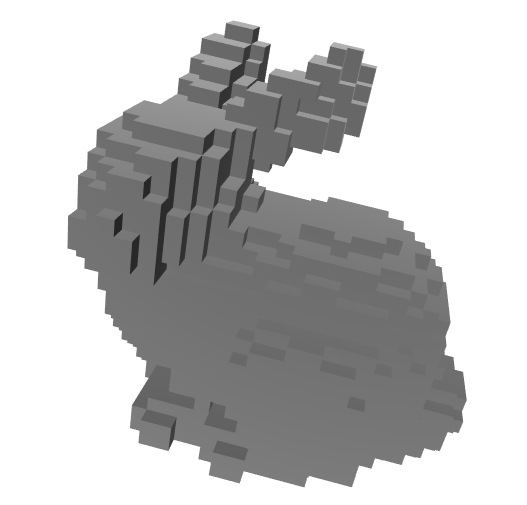

( . volumetric + pixel) . 3D , (). . , , , , .vox . (.. pixel and voxel art), .

, , , , , , , , . , , (1 — , 0 — ).

trimesh:

low_idx_bunny = bunny_trimesh.scale / 15

high_idx_bunny = bunny_trimesh.scale / 39

low_idx_sphere = trimesh_sphere.scale / 15

high_idx_sphere = trimesh_sphere.scale / 30

vox_high_bunny = voxelize(bunny_trimesh,pitch=high_idx_bunny)

vox_high_sphere = voxelize(trimesh_sphere,pitch=high_idx_sphere)

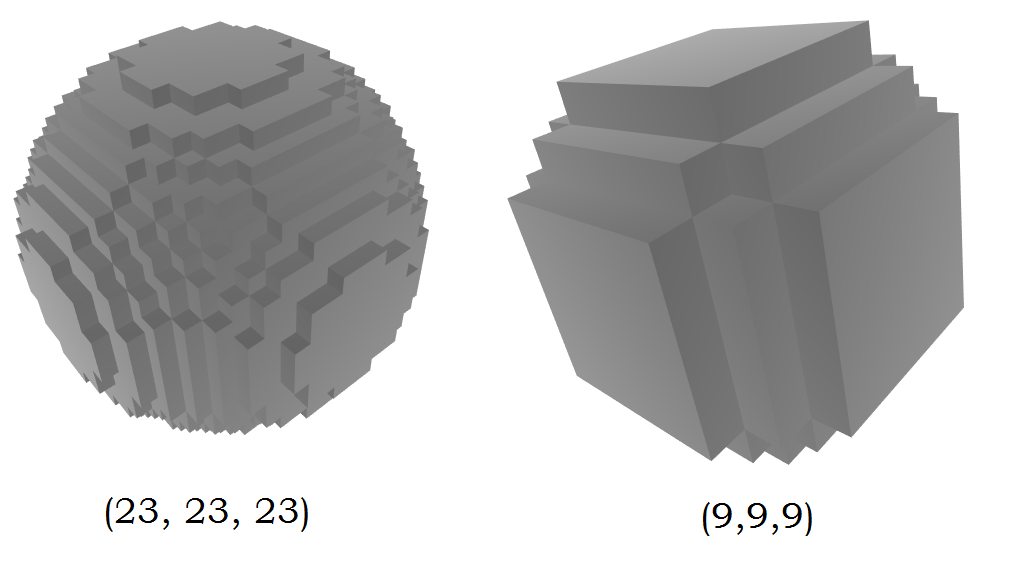

print(" :", vox_high_sphere.shape)

print(" :", vox_low_sphere.shape)

print(" :\n",np.array(vox_high_sphere.matrix, dtype=np.uint8)[1])

Out:

>> : (9, 9, 9)

>> : (19, 19, 19)

>> :

array([[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]],

dtype=uint8)
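The binary array above can also be built by hand, which makes the memory cost of the representation easy to see. The sketch below is an illustration written for this text, not the `trimesh` algorithm: it fills a solid occupancy grid for a unit sphere by testing voxel centres, assuming the usual convention 1 = occupied, 0 = empty. Unlike the surface voxelization printed above, the interior is filled, so the occupied volume can be compared with (4/3)π. The helper name `voxelize_sphere` and its parameters are hypothetical.

```python
import numpy as np


def voxelize_sphere(resolution, radius=1.0):
    """Solid occupancy grid of a sphere: 1 where the voxel centre is inside."""
    pitch = 2.0 * radius / resolution
    # Voxel centres along one axis of the cube [-radius, radius]^3.
    centers = -radius + pitch * (np.arange(resolution) + 0.5)
    x, y, z = np.meshgrid(centers, centers, centers, indexing="ij")
    grid = (x**2 + y**2 + z**2 <= radius**2).astype(np.uint8)
    return grid, pitch


grid, pitch = voxelize_sphere(19)
volume_estimate = grid.sum() * pitch**3

print("grid shape:", grid.shape)
print("cells stored:", grid.size)
print("estimated volume:", volume_estimate, "exact:", 4.0 / 3.0 * np.pi)
```

The number of stored cells grows with the cube of the resolution, which is the motivation for sparse structures such as the octrees used in OGN [6].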

:

:

, trimesh :

vox_high_sphere.show()

vox_low_sphere.show()

, - , - , , . . , , , , [6] OGN (Octree Generating Networks), , .

Point clouds

. -. () , . . (RGBD), . 2.5D, , , , . RGBD .

, .pcd , .ply . , , — PCL.

.ply :

ply

format ascii 1.0

obj_info is_cyberware_data 1

obj_info is_mesh 0

obj_info is_warped 0

obj_info is_interlaced 1

obj_info num_cols 512

obj_info num_rows 400

obj_info echo_rgb_offset_x 0.013000

obj_info echo_rgb_offset_y 0.153600

obj_info echo_rgb_offset_z 0.172000

obj_info echo_rgb_frontfocus 0.930000

obj_info echo_rgb_backfocus 0.012660

obj_info echo_rgb_pixelsize 0.000010

obj_info echo_rgb_centerpixel 232

obj_info echo_frames 512

obj_info echo_lgincr 0.000500

element vertex 40256

property float x

property float y

property float z

element range_grid 204800

property list uchar int vertex_indices

end_header

-0.06325 0.0359793 0.0420873

-0.06275 0.0360343 0.0425949

-0.0645 0.0365101 0.0404362

-0.064 0.0366195 0.0414512

-0.0635 0.0367289 0.0424662

-0.063 0.0367836 0.0429737

-0.0625 0.0368247 0.0433543

-0.062 0.0368657 0.0437349

-0.0615 0.0369067 0.0441155

-0.061 0.0369614 0.044623

...
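The header in the listing above is self-describing: each `element` line names a block of data and its record count, and the `property` lines that follow describe the fields of one record. A minimal sketch of reading that structure is shown below. The helper `parse_ply_header` is hypothetical and handles only the keywords that appear in this excerpt; in practice a library such as `trimesh` would normally be used.

```python
def parse_ply_header(lines):
    """Return ({element: count}, {element: [property names]}) from PLY header lines."""
    counts, props, current = {}, {}, None
    for line in lines:
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "element":
            current = tokens[1]
            counts[current] = int(tokens[2])
            props[current] = []
        elif tokens[0] == "property" and current is not None:
            # The property name is always the last token, also for list properties.
            props[current].append(tokens[-1])
        elif tokens[0] == "end_header":
            break
    return counts, props


header = """ply
format ascii 1.0
obj_info num_cols 512
obj_info num_rows 400
element vertex 40256
property float x
property float y
property float z
element range_grid 204800
property list uchar int vertex_indices
end_header""".splitlines()

counts, props = parse_ply_header(header)
print(counts)
print(props)
```

In this file the `range_grid` element has 204800 records, which equals `num_cols` × `num_rows` (512 × 400), while only 40256 of those grid cells carry an actual vertex.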

:

:

, , .ply PointCloud, .obj . , , , , , . .

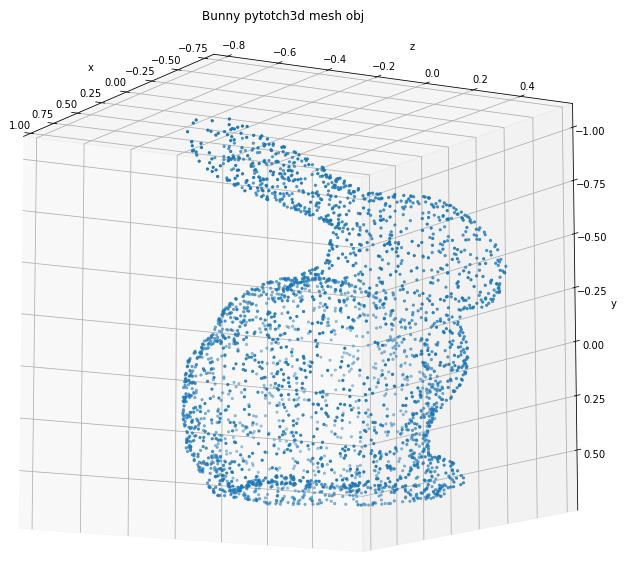

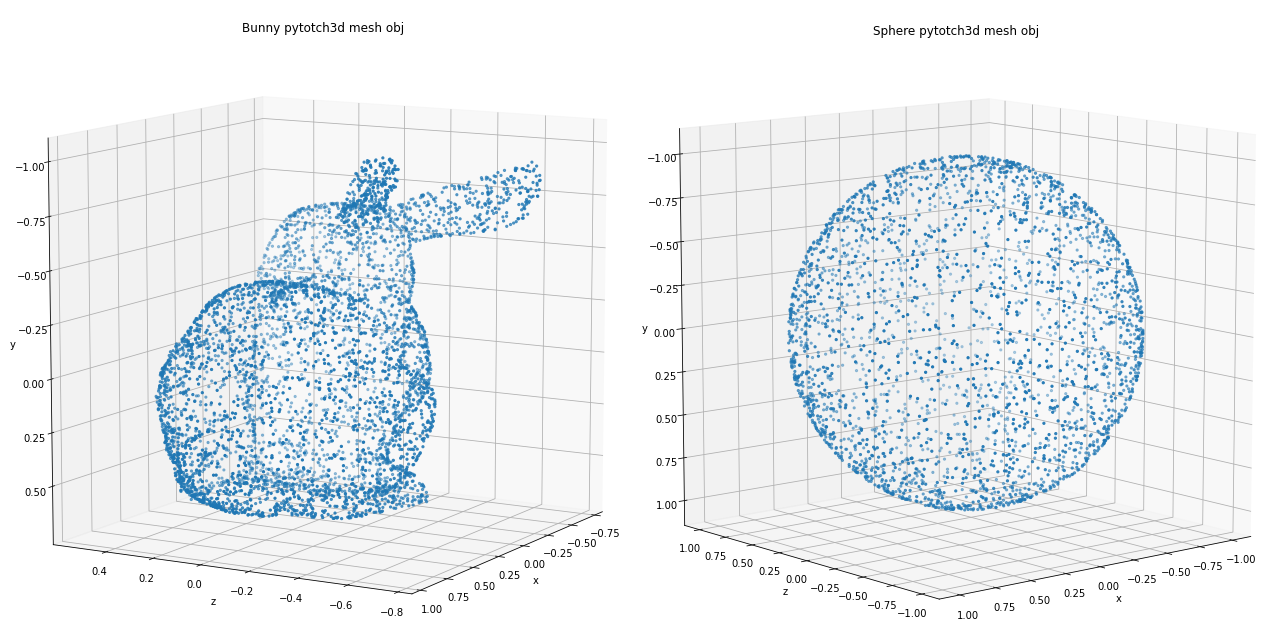

, pytorch3d, :

num_points_to_sample = 25000

bunny_vert, bunny_norm = sample_points_from_meshes(

bunny_mesh,

num_points_to_sample ,

return_normals=True

)

sphere_vert, sphere_norm = sample_points_from_meshes(

sphere_mesh,

num_points_to_sample,

return_normals=True

)

:

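def plot_pointcloud(points, elev=70, azim=-70, title=""):

    fig = plt.figure(figsize=(10, 10))

    # add_subplot attaches the 3D axes to the figure; a bare Axes3D(fig)

    # is not added automatically in recent matplotlib releases

    ax = fig.add_subplot(111, projection='3d')

    x, y, z = points

    # swap the y and z axes so that the model is drawn upright

    ax.scatter3D(x, z, -y, marker='.')

    ax.set_xlabel('x')

    ax.set_ylabel('z')

    ax.set_zlabel('y')

    ax.set_title(title)

    ax.view_init(elev, azim)

    plt.show()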

(pytorch3d):

points = sample_points_from_meshes(bunny_mesh, 5000)

points = points.clone().detach().cpu().squeeze().unbind(1)

plot_pointcloud(points, elev=190, azim=150, title='Bunny pytorch3d mesh obj')

points = sample_points_from_meshes(sphere_mesh, 3000)

points = points.clone().detach().cpu().squeeze().unbind(1)

plot_pointcloud(points, elev=190, azim=130, title='Sphere pytorch3d mesh obj')
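For readers who want to see what turning a mesh into a point cloud involves, the sketch below samples points uniformly over a triangle mesh with plain NumPy. It is a stand-in written for this text, not the `pytorch3d` implementation: faces are chosen with probability proportional to their area, and a point inside each chosen triangle is drawn with random barycentric coordinates. The function name `sample_points_on_mesh` and the `seed` parameter are assumptions made for the example.

```python
import numpy as np


def sample_points_on_mesh(vertices, faces, num_points, seed=0):
    """Uniformly sample points on the surface of a triangle mesh."""
    rng = np.random.default_rng(seed)
    tri = vertices[faces]                      # (F, 3, 3) triangle corners
    a, b, c = tri[:, 0], tri[:, 1], tri[:, 2]
    areas = 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)
    # Larger triangles must receive proportionally more samples.
    face_idx = rng.choice(len(faces), size=num_points, p=areas / areas.sum())
    u = rng.random(num_points)
    v = rng.random(num_points)
    # Samples with u + v > 1 fall outside the triangle; reflect them back.
    flip = u + v > 1.0
    u[flip] = 1.0 - u[flip]
    v[flip] = 1.0 - v[flip]
    a, b, c = a[face_idx], b[face_idx], c[face_idx]
    return a + u[:, None] * (b - a) + v[:, None] * (c - a)


# Unit square in the z = 0 plane, split into two triangles.
square_vertices = np.array(
    [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
)
square_faces = np.array([[0, 1, 2], [0, 2, 3]])
cloud = sample_points_on_mesh(square_vertices, square_faces, 1000)
print(cloud.shape)
```

Note that the connectivity (the face array) is discarded in the result: a point cloud keeps positions, and optionally normals or colours, but not the surface topology.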

( 3D , , ) , , 3D ML. 2D-to-3D, , PC2Mesh ( ).

CAD models / functions

(. . ) . , , , , , . (), CAD CAM . , . , , CAD .

, , : , , , , , ( [5]).

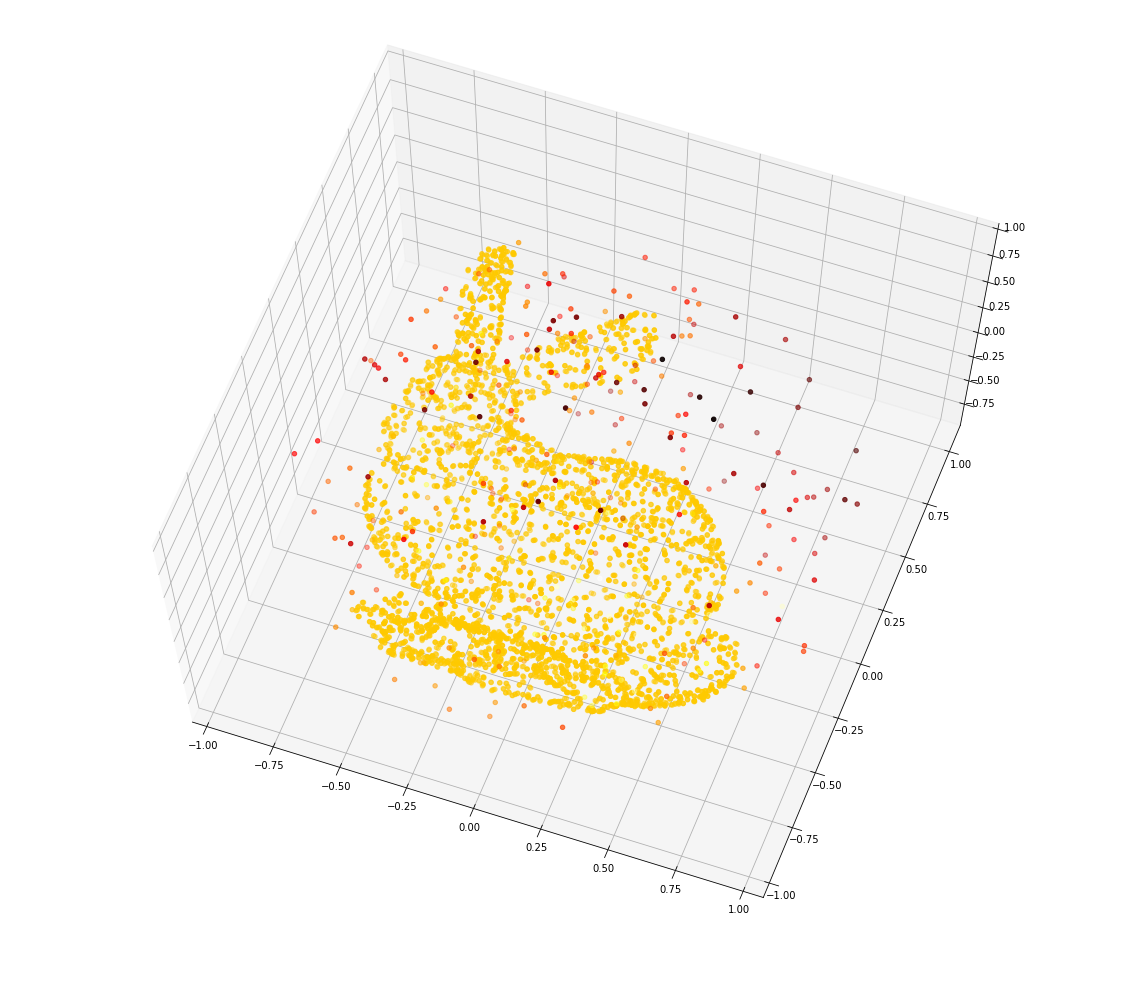

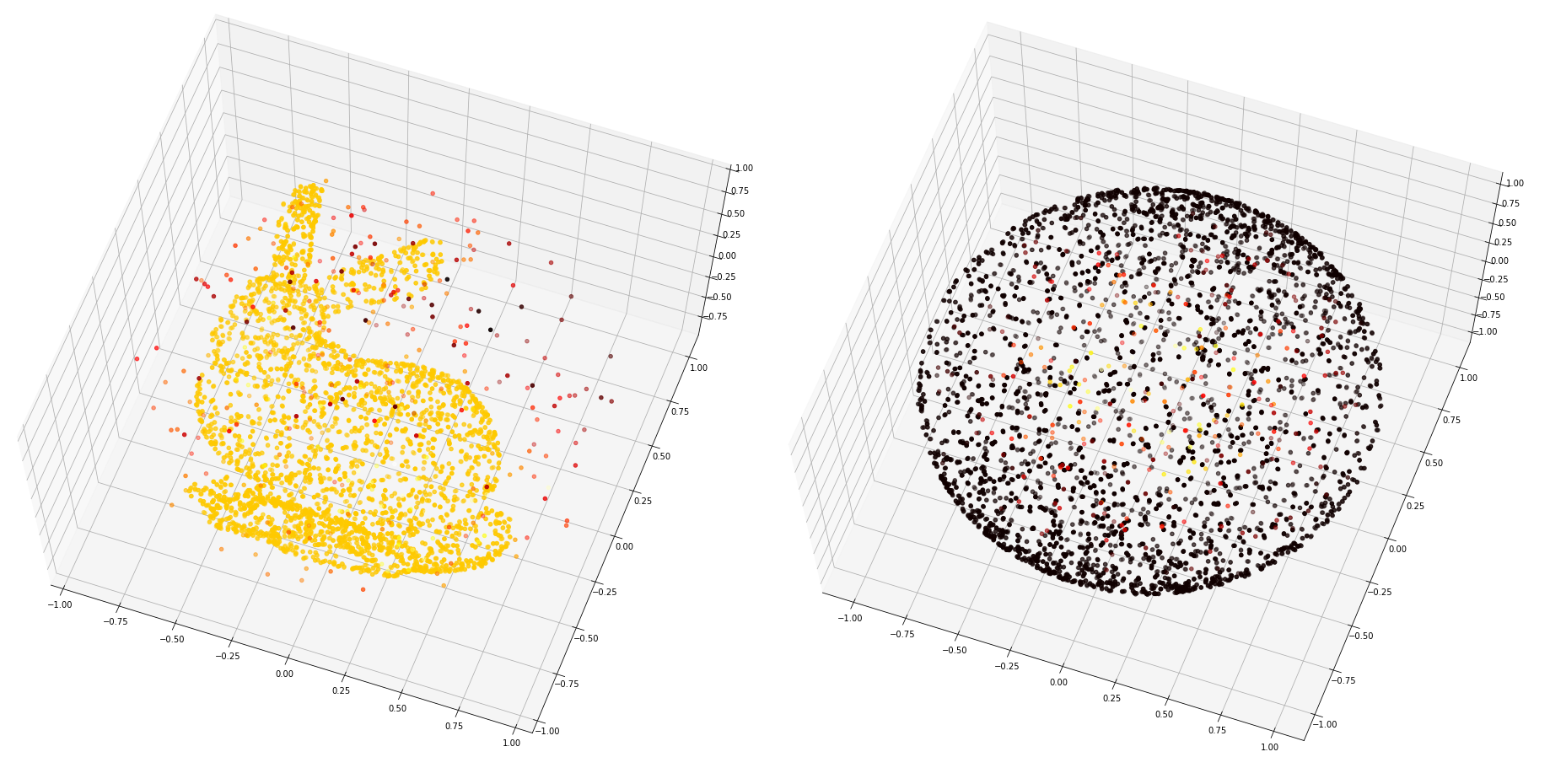

3D ML .. signed distance function (SDF) — , . .

3D , .. , — , .

, :

: . , DeepSDF [10]. mesh_to_sdf, SDF 3D .

SDF :

center_mass = bunny_trimesh.center_mass

query_points = np.array([[center_mass],[[3,3,3]]])

for point in query_points:

    print(

        "SDF{0} = {1}".format(point[0], mesh_to_sdf(bunny_trimesh, point)[0])

    )

Out:

>>SDF[-0.00036814 0.01044934 -0.00012521] = -0.26781705021858215

>>SDF[3. 3. 3.] = 4.746691703796387

center_mass = trimesh_sphere.center_mass

query_points = np.array([[center_mass],[[3,3,3]]])

for point in query_points:

    print(

        "SDF{0} = {1}".format(point[0], mesh_to_sdf(trimesh_sphere, point)[0])

    )

Out:

>>SDF[ 0.0000000e+00 0.0000000e+00 -8.8540061e-18] = -0.9988667964935303

>>SDF[3. 3. 3.] = 4.195330619812012
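For the sphere, the values printed above can be checked against a closed form: the signed distance from a point p to a sphere of radius r centred at c is ‖p − c‖ − r, negative inside and positive outside. The sketch below evaluates this analytic SDF for a unit sphere at the origin; the function name `sphere_sdf` is introduced only for this check.

```python
import numpy as np


def sphere_sdf(points, radius=1.0, center=(0.0, 0.0, 0.0)):
    """Analytic signed distance to a sphere: negative inside, positive outside."""
    points = np.atleast_2d(np.asarray(points, dtype=np.float64))
    return np.linalg.norm(points - np.asarray(center), axis=1) - radius


queries = [[0.0, 0.0, 0.0], [3.0, 3.0, 3.0], [1.0, 0.0, 0.0]]
values = sphere_sdf(queries)
for q, val in zip(queries, values):
    print("SDF{0} = {1:.6f}".format(q, val))
```

The exact values are -1 at the centre and √27 − 1 ≈ 4.196152 at (3, 3, 3). The `mesh_to_sdf` results above (-0.99887 and 4.19533) are close to these; the small gap is presumably due to the triangulated approximation of the sphere and the way the library estimates distances.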

SDF, :

points, sdf = sample_sdf_near_surface(trimesh_sphere, number_of_points=5000)

fig = plt.figure(figsize=(20, 18))

ax = fig.add_subplot(111, projection="3d")

ax.view_init(elev=70, azim=-70)

ax.scatter(points[:, 0], points[:, 1], zs=-points[:, 2], c=sdf, cmap="hot_r")

points, sdf = sample_sdf_near_surface(bunny_trimesh, number_of_points=5000)

fig = plt.figure(figsize=(20, 18))

ax = fig.add_subplot(111, projection="3d")

ax.view_init(elev=70, azim=-70)

ax.scatter(points[:, 0], points[:, 1], zs=-points[:, 2], c=sdf, cmap="hot_r")

, SDF .

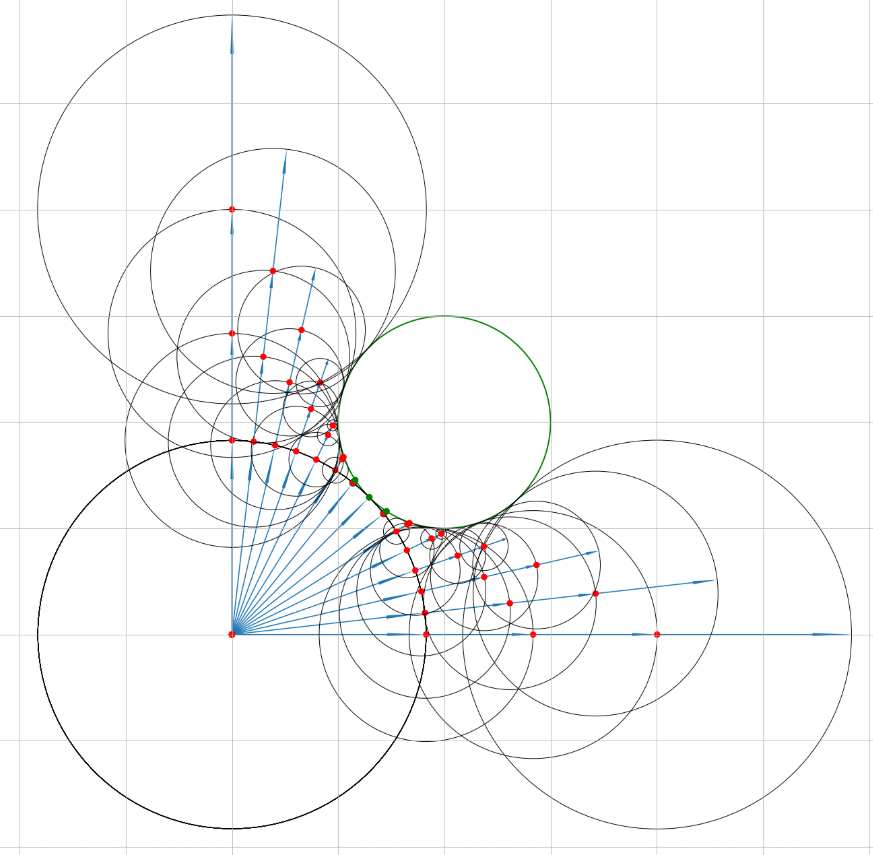

raymarching , ( SDF). GitHub (raymarch_sdf.ipynb) raymarching . , , raymarching :

, - , SDF. . SDF ( ). . , . . . , 1024.
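A common way to implement raymarching over an SDF is sphere tracing: at each step the SDF value at the current point is a distance that can be travelled safely along the ray without crossing the surface. The sketch below is a CPU illustration under that assumption and is not the code from the raymarch_sdf.ipynb notebook; the function `sphere_trace` and its parameters `max_steps`, `eps` and `max_dist` are hypothetical names chosen for the example.

```python
import numpy as np


def sphere_sdf(p, radius=1.0):
    """Signed distance from point p to a sphere centred at the origin."""
    return np.linalg.norm(p) - radius


def sphere_trace(origin, direction, sdf, max_steps=256, eps=1e-4, max_dist=100.0):
    """March along a ray; return the hit distance t, or None if nothing is hit."""
    direction = direction / np.linalg.norm(direction)
    t = 0.0
    for _ in range(max_steps):
        d = sdf(origin + t * direction)
        if d < eps:
            return t          # close enough to the surface: report a hit
        t += d                # the SDF value is a safe step length
        if t > max_dist:
            break             # the ray left the region of interest
    return None


# A ray aimed at the unit sphere from z = -3 hits it after travelling 2 units.
hit = sphere_trace(np.array([0.0, 0.0, -3.0]), np.array([0.0, 0.0, 1.0]), sphere_sdf)
# A parallel ray shifted by 2 along y passes the sphere without touching it.
miss = sphere_trace(np.array([0.0, 2.0, -3.0]), np.array([0.0, 0.0, 1.0]), sphere_sdf)
print(hit, miss)
```

Rendering an image amounts to running this loop once per pixel and shading the hit points, which is why the approach maps well to GPUs.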

3D . , SDF .

, ( Unity) ( ).

:

:

, . , , Multiresolution IsoSurface Extraction, Occupancy Net [5], , [7].

.4 Multiresolution IsoSurface Extraction. . . . .

, : G — V — . , . , , . , 3D. .

.5 . 6 “” — , .

, , . 3. . “ ”, , . , [8] [9], , (data driven), . , Occupancy Net., , , .

, — data science . , , .

: , — ? , , , .

, , 3D ML, 3D ML , 2D-to-3D .

. : , . , . / , , 2018 ., 1408 .

[2] Novotni, M., Klein, R. (2004). Shape retrieval using 3D Zernike descriptors. Computer-Aided Design 36, 1047-1062. [project page]

[3] Fisher, M., Savva, M., Hanrahan, P. (2011). Characterizing Structural Relationships in Scenes Using Graph Kernels. ACM Trans. Graph. 30, 34. doi:10.1145/2010324.1964929.

[4] Berjón, D., Morán, F. (2012). Fast human pose estimation using 3D Zernike descriptors. Proceedings of SPIE - The International Society for Optical Engineering 8290, 19-. doi:10.1117/12.908963.

[5] Mescheder, L., Oechsle, M., Niemeyer, M., Nowozin, S., Geiger, A. (2018). Occupancy Networks: Learning 3D Reconstruction in Function Space. [code]

[6] Tatarchenko, M., Dosovitskiy, A., Brox, T. (2017). Octree Generating Networks: Efficient Convolutional Architectures for High-resolution 3D Outputs. [code]

[7] Bao, F., Sun, Y., Tian, X., Tang, Z. (2007). Multiresolution Isosurface Extraction with View-Dependent and Topology Preserving. 2007 IEEE/ICME International Conference on Complex Medical Engineering (CME 2007), 521-524. doi:10.1109/ICCME.2007.4381790.

[8] Carr, J. C., Beatson, R. K., Cherrie, J. B., Mitchell, T. J., Fright, W. R., McCallum, B. C., Evans, T. R. (2001). Reconstruction and representation of 3D objects with radial basis functions. In SIGGRAPH, 67–76. ACM.

[9] Kazhdan, M., Hoppe, H. (2013). Screened Poisson surface reconstruction. ACM TOG 32(3), 29.

[10] Park, J. J., Florence, P., Straub, J., Newcombe, R., Lovegrove, S. (2019). DeepSDF: Learning continuous signed distance functions for shape representation. arXiv.org. [code]